New pope chose his name based on AI’s threats to “human dignity”

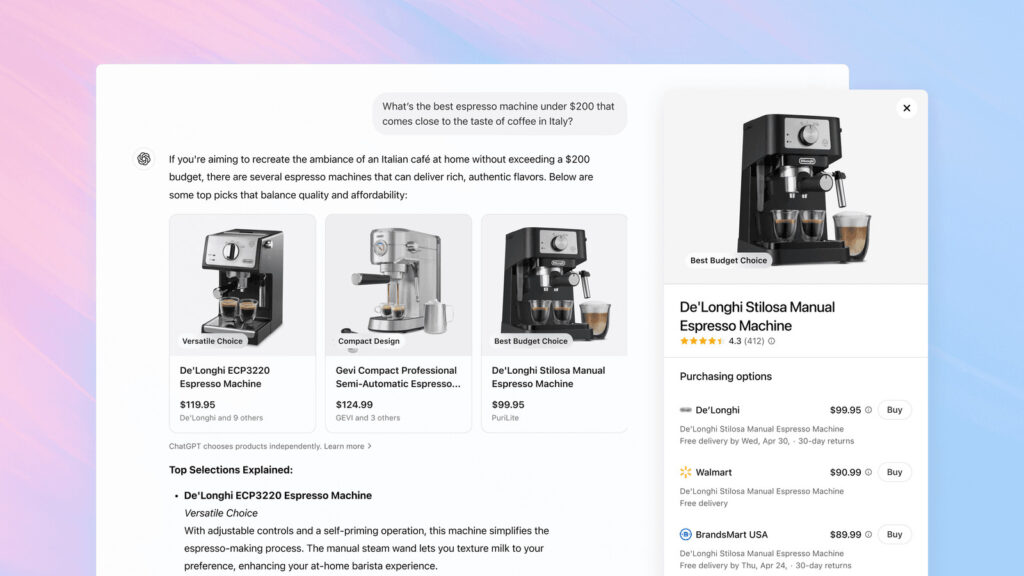

“Like any product of human creativity, AI can be directed toward positive or negative ends,” Francis said in January. “When used in ways that respect human dignity and promote the well-being of individuals and communities, it can contribute positively to the human vocation. Yet, as in all areas where humans are called to make decisions, the shadow of evil also looms here. Where human freedom allows for the possibility of choosing what is wrong, the moral evaluation of this technology will need to take into account how it is directed and used.”

History repeats with new technology

While Pope Francis led the call for respecting human dignity in the face of AI, it’s worth looking a little deeper into the historical inspiration for Leo XIV’s name choice.

In the 1891 encyclical Rerum Novarum, the earlier Leo XIII directly confronted the labor upheaval of the Industrial Revolution, which generated unprecedented wealth and productive capacity but came with severe human costs. At the time, factory conditions had created what the pope called “the misery and wretchedness pressing so unjustly on the majority of the working class.” Workers faced 16-hour days, child labor, dangerous machinery, and wages that barely sustained life.

The 1891 encyclical rejected both unchecked capitalism and socialism, instead proposing Catholic social doctrine that defended workers’ rights to form unions, earn living wages, and rest on Sundays. Leo XIII argued that labor possessed inherent dignity and that employers held moral obligations to their workers. The document shaped modern Catholic social teaching and influenced labor movements worldwide, establishing the church as an advocate for workers caught between industrial capital and revolutionary socialism.

Just as mechanization disrupted traditional labor in the 1890s, artificial intelligence may now threaten employment patterns and human dignity in ways that Pope Leo XIV believes demand similar moral leadership from the church.

“In our own day,” Leo XIV concluded in his formal address on Saturday, “the Church offers to everyone the treasury of her social teaching in response to another industrial revolution and to developments in the field of artificial intelligence that pose new challenges for the defense of human dignity, justice, and labor.”
