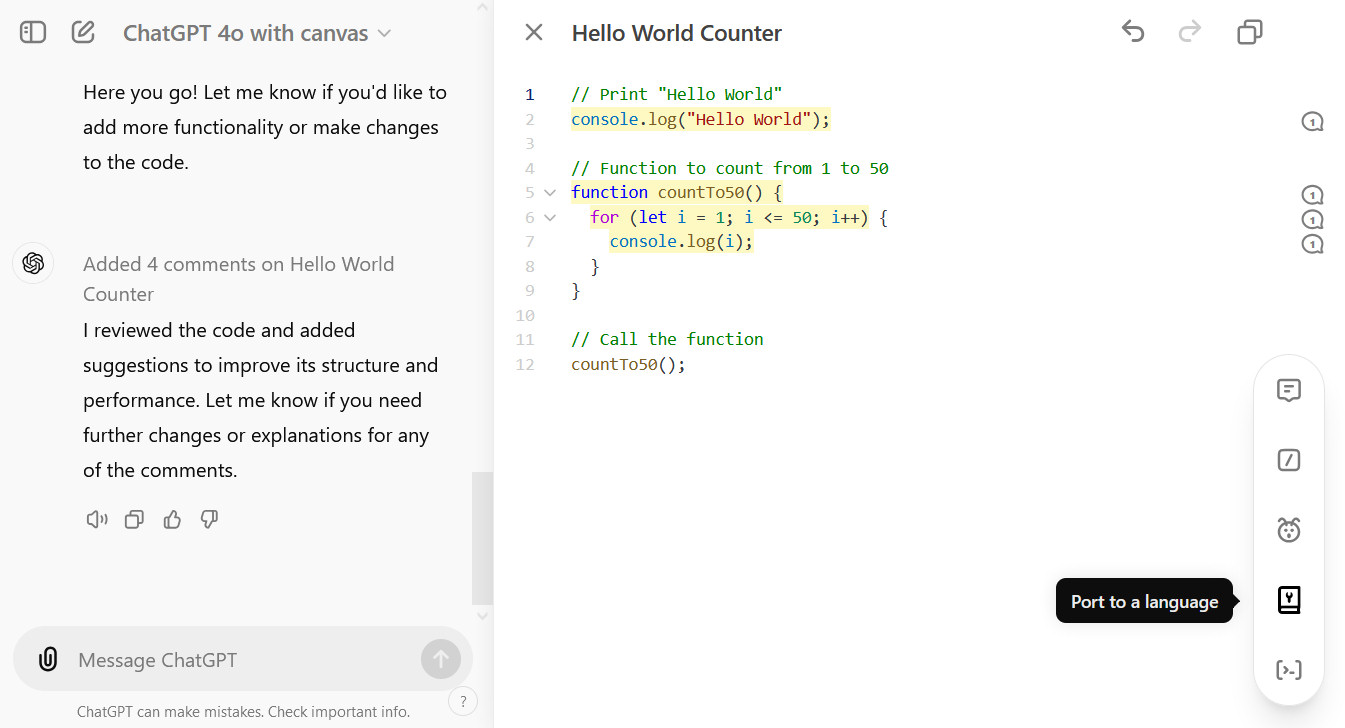

Man learns he’s being dumped via “dystopian” AI summary of texts

The evolution of bad news via texting

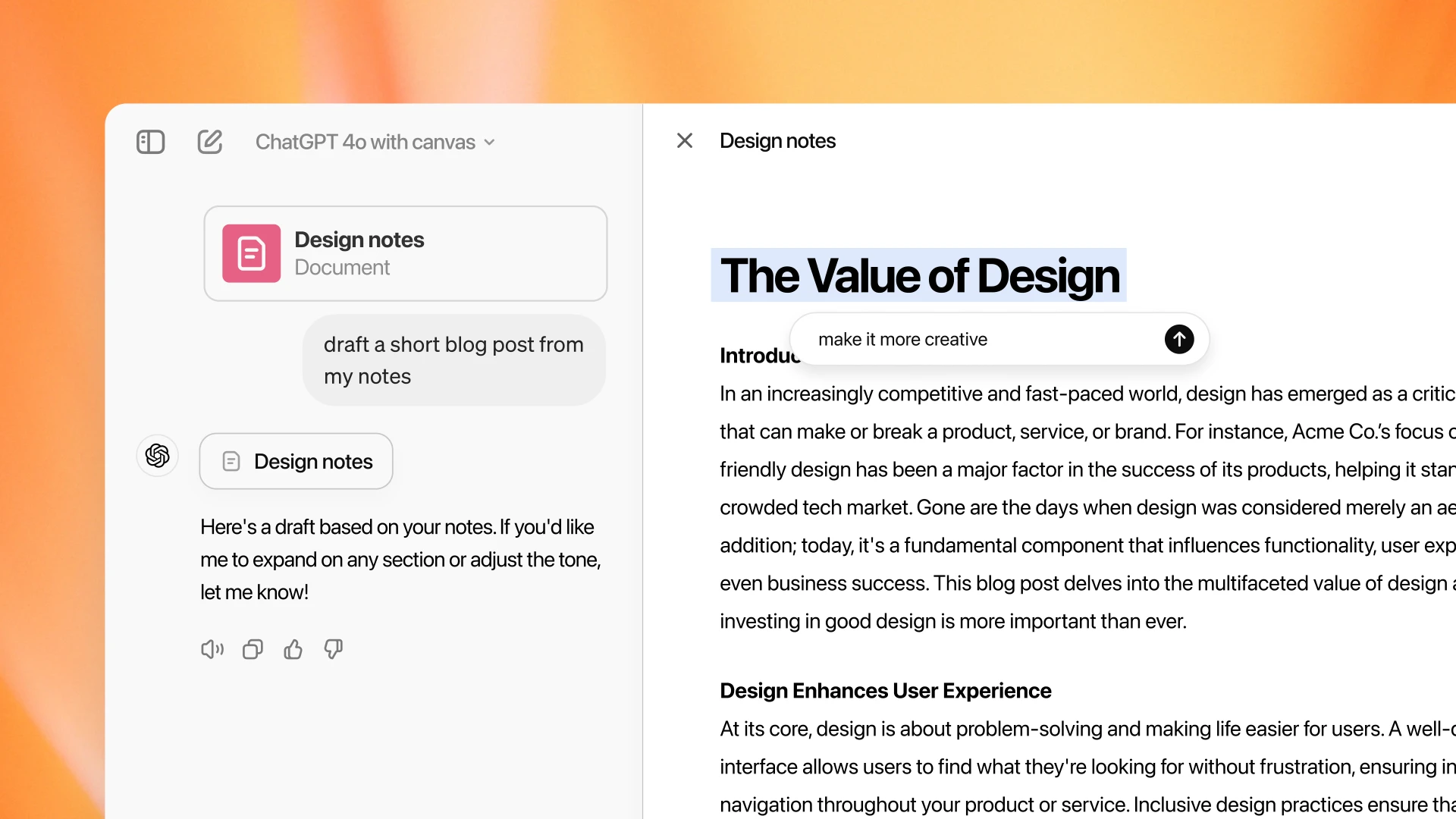

Spreen’s message is the first time we’ve seen an AI-mediated relationship breakup, but it likely won’t be the last. As the Apple Intelligence feature rolls out widely and other tech companies embrace AI message summarization, many people will probably be receiving bad news through AI summaries soon. For example, since March, Google’s Android Auto AI has been able to deliver summaries to users while driving.

If that sounds horrible, consider our ever-evolving social tolerance for tech progress. Back in the 2000s when SMS texting was still novel, some etiquette experts considered breaking up a relationship through text messages to be inexcusably rude, and it was unusual enough to generate a Reuters news story. The sentiment apparently extended to Americans in general: According to The Washington Post, a 2007 survey commissioned by Samsung showed that only about 11 percent of Americans thought it was OK to break up that way.

What texting looked like back in the day.

By 2009, as texting became more commonplace, the stance on texting break-ups began to soften. That year, ABC News quoted Kristina Grish, author of “The Joy of Text: Mating, Dating, and Techno-Relating,” as saying, “When Britney Spears dumped Kevin Federline I thought doing it by text message was an abomination, that it was insensitive and without reason.” Grish was referring to a 2006 incident with the pop singer that made headline news. “But it has now come to the point where our cell phones and BlackBerries are an extension of ourselves and our personality. It’s not unusual that people are breaking up this way so much.”

Today, with text messaging basically being the default way most adults communicate remotely, breaking up through text is commonplace enough that Cosmopolitan endorsed the practice in a 2023 article. “I can tell you with complete confidence as an experienced professional in the field of romantic failure that of these options, I would take the breakup text any day,” wrote Kayla Kibbe.

Who knows, perhaps in the future, people will be able to ask their personal AI assistants to contact their girlfriend or boyfriend directly to deliver a personalized break-up for them with a sensitive message that attempts to ease the blow. But what’s next—break-ups on the moon?

This article was updated at 3:33 PM on October 10, 2024 to clarify that the ex-girlfriend’s full real name has not been revealed by the screenshot image.
