New simulation of Titanic’s sinking confirms historical testimony

NatGeo documentary follows a cutting-edge undersea scanning project to make a high-resolution 3D digital twin of the ship.

The bow of the Titanic Digital Twin, seen from above at forward starboard side. Credit: Magellan Limited/Atlantic Productions

In 2023, we reported on the unveiling of the first full-size 3D digital scan of the remains of the RMS Titanic—a “digital twin” that captured the wreckage in unprecedented detail. Magellan Ltd, a deep-sea mapping company, and Atlantic Productions conducted the scans over a six-week expedition. That project is the subject of the new National Geographic documentary Titanic: The Digital Resurrection, detailing several fascinating initial findings from experts’ ongoing analysis of that full-size scan.

Titanic met its doom just four days into the Atlantic crossing, roughly 375 miles (600 kilometers) south of Newfoundland. At 11:40 pm ship’s time on April 14, 1912, Titanic hit that infamous iceberg and began taking on water, flooding five of its 16 watertight compartments, thereby sealing its fate. More than 1,500 passengers and crew perished; only around 710 of those on board survived.

Titanic remained undiscovered at the bottom of the Atlantic Ocean until an expedition led by Jean-Louis Michel and Robert Ballard reached the wreck on September 1, 1985. The ship split apart as it sank, with the bow and stern sections lying roughly one-third of a mile apart. The bow proved to be surprisingly intact, while the stern showed severe structural damage, likely flattened from the impact as it hit the ocean floor. There is a debris field spanning a 5×3-mile area, filled with furniture fragments, dinnerware, shoes and boots, and other personal items.

The joint mission by Magellan and Atlantic Productions deployed two submersibles nicknamed Romeo and Juliet to map every millimeter of the wreck, including the debris field spanning some three miles. The result was a whopping 16 terabytes of data, along with over 715,000 still images and 4K video footage. That raw data was then processed to create the 3D digital twin. The resolution is so good, one can make out part of the serial number on one of the propellers.

“I’ve seen the wreck in person from a submersible, and I’ve also studied the products of multiple expeditions—everything from the original black-and-white imagery from the 1985 expedition to the most modern, high-def 3D imagery,” deep ocean explorer Parks Stephenson told Ars. “This still managed to blow me away with its immense scale and detail.”

The Juliet ROV scans the bow railing of the Titanic wreck site. Credit: Magellan Limited/Atlantic Productions

The NatGeo series focuses on some of the fresh insights gained from analyzing the digital scan, enabling Titanic researchers like Stephenson to test key details from eyewitness accounts. For instance, some passengers reported ice coming into their cabins after the collision. The scan shows there is a broken porthole that could account for those reports.

One of the clearest portions of the scan is Titanic’s enormous boiler rooms right at the rear of the bow section, where the ship snapped in half. Eyewitness accounts reported that the ship’s lights were still on right up until the sinking, thanks to the tireless efforts of Joseph Bell and his team of engineers, all of whom perished. The boilers show up as concave on the digital replica of Titanic, and one of the valves is in an open position, supporting those accounts.

The documentary spends a significant chunk of time on a new simulation of the actual sinking, taking into account the ship’s original blueprints, as well as information on speed, direction, and position. Researchers at University College London were also able to extrapolate how the flooding progressed. Furthermore, a substantial portion of the bow hit the ocean floor with so much force that much of it remains buried under mud. Romeo’s scans of the debris field scattered across the ocean floor enabled researchers to reconstruct the damage to the buried portion.

Titanic was famously designed to stay afloat if up to four of its watertight compartments flooded. But the ship struck the iceberg from the side, causing a series of punctures along the hull across 18 feet, affecting six of the compartments. Some of those holes were quite small, about the size of a piece of paper, but water could nonetheless seep in and eventually flood the compartments. So the analysis confirmed the testimony of naval architect Edward Wilding—who helped design Titanic—as to how a ship touted as unsinkable could have met such a fate. And as Wilding hypothesized, the simulations showed that had Titanic hit the iceberg head-on, she would have stayed afloat.

These are the kinds of insights that can be gleaned from the 3D digital model, according to Atlantic Productions CEO Anthony Geffen, who produced the NatGeo series. “It’s not really a replica. It is a digital twin, down to the last rivet,” he told Ars. “That’s the only way that you can start real research. The detail here is what we’ve never had. It’s like a crime scene. If you can see what the evidence is, in the context of where it is, you can actually piece together what happened. You can extrapolate what you can’t see as well. Maybe we can’t physically go through the sand or the silt, but we can simulate anything because we’ve actually got the real thing.”

Ars caught up with Stephenson and Geffen to learn more.

A CGI illustration of the bow of the Titanic as it sinks into the ocean. Credit: National Geographic

Ars Technica: What is so unique and memorable about experiencing the full-size 3D scan of Titanic, especially for those lucky enough to have seen the actual wreckage first-hand via submersible?

Parks Stephenson: When you’re in the submersible, you are restricted to a 7-inch viewport and as far as your light can travel, which is less than 100 meters or so. If you have a camera attached to the exterior of the submersible, you can only get what comes into the frame of the camera. In order to get the context, you have to stitch it all together somehow, and, even then, you still have human bias that tends to make the wreck look more like the original Titanic of 1912 than it actually does today. So in addition to seeing it full-scale and well-lit wherever you looked, able to wander around the wreck site, you’re also seeing it for the first time as a purely data-driven product that has no human bias. As an analyst, this is an analytical dream come true.

Ars Technica: One of the most visually arresting images from James Cameron’s blockbuster film Titanic was the ship’s stern sticking straight up out of the water after breaking apart from the bow. That detail was drawn from eyewitness accounts, but a 2023 computer simulation called it into question. What might account for this discrepancy?

Parks Stephenson: One thing that’s not included in most pictures of Titanic sinking is the port heel that she had as she’s going under. Most of them show her sinking on an even keel. So when she broke with about a 10–12-degree port heel that we’ve reconstructed from eyewitness testimony, that stern would tend to then roll over on her side and go under that way. The eyewitness testimony talks about the stern sticking up as a finger pointing to the sky. If you even take a shallow angle and look at it from different directions—if you put it in a 3D environment and put lifeboats around it and see the perspective of each lifeboat—there is a perspective where it does look like she’s sticking up like a finger in the sky.

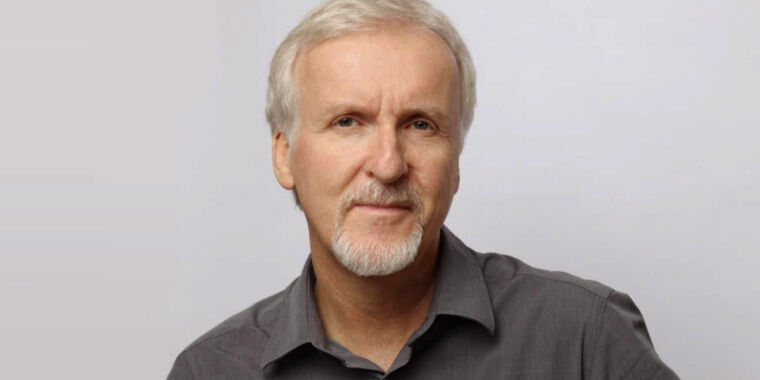

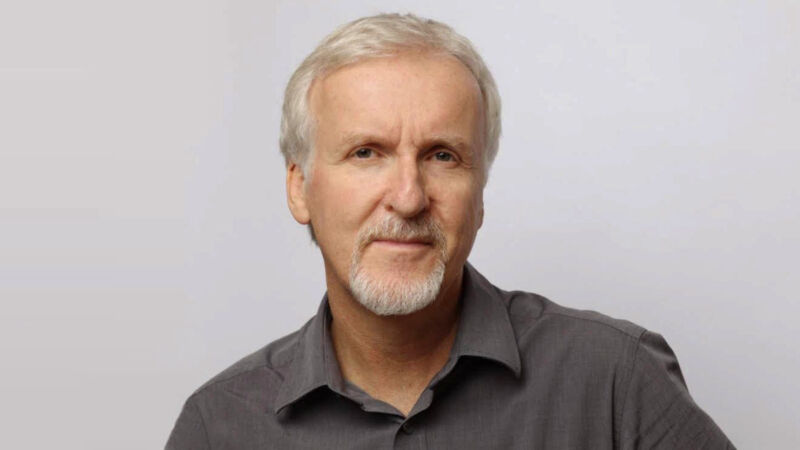

Titanic analyst Parks Stephenson, metallurgist Jennifer Hooper, and master mariner Captain Chris Hearn find evidence exonerating First Officer William Murdoch, long accused of abandoning his post.

This points to a larger thing: the Titanic narrative as we know it today can be challenged. I would go as far as to say that most of what we know about Titanic now is wrong. With all of the human eyewitnesses having passed away, the wreck is our only remaining witness to the disaster. This photogrammetry scan is providing all kinds of new evidence that will help us reconstruct that timeline and get closer to the truth.

Ars Technica: What more are you hoping to learn about Titanic’s sinking going forward? And how might those lessons apply more broadly?

Parks Stephenson: The data gathered in this 2022 expedition yielded more new information than could be put into this program. There’s enough material already to have a second show. There are new indicators about the condition of the wreck and how long she’s going to be with us and what happens to these wrecks in the deep ocean environment. I’ve already had a direct application of this. My dives to Titanic led me to another shipwreck, which led me to my current position as executive director of a museum ship in Louisiana, the USS Kidd.

She’s now in dry dock, and there’s a lot that I’m understanding about some of the corrosion issues that we experienced with that ship based on corrosion experiments that have been conducted at the Titanic wreck sites—specifically how metal acts underwater over time if it’s been stressed on the surface. It corrodes differently than just metal that’s been submerged. There’s all kinds of applications for this information. This is a new ecosystem that has taken root in Titanic. I would say between my dive in 2005 and 2019, I saw an explosion of life over that 14-year period. It’s its own ecosystem now. It belongs more to the creatures down there than it does to us anymore.

The bow of the Titanic Digital Twin. Credit: Magellan Limited/Atlantic Productions

As far as Titanic itself is concerned, this is key to establishing the wreck site, which is one of the world’s largest archeological sites, as an archeological site that follows archeological rigor and standards. This underwater technology—that Titanic has accelerated because of its popularity—is the way of the future for deep-ocean exploration. And the deep ocean is where our future is. It’s where green technology is going to continue to get its raw elements and minerals from. If we don’t do it responsibly, we could screw up the ocean bottom in ways that would destroy our atmosphere faster than all the cars on Earth could do. So it’s not just for the Titanic story, it’s for the future of deep-ocean exploration.

Anthony Geffen: This is the beginning of the work on the digital scan. It’s a world first. Nothing’s ever been done like this under the ocean before. This film looks at the first set of things [we’ve learned], and they’re very substantial. But what’s exciting about the digital twin is, we’ll be able to take it to location-based experiences where the public will be able to engage with the digital twin themselves, walk on the ocean floor. Headset technology will allow the audience to do what Parks did. I think that’s really important for citizen science. I also think the next generation is going to engage with the story differently. New tech and new platforms are going to be the way the next generation understands the Titanic. Any kid, anywhere on the planet, will be able to walk in and engage with the story. I think that’s really powerful.

Titanic: The Digital Resurrection premieres on April 11, 2025, on National Geographic. It will be available for streaming on Disney+ and Hulu on April 12, 2025.

Jennifer is a senior writer at Ars Technica with a particular focus on where science meets culture, covering everything from physics and related interdisciplinary topics to her favorite films and TV series. Jennifer lives in Baltimore with her spouse, physicist Sean M. Carroll, and their two cats, Ariel and Caliban.
