Ars Technica System Guide: Five sample PC builds, from $500 to $5,000

Despite everything, it’s still possible to build decent PCs for decent prices.

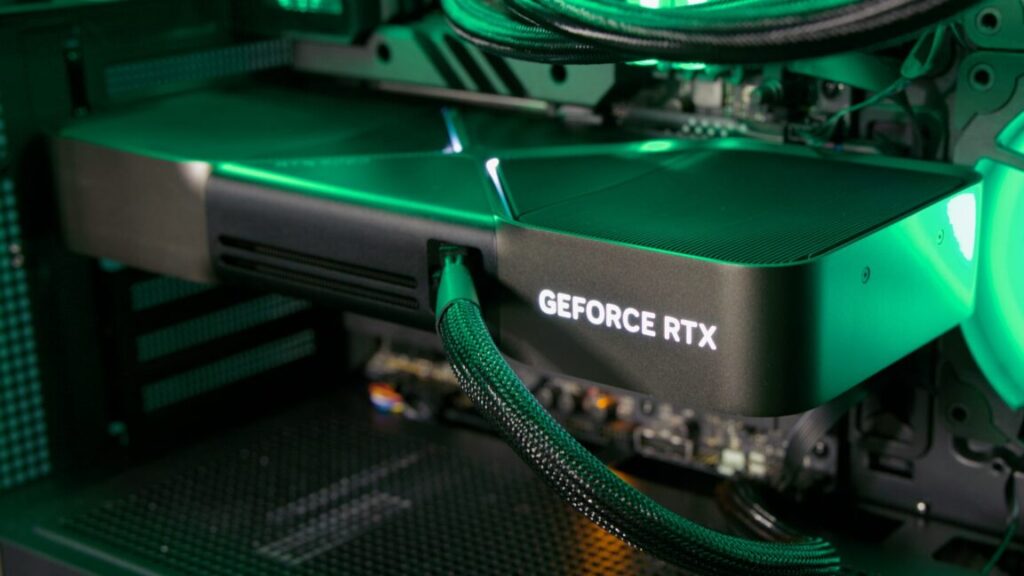

You can buy a great 4K gaming PC for less than it costs to buy a GeForce RTX 5090. Let us show you some examples. Credit: Andrew Cunningham

Sometimes I go longer than I intend without writing an updated version of our PC building guide. And while I could just claim to be too busy to spend hours on Newegg or Amazon or other sites digging through dozens of near-identical parts, the lack of updates usually correlates with “times when building a desktop PC is actually a pain in the ass.”

Through most of 2025, fluctuating and inflated graphics card pricing and limited availability have once again conspired to make a normally fun hobby an annoying slog—and honestly kind of a bad way to spend your money, relative to just buying a Steam Deck or something and ignoring your desktop for a while.

But three things have brought me back for another round. First, GPU pricing and availability have improved a little since early 2025. Second, as unreasonable as pricing is for PC parts, pre-built PCs with worse specs and other design compromises are unreasonably priced, too, and people should have some sense of what their options are. And third, I just have the itch—it’s been a while since I built (or helped someone else build) a PC, and I need to get it out of my system.

So here we are! Five different suggestions for builds for a few different budgets and needs, from basic browsing to 4K gaming. And yes, there is a ridiculous “God Box,” despite the fact that the baseline ridiculousness of PC building is higher than it was a few years ago.

Notes on component selection

Part of the fun of building a PC is making it look the way you want. We’ve selected cases that will physically fit the motherboards and other parts we’re recommending and which we think will be good stylistic fits for each system. But there are many cases out there, and our picks aren’t the only options available.

It’s also worth trying to build something that’s a little future-proof—one of the advantages of the PC as a platform is the ability to swap out individual components without needing to throw out the entire system. It’s worth spending a little extra money on something you know will be supported for a while. Right this minute, that gives an advantage to AMD’s socket AM5 ecosystem over slightly cheaper but fading or dead-end platforms like AMD’s socket AM4 and Intel’s LGA 1700 or (according to rumors) LGA 1851.

As for power supplies, we’re looking for 80 Plus certified power supplies from established brands with positive user reviews on retail sites (or positive professional reviews, though these can be somewhat hard to come by for any given PSU these days). If you have a preferred brand, by all means, go with what works for you. The same goes for RAM—we’ll recommend capacities and speeds, and we’ll link to kits from brands that have worked well for us in the past, but that doesn’t mean they’re better than the many other RAM kits with equivalent specs.

For SSDs, we mostly stick to drives from known brands like Samsung, Crucial, Western Digital, and SK hynix. Our builds also include built-in Bluetooth and Wi-Fi, so you don’t need to worry about running Ethernet wires and can easily connect to Bluetooth gamepads, keyboards, mice, headsets, and other accessories.

We also haven’t priced in peripherals like webcams, monitors, keyboards, or mice, as we’re assuming most people will reuse what they already have or buy those components separately. If you’re feeling adventurous, you could even make your own DIY keyboard! If you need more guidance, Kimber Streams’ Wirecutter keyboard guides are exhaustive and educational, and Wirecutter has some monitor-buying advice, too.

Finally, we won’t be including the cost of a Windows license in our cost estimates. You can pay many different prices for Windows—$139 for an official retail license from Microsoft, $120 for an “OEM” license for system builders, or anywhere between $15 and $40 for a product key from shady gray market product key resale sites. Windows 10 keys will also work to activate Windows 11, though Microsoft stopped letting old Windows 7 and Windows 8 keys activate new Windows 10 and 11 installs a couple of years ago. You could even install Linux, given recent advancements in game compatibility layers! But if you plan to go that route, know that AMD’s graphics cards tend to be better-supported than Nvidia’s.

The budget all-rounder

What it’s good for: Browsing, schoolwork or regular work, amateur photo or video editing, and very light casual gaming. A low-cost, low-complexity introduction to PC building.

What it sucks at: You’ll need to use low settings at best for modern games, and it’s hard to keep costs down without making big sacrifices.

Cost as of this writing: $479 to $504, depending on your case

The entry point for a basic desktop PC from Dell, HP, and Lenovo is somewhere between $400 and $500 as of this writing. You can beat that pricing with a self-built one if you cut your build to the bone, and you can find tons of cheap used and refurbished stuff and serviceable mini PCs for well under that price, too. But if you’re chasing the thrill of the build, we can definitely match the big OEMs’ pricing while doing better on specs and future-proofing.

The AMD Ryzen 5 8500G should give you all the processing power you need for everyday computing and less-demanding games, despite most of its CPU cores using the lower-performing Zen 4c variant of AMD’s last-gen CPU architecture. The Radeon 740M GPU should do a decent job with many games at lower settings; it’s not a gaming GPU, but it will handle kid-friendly games like Roblox or Minecraft or undemanding battle royale or MOBA games like Fortnite and DOTA 2.

The Gigabyte B650M Gaming Plus WiFi board includes Wi-Fi, Bluetooth, and extra RAM and storage slots for future expandability. Most companies that make AM5 motherboards are pretty good about releasing new BIOS updates that patch vulnerabilities and add support for new CPUs, so you shouldn’t have a problem popping in a new processor a few years down the road if this one is no longer meeting your needs.

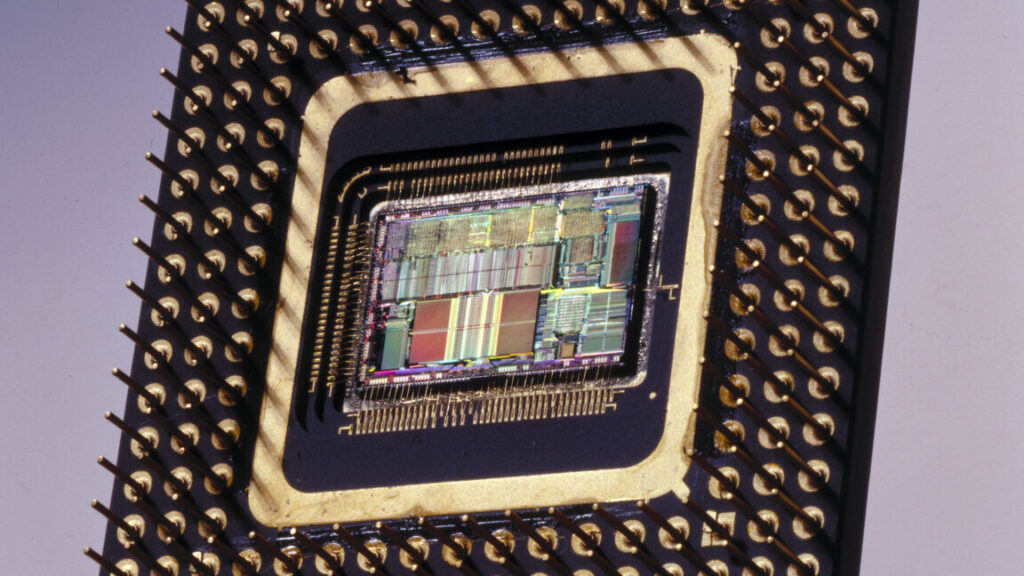

An AMD Ryzen 7 8700G. The 8500G is a lower-end relative of this chip, with good-enough CPU and GPU performance for light work. Credit: Andrew Cunningham

This system is spec’d for general usage and exceptionally light gaming, and 16GB of RAM and a 500GB SSD should be plenty for that kind of thing. You can get the 1TB version of the same SSD for just $20 more, though, which is not a bad deal if you think light gaming is in the cards. The 600 W power supply is overkill, but it’s just $5 more than the 500 W version of the same PSU, and 600 W is enough headroom to add a GeForce RTX 4060 or 5060-series card or a Radeon RX 9060 XT to the build later on without having to worry.
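To put a rough number on that headroom, here is a minimal sketch of the arithmetic. Every wattage in it is an illustrative assumption rather than a measurement or a figure from this guide, and `psu_headroom` is just a hypothetical helper name; swap in the numbers from your own parts' spec sheets.

```python
# Rough PSU headroom estimate. All wattages below are illustrative
# assumptions, not measurements and not figures from this guide.

def psu_headroom(psu_watts: int, component_watts: dict[str, int]) -> int:
    """Return the watts left over after summing estimated peak draw."""
    return psu_watts - sum(component_watts.values())

estimated_draw = {
    "cpu": 65,              # assumed: a 65 W-class desktop processor
    "future_gpu": 160,      # assumed: a midrange card added later
    "everything_else": 75,  # assumed: motherboard, RAM, SSD, fans
}

for psu in (500, 600):
    spare = psu_headroom(psu, estimated_draw)
    print(f"{psu} W PSU: {spare} W of headroom")
```

With these placeholder numbers, either power supply would technically cover the load, but the 600 W unit leaves a wider margin for a hungrier card or for power spikes, which is the argument for spending the extra $5.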

The biggest challenge when looking for a decent, cheap PC case is finding one without a big, tacky acrylic window. Our standby choice for the last couple of years has been the Thermaltake Versa H17, an understated and reasonably well-reviewed option that doesn’t waste internal space on legacy features like external 3.5 and 5.25-inch drive bays or internal cages for spinning hard drives. But stock seems to be low as of this writing, suggesting it could be unavailable soon.

We looked for some alternatives that wouldn’t be a step down in quality or utility and which wouldn’t drive the system’s total price above $500. YouTubers and users generally seem to like the $70 Phanteks XT Pro, which is a lot bigger than this motherboard needs but is praised for its airflow and flexibility (it has a tempered glass side window in its cheapest configuration, and a solid “silent” variant will run you $88). The Fractal Design Focus 2 is available with both glass and solid side panels for $75.

The budget gaming PC

What it’s good for: Solid all-round performance, plus good 1080p (and sometimes 1440p) gaming performance.

What it sucks at: Future-proofing, top-tier CPU performance.

Cost as of this writing: $793 to $828, depending on components

Budget gaming PCs are tough right now, but my broad advice would be the same as it’s always been: Go with the bare minimum everywhere you can so you have more money to spend on the GPU. I went into this totally unsure if I could recommend a PC I’d be happy with for the $700 to $800 we normally hit, and getting close to that number meant making some hard decisions.

I talked myself into a socket AM5 build for our non-gaming budget PC because of its future proof-ness and its decent integrated GPU, but I went with an Intel-based build for this one because we didn’t need the integrated GPU for it and because AMD still mostly uses old socket AM4 chips to cover the $150-and-below part of the market.

Given the choice between aging AMD CPUs and aging Intel CPUs, I have to give Intel the edge, thanks to the Core i5-13400F’s four E-cores. And if a 13th-gen Core chip lacks cutting-edge performance, it’s plenty fast for a midrange GPU. The $109 Core i5-12400F would also be OK and save a little more money, but we think the extra cores and small clock speed boost are worth the $20-ish premium.

For a budget build, we think your best strategy is to save money everywhere you can so you can squeeze a 16GB AMD Radeon RX 9060 XT into the budget. Credit: Andrew Cunningham

Going with a DDR4 motherboard and RAM saves us a tiny bit, and we’ve also stayed at 16GB of RAM instead of stepping up (some games can sometimes benefit from 32GB, especially if you want to keep a bunch of other stuff running in the background, but 16GB still usually won’t be a huge bottleneck). We upgraded to a 1TB SSD; huge AAA games will eat that up relatively quickly, but there is another M.2 slot you can use to put in another drive later. The power supply and case selections are the same as in our budget pick.

All of that cost-cutting was done in service of stretching the budget to include the 16GB version of AMD’s Radeon RX 9060 XT graphics card.

You could go with the 8GB version of the 9060 XT or Nvidia’s GeForce RTX 5060 and get solid 1080p gaming performance for almost $100 less. But we’re at a point where having 8GB of RAM in your graphics card can be a bottleneck, and that’s a problem that will only get worse over time. The 9060 XT has a consistent edge over the RTX 5060 in our testing, even in games with ray-tracing effects enabled, and at 1440p, the extra memory can easily be the difference between a game that runs and a game that doesn’t.

A more future-proofed budget gaming PC

What it’s good for: Good all-round performance with plenty of memory and storage, plus room for future upgrades.

What it sucks at: Getting you higher frame rates than our budget-budget build.

Cost as of this writing: $1,070 to $1,110, depending on components

As I found myself making cut after cut to maximize the fps-per-dollar we could get from our budget gaming PC, I decided I wanted to spec out a system with the same GPU but with other components that would make it better for non-gaming use and easier to upgrade in the future, with more generous allotments of memory and storage.

This build shifts back to many of the AMD AM5 components we used in our basic budget build, but with an 8-core Ryzen 7 7700X CPU at its heart. Its Zen 4 architecture isn’t the latest and greatest, but Zen 5 is a modest upgrade, and you’ll still get better single- and multi-core processor performance than you do with the Core i5 in our other build. It’s not worth spending more than $50 to step up to a Ryzen 7 9700X, and it’s overkill to spend $330 on a 12-core Ryzen 9 7900X or $380 on a Ryzen 7 7800X3D.

This chip doesn’t come with its own fan, so we’ve included an inexpensive air cooler we like that will give you plenty of thermal headroom.

A 32GB kit of RAM and 2TB of storage will give you ample room for games and enough RAM that you won’t have to worry about the small handful of outliers that benefit from more than 16GB of system RAM, while a marginally beefier power supply gives you a bit more headroom for future upgrades while still keeping costs relatively low.

This build won’t benefit your frame rates much since we’re sticking with the same 16GB RX 9060 XT. But the rest of it is specced generously enough that you could add a GeForce RTX 5070 (currently around $550) or a non-XT Radeon RX 9070 card (around $600) without needing to change any of the other components.

A comfortable 4K gaming rig

What it’s good for: Just about anything! But it’s built to play games at higher resolutions than our budget builds.

What it sucks at: Getting you top-of-the-line bragging rights.

Cost as of this writing: $1,829 to $1,934, depending on components

Our budget builds cover 1080p-to-1440p gaming, and with an RTX 5070 or an RX 9070, they could realistically stretch to 4K in some games. But for more comfortable 4K gaming or super-high-frame-rate 1440p performance, you’ll thank yourself for spending a bit more.

You’ll note that the quality of the component selections here has been bumped up a bit all around. X670 or X870-series boards don’t just get you better I/O; they’ll also get you full PCI Express 5.0 support in the GPU slot and components better-suited to handling faster and more power-hungry components. We’ve swapped to a modular ATX 3.x-compliant power supply to simplify cable management and get a 12V-2×6 power connector. And we picked out a slightly higher-end SSD, too. But we’ve tried not to spend unnecessary money on things that won’t meaningfully improve performance—no 1,000+ watt power supplies, PCIe 5.0 SSDs, or 64GB RAM kits here.

A Ryzen 7 7800X3D might arguably be overkill for this build (especially at 4K, where the GPU will still be the main bottleneck), but it will be useful for getting higher frame rates at lower resolutions and just generally making sure performance stays consistent and smooth. Ryzen 9 7900X, 7950X, or 9900X chips are all good alternatives if you want more multi-core CPU performance, for instance if you plan to stream as you play. A Ryzen 7 9700X or even a 7700X would probably hold up fine if you won’t be doing that kind of thing and want to save a little.

You could cool any of these chips with a closed-loop AIO cooler, but a solid air cooler like the Thermalright model will keep them running cool for less money, and with a less-complicated install process.

A GeForce RTX 5070 Ti is the best 4K performance you can get for less than $1,000, but that doesn’t make it cheap. Credit: Andrew Cunningham

Based on current pricing and availability, I think the RTX 5070 Ti makes the most sense for a non-absurd 4K-capable build. Its prices are still elevated slightly above its advertised $749 MSRP, but it’s giving you RTX 4080/4080 Super-level performance for between $200 and $400 less than those cards launched for. Nvidia’s next step up, the RTX 5080, will run you at least $1,200 or $1,300—and usually more. AMD’s best option, the RX 9070 XT, is a respectable contender, and it’s probably the better choice if you plan on using Linux instead of Windows. But for a Windows-based gaming box, Nvidia still has an edge in games with ray-tracing effects enabled, plus DLSS upscaling and frame generation.

Is it silly that the GPU costs as much as our entire budget gaming PC? Of course! But it is what it is.
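For anyone who wants to check the comparison with the RTX 4080 and 4080 Super, here is a minimal sketch of the math. The launch prices are those cards' announced US MSRPs; the RTX 5070 Ti street price is an assumed placeholder sitting a little above its $749 MSRP, not a quote from any retailer, and `savings_vs_launch` is a hypothetical helper name.

```python
# Launch prices are the announced US MSRPs of the older cards.
LAUNCH_MSRP = {"RTX 4080": 1199, "RTX 4080 Super": 999}

# Hypothetical street price, slightly above the $749 MSRP. Replace it
# with whatever the card actually costs on the day you shop.
ASSUMED_5070_TI_STREET_PRICE = 800

def savings_vs_launch(street_price: int, launch_prices: dict[str, int]) -> dict[str, int]:
    """Return how much less the street price is than each launch price."""
    return {card: msrp - street_price for card, msrp in launch_prices.items()}

savings = savings_vs_launch(ASSUMED_5070_TI_STREET_PRICE, LAUNCH_MSRP)
for card, saved in savings.items():
    print(f"vs. {card} at launch: ${saved} less")
```

At the assumed $800, the gap works out to roughly $200 against the 4080 Super and roughly $400 against the original 4080. The gap shrinks dollar for dollar as the street price climbs.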

Even more than the budget-focused builds, the case here is a matter of personal preference, and $100 or $150 is enough to buy you any one of several dozen competent cases that will fit our chosen components. We’ve highlighted a few from case makers with good reputations to give you a place to start. Some of these also come in multiple colors, with different side panel options and both RGB and non-RGB options to suit your tastes.

If you like something a little more statement-y, the Fractal Design North ($155) and Lian Li Lancool 217 ($120) both include the wood accents that some case makers have been pushing lately. The Fractal Design case comes with both mesh and tempered glass side panel options, depending on how into RGB you are, while the Lancool case includes a whopping five case fans for keeping your system cool.

The “God Box”

What it’s good for: Anything and everything.

What it sucks at: Being affordable.

Cost as of this writing: $4,891 to $5,146

We’re avoiding Xeon and Threadripper territory here—frankly, I’ve never even tried to do a build centered on those chips and wouldn’t trust myself to make recommendations—but this system is as fast as consumer-grade hardware gets.

An Nvidia GeForce RTX 5090 guarantees the fastest GPU performance you can buy and continues the trend of “paying as much for a GPU as you could for an entire fully functional PC.” And while we have specced this build with a single GPU, the motherboard we’ve chosen has a second full-speed PCIe 5.0 x16 slot that you could use for a dual-GPU build.

A Ryzen 9 9950X3D chip gets you top-tier gaming performance and tons of CPU cores. We’re cooling this powerful chip with a 360 mm Arctic Liquid Freezer III Pro cooler, which has generally earned good reviews from Gamers Nexus and other outlets for its value, cooling performance, and quiet operation. A white option is also available if you’re going for a light-mode color scheme instead of our predominantly dark-mode build.

Other components have been pumped up similarly gratuitously. A 1,000 W power supply is the minimum for an RTX 5090, but to give us some headroom, why not use a 1,200 W model with lights on it? Is PCIe 5.0 storage strictly necessary for anything? No! But let’s grab a 4TB PCIe 5.0 SSD anyway. And populating all four of our RAM slots with 32GB sticks of DDR5 avoids any unsightly blank spots inside our case.

We’ve selected a couple of largish case options to house our big builds, though as usual, there are tons of other options to fit all design sensibilities and tastes. Just make sure, if you’re selecting a big Extended ATX motherboard like the X870E Taichi, that your case will fit a board that’s slightly wider than a regular ATX or micro ATX board (the Taichi is 267 mm wide, which should be fine in either of our case selections).
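If you are shopping for a different case, the fit check above boils down to one comparison. This is a minimal sketch: the 267 mm figure comes from this guide and 244 mm is the standard ATX board width, but the case names and their clearance numbers are hypothetical placeholders, so look up the maximum supported motherboard width on the spec sheet of the case you are actually considering.

```python
ATX_WIDTH_MM = 244     # standard ATX board width
TAICHI_WIDTH_MM = 267  # X870E Taichi width, per this guide

def board_fits(board_width_mm: int, case_max_board_width_mm: int) -> bool:
    """True if the case supports a board at least this wide."""
    return board_width_mm <= case_max_board_width_mm

# Hypothetical cases with made-up clearance figures, for illustration only.
hypothetical_cases = {
    "roomy_full_tower": 280,
    "strict_atx_mid_tower": 244,
}

for name, max_width in hypothetical_cases.items():
    verdict = "fits" if board_fits(TAICHI_WIDTH_MM, max_width) else "does not fit"
    print(f"{name}: the Taichi {verdict} (needs {TAICHI_WIDTH_MM} mm, case allows {max_width} mm)")
```

The 23 mm difference from a regular ATX board is small, but it is enough to collide with cable-routing cutouts or grommets in a case built strictly around standard ATX dimensions.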

Andrew is a Senior Technology Reporter at Ars Technica, with a focus on consumer tech including computer hardware and in-depth reviews of operating systems like Windows and macOS. Andrew lives in Philadelphia and co-hosts a weekly book podcast called Overdue.
