Pay-per-output? AI firms blindsided by beefed-up robots.txt instructions.

“Really Simple Licensing” makes it easier for creators to get paid for AI scraping.

Logo for the “Really Simple Licensing” (RSL) standard. Credit: via RSL Collective

Leading Internet companies and publishers—including Reddit, Yahoo, Quora, Medium, The Daily Beast, Fastly, and more—think there may finally be a solution to end AI crawlers hammering websites to scrape content without permission or compensation.

Announced Wednesday morning, the “Really Simple Licensing” (RSL) standard evolves robots.txt instructions by adding an automated licensing layer that’s designed to block bots that don’t fairly compensate creators for content.

Free for any publisher to use starting today, the RSL standard is an open, decentralized protocol that makes clear to AI crawlers and agents the terms for licensing, usage, and compensation of any content used to train AI, a press release noted.

The standard was created by the RSL Collective, which was founded by Doug Leeds, former CEO of Ask.com, and Eckart Walther, a former Yahoo vice president of products and co-creator of the RSS standard, which made it easy to syndicate content across the web.

Based on the “Really Simple Syndication” (RSS) standard, RSL terms can be applied to protect any digital content, including webpages, books, videos, and datasets. The new standard supports “a range of licensing, usage, and royalty models, including free, attribution, subscription, pay-per-crawl (publishers get compensated every time an AI application crawls their content), and pay-per-inference (publishers get compensated every time an AI application uses their content to generate a response),” the press release said.
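To make the difference between the last two models concrete, here is a minimal sketch of how the amounts owed might diverge. Everything in it is an illustrative assumption: the `UsageLog` type, the function names, and the rates (expressed in whole cents) are hypothetical and do not come from the RSL standard or the press release.

```python
from dataclasses import dataclass


@dataclass
class UsageLog:
    crawls: int      # times a crawler fetched the publisher's content
    inferences: int  # times a model response drew on that content


def pay_per_crawl_cents(log: UsageLog, rate_cents: int) -> int:
    # Owed for every fetch, whether or not the content is ever used.
    return log.crawls * rate_cents


def pay_per_inference_cents(log: UsageLog, rate_cents: int) -> int:
    # Owed only when the content actually contributes to a response.
    return log.inferences * rate_cents


# Hypothetical case: content crawled 1,000 times but never referenced.
log = UsageLog(crawls=1_000, inferences=0)
print(pay_per_crawl_cents(log, rate_cents=1))
print(pay_per_inference_cents(log, rate_cents=5))
```

Under these made-up numbers, the publisher is owed money under pay-per-crawl and nothing under pay-per-inference, which is the trade-off the two models represent.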

Leeds told Ars that the idea to use the RSS “playbook” to roll out the RSL standard arose after he invited Walther to speak to University of California, Berkeley students at the end of last year. That’s when the longtime friends with search backgrounds began pondering how AI had changed the search industry, as publishers today are forced to compete with AI outputs referencing their own content as search traffic nosedives.

Walther had watched the RSS standard quickly become adopted by millions of sites, and he realized that RSS had actually always been a licensing standard, Leeds said. Essentially, by adopting the RSS standard, publishers agreed to let search engines license a “bit” of their content in exchange for search traffic, and Walther realized that it could be just as straightforward to add AI licensing terms in the same way. That way, publishers could strive to recapture lost search revenue by agreeing to license all or some part of their content to train AI in return for payment each time AI outputs link to their content.

Leeds told Ars that the RSL standard doesn’t just benefit publishers, though. It also solves a problem for AI companies, which have complained in litigation over AI scraping that there is no effective way to license content across the web.

“We have listened to them, and what we’ve heard them say is… we need a new protocol,” Leeds said. With the RSL standard, AI firms get a “scalable way to get all the content” they want, along with an incentive structure in which they pay only for the best content that their models actually reference.

“If they’re using it, they pay for it, and if they’re not using it, they don’t pay for it,” Leeds said.

No telling yet how AI firms will react to RSL

At this point, it’s hard to say if AI companies will embrace the RSL standard. Ars reached out to Google, Meta, OpenAI, and xAI—some of the big tech companies whose crawlers have drawn scrutiny—to see if it was technically feasible to pay publishers for every output referencing their content. xAI did not respond, and the other companies declined to comment without further detail about the standard, appearing to have not yet considered how a licensing layer beefing up robots.txt could impact their scraping.

Today will likely be the first chance for AI companies to wrap their heads around the idea of paying publishers per output. Leeds confirmed that the RSL Collective did not consult with AI companies when developing the RSL standard.

But AI companies know that they need a constant stream of fresh content to keep their tools relevant and to continually innovate, Leeds suggested. In that way, the RSL standard “supports what supports them,” Leeds said, “and it creates the appropriate incentive system” to create sustainable royalty streams for creators and ensure that human creativity doesn’t wane as AI evolves.

While we’ll have to wait to see how AI firms react to RSL, early adopters of the standard celebrated the launch today. That included Neil Vogel, CEO of People Inc., who said that “RSL moves the industry forward—evolving from simply blocking unauthorized crawlers, to setting our licensing terms, for all AI use cases, at global web scale.”

Simon Wistow, co-founder of Fastly, suggested the solution “is a timely and necessary response to the shifting economics of the web.”

“By making it easy for publishers to define and enforce licensing terms, RSL lays the foundation for a healthy content ecosystem—one where innovation and investment in original work are rewarded, and where collaboration between publishers and AI companies becomes frictionless and mutually beneficial,” Wistow said.

Leeds noted that a key benefit of the RSL standard is that even small creators will now have an opportunity to generate revenue for helping to train AI. Tony Stubblebine, CEO of Medium, did not mince words when explaining the battle that bloggers face as AI crawlers threaten to divert their traffic without compensating them.

“Right now, AI runs on stolen content,” Stubblebine said. “Adopting this RSL Standard is how we force those AI companies to either pay for what they use, stop using it, or shut down.”

How will the RSL standard be enforced?

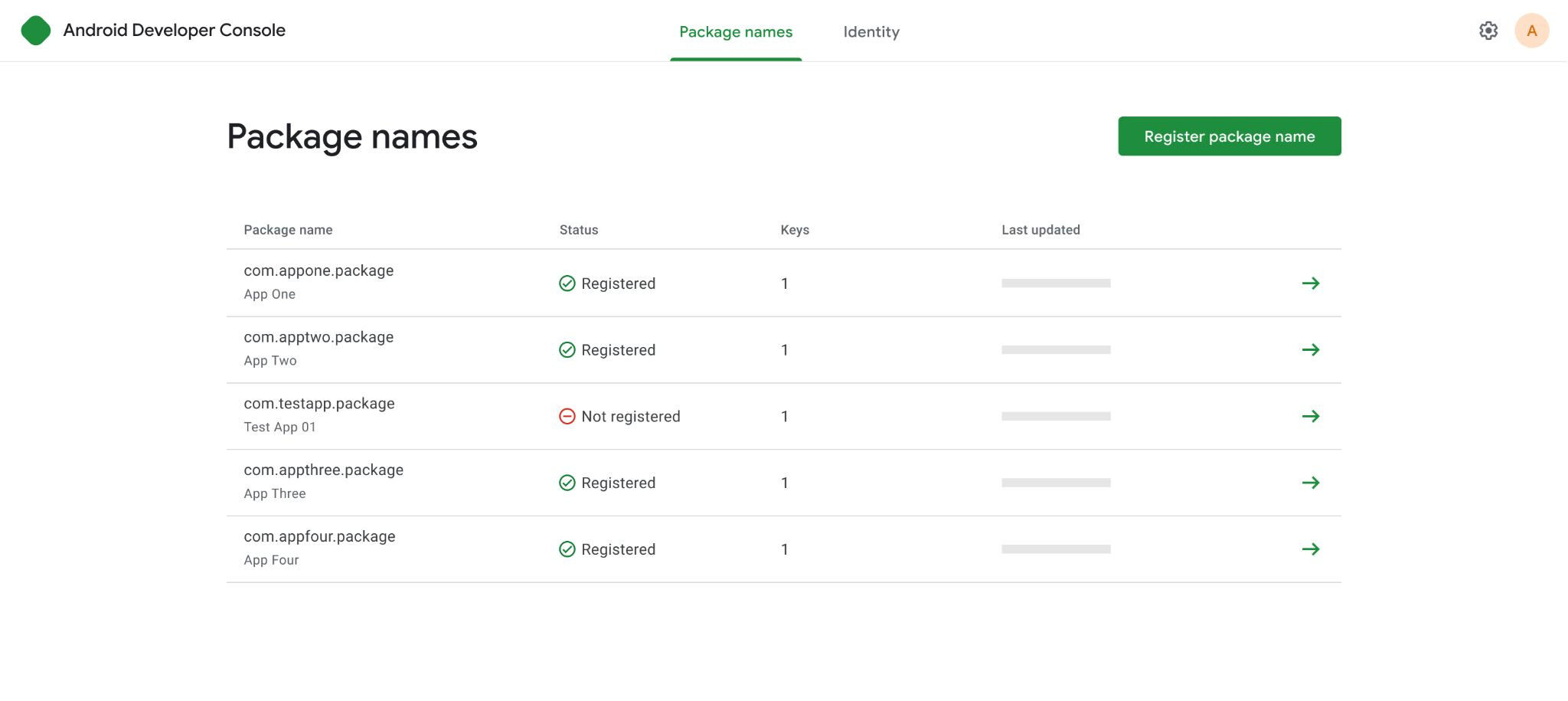

On the RSL standard site, publishers can find common terms to add templated or customized text to their robots.txt files to adopt the RSL standard today and start protecting their content from unfettered AI scraping. Here’s an example of how machine-readable licensing terms could look, added directly to robots.txt files:

    # NOTICE: all crawlers and bots are strictly prohibited from using this
    # content for AI training without complying with the terms of the RSL
    # Collective AI royalty license. Any use of this content for AI training
    # without a license is a violation of our intellectual property rights.
    License: https://rslcollective.org/royalty.xml
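As a rough illustration of how a crawler might discover such terms, the sketch below scans robots.txt text for `License:` lines like the one in the example. The function name `find_license_urls` is hypothetical, and the parsing rules (a case-insensitive directive name, `#` starting a comment) are assumptions borrowed from common robots.txt conventions rather than taken from the RSL specification.

```python
def find_license_urls(robots_txt: str) -> list[str]:
    """Return the value of every `License:` line in a robots.txt body."""
    urls = []
    for raw_line in robots_txt.splitlines():
        # Drop comments: everything from '#' to the end of the line (assumed).
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        # Split on the first colon only, so the "https://..." value stays intact.
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "license":
            urls.append(value.strip())
    return urls


EXAMPLE = """\
# NOTICE: all crawlers and bots are strictly prohibited from using this
# content for AI training without complying with the terms of the RSL
# Collective AI royalty license.
License: https://rslcollective.org/royalty.xml
"""

print(find_license_urls(EXAMPLE))
```

A crawler built along these lines would still need to fetch the linked file and interpret its terms, which this sketch does not attempt.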

Through RSL terms, publishers can automate licensing, with the cloud company Fastly partnering with the collective to provide technical enforcement that Leeds described as tech that acts as a bouncer to keep unapproved bots away from valuable content. It seems likely that Cloudflare, which launched a pay-per-crawl program blocking greedy crawlers in July, could also help enforce the RSL standard.
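The article does not describe how Fastly's enforcement works, but the “bouncer” idea can be sketched in a few lines. In this hypothetical example, the user-agent markers, the notion of a license token, and the `admit` function are all assumptions made for illustration and do not reflect any real Fastly or RSL interface.

```python
from typing import Optional

# Hypothetical user-agent substrings identifying AI crawlers.
AI_CRAWLER_MARKERS = ("exampleaibot", "samplecrawler")


def admit(user_agent: str, license_token: Optional[str], valid_tokens: set) -> bool:
    """Return True if the request should be let through."""
    is_ai_crawler = any(marker in user_agent.lower() for marker in AI_CRAWLER_MARKERS)
    if not is_ai_crawler:
        # Ordinary visitors are not subject to the license check.
        return True
    # AI crawlers get in only with a token the publisher recognizes.
    return license_token is not None and license_token in valid_tokens


valid = {"token-abc"}
print(admit("Mozilla/5.0", None, valid))
print(admit("ExampleAIBot/1.0", None, valid))
print(admit("ExampleAIBot/1.0", "token-abc", valid))
```

A real deployment would need something sturdier than user-agent matching, since a crawler can misreport its identity.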

For publishers, the standard “solves a business problem immediately,” Leeds told Ars, so the collective is hopeful that RSL will be rapidly and widely adopted. As further incentive, publishers can also rely on the RSL standard to “easily encrypt and license non-published, proprietary content to AI companies, including paywalled articles, books, videos, images, and data,” the RSL Collective site said, and that potentially could expand AI firms’ data pool.

On top of technical enforcement, Leeds said that publishers and content creators could legally enforce the terms, noting that the recent $1.5 billion Anthropic settlement suggests “there’s real money at stake” if you don’t train AI “legitimately.”

Should the industry adopt the standard, it could “establish fair market prices and strengthen negotiation leverage for all publishers,” the press release said. And Leeds noted that it’s very common for regulations to follow industry solutions (consider the Digital Millennium Copyright Act). Since the RSL Collective is already in talks with lawmakers, Leeds thinks “there’s good reason to believe” that AI companies will soon “be forced to acknowledge” the standard.

“But even better than that,” Leeds said, “it’s in their interest” to adopt the standard.

With RSL, AI firms can license content at scale “in a way that’s fair [and] preserves the content that they need to make their products continue to innovate.”

Additionally, the RSL standard may solve a problem that risks gutting trust and interest in AI at this early stage.

Leeds noted that currently, AI outputs don’t provide “the best answer” to prompts but instead rely on mashing up answers from different sources to avoid taking too much content from one site. That means that not only do AI companies “spend an enormous amount of money on compute costs to do that,” but AI tools may also be more prone to hallucination in the process of “mashing up” source material “to make something that’s not the best answer because they don’t have the rights to the best answer.”

“The best answer could exist somewhere,” Leeds said. But “they’re spending billions of dollars to create hallucinations, and we’re talking about: Let’s just solve that with a licensing scheme that allows you to use the actual content in a way that solves the user’s query best.”

By transforming the “ecosystem” with a standard that’s “actually sustainable and fair,” Leeds said that AI companies could also ensure that humanity never gets to the point where “humans stop producing” and “turn to AI to reproduce what humans can’t.”

Failing to adopt the RSL standard would be bad for AI innovation, Leeds suggested, perhaps paving the way for AI to replace search with a “sort of self-fulfilling swap of bad content that actually one doesn’t have any current information, doesn’t have any current thinking, because it’s all based on old training information.”

To Leeds, the RSL standard is ultimately “about creating the system that allows the open web to continue. And that happens when we get adoption from everybody,” he said, insisting that “literally the small guys are as important as the big guys” in pushing the entire industry to change and fairly compensate creators.
