Gemini “coming together in really awesome ways,” Google says after 2.5 Pro release

Google’s Tulsee Doshi talks vibes and efficiency in Gemini 2.5 Pro.

Google was caught flat-footed by the sudden skyrocketing interest in generative AI despite its role in developing the underlying technology. This prompted the company to refocus its considerable resources on catching up to OpenAI. Since then, we’ve seen the detail-flubbing Bard and numerous versions of the multimodal Gemini models. While Gemini has struggled to make progress in benchmarks and user experience, that could be changing with the new 2.5 Pro (Experimental) release. With big gains in benchmarks and vibes, this might be the first Google model that can make a dent in ChatGPT’s dominance.

We recently spoke to Google’s Tulsee Doshi, director of product management for Gemini, to talk about the process of releasing Gemini 2.5, as well as where Google’s AI models are going in the future.

Welcome to the vibes era

Google may have had a slow start in building generative AI products, but the Gemini team has picked up the pace in recent months. The company released Gemini 2.0 in December, showing a modest improvement over the 1.5 branch. It took only three months to reach 2.5, so Gemini 2.0 Pro hadn’t even left the experimental stage when its successor arrived. To hear Doshi tell it, this was the result of Google’s long-term investments in Gemini.

“A big part of it is honestly that a lot of the pieces and the fundamentals we’ve been building are now coming together in really awesome ways,” Doshi said. “And so we feel like we’re able to pick up the pace here.”

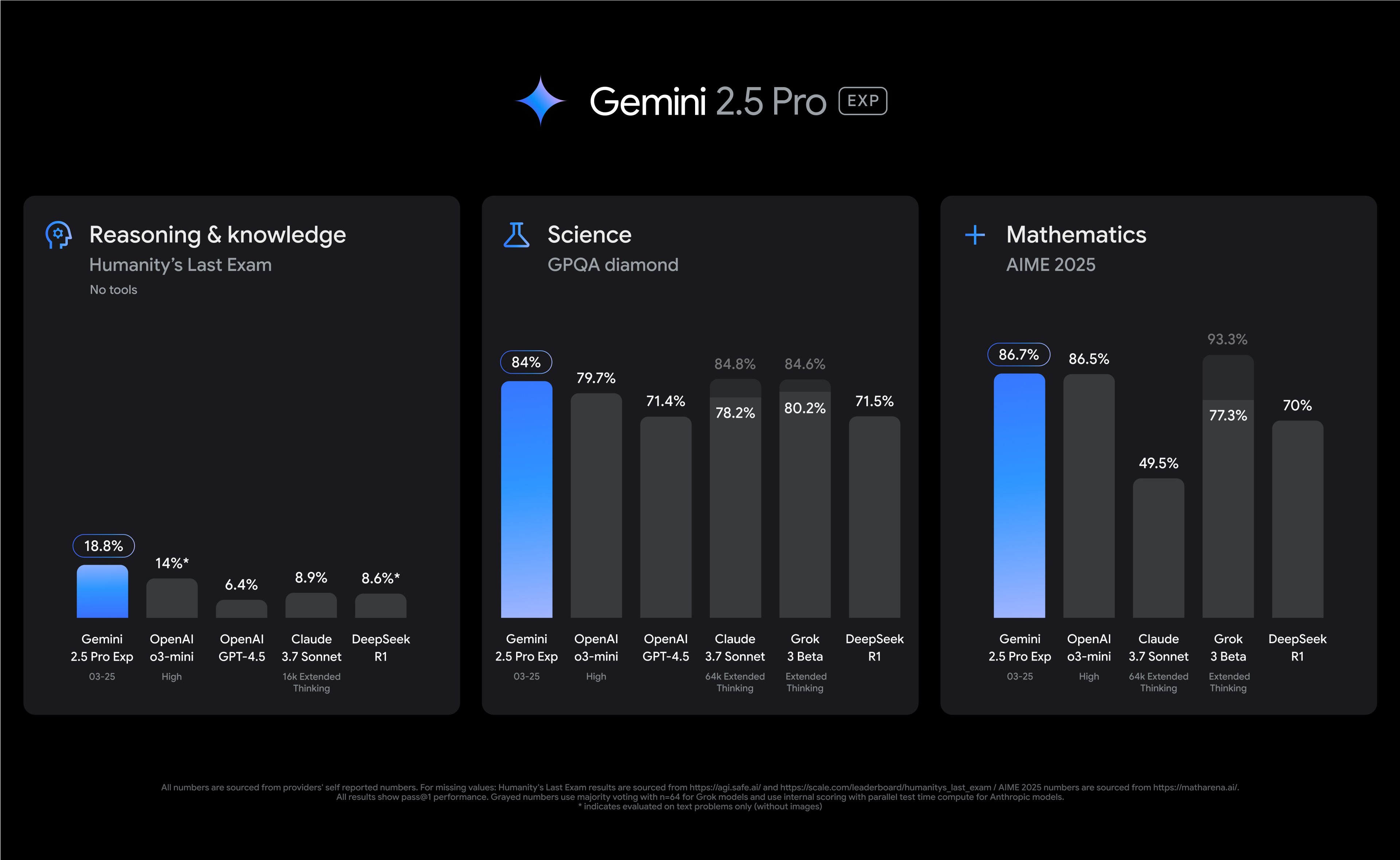

The process of releasing a new model involves testing a lot of candidates. According to Doshi, Google takes a multilayered approach to inspecting those models, starting with benchmarks. “We have a set of evals, both external academic benchmarks as well as internal evals that we created for use cases that we care about,” she said.

Credit: Google

The team also uses these tests to work on safety, which, as Google points out at every given opportunity, is still a core part of how it develops Gemini. Doshi noted that making a model safe and ready for wide release involves adversarial testing and lots of hands-on time.

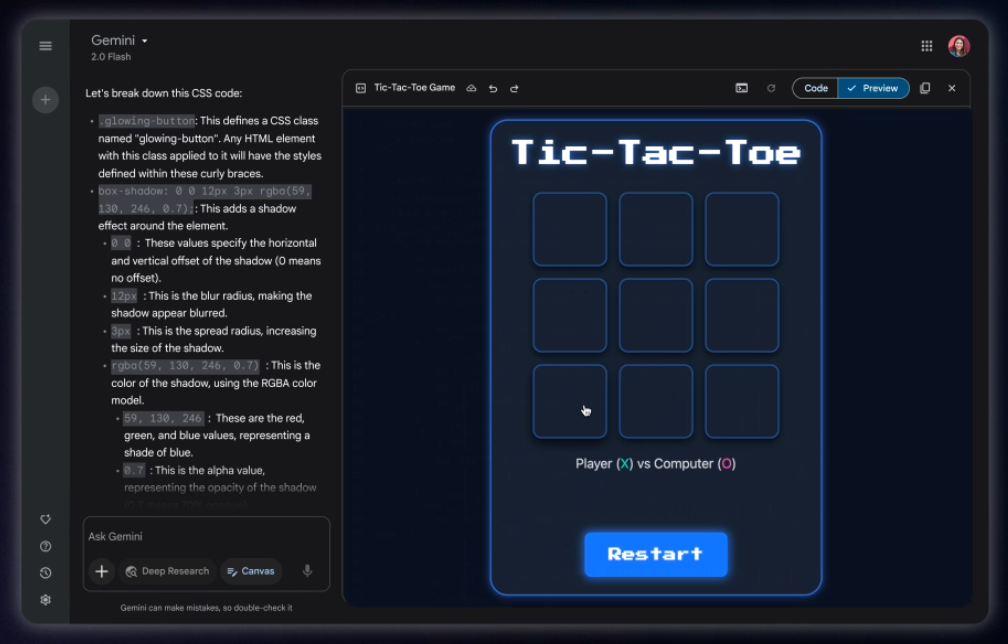

But we can’t forget the vibes, which have become an increasingly important part of AI models. There’s great focus on the vibe of outputs—how engaging and useful they are. There’s also the emerging trend of vibe coding, in which you use AI prompts to build things instead of typing the code yourself. For the Gemini team, these concepts are connected. The team uses product and user feedback to understand the “vibes” of the output, be that code or just an answer to a question.

Google has noted on a few occasions that Gemini 2.5 is at the top of the LM Arena leaderboard, which shows that people who have used the model prefer the output by a considerable margin—it has good vibes. That’s certainly a positive place for Gemini to be after a long climb, but there is some concern in the field that too much emphasis on vibes could push us toward models that make us feel good regardless of whether the output is good, a property known as sycophancy.

If the Gemini team has concerns about feel-good models, they’re not letting it show. Doshi mentioned the team’s focus on code generation, which she noted can be optimized for “delightful experiences” without stoking the user’s ego. “I think about vibe less as a certain type of personality trait that we’re trying to work towards,” Doshi said.

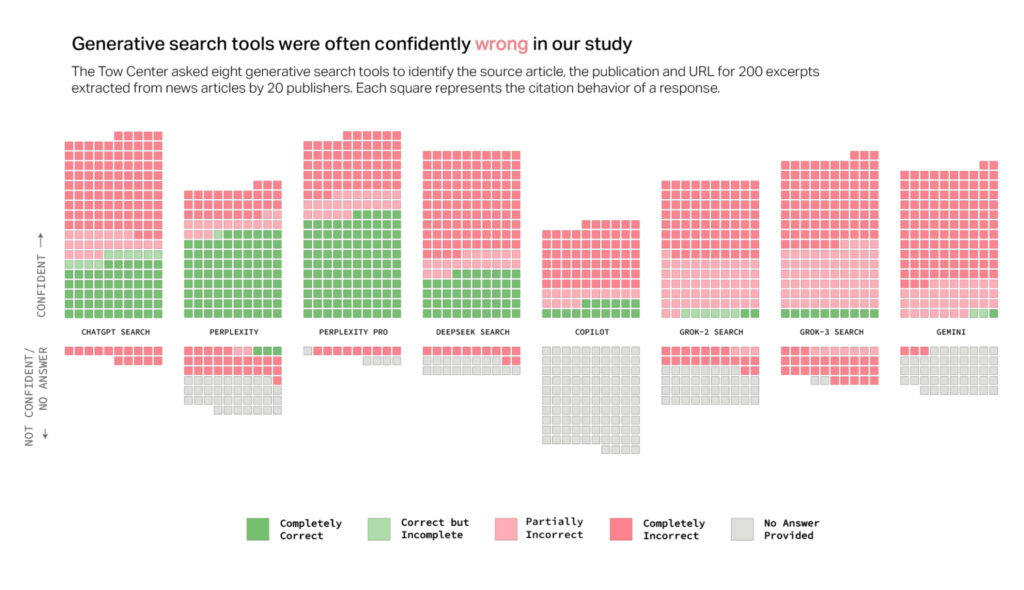

Hallucinations are another area of concern with generative AI models. Google has had plenty of embarrassing experiences with Gemini and Bard making things up, but the Gemini team believes they’re on the right path. Gemini 2.5 apparently has set a high-water mark in the team’s factuality metrics. But will hallucinations ever be reduced to the point we can fully trust the AI? No comment on that front.

Don’t overthink it

Perhaps the most interesting thing you’ll notice when using Gemini 2.5 is that it’s very fast compared to other models that use simulated reasoning. Google says it’s building this “thinking” capability into all of its models going forward, which should lead to improved outputs. The expansion of reasoning in large language models in 2024 resulted in a noticeable improvement in the quality of these tools. It also made them even more expensive to run, exacerbating an already serious problem with generative AI.

The larger and more complex an LLM becomes, the more expensive it is to run. Google hasn’t released technical data like parameter count on its newer models—you’ll have to go back to the 1.5 branch to get that kind of detail. However, Doshi explained that Gemini 2.5 is not a substantially larger model than Google’s last iteration, calling it “comparable” in size to 2.0.

Gemini 2.5 is more efficient in one key area: the chain of thought. It’s Google’s first public model to support a feature called Dynamic Thinking, which allows the model to modulate the amount of reasoning that goes into an output. This is just the first step, though.

“I think right now, the 2.5 Pro model we ship still does overthink for simpler prompts in a way that we’re hoping to continue to improve,” Doshi said. “So one big area we are investing in is Dynamic Thinking as a way to get towards our [general availability] version of 2.5 Pro where it thinks even less for simpler prompts.”

Credit: Ryan Whitwam

Google doesn’t break out earnings from its new AI ventures, but we can safely assume there’s no profit to be had. No one has managed to turn these huge LLMs into a viable business yet. OpenAI, which has the largest user base with ChatGPT, loses money even on the users paying for its $200 Pro plan. Google is planning to spend $75 billion on AI infrastructure in 2025, so it will be crucial to make the most of this very expensive hardware. Building models that don’t waste cycles on overthinking “Hi, how are you?” could be a big help.

Missing technical details

Google plays it close to the chest with Gemini, but the 2.5 Pro release has offered more insight into where the company plans to go than ever before. To really understand this model, though, we’ll need to see the technical report. Google last released such a document for Gemini 1.5. We still haven’t seen the 2.0 version, and we may never see that document now that 2.5 has supplanted 2.0.

Doshi notes that 2.5 Pro is still an experimental model, so don’t expect full evaluation reports right away. A Google spokesperson clarified that a full technical evaluation report on the 2.5 branch is planned, but there is no firm timeline. Google hasn’t even released updated model cards for Gemini 2.0, let alone 2.5. These documents are brief one-page summaries of a model’s training, intended use, evaluation data, and more. They’re essentially LLM nutrition labels. A model card is much less detailed than a technical report, but it’s better than nothing. Google confirms model cards are on the way for Gemini 2.0 and 2.5.

Given the recent rapid pace of releases, Gemini 2.5 Pro could roll out more widely around Google I/O in May. We certainly hope Google has more details when the 2.5 branch expands. As Gemini development picks up steam, transparency shouldn’t fall by the wayside.

Ryan Whitwam is a senior technology reporter at Ars Technica, covering the ways Google, AI, and mobile technology continue to change the world. Over his 20-year career, he’s written for Android Police, ExtremeTech, Wirecutter, NY Times, and more. He has reviewed more phones than most people will ever own. You can follow him on Bluesky, where you will see photos of his dozens of mechanical keyboards.
