Google search’s made-up AI explanations for sayings no one ever said, explained

But what does “meaning” mean?

A partial defense of (some of) AI Overview’s fanciful idiomatic explanations.

Mind… blown. Credit: Getty Images

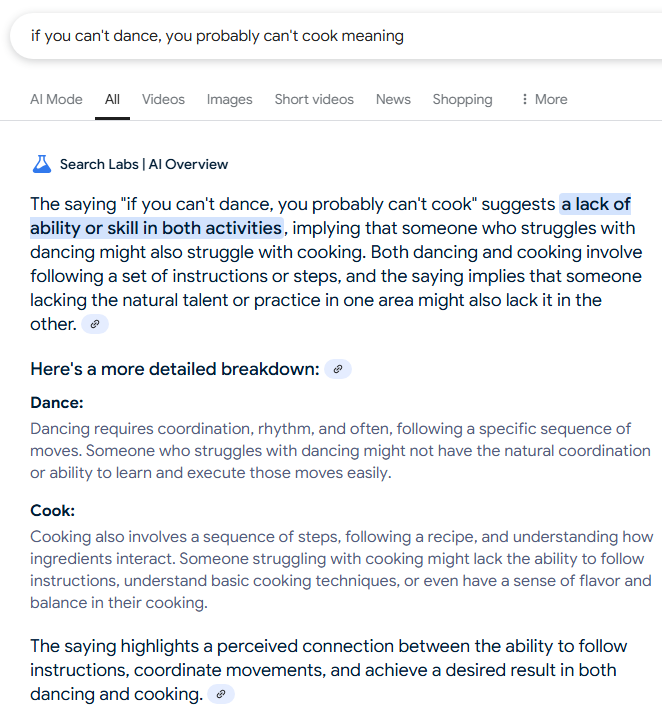

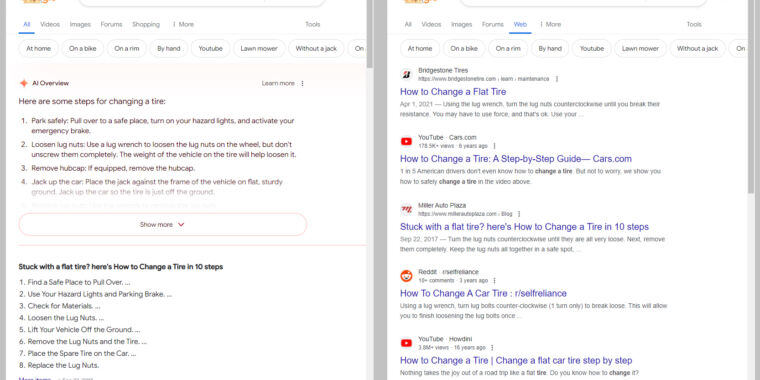

Last week, the phrase “You can’t lick a badger twice” unexpectedly went viral on social media. The nonsense sentence—which was likely never uttered by a human before last week—had become the poster child for the newly discovered way Google search’s AI Overviews makes up plausible-sounding explanations for made-up idioms (though the concept seems to predate that specific viral post by at least a few days).

Google users quickly discovered that typing any concocted phrase into the search bar with the word “meaning” attached at the end would generate an AI Overview with a purported explanation of its idiomatic meaning. Even the most nonsensical attempts at new proverbs resulted in a confident explanation from Google’s AI Overview, created right there on the spot.

In the wake of the “lick a badger” post, countless users flocked to social media to share Google’s AI interpretations of their own made-up idioms, often expressing horror or disbelief at Google’s take on their nonsense. Those posts often highlight the overconfident way the AI Overview frames its idiomatic explanations and occasional problems with the model confabulating sources that don’t exist.

But after reading through dozens of publicly shared examples of Google’s explanations for fake idioms—and generating a few of my own—I’ve come away somewhat impressed with the model’s almost poetic attempts to glean meaning from gibberish and make sense out of the senseless.

Talk to me like a child

Let’s try a thought experiment: Say a child asked you what the phrase “you can’t lick a badger twice” means. You’d probably say you’ve never heard that particular phrase, or ask the child where they heard it. You might add that it doesn’t really make sense without more context.

Someone on Threads noticed you can type any random sentence into Google, then add “meaning” afterwards, and you’ll get an AI explanation of a famous idiom or phrase you just made up. Here is mine

— Greg Jenner (@gregjenner.bsky.social) April 23, 2025 at 6:15 AM

But let’s say the child persisted and really wanted an explanation for what the phrase means. So you’d do your best to generate a plausible-sounding answer. You’d search your memory for possible connotations for the word “lick” and/or symbolic meaning for the noble badger to force the idiom into some semblance of sense. You’d reach back to other similar idioms you know to try to fit this new, unfamiliar phrase into a wider pattern (anyone who has played the excellent board game Wise and Otherwise might be familiar with the process).

Google’s AI Overview doesn’t go through exactly that kind of human thought process when faced with a similar question about the same saying. But in its own way, the large language model also does its best to generate a plausible-sounding response to an unreasonable request.

As seen in Greg Jenner’s viral Bluesky post, Google’s AI Overview suggests that “you can’t lick a badger twice” means that “you can’t trick or deceive someone a second time after they’ve been tricked once. It’s a warning that if someone has already been deceived, they are unlikely to fall for the same trick again.” As an attempt to derive meaning from a meaningless phrase—which was, after all, the user’s request—that’s not half bad. Faced with a phrase that has no inherent meaning, the AI Overview still makes a good-faith effort to answer the user’s request and draw some plausible explanation out of troll-worthy nonsense.

Contrary to the computer science truism of “garbage in, garbage out,” Google here is taking in some garbage and spitting out… well, a workable interpretation of garbage, at the very least.

Google’s AI Overview even goes into more detail explaining its thought process. “Lick” here means to “trick or deceive” someone, it says, a bit of a stretch from the dictionary definition of lick as “comprehensively defeat,” but probably close enough for an idiom (and a plausible iteration of the idiom, “Fool me once, shame on you; fool me twice, shame on me…”). Google also explains that the badger part of the phrase “likely originates from the historical sport of badger baiting,” a practice I was sure Google was hallucinating until I looked it up and found it was real.

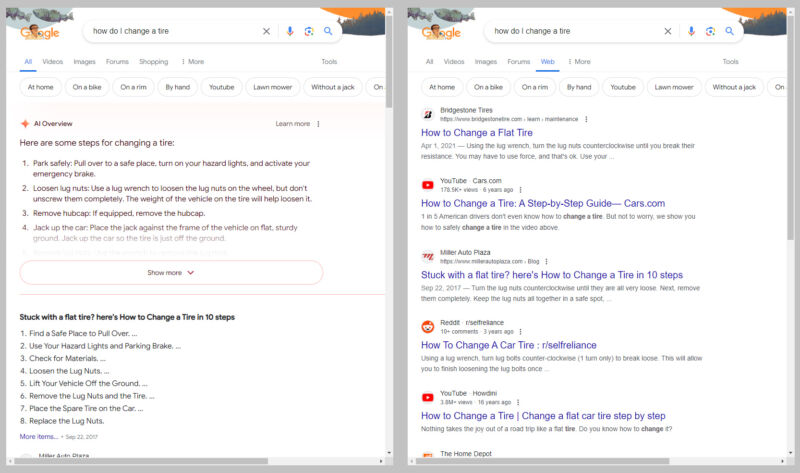

It took me 15 seconds to make up this saying but now I think it kind of works! Credit: Kyle Orland / Google

I found plenty of other examples where Google’s AI derived more meaning than the original requester’s gibberish probably deserved. Google interprets the phrase “dream makes the steam” as an almost poetic statement about imagination powering innovation. The line “you can’t humble a tortoise” similarly gets interpreted as a statement about the difficulty of intimidating “someone with a strong, steady, unwavering character (like a tortoise).”

Google also often finds connections that the original nonsense idiom creators likely didn’t intend. For instance, Google was able to link the made-up idiom “A deft cat always rings the bell” to the real concept of belling the cat. And in attempting to interpret the nonsense phrase “two cats are better than grapes,” the AI Overview correctly notes that grapes can be toxic to cats.

Brimming with confidence

Even when Google’s AI Overview works hard to make the best of a bad prompt, I can still understand why the responses rub a lot of users the wrong way. A lot of the problem, I think, has to do with the LLM’s unearned confident tone, which pretends that any made-up idiom is a common saying with a well-established and authoritative meaning.

Rather than framing its responses as a “best guess” at an unknown phrase (as a human might when responding to a child in the example above), Google generally provides the user with a single, authoritative explanation for what an idiom means, full stop. Even with the occasional use of couching words such as “likely,” “probably,” or “suggests,” the AI Overview comes off as unnervingly sure of the accepted meaning for some nonsense the user made up five seconds ago.

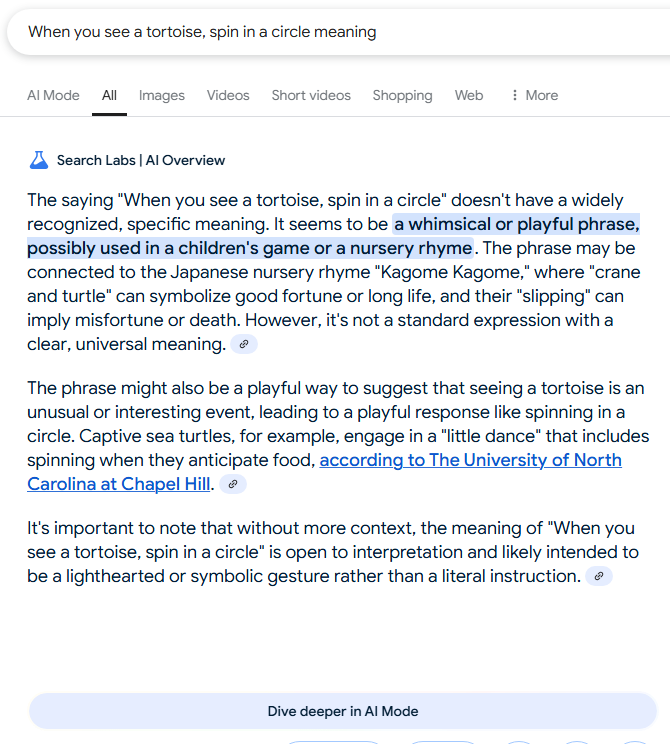

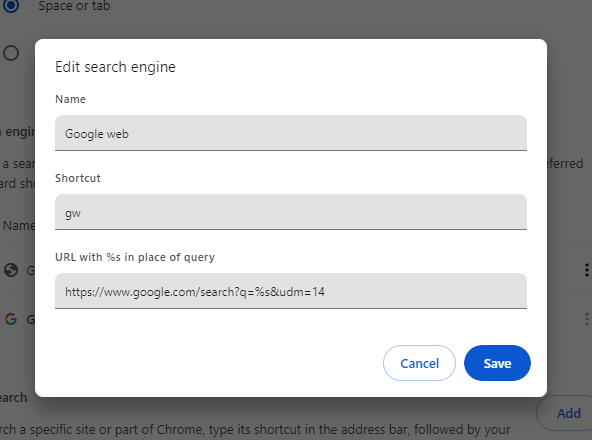

If Google’s AI Overviews always showed this much self-doubt, we’d be getting somewhere. Credit: Google / Kyle Orland

I was able to find one exception to this in my testing. When I asked Google the meaning of “when you see a tortoise, spin in a circle,” Google reasonably told me that the phrase “doesn’t have a widely recognized, specific meaning” and that it’s “not a standard expression with a clear, universal meaning.” With that context, Google then offered suggestions for what the phrase “seems to” mean and mentioned Japanese nursery rhymes that it “may be connected” to, before concluding that it is “open to interpretation.”

Those qualifiers go a long way toward properly contextualizing the guesswork Google’s AI Overview is actually conducting here. And if Google provided that kind of context in every AI summary explanation of a made-up phrase, I don’t think users would be quite as upset.

Unfortunately, LLMs like this have trouble knowing what they don’t know, meaning moments of self-doubt like the tortoise interpretation here tend to be few and far between. It’s not like Google’s language model has some master list of idioms in its neural network that it can consult to determine what is and isn’t a “standard expression” that it can be confident about. Usually, it’s just projecting a self-assured tone while struggling to force the user’s gibberish into meaning.

Zeus disguised himself as what?

The worst examples of Google’s idiomatic AI guesswork are ones where the LLM slips past plausible interpretations and into sheer hallucination of completely fictional sources. The phrase “a dog never dances before sunset,” for instance, did not appear in the film Before Sunrise, no matter what Google says. Similarly, “There are always two suns on Tuesday” does not appear in The Hitchhiker’s Guide to the Galaxy film despite Google’s insistence.

Literally in the one I tried.

— Sarah Vaughan (@madamefelicie.bsky.social) April 23, 2025 at 7:52 AM

There’s also no indication that the made-up phrase “Welsh men jump the rabbit” originated on the Welsh island of Portland, or that “peanut butter platform heels” refers to a scientific experiment creating diamonds from the sticky snack. We’re also unaware of any Greek myth where Zeus disguises himself as a golden shower to explain the phrase “beware what glitters in a golden shower.” (Update: As many commenters have pointed out, this last one is actually a reference to the Greek myth of Danaë and the shower of gold, showing Google’s AI knows more about this potential symbolism than I do.)

The fact that Google’s AI Overview presents these completely made-up sources with the same self-assurance as its abstract interpretations is a big part of the problem here. It’s also a persistent problem for LLMs that tend to make up news sources and cite fake legal cases regularly. As usual, one should be very wary when trusting anything an LLM presents as an objective fact.

When it comes to the more artistic and symbolic interpretation of nonsense phrases, though, I think Google’s AI Overviews have gotten something of a bad rap recently. Presented with the difficult task of explaining nigh-unexplainable phrases, the model does its best, generating interpretations that can border on the profound at times. While the authoritative tone of those responses can sometimes be annoying or actively misleading, it’s at least amusing to see the model’s best attempts to deal with our meaningless phrases.

Kyle Orland has been the Senior Gaming Editor at Ars Technica since 2012, writing primarily about the business, tech, and culture behind video games. He has journalism and computer science degrees from University of Maryland. He once wrote a whole book about Minesweeper.
