Framework Laptop 16 update brings Nvidia GeForce to the modular gaming laptop

It’s been a busy year for Framework, the company behind the now well-established series of repairable, upgradeable, modular laptops (and one paradoxically less-upgradeable desktop). The company has launched a version of the Framework Laptop 13 with Ryzen AI processors, the new Framework Laptop 12, and the aforementioned desktop in the last six months, and last week, Framework teased that it still had “something big coming.”

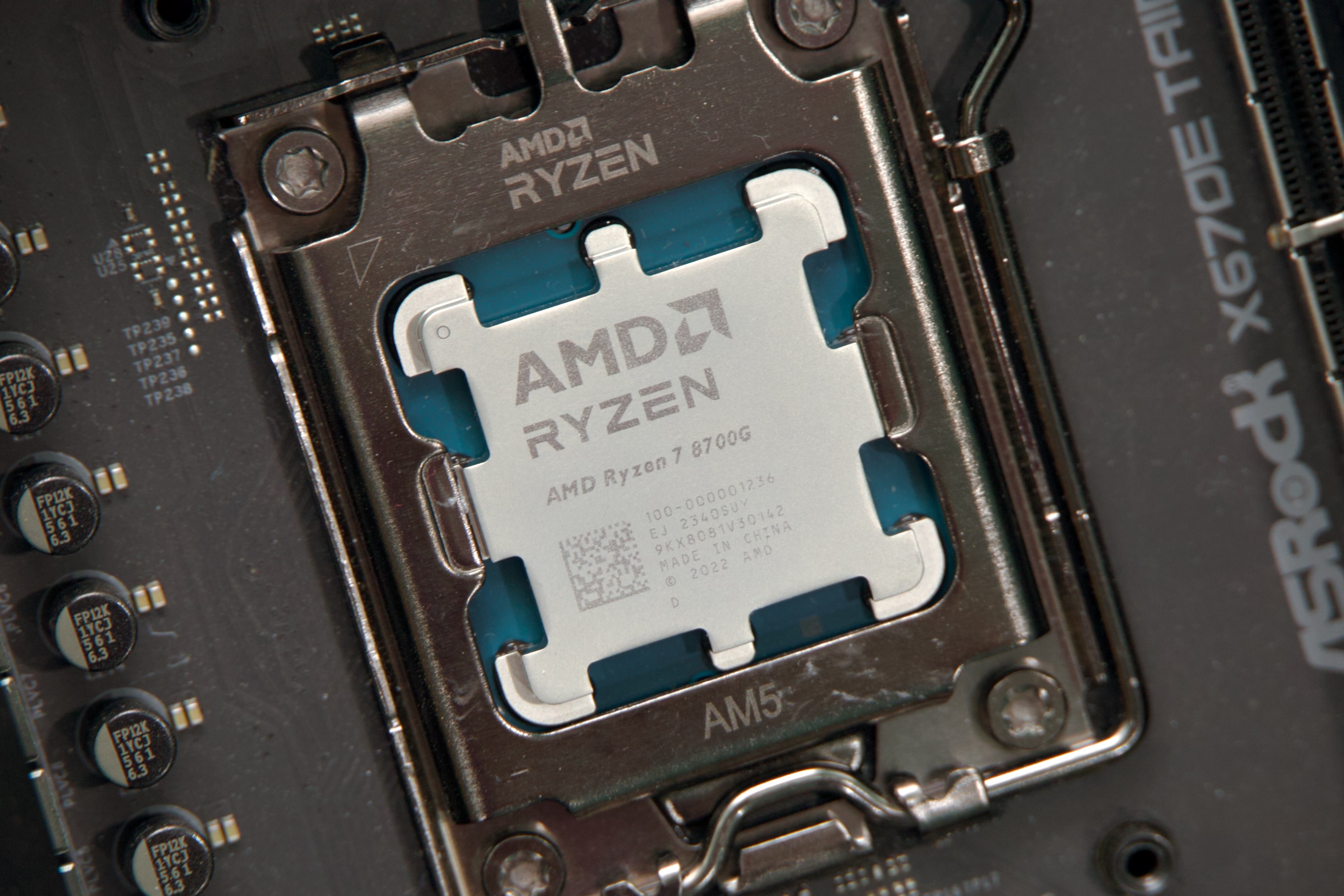

That “something big” turns out to be the first-ever update to the Framework Laptop 16, Framework’s more powerful gaming-laptop-slash-mobile-workstation. Framework is updating the laptop with Ryzen AI processors and new integrated Radeon GPUs and is introducing a new graphics module with the mobile version of Nvidia’s GeForce RTX 5070—one that’s also fully compatible with the original Laptop 16, for upgraders.

Preorders for the new laptop open today, and pricing starts at $1,499 for a DIY Edition without RAM, storage, an OS, or Expansion Cards, a $100 increase from the price of the first Framework Laptop 16. The first units will begin shipping in November.

While Framework has launched multiple updates for its original Laptop 13, this is the first time it has updated the hardware of one of its other computers. We wouldn’t expect the just-launched Framework Laptop 12 or Framework Desktop to get an internal overhaul any time soon, but the Laptop 16 will be pushing 2 years old by the time this upgrade launches.

The old Ryzen 7 7840HS CPU version of the Laptop 16 will still be available going forward at a slightly reduced starting price of $1,299 (for the DIY edition, before RAM and storage). The Ryzen 9 7940HS model will stick around until it sells out, at which point Framework says it’s going away.

GPU details and G-Sync asterisks

The Laptop 16’s new graphics module and cooling system, also exploded. Credit: Framework

This RTX 5070 graphics module includes a redesigned heatsink and fan system, plus an additional built-in USB-C port that supports both display output and power input (potentially freeing up one of your Expansion Card slots for something else). Because of the additional power draw of the GPU and the other new components, Framework is switching to a 240 W default power supply for the new Framework Laptop 16, up from the previous 180 W power brick.
