2026 Lucid Air Touring review: This feels like a complete car now

Life as a startup carmaker is hard—just ask Lucid Motors.

When we met the brand and its prototype Lucid Air sedan in 2017, the company planned to put the first cars in customers’ hands within a couple of years. But you know what they say about plans. A lack of funding paused everything until late 2018, when Saudi Arabia’s sovereign wealth fund bought itself a stake. A billion dollars meant Lucid could build a factory—at the cost of alienating some former fans because of the source.

Then the pandemic happened, further pushing back timelines as supply shortages took hold. But the Air did go on sale, and it has more recently been joined by the Gravity SUV. There’s even a much more affordable midsize SUV in the works called the Earth. Sales more than doubled in 2025, and after spending a week with a model year 2026 Lucid Air Touring, I can understand why.

There are now quite a few different versions of the Air to choose from. For just under a quarter of a million dollars, there’s the outrageously powerful Air Sapphire, which offers acceleration so rapid it’s unlikely your internal organs will ever truly get used to the experience. At the other end of the spectrum is the $70,900 Air Pure, a single-motor model that’s currently the brand’s entry point but which also stands as a darn good EV.

The last time I tested a Lucid, it was the Air Grand Touring almost three years ago. That car mostly impressed me but still felt a little unfinished, especially at $138,000. This time, I looked at the Air Touring, which starts at $79,900, and the experience was altogether more polished.

Which one?

The Touring features a less-powerful all-wheel-drive powertrain than the Grand Touring, although to put “less-powerful” into context, with 620 hp (462 kW) on tap, there are almost as many horses available as in the legendary McLaren F1. (That remains a mental benchmark for many of us of a certain age.)

The Touring’s 885 lb-ft (1,200 Nm) is far more than BMW’s 6-liter V12 can generate, but at 5,009 lbs (2,272 kg), the electric sedan weighs twice as much as the carbon-fiber supercar. The fact that the Air Touring can reach 60 mph (97 km/h) from a standing start in just 0.2 seconds more than the McLaren tells you plenty about how much more accessible acceleration has become in the past few decades.

At least, it will if you choose the fastest of the three drive modes, labeled Sprint. There’s also Swift, and the least frantic of the three, Smooth. Helpfully, each mode remembers the regenerative braking setting you chose for when you lift off the accelerator pedal. Unlike many other EV makers, Lucid does not use a brake-by-wire setup, and pressing the brake pedal will only ever slow the car via friction brakes. Even with lift-off regen set to off, the car does not coast as freely as the electric powertrains developed by German OEMs like Mercedes-Benz, a consequence of its permanent magnet electric motors.

This is not to suggest that Lucid is doing something wrong, not with its efficiency numbers. On 19-inch aero-efficient wheels, the car has an EPA range of 396 miles (637 km) from a 92 kWh battery pack. As just about everyone knows, you won’t get ideal EV efficiency during winter, and our test with the Lucid in early January coincided with some decidedly cold temperatures. Our car also wore the larger 20-inch wheels ($1,750). Despite this, I averaged almost 4 miles/kWh (15.5 kWh/100 km) on longer highway drives, although this fell to around 3.5 miles/kWh (17.8 kWh/100 km) in the city.
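For anyone who wants to check the efficiency math, here is a minimal Python sketch of the unit conversion, plus a rough range estimate at the efficiency I saw. The function names are my own, and the range estimate assumes the full 92 kWh is usable, which is an assumption rather than a Lucid spec, so treat it as a ceiling.

```python
KM_PER_MILE = 1.609344  # exact definition of the international mile


def mi_per_kwh_to_kwh_per_100km(mi_per_kwh: float) -> float:
    """Convert efficiency from miles/kWh to kWh/100 km."""
    km_per_kwh = mi_per_kwh * KM_PER_MILE
    return 100.0 / km_per_kwh


def rough_range_miles(pack_kwh: float, mi_per_kwh: float) -> float:
    """Rough range estimate; assumes the whole pack capacity is usable."""
    return pack_kwh * mi_per_kwh


highway = mi_per_kwh_to_kwh_per_100km(4.0)  # observed highway figure
city = mi_per_kwh_to_kwh_per_100km(3.5)     # observed city figure

print(f"Highway: {highway:.1f} kWh/100 km")  # 15.5
print(f"City:    {city:.1f} kWh/100 km")     # 17.8
print(f"Rough highway range: {rough_range_miles(92, 4.0):.0f} miles")  # 368
print(f"Rough city range:    {rough_range_miles(92, 3.5):.0f} miles")  # 322
```

Under those assumptions, a cold-weather highway trip on 20-inch wheels works out to something under 370 miles on a full pack, which is not far off the EPA figure for the more efficient 19-inch wheels.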

Recharging the Air Touring also helped illustrate how the public DC fast-charging experience has matured over the years. The Lucid uses the ISO 15118 “plug and charge” protocol, so you don’t need to mess around with an app or really do anything more complicated than plug the charging cable into the Lucid’s CCS1 socket.

After the car and charger complete their handshake, the car gives the charger account and billing info, then the electrons flow. Charging from 27 to 80 percent with a manually preconditioned battery took 36 minutes. During that time, the car added 53.3 kWh, which equated to 209 miles (336 km) of range, according to the dash. Although we didn’t test AC charging, 0–100 percent should take around 10 hours.
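The numbers from that session also give a sense of the average charging rate. This is a small Python sketch using only the figures reported above. The function name is hypothetical, and the result is an average over the whole session, not a peak rate, which I did not record.

```python
KM_PER_MILE = 1.609344  # exact definition of the international mile


def charge_session_summary(energy_kwh: float, minutes: float, miles_added: float) -> dict:
    """Summarize a DC fast-charging session from dash-reported figures."""
    hours = minutes / 60.0
    return {
        "avg_power_kw": energy_kwh / hours,       # session average, not peak
        "mi_per_kwh": miles_added / energy_kwh,   # efficiency the dash assumed
        "km_added": miles_added * KM_PER_MILE,
    }


# 53.3 kWh added in 36 minutes, shown on the dash as 209 miles of range
session = charge_session_summary(energy_kwh=53.3, minutes=36, miles_added=209)

print(f"Average power: {session['avg_power_kw']:.1f} kW")        # 88.8
print(f"Implied efficiency: {session['mi_per_kwh']:.2f} mi/kWh")  # 3.92
print(f"Range added: {session['km_added']:.0f} km")               # 336
```

The implied efficiency of about 3.9 miles/kWh lines up with the "almost 4" I saw on the highway, which suggests the dash estimate was based on recent driving.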

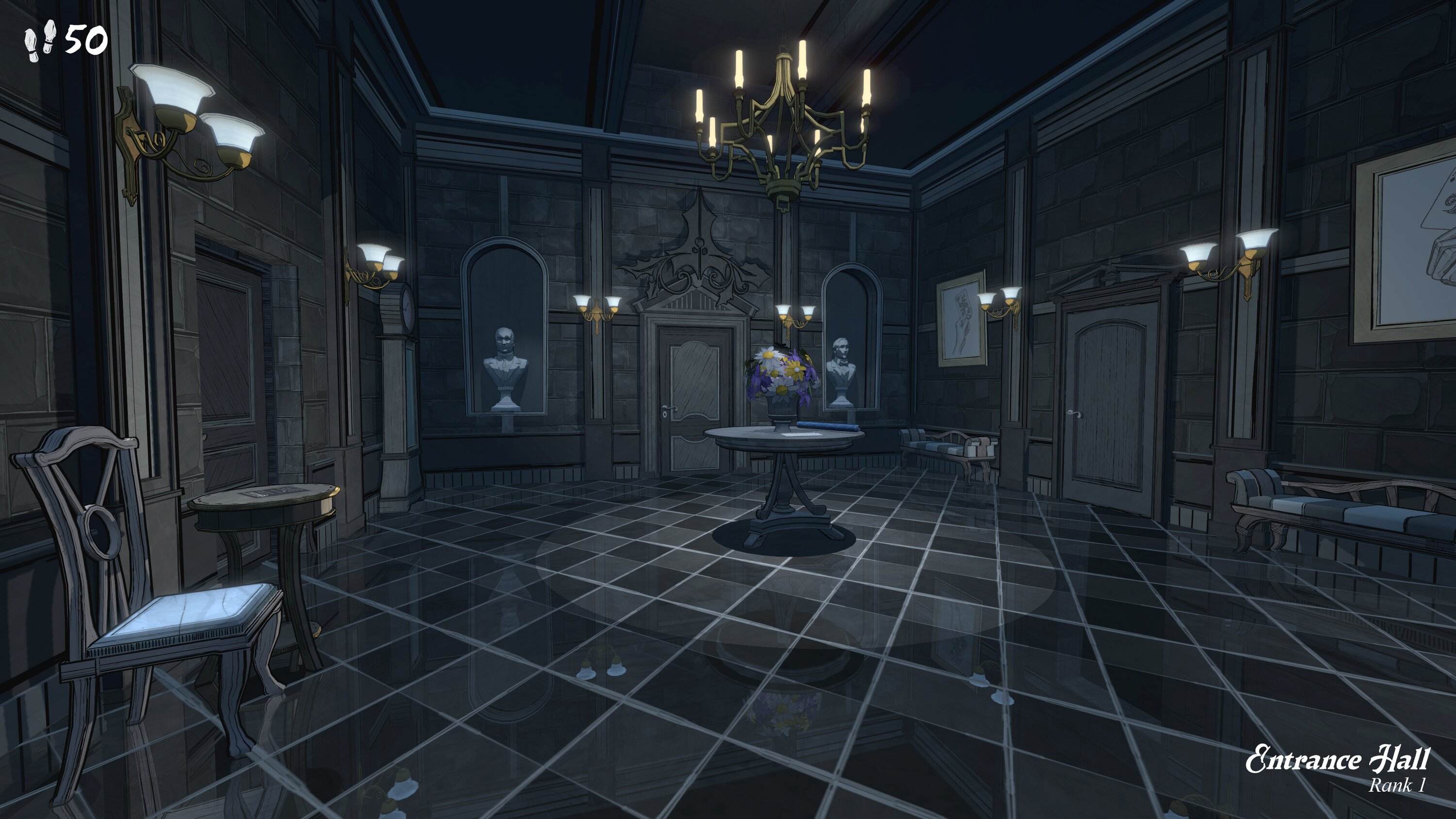

The Air Touring is an easy car to live with. Credit: Jonathan Gitlin

Monotone

I’ll admit, I’m a bit of a sucker for the way the Air looks when it’s not two-tone. That’s the Stealth option ($1,750), and the dark Fathom Blue Metallic paint ($800) and blacked-out aero wheels pushed many of my buttons. I found plenty to like from the driver’s seat, too. The 34-inch display that wraps around the driver once looked massive; now it feels relatively restrained compared to the “Best Buy on wheels” effect in some other recent EVs. It helps that the display isn’t very tall.

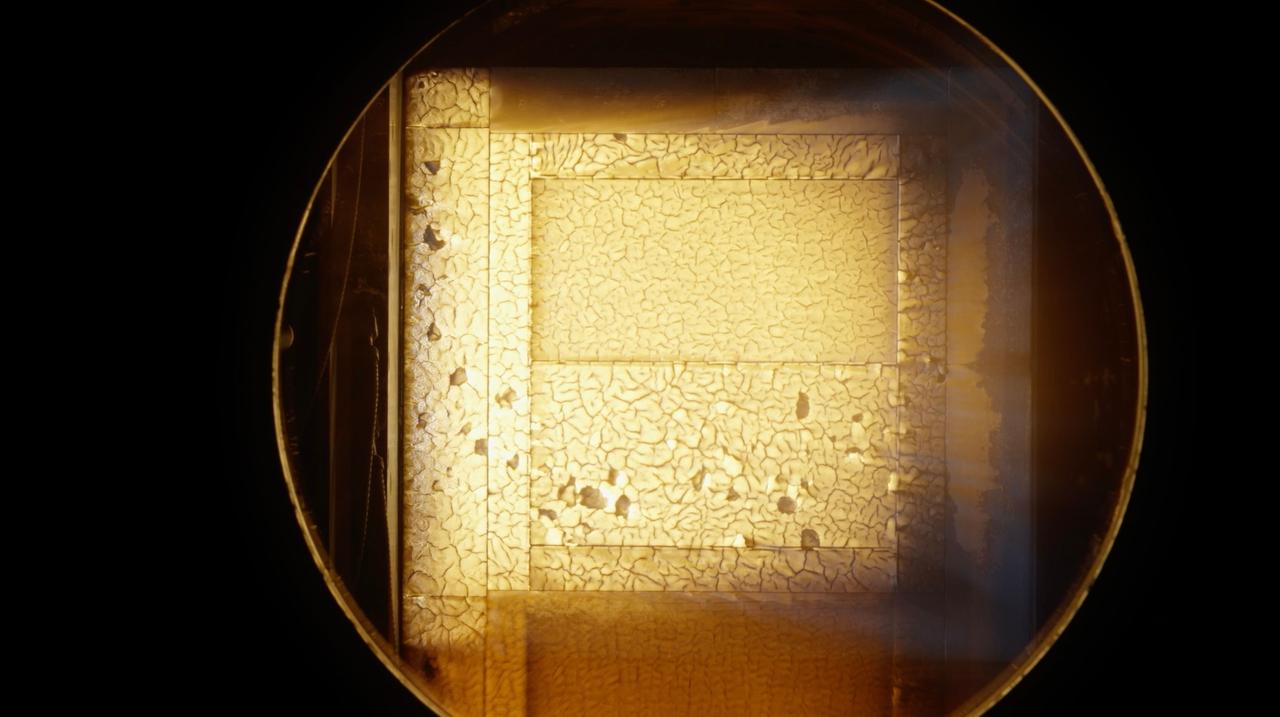

In the middle is a minimalist display for the driver, with touch-sensitive displays on either side. To your left are controls for the lights, locks, wipers, and so on. These icons are always in the same place, though there’s no tactile feedback. The infotainment screen to the right is within the driver’s reach, and it’s here that (wireless) Apple CarPlay will show up. As you can see in a photo below, CarPlay fills the irregularly shaped screen with a wallpaper but keeps its usable area confined to the rectangle in the middle.

The curved display floats above the textile-covered dash, and the daylight visible between them helps the cabin’s sense of spaciousness, even without a panoramic glass roof. A stowable touchscreen display lower down on the center console is where you control vehicle, climate, seat, and lighting settings, although there are also physical controls for temperature and volume on the dash. The relatively good overall ergonomics take a bit of a hit from the steeply raked A pillar, which creates a blind spot for the driver.

The layout is mostly great, although the A pillar causes a blind spot. Credit: Jonathan Gitlin

For all the Air Touring’s power, it isn’t a car that goads you into using it all. In fact, I spent most of the week in the gentlest setting, Smooth. It’s an easy car to drive slowly, and the rather artificial feel of the steering at low speeds means you probably won’t take it hunting apices on back roads. I should note, though, that each drive mode has its own steering calibration.

On the other hand, as a daily driver and particularly on longer drives, the Touring did a fine job. Despite being relatively low to the ground, it’s easy to get into and out of. The rear seat is capacious, and the ride is smooth, so passengers will enjoy it. Even more so if they sit up front: Lucid has some of the best massaging seats in the business (optional, $3,750), which vibrate as well as knead. There’s a very accessible 22 cubic foot (623 L) trunk as well as a 10 cubic foot (283 L) frunk, so it’s practical, too.

Future-proof?

Our test Air was fitted with Lucid’s DreamDrive Pro advanced driver assistance system ($6,750), which includes a hands-free “level 2+” assist that requires you to pay attention to the road ahead but which handles accelerating, braking, and steering. Using the turn signal tells the car to perform a lane change if it’s safe, and I found it to be an effective driver assist with an active driver monitoring system (which uses a gaze-tracking camera to ensure the driver is doing their part).

Lucid rolled out the more advanced features of DreamDrive Pro last summer, and it plans to develop the system into a more capable “level 3” conditionally automated system that lets the driver disengage completely from the act of driving, at least at lower speeds. That system is some way off, and level 3 systems are only road-legal in Nevada and California right now anyway. But even the current level 2+ system leverages lidar as well as cameras, radar, and ultrasonics, and the dash display does a good job of showing you what other vehicles the Air is perceiving around it when the system is active.

As mentioned above, the model year 2026 Air feels polished, far more so than the last Lucid I drove. Designed by a refugee from Tesla, the car promised to improve on the EVs from that brand in every way. And while early Airs might have fallen short in execution, the cars can now credibly be called finished products, with much better fit and finish than a few years ago.

I’ll go so far as to say that I might have a hard time deciding between an Air and an equivalently priced Porsche Taycan were I in the market for a luxury electric four-door, even though the two offer quite different driving experiences. Be warned, though: As with the Porsche, the options can add up quickly, and the resale prices can be shockingly low.
