It feels like every time I try to get friends to have some fun in VR with me, the experience is somehow horribly painful. This time I kept a journal of the entire experience to catalogue the struggles real Quest users face every day.

The advent of Quest was supposed to streamline the use of VR. But the friction that once came from complicated hardware and requirements has been replaced by a mess of usability issues that keep people from coming back.

As much fun as I know it is to play VR with my friends, a little part in the back of my mind dreads it. I'm so used to telling my friends about some fun new VR game we can play together, only to drag them through a string of frustrating issues before we finally reach the fun I promised. It's such a problem that I only ask my friends to play the very best-looking VR games with me, because the struggle has to be offset by a great experience.

This week, when I decided that the newly released Dungeons of Eternity looked good enough to convince my friends to give it a shot, that feeling of dread crept in again. I decided from the outset to keep a journal of the experience because I knew there would be strife. There always is.

These Aren’t Novices

So let's set the scene. I asked two of my good friends to play the game with me. Both are lifelong hardcore gamers who own multiple consoles, have built their own PCs, and regularly seek out and play the latest non-VR games. Friend 1 owned multiple PC VR headsets before getting Quest 2, while Friend 2 got Quest 2 as his first VR headset.

Both have owned their Quest 2 for more than a year, but neither had used the headset in the last six months (after reading about this journey, you'll understand why).

Imagine This, But Without Expert Guidance

And let's be clear here. I'm a highly experienced VR user and know the Quest headsets and their software inside and out. I knew they would struggle, so I offered in advance to walk them through the process of getting everything set up. With me there, they didn't have to google solutions to any of the issues they encountered. No normal VR user gets the benefit of an expert holding their hand through the process. In other words, what you read here is the absolute best-case scenario, and it was still a struggle.

I knew that since they hadn't used their Quests recently, the headsets would need to be charged and updated, and the controller batteries replaced. I told them both from the outset to take care of this before our planned play session (had they not realized their headsets needed updating, the session would have begun with at least 15 minutes of updates, restarts, and game installs). Anticipating stumbles along the way, I got Friend 1 into voice chat to make the process as seamless as possible. Here's how that went.

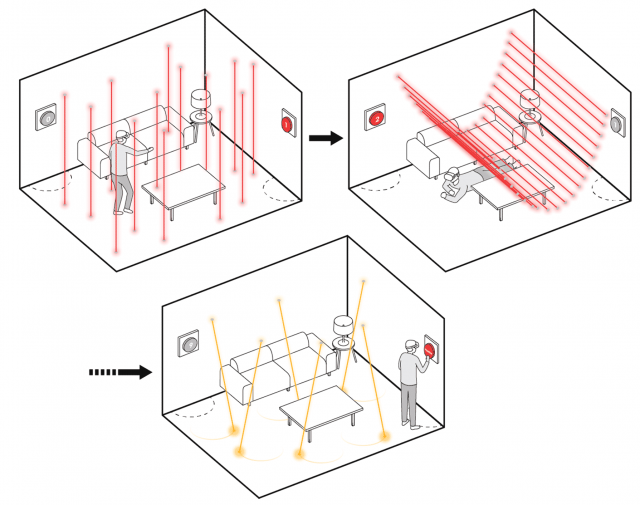

Put on his headset to update. Controllers weren’t working and neither was hand tracking.

Fix: I walked him through using the 'cursor' and the volume-up button as a mouse click (an input method that, in my experience, most people don't know the headset has). I had an inkling that hand tracking might be disabled on his headset, so I told him to go to Settings and enable it.

Didn't know where to find Settings.

Fix: Told him to "click on the clock," then hit Settings at the top right. Mind you, the Settings 'button' at the top right has no visual indication that it is, in fact, a button. It could easily be mistaken for the panel's label.

Didn’t know where to find hand-tracking option.

Fix: He wandered through multiple sections of Settings until he found it.

With hand-tracking enabled, it was easier to guide him to the Software Update section of the Settings and have him hit the ‘check for update’ button.

Headset updated and restarted, but controllers still weren’t working.

Fix: I guided him through holding two buttons on the controller to make the power LED flash. I had to tell him where to find the LED on the upper ring of the controller (it's invisible when not active). We concluded that the batteries were dead, so he replaced them.

Now he needed to install the game. He had already purchased it online but couldn’t find it in his headset.

Fix: I told him to open the Store, search for the game, and click the install button.

As we were going through this process, Friend 1 asked me about Dungeons of Eternity: "Is the multiplayer pretty seamless?" I told him I didn't know because I hadn't tried multiplayer yet. Drawing on his past experience with VR, he responded, "I'm guessing the answer is no."

Installed and Ready to Play, Right?

So we got through everything required just to get the game installed and ready to play. But the issues didn't end there, and they hit not just Friend 1 but me too.

I had the foresight to start a party call in the headset with both friends so we could stay in constant communication when things went wrong. If I hadn't, we would have ended up separated, texting or phoning each other while in the headset trying to solve everything that followed, which would have been far worse.

When I first sent the party call invite to both friends, Friend 2 joined and I could hear him for a few moments, but then I got dropped from the call. Friend 1 said he never got a notification to join the call in the first place.

Ok, so I hung up the call and tried again. This time Friend 2 got in and we didn't get dropped, but Friend 1 still got no notification about the call. So I walked him through how to find the headset's notification section, from which he was able to join the party call.

Ok so we’re talking. Now how to get my friends into the game with me? I opened the Quest menu and found my way to the party call where I was able to choose to bring the party to the game lobby. When I clicked the button to do so, both friends got a pop-up asking to travel to the game. “Awesome! Something is going to work!” I thought to myself.

Of course not. All three of us loaded into the game, but we weren't connected in a lobby together. Ok, well, at least we're all in the game now, so let me try inviting them directly into the game instead of using the party travel system.

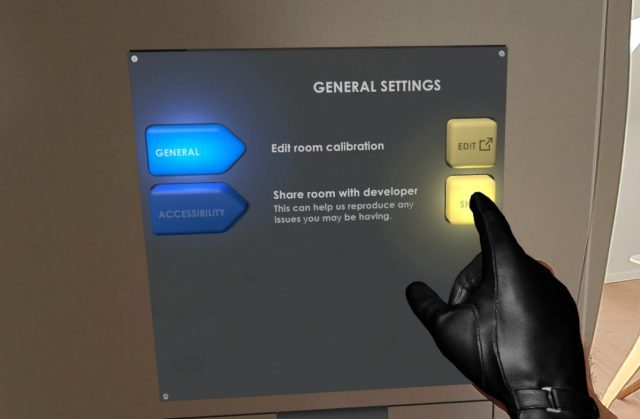

I opened the Quest menu, found the ‘invite’ button on the game panel, and when I clicked it, nothing appeared. I knew a list of friends should have appeared, but there was simply nothing. I backed out of the menu and tried again. Nothing appeared. This wasn’t even a blank page… just… air.

Attempting to invite my friends to the game. After the normal invite button failed, I searched for invite buttons elsewhere but didn't find any. (Note: While I was retrieving this video from my headset, the Gallery section of the Quest smartphone app bugged out and had to be force-quit before the video would appear.)

At this point my friends were getting impatient just standing around in their uncomfortable headsets. So I told them both to run through the tutorial separately, and we'd all meet up when they were done.

In the meantime, I tried going through the party call interface to pull up each friend's Quest profile to see if I could invite them that way. This is standard stuff on every other game platform: navigate to a friend's profile and click an invite button. But I could only call or message them from there. I also went to the 'People' tab in the Quest menu to see if I could find them on my friends list and invite them that way. Nada.

Ok so I quit and relaunched the game. Upon trying the regular invite process again, the invite panel actually appeared!

Can We Play Yet?

They had finished their tutorials, so I sent them both an invite. And get this: it actually worked and they loaded into my lobby! Finally. Finally we’re going to play the game together.

If only.

I told them to drop out of our party chat so we could use in-game spatial audio. But they couldn’t hear me.

Eventually an error popped up in the game: "attempt to login to voice chat timed out."

Luckily, I recalled that the first time I launched the game, several days prior, it had asked for permission to 'record audio'. Since I had selected Solo Mode to play the game by myself, I didn't understand why the game would want to 'record audio', so I reflexively denied the permission.

That meant when we tried to use in-game chat, it couldn't connect me. Fixing this meant going into Settings, finding App Permissions, and toggling on the microphone permission for this specific game.

Now you might think, 'Oh, that's just user error; you obviously should have accepted the permission in the first place.'

And yet… no. This was a contextless permission request that goes against modern guidelines, which call for asking at the moment a feature actually needs the permission. I had opened the game to play it solo, not even thinking about its multiplayer component at the time. The permission was requested after I selected 'Solo Play'. Why would a game want to 'record audio' in a single-player mode?

Not only was this the wrong time to ask for the permission, the permission itself was unclear. 'Record audio' is very different from 'transmit your voice for multiplayer chat'. Had the request included that context, I might have better understood what it was asking for and why, even though it came at the wrong time.

Ok, so the permission was sorted out. Then I had to restart the game, which of course meant I also had to re-invite them to my lobby. I braced myself for disappointment when I clicked the button for the invite menu… but, to my surprise, it actually appeared.

Found the Fun

After all of that—maybe 20 or 30 minutes of trying to get it all to work—we were finally standing next to each other in VR and also able to hear one another.

Perhaps the most frustrating part of all of this is how much it hides the magic of VR.

Within minutes, maybe even less than one minute, of launching into a mission together, we were laughing and having an absolute blast just screwing around in the very first room of the very first tutorial mission. Multiplayer VR is magical like that, especially with good friends. But it can be so painful to get there.

And here's the kicker. Even though we had a really fun time together, the repeated pain of getting to the fun burns into the subconscious like a scar that doesn't go away. It had been more than six months since I'd last convinced them to play a VR game together. The next time I ask them to play, I won't be surprised if they say, 'nah, let's play a flat game'.

– – — – –

And last but not least, it’s important to point out here that I’m not just ripping on Quest. I’m not saying other VR platforms do social better. I’m saying Quest doesn’t do it well enough.

Am I alone in this or have you had your own nightmares trying to play VR with friends? Drop a line in the comments below.