Critics scoff after Microsoft warns AI feature can infect machines and pilfer data

Integration of Copilot Actions into Windows is off by default, but for how long?

Credit: Photographer: Chona Kasinger/Bloomberg via Getty Images

Microsoft’s warning on Tuesday that an experimental AI agent integrated into Windows can infect devices and pilfer sensitive user data has set off a familiar response from security-minded critics: Why is Big Tech so intent on pushing new features before their dangerous behaviors can be fully understood and contained?

As reported Tuesday, Microsoft introduced Copilot Actions, a new set of “experimental agentic features” that, when enabled, perform “everyday tasks like organizing files, scheduling meetings, or sending emails,” and provide “an active digital collaborator that can carry out complex tasks for you to enhance efficiency and productivity.”

Hallucinations and prompt injections apply

The fanfare, however, came with a significant caveat. Microsoft recommended users enable Copilot Actions only “if you understand the security implications outlined.”

The admonition is based on known defects inherent in most large language models, including Copilot, as researchers have repeatedly demonstrated.

One common defect of LLMs causes them to provide factually erroneous and illogical answers, sometimes even to the most basic questions. This propensity for hallucinations, as the behavior has come to be called, means users can’t trust the output of Copilot, Gemini, Claude, or any other AI assistant and instead must independently confirm it.

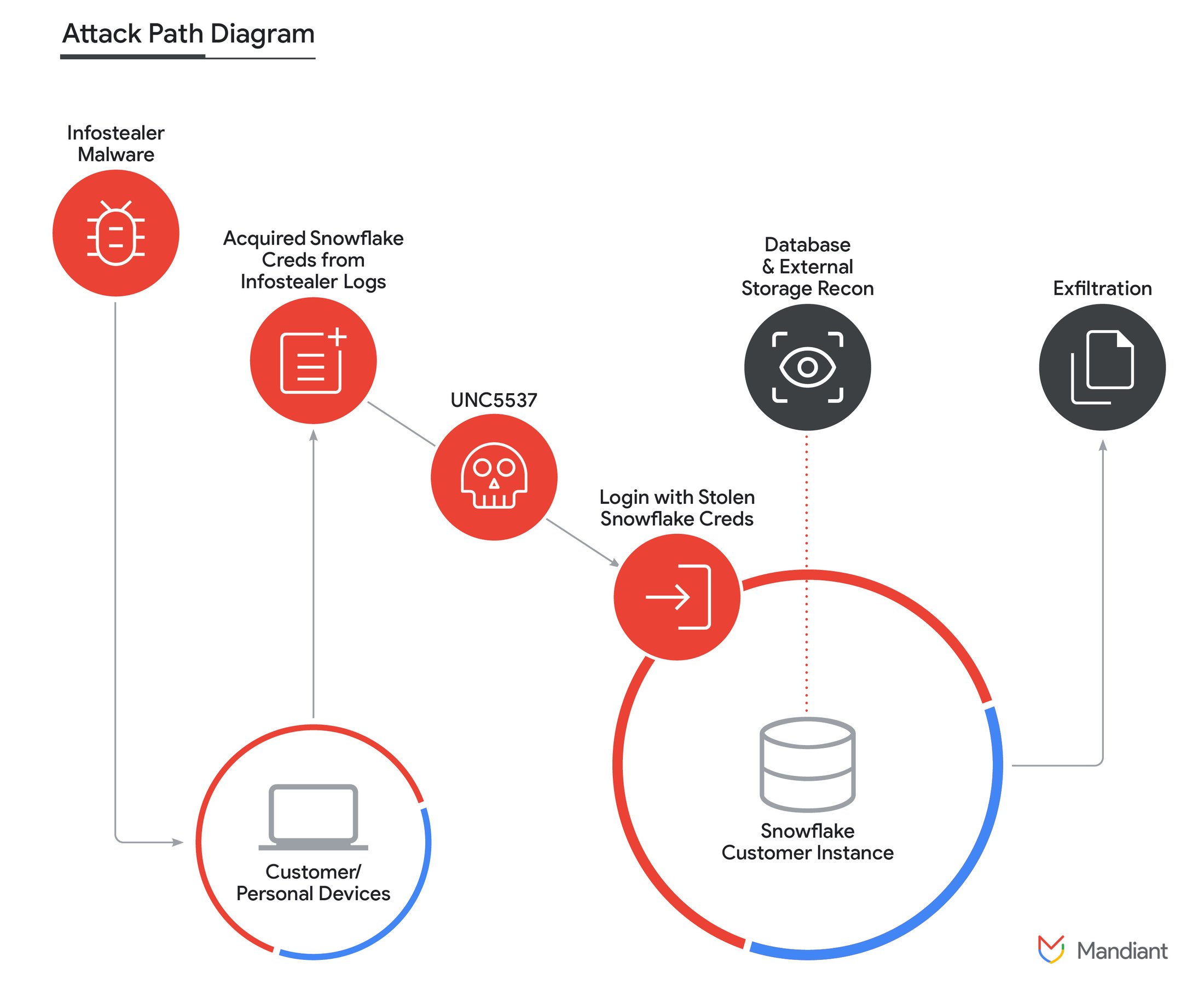

Another common LLM landmine is the prompt injection, a class of bug that allows hackers to plant malicious instructions in websites, resumes, and emails. LLMs are programmed to follow directions so eagerly that they are unable to discern those in valid user prompts from those contained in untrusted, third-party content created by attackers. As a result, the LLMs give the attackers the same deference as users.

Both flaws can be exploited in attacks that exfiltrate sensitive data, run malicious code, and steal cryptocurrency. So far, these vulnerabilities have proved impossible for developers to prevent and, in many cases, can only be fixed using bug-specific workarounds developed once a vulnerability has been discovered.

That, in turn, led to this whopper of a disclosure in Microsoft’s post from Tuesday:

“As these capabilities are introduced, AI models still face functional limitations in terms of how they behave and occasionally may hallucinate and produce unexpected outputs,” Microsoft said. “Additionally, agentic AI applications introduce novel security risks, such as cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation.”

Microsoft indicated that only experienced users should enable Copilot Actions, which is currently available only in beta versions of Windows. The company, however, didn’t describe what type of training or experience such users should have or what actions they should take to prevent their devices from being compromised. I asked Microsoft to provide these details, and the company declined.

Like “macros on Marvel superhero crack”

Some security experts questioned the value of the warnings in Tuesday’s post, comparing them to warnings Microsoft has provided for decades about the danger of using macros in Office apps. Despite the long-standing advice, macros have remained among the lowest-hanging fruit for hackers out to surreptitiously install malware on Windows machines. One reason for this is that Microsoft has made macros so central to productivity that many users can’t do without them.

“Microsoft saying ‘don’t enable macros, they’re dangerous’… has never worked well,” independent researcher Kevin Beaumont said. “This is macros on Marvel superhero crack.”

Beaumont, who is regularly hired to respond to major Windows network compromises inside enterprises, also questioned whether Microsoft will provide a means for admins to adequately restrict Copilot Actions on end-user machines or to identify machines in a network that have the feature turned on.

A Microsoft spokesperson said IT admins will be able to enable or disable an agent workspace at both account and device levels, using Intune or other MDM (Mobile Device Management) apps.

Critics voiced other concerns, including the difficulty for even experienced users to detect exploitation attacks targeting the AI agents they’re using.

“I don’t see how users are going to prevent anything of the sort they are referring to, beyond not surfing the web I guess,” researcher Guillaume Rossolini said.

Microsoft has stressed that Copilot Actions is an experimental feature that’s turned off by default. That design was likely chosen to limit its access to users with the experience required to understand its risks. Critics, however, noted that previous experimental features—Copilot, for instance—regularly become default capabilities for all users over time. Once that’s done, users who don’t trust the feature are often required to invest time developing unsupported ways to remove the features.

Sound but lofty goals

Most of Tuesday’s post focused on Microsoft’s overall strategy for securing agentic features in Windows. Goals for such features include:

- Non-repudiation, meaning all actions and behaviors must be “observable and distinguishable from those taken by a user”

- Agents must preserve confidentiality when they collect, aggregate, or otherwise utilize user data

- Agents must receive user approval when accessing user data or taking actions

The goals are sound, but ultimately they depend on users reading the dialog windows that warn of the risks and require careful approval before proceeding. That, in turn, diminishes the value of the protection for many users.

“The usual caveat applies to such mechanisms that rely on users clicking through a permission prompt,” Earlence Fernandes, a University of California, San Diego professor specializing in AI security, told Ars. “Sometimes those users don’t fully understand what is going on, or they might just get habituated and click ‘yes’ all the time. At which point, the security boundary is not really a boundary.”

As demonstrated by the rash of “ClickFix” attacks, many users can be tricked into following extremely dangerous instructions. While more experienced users (including a fair number of Ars commenters) blame the victims falling for such scams, these incidents are inevitable for a host of reasons. In some cases, even careful users are fatigued or under emotional distress and slip up as a result. Other users simply lack the knowledge to make informed decisions.

Microsoft’s warning, one critic said, amounts to little more than a CYA (short for cover your ass), a legal maneuver that attempts to shield a party from liability.

“Microsoft (like the rest of the industry) has no idea how to stop prompt injection or hallucinations, which makes it fundamentally unfit for almost anything serious,” critic Reed Mideke said. “The solution? Shift liability to the user. Just like every LLM chatbot has a ‘oh by the way, if you use this for anything important be sure to verify the answers” disclaimer, never mind that you wouldn’t need the chatbot in the first place if you knew the answer.”

As Mideke indicated, most of the criticisms extend to AI offerings other companies—including Apple, Google, and Meta—are integrating into their products. Frequently, these integrations begin as optional features and eventually become default capabilities whether users want them or not.

Dan Goodin is Senior Security Editor at Ars Technica, where he oversees coverage of malware, computer espionage, botnets, hardware hacking, encryption, and passwords. In his spare time, he enjoys gardening, cooking, and following the independent music scene. Dan is based in San Francisco. Follow him at here on Mastodon and here on Bluesky. Contact him on Signal at DanArs.82.

Critics scoff after Microsoft warns AI feature can infect machines and pilfer data Read More »