Microsoft’s new “Copilot Vision” AI experiment can see what you browse

On Monday, Microsoft unveiled updates to its consumer AI assistant Copilot, introducing two new experimental features for a limited group of $20/month Copilot Pro subscribers: Copilot Labs and Copilot Vision. Labs integrates OpenAI’s latest o1 “reasoning” model, and Vision allows Copilot to see what you’re browsing in Edge.

Microsoft says Copilot Labs will serve as a testing ground for its latest AI tools before they see wider release. The company describes it as offering “a glimpse into ‘work-in-progress’ projects.” The first feature available in Labs is called “Think Deeper,” and it uses step-by-step processing to solve more complex problems than the regular Copilot can. Think Deeper is Microsoft’s version of OpenAI’s new o1-preview and o1-mini AI models, and it has so far rolled out to some Copilot Pro users in Australia, Canada, New Zealand, the UK, and the US.

Copilot Vision is an entirely different beast. The new feature aims to give the AI assistant a visual window into what you’re doing within the Microsoft Edge browser. When enabled, Copilot can “understand the page you’re viewing and answer questions about its content,” according to Microsoft.

Microsoft’s Copilot Vision promo video.

The company positions Copilot Vision as a way to provide more natural interactions and task assistance beyond text-based prompts, but it will likely raise privacy concerns. With that in mind, Microsoft says that Copilot Vision is entirely opt-in and that no audio, images, text, or conversations from Vision will be stored or used for training. The company is also initially limiting Vision’s use to a pre-approved list of websites, blocking it on paywalled and sensitive content.

The rollout of these features appears gradual, with Microsoft noting that it wants to balance “pioneering features and a deep sense of responsibility.” The company said it will be “listening carefully” to user feedback as it expands access to the new capabilities. Microsoft has not provided a timeline for wider availability of either feature.

Mustafa Suleyman, chief executive of Microsoft AI, told Reuters that he sees Copilot as an “ever-present confidant” that could potentially learn from users’ various Microsoft-connected devices and documents, with permission. He also mentioned that Microsoft co-founder Bill Gates has shown particular interest in Copilot’s potential to read and parse emails.

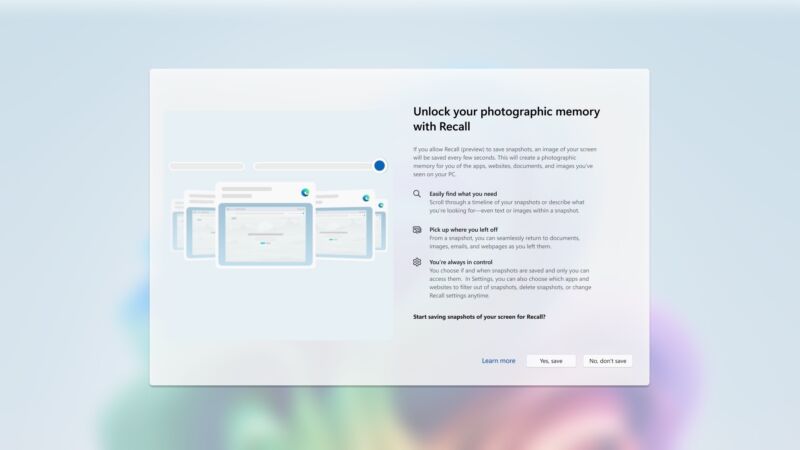

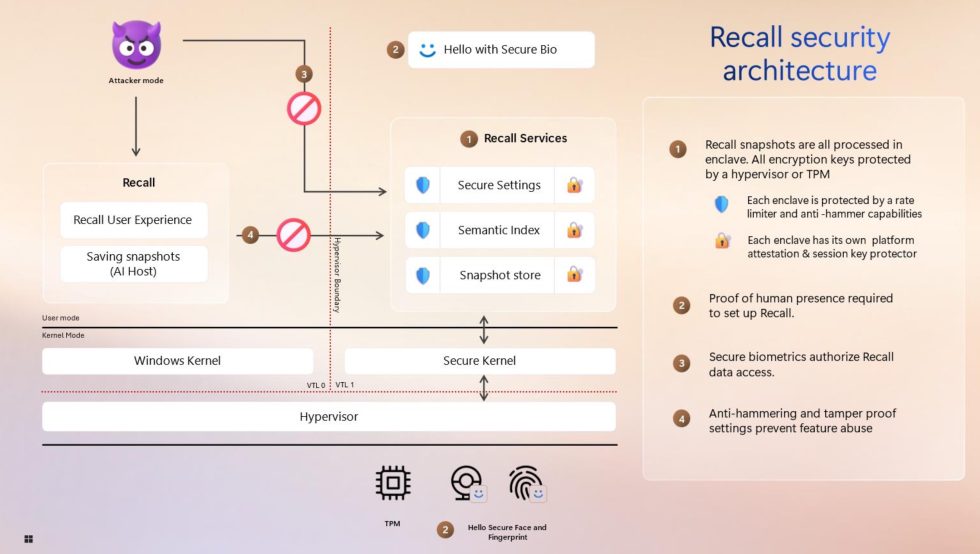

But judging by the visceral reaction to Microsoft’s Recall feature, which keeps a record of everything you do on your PC so an AI model can recall it later, privacy-sensitive users may not appreciate having an AI assistant monitor their activities—especially if those features send user data to the cloud for processing.
