Google reveals sky-high Gemini usage numbers in antitrust case

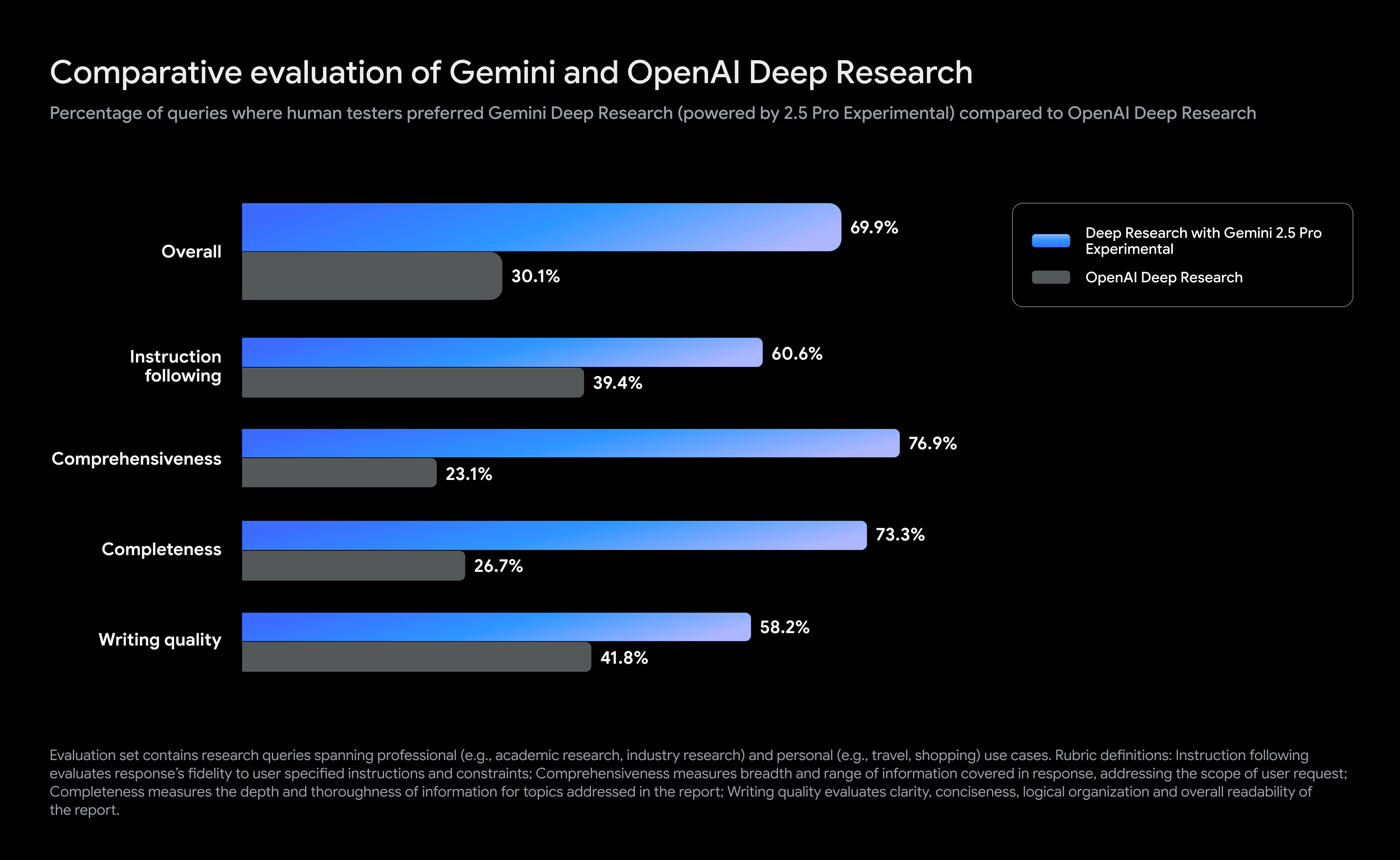

Despite the uptick in Gemini usage, Google is still far from catching OpenAI. Naturally, Google has been keeping a close eye on ChatGPT traffic. OpenAI has also seen traffic increase, putting ChatGPT at around 600 million monthly active users, according to Google’s analysis. Earlier this year, reports pegged ChatGPT usage at around 400 million users per month.

There are many ways to measure web traffic, and not all of them tell you what you might think. For example, OpenAI has recently claimed weekly traffic as high as 400 million, but companies can choose which seven-day period in a given month they report as weekly active users. A monthly metric is more straightforward, and we have some degree of trust that Google isn’t using fake or unreliable numbers in a case where the company’s past conduct has already harmed its legal position.

While all AI firms strive to lock in as many users as possible, this is not the total win it would be for a retail site or social media platform—each person using Gemini or ChatGPT costs the company money because generative AI is so computationally expensive. Google doesn’t talk about how much it earns (more likely loses) from Gemini subscriptions, but OpenAI has noted that it loses money even on its $200 monthly plan. So while having a broad user base is essential to make these products viable in the long term, it just means higher costs unless the cost of running massive AI models comes down.
