If you want to satiate AI’s hunger for power, Google suggests going to space

Google engineers think they already have all the pieces needed to build a data center in orbit.

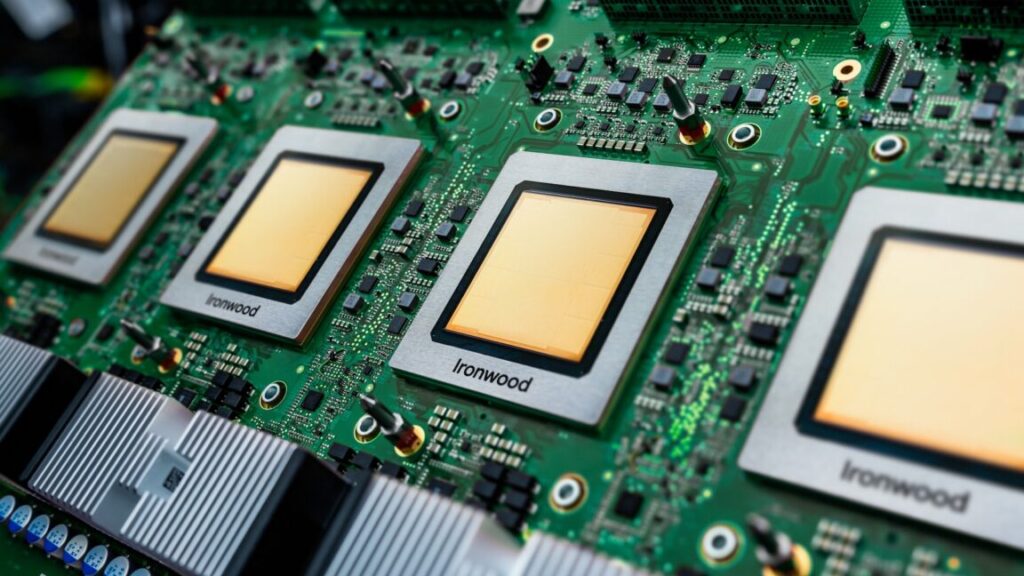

With Project Suncatcher, Google will test its Tensor Processing Units on satellites. Credit: Google

It was probably always a question of when, not if, Google would add its name to the list of companies intrigued by the potential of orbiting data centers.

Google announced Tuesday a new initiative, named Project Suncatcher, to examine the feasibility of bringing artificial intelligence to space. The idea is to deploy swarms of satellites in low-Earth orbit, each carrying Google’s AI accelerator chips designed for training, content generation, synthetic speech and vision, and predictive modeling. Google calls these chips Tensor Processing Units, or TPUs.

“Project Suncatcher is a moonshot exploring a new frontier: equipping solar-powered satellite constellations with TPUs and free-space optical links to one day scale machine learning compute in space,” Google wrote in a blog post.

“Like any moonshot, it’s going to require us to solve a lot of complex engineering challenges,” Google’s CEO, Sundar Pichai, wrote on X. Pichai noted that Google’s early tests show the company’s TPUs can withstand the intense radiation they will encounter in space. “However, significant challenges still remain like thermal management and on-orbit system reliability.”

The why and how

Ars reported on Google’s announcement on Tuesday, and Google published a research paper outlining the motivation for such a moonshot project. One of the authors, Travis Beals, spoke with Ars about Project Suncatcher and offered his thoughts on why it just might work.

“We’re just seeing so much demand from people for AI,” said Beals, senior director of Paradigms of Intelligence, a research team within Google. “So, we wanted to figure out a solution for compute that could work no matter how large demand might grow.”

Higher demand will lead to bigger data centers consuming colossal amounts of electricity. According to the MIT Technology Review, AI alone could consume as much electricity annually as 22 percent of all US households by 2028. Cooling is also a problem, often requiring access to vast water resources, raising important questions about environmental sustainability.

Google is looking to the sky to avoid potential bottlenecks. A satellite in space can access a virtually limitless supply of renewable energy and has an entire Universe to absorb its heat.

“If you think about a data center on Earth, it’s taking power in and it’s emitting heat out,” Beals said. “For us, it’s the satellite that’s doing the same. The satellite is going to have solar panels … They’re going to feed that power to the TPUs to do whatever compute we need them to do, and then the waste heat from the TPUs will be distributed out over a radiator that will then radiate that heat out into space.”
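For a rough sense of scale, the radiator Beals describes can be sized with the Stefan-Boltzmann law, which ties radiated power to surface area and temperature. The sketch below is only an illustration, not Google's thermal design: the function name, the emissivity, the two-sided radiator, the 300 K temperature, and the 10 kW heat load are all assumed values, and the model ignores sunlight and Earth's infrared glow absorbed by the radiator.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4)
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w, temp_k, emissivity=0.9, sides=2):
    """Ideal radiator area needed to reject heat_w watts to deep space.

    Assumes a flat panel radiating from `sides` faces at a uniform
    temperature, with no heat absorbed from the Sun or Earth.
    """
    flux_per_side = emissivity * SIGMA * temp_k ** 4  # W per m^2
    return heat_w / (flux_per_side * sides)


if __name__ == "__main__":
    # Hypothetical example: 10 kW of waste heat, radiator at 300 K
    area = radiator_area_m2(10_000, 300)
    print(f"Radiator area: {area:.1f} m^2")
```

Because radiated power scales with the fourth power of temperature, running the radiator hotter shrinks it quickly, which is one reason thermal management is a design trade rather than a fixed cost.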

Google envisions putting a legion of satellites into a special kind of orbit that rides along the day-night terminator, where sunlight meets darkness. This north-south, or polar, orbit would be synchronized with the Sun, allowing a satellite’s power-generating solar panels to remain continuously bathed in sunshine.

“It’s much brighter even than the midday Sun on Earth because it’s not filtered by Earth’s atmosphere,” Beals said.

This means a solar panel in space can produce up to eight times more power than the same collecting area on the ground, and you don’t need a lot of batteries to reserve electricity for nighttime. This may sound like the argument for space-based solar power, an idea first described by Isaac Asimov in his short story Reason published in 1941. But instead of transmitting the electricity down to Earth for terrestrial use, orbiting data centers would tap into the power source in space.

“As with many things, the ideas originate in science fiction, but it’s had a number of challenges, and one big one is, how do you get the power down to Earth?” Beals said. “So, instead of trying to figure out that, we’re embarking on this moonshot to bring [machine learning] compute chips into space, put them on satellites that have the solar panels and the radiators for cooling, and then integrate it all together so you don’t actually have to be powered on Earth.”
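The "up to eight times" comparison above can be sanity-checked with a back-of-the-envelope calculation. This is a sketch under stated assumptions, not Google's own analysis: it compares continuous sunlight at the solar constant against a ground panel rated at the standard 1,000 W/m² test irradiance and derated by a capacity factor. The function name and the capacity-factor values are illustrative assumptions, and panel efficiency is taken to be the same in both places.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # sunlight above the atmosphere, near Earth
STC_IRRADIANCE_W_M2 = 1000.0  # standard test condition used to rate ground panels


def orbit_to_ground_ratio(ground_capacity_factor, sunlit_fraction=1.0):
    """Average sunlight collected per unit area in orbit vs. on the ground.

    ground_capacity_factor: assumed average output / rated output on Earth
    sunlit_fraction: share of each orbit spent in sunlight (1.0 = always lit)
    """
    orbit_avg = SOLAR_CONSTANT_W_M2 * sunlit_fraction
    ground_avg = STC_IRRADIANCE_W_M2 * ground_capacity_factor
    return orbit_avg / ground_avg


if __name__ == "__main__":
    for cf in (0.15, 0.20, 0.25):  # assumed range of ground capacity factors
        print(f"capacity factor {cf:.2f}: {orbit_to_ground_ratio(cf):.1f}x")
```

Under these assumptions, the advantage depends mostly on how poor the ground site is: night, weather, and low Sun angles do far more to widen the gap than the atmosphere's filtering alone.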

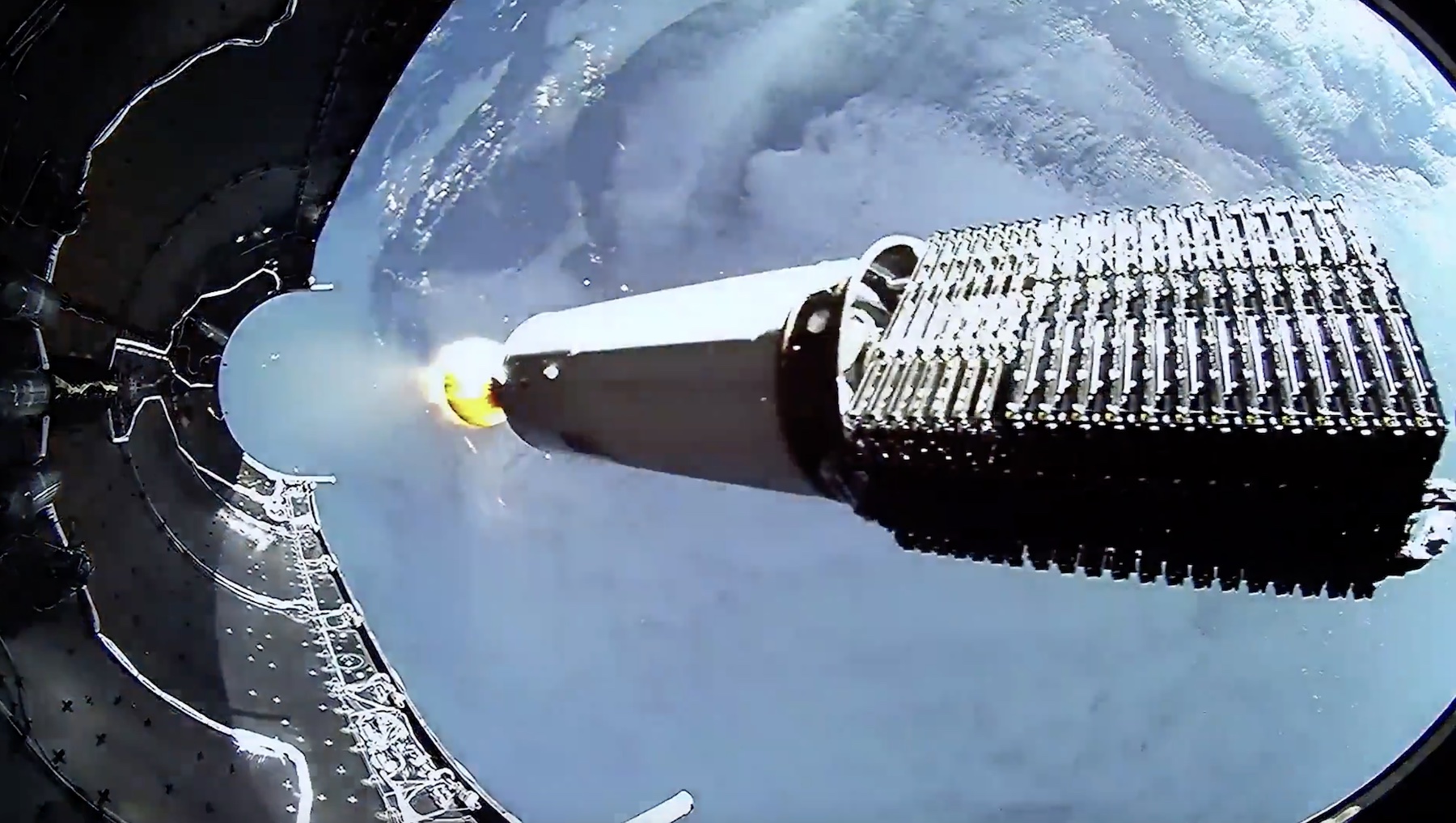

SpaceX is driving down launch costs, thanks to reusable rockets and an abundant volume of Starlink satellite launches. Credit: SpaceX

Google has a mixed record with its ambitious moonshot projects. One of the most prominent moonshot graduates is the self-driving car kit developer Waymo, which spun out to form a separate company in 2016 and is now operational. The Project Loon initiative to beam Internet signals from high-altitude balloons is one of the Google moonshots that didn’t make it.

Ars published two stories last week on the promise of space-based data centers. One of the startups in this field, named Starcloud, is partnering with Nvidia, the world’s largest tech company by market capitalization, to build a 5 gigawatt orbital data center with enormous solar and cooling panels approximately 4 kilometers (2.5 miles) in width and length. In response to that story, Elon Musk said SpaceX is pursuing the same business opportunity but didn’t provide any details. It’s worth noting that Google holds an estimated 7 percent stake in SpaceX.

Strength in numbers

Google’s proposed architecture differs from that of Starcloud and Nvidia in an important way. Instead of putting up just one or a few massive computing nodes, Google wants to launch a fleet of smaller satellites that talk to one another through laser data links. Essentially, a satellite swarm would function as a single data center, using light-speed interconnectivity to aggregate computing power hundreds of miles over our heads.

If that sounds implausible, take a moment to think about what companies are already doing in space today. SpaceX routinely launches more than 100 Starlink satellites per week, each of which uses laser inter-satellite links to bounce Internet signals around the globe. Amazon’s Kuiper satellite broadband network uses similar technology, and laser communications will underpin the US Space Force’s next-generation data-relay constellation.

Artist’s illustration of laser crosslinks in space. Credit: TESAT

Autonomously constructing a miles-long structure in orbit, as Nvidia and Starcloud foresee, would unlock unimagined opportunities. The concept also relies on tech that has never been tested in space, but there are plenty of engineers and investors who want to try. Starcloud announced an agreement last week with a new in-space assembly company, Rendezvous Robotics, to explore the use of modular, autonomous assembly to build Starcloud’s data centers.

Google’s research paper describes a future computing constellation of 81 satellites flying at an altitude of some 400 miles (650 kilometers), but Beals said the company could dial the total swarm size to as many spacecraft as the market demands. This architecture could enable terawatt-class orbital data centers, according to Google.

“What we’re actually envisioning is, potentially, as you scale, you could have many clusters,” Beals said.
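For the roughly 650-kilometer altitude quoted above, the orbital period and the inclination needed to stay synchronized with the Sun can be estimated from textbook orbital mechanics. The sketch below is a minimal illustration, not the model from Google's paper: it assumes a circular orbit and a first-order treatment of Earth's oblateness (the J2 term), and the function and constant names are chosen here for clarity.

```python
import math

MU_EARTH = 398600.4418  # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137      # km, Earth's equatorial radius
J2 = 1.08263e-3         # Earth's oblateness coefficient
TROPICAL_YEAR_S = 365.2422 * 86400.0


def orbital_period_min(alt_km):
    """Period of a circular orbit at the given altitude, in minutes."""
    a = R_EARTH + alt_km
    return 2 * math.pi * math.sqrt(a ** 3 / MU_EARTH) / 60.0


def sun_sync_inclination_deg(alt_km):
    """Inclination at which J2 nodal precession tracks the Sun (circular orbit).

    The orbital plane must rotate eastward once per year, so the
    first-order precession rate -1.5 * J2 * (R/a)^2 * n * cos(i)
    is set equal to 2*pi per tropical year and solved for i.
    """
    a = R_EARTH + alt_km
    n = math.sqrt(MU_EARTH / a ** 3)          # mean motion, rad/s
    required = 2 * math.pi / TROPICAL_YEAR_S  # rad/s
    cos_i = -required / (1.5 * J2 * (R_EARTH / a) ** 2 * n)
    return math.degrees(math.acos(cos_i))


if __name__ == "__main__":
    alt = 650.0  # km, altitude cited in the article
    print(f"period: {orbital_period_min(alt):.1f} min")
    print(f"sun-synchronous inclination: {sun_sync_inclination_deg(alt):.2f} deg")
```

The inclination comes out slightly greater than 90 degrees, meaning the orbit is nearly polar but tilted a little retrograde, which is what lets Earth's equatorial bulge turn the orbital plane at the same rate the planet circles the Sun.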

Whatever the number, the satellites will communicate with one another using optical inter-satellite links for high-speed, low-latency connectivity. The satellites will need to fly in tight formation, perhaps a few hundred feet apart, with a swarm diameter of a little more than a mile, or about 2 kilometers. Google says its physics-based model shows satellites can maintain stable formations at such close ranges using automation and “reasonable propulsion budgets.”

“If you’re doing something that requires a ton of tight coordination between many TPUs—training, in particular—you want links that have as low latency as possible and as high bandwidth as possible,” Beals said. “With latency, you run into the speed of light, so you need to get things close together there to reduce latency. But bandwidth is also helped by bringing things close together.”
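The speed-of-light limit Beals mentions is easy to put numbers on. The sketch below computes one-way light travel time in a vacuum for a few distances; the 100-meter neighbor spacing is an assumed stand-in for "a few hundred feet," the function name is illustrative, and real links would add switching and processing delays on top of these floors.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, meters per second


def one_way_delay_us(distance_m):
    """Minimum one-way signal delay over distance_m, in microseconds."""
    return distance_m / C_M_PER_S * 1e6


if __name__ == "__main__":
    cases = [
        ("neighboring satellites (assumed 100 m)", 100.0),
        ("across a 2 km swarm", 2_000.0),
        ("650 km straight down to the ground", 650_000.0),
    ]
    for label, distance_m in cases:
        print(f"{label}: {one_way_delay_us(distance_m):.2f} microseconds")
```

Keeping the swarm within a couple of kilometers holds the physical delay between any two satellites to a few microseconds, while a hop to the ground costs on the order of milliseconds.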

Some machine-learning applications could be done with the TPUs on just one modestly sized satellite, while others may require the processing power of multiple spacecraft linked together.

“You might be able to fit smaller jobs into a single satellite. This is an approach where, potentially, you can tackle a lot of inference workloads with a single satellite or a small number of them, but eventually, if you want to run larger jobs, you may need a larger cluster all networked together like this,” Beals said.

Google has worked on Project Suncatcher for more than a year, according to Beals. In ground testing, engineers exposed Google’s TPUs to a 67 MeV proton beam to simulate the total ionizing dose of radiation the chips would see over five years in orbit. Now, it’s time to demonstrate that Google’s AI chips, and everything else needed for Project Suncatcher, will actually work in the real environment.

Google is partnering with Planet, the Earth-imaging company, to develop a pair of small prototype satellites for launch in early 2027. Planet builds its own satellites, so Google has tapped it to manufacture each spacecraft, test them, and arrange for their launch. Google’s parent company, Alphabet, also has an equity stake in Planet.

“We have the TPUs and the associated hardware, the compute payload… and we’re bringing that to Planet,” Beals said. “For this prototype mission, we’re really asking them to help us do everything to get that ready to operate in space.”

Beals declined to say how much the demo slated for launch in 2027 will cost but said Google is paying Planet for its role in the mission. The goal of the demo mission is to show whether space-based computing is a viable enterprise.

“Does it really hold up in space the way we think it will, the way we’ve tested on Earth?” Beals said.

Engineers will test an inter-satellite laser link and verify Google’s AI chips can weather the rigors of spaceflight.

“We’re envisioning scaling by building lots of satellites and connecting them together with ultra-high bandwidth inter-satellite links,” Beals said. “That’s why we want to launch a pair of satellites, because then we can test the link between the satellites.”

Evolution of a free-fall (no thrust) constellation under Earth’s gravitational attraction, modeled to the level of detail required to obtain Sun-synchronous orbits, in a non-rotating coordinate system. Credit: Google

Getting all this data to users on the ground is another challenge. Optical data links could also route enormous amounts of data between the satellites in orbit and ground stations on Earth.

Aside from the technical feasibility, there have long been economic hurdles to fielding large satellite constellations. But SpaceX’s experience with its Starlink broadband network, now with more than 8,000 active satellites, is proof that times have changed.

Google believes the economic equation is about to change again when SpaceX’s Starship rocket comes online. The company’s learning curve analysis shows launch prices could fall to less than $200 per kilogram by around 2035, assuming Starship is flying about 180 times per year by then. That flight rate is far below SpaceX’s stated targets for Starship but comparable to what SpaceX has already achieved with its workhorse Falcon 9 rocket.

It’s possible there could be even more downward pressure on launch costs if SpaceX, Nvidia, and others join Google in the race for space-based computing. The demand curve for access to space may only be eclipsed by the world’s appetite for AI.

“The more people are doing interesting, exciting things in space, the more investment there is in launch, and in the long run, that could help drive down launch costs,” Beals said. “So, it’s actually great to see that investment in other parts of the space supply chain and value chain. There are a lot of different ways of doing this.”
