Apple brings OpenAI’s GPT-5 to iOS and macOS

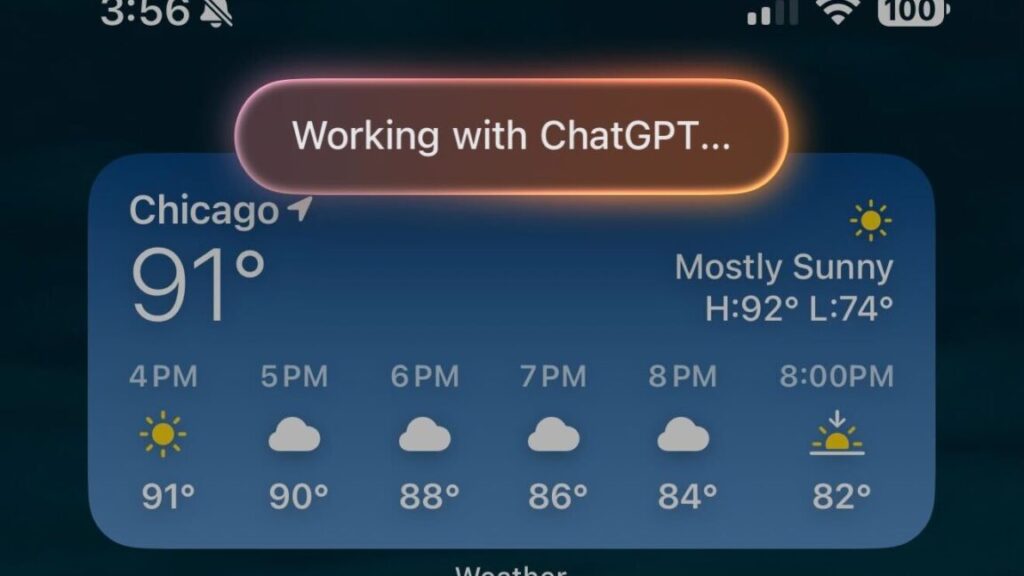

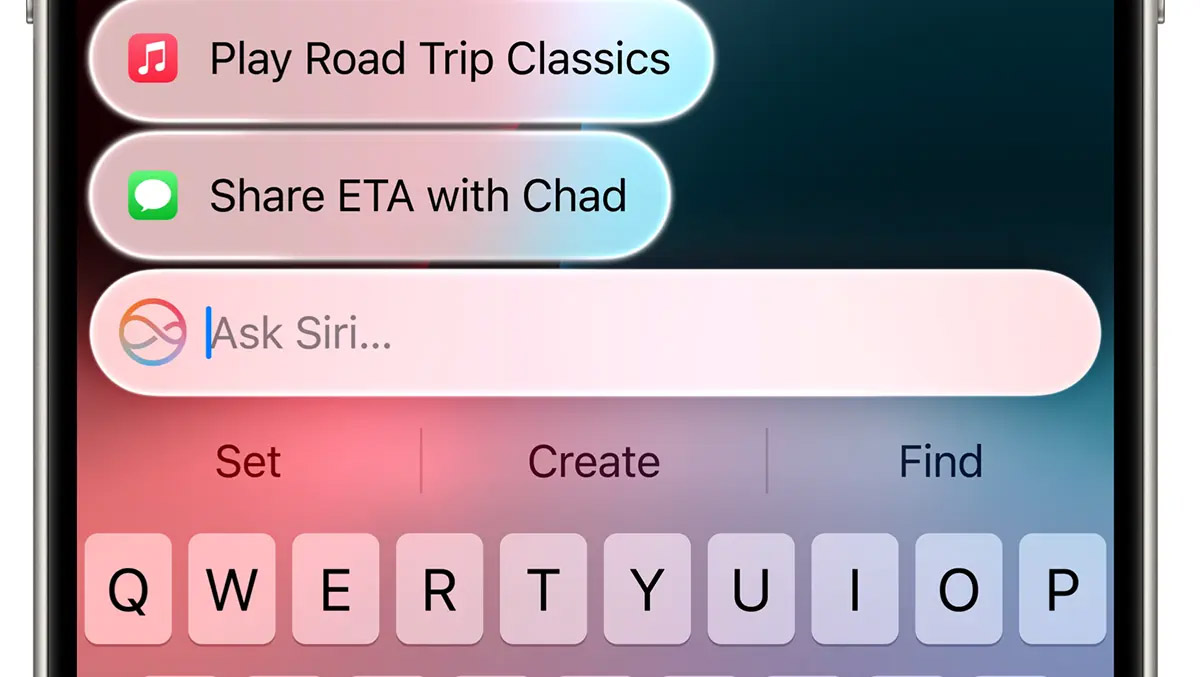

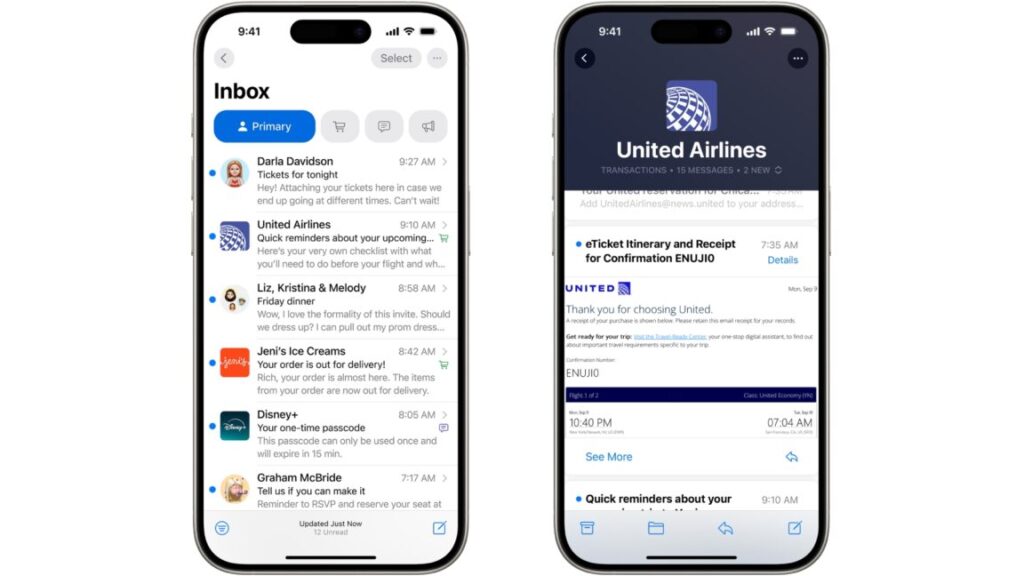

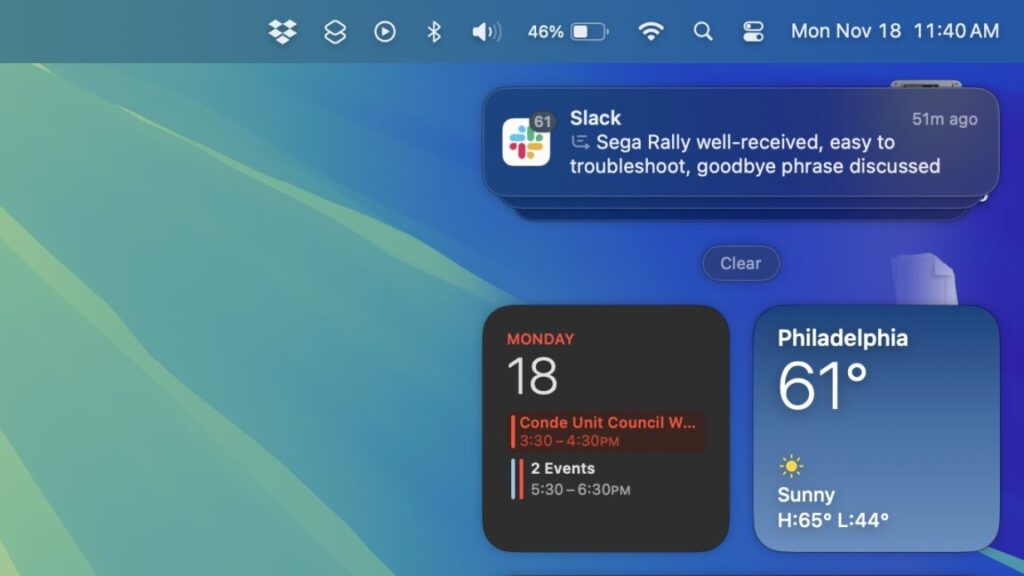

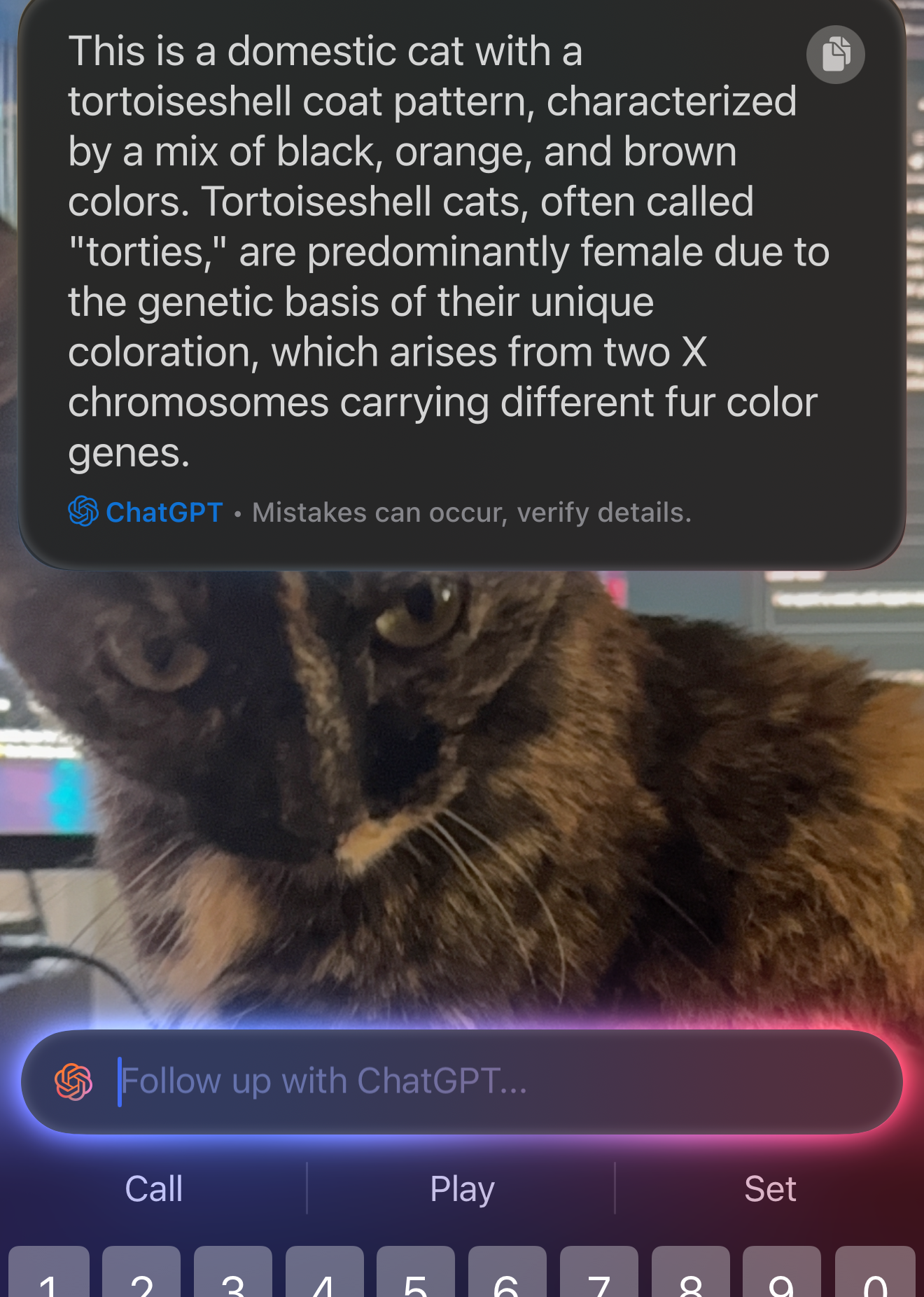

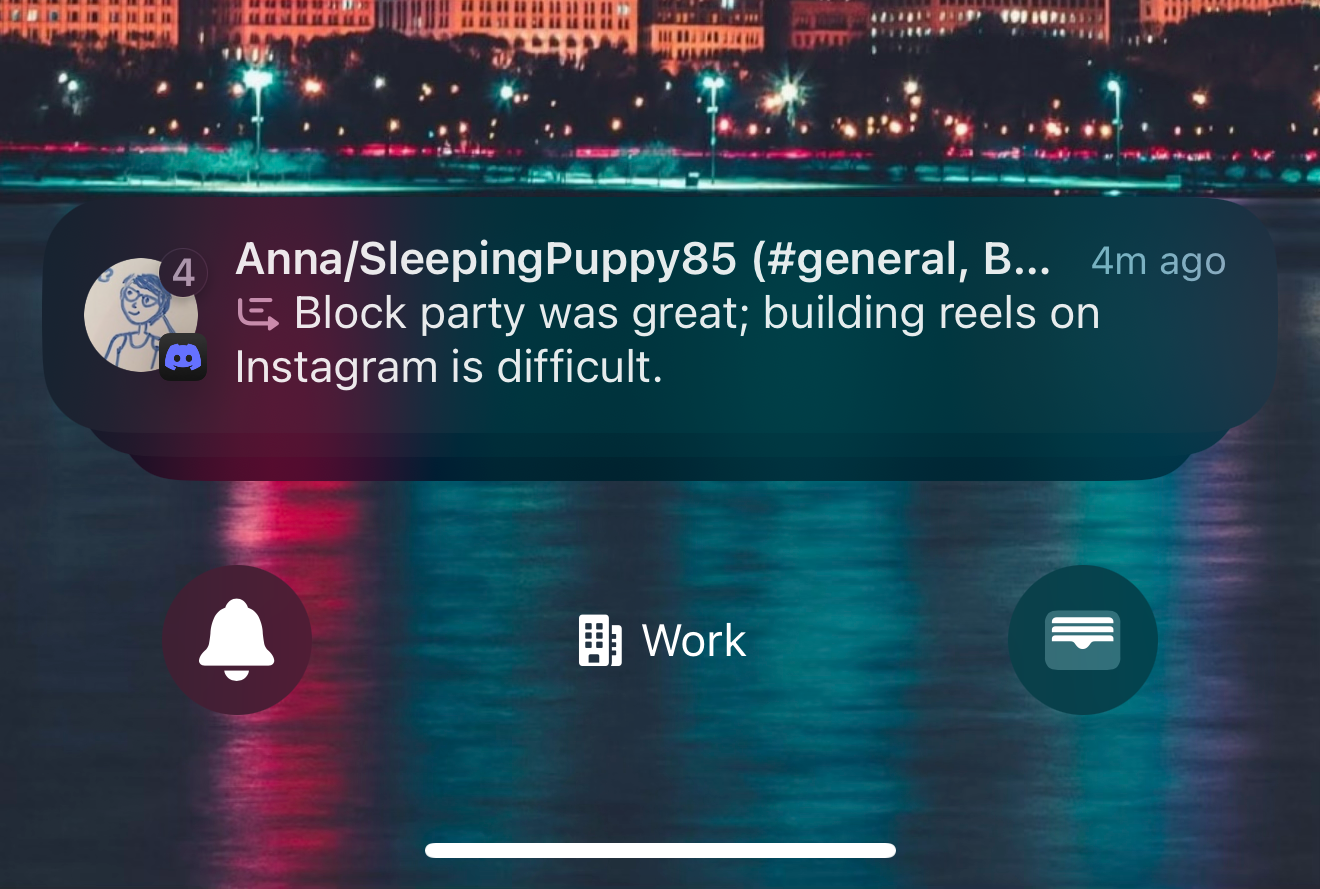

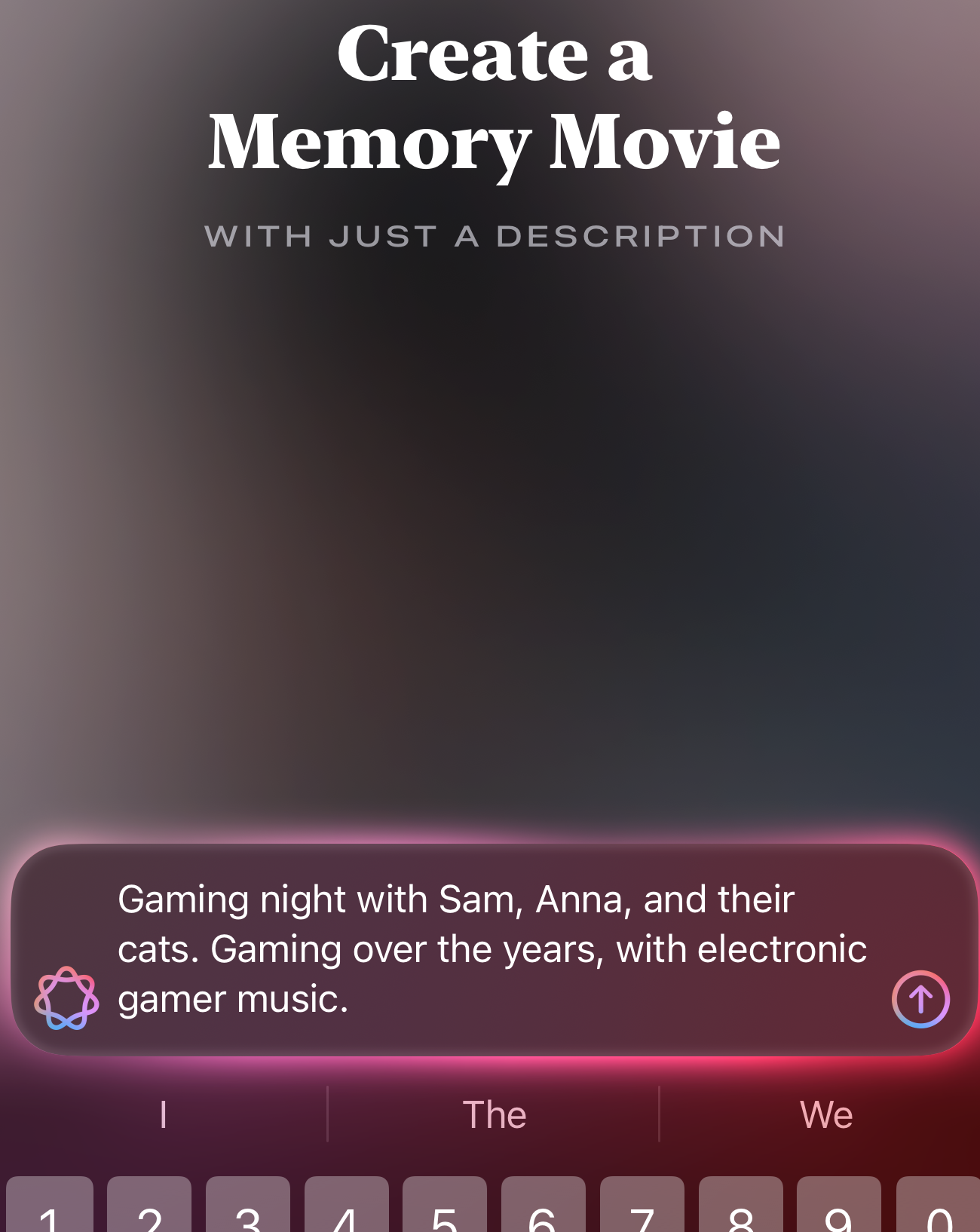

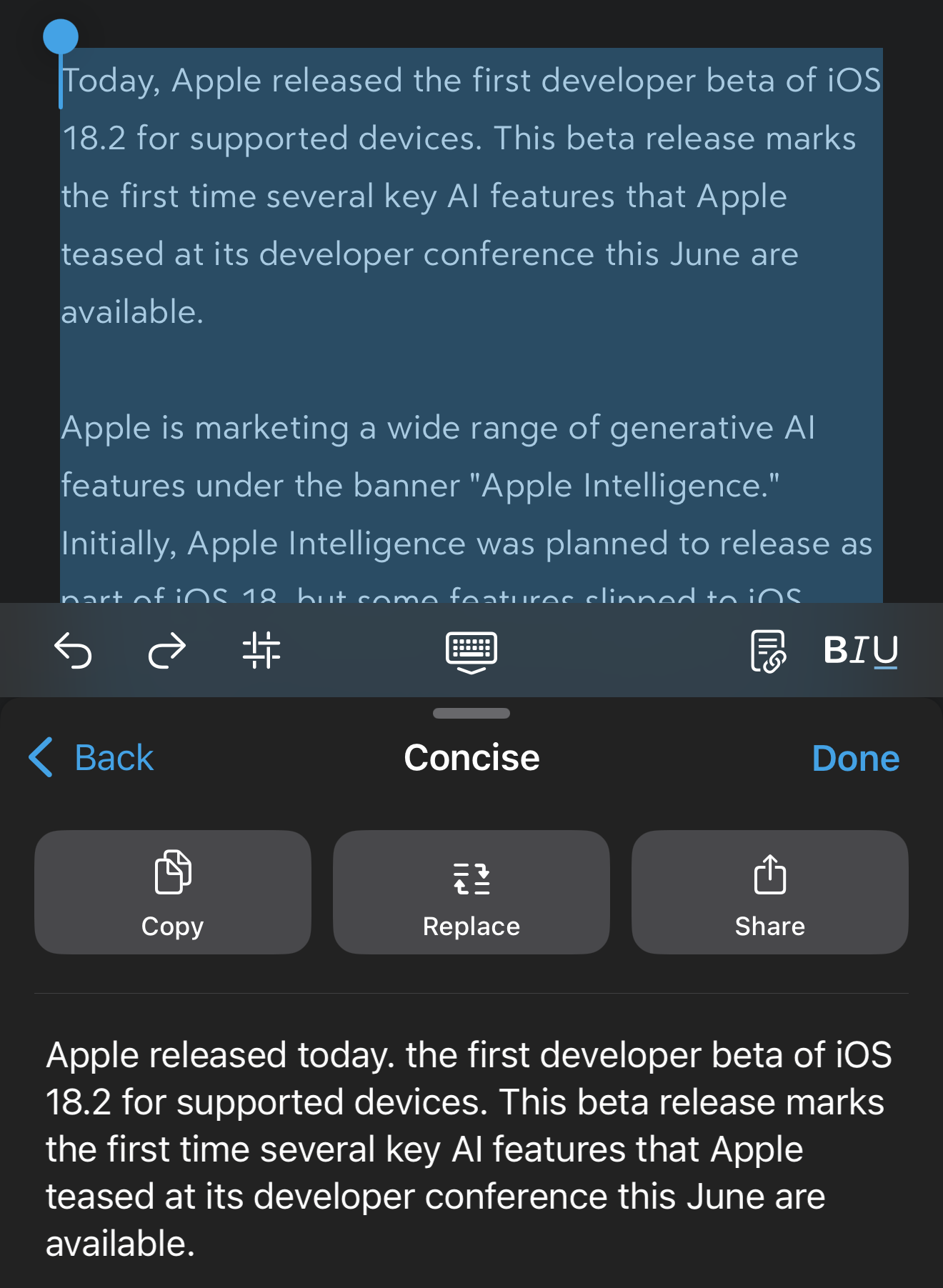

OpenAI’s GPT-5 model went live for most ChatGPT users this week, but many people use ChatGPT through other platforms and tools rather than OpenAI’s own interface. One of the largest such deployments is iOS, the iPhone operating system, which lets users make certain queries via GPT-4o. It turns out those users won’t have to wait long for the latest model: Apple will switch to GPT-5 in iOS 26, iPadOS 26, and macOS Tahoe 26, according to 9to5Mac.

Apple has not officially announced when those OS updates will reach users’ devices, but its major releases have typically shipped in September in recent years.

The new model has already rolled out on some other platforms, including the coding tool GitHub Copilot (in public preview) and Microsoft’s general-purpose Copilot.

GPT-5 purports to hallucinate 80 percent less and heralds a major rework of how OpenAI positions its models. For example, GPT-5 by default automatically chooses whether to use a reasoning-optimized model based on the nature of the user’s prompt. Free users will have to accept whatever the choice is, while paid ChatGPT accounts can manually pick which model to use on a prompt-by-prompt basis. It’s unclear how that will work in iOS: Will it stick to GPT-5’s non-reasoning mode all the time, or will it use GPT-5 “(with thinking)”? And if it supports the latter, will paid ChatGPT users be able to pick manually, as they can in the ChatGPT app, or will they be limited to whatever ChatGPT deems appropriate, like free users? We don’t know yet.
