Anthropic’s Haiku 3.5 surprises experts with an “intelligence” price increase

Speaking of Opus, Claude 3.5 Opus is nowhere to be seen, as AI researcher Simon Willison noted to Ars Technica in an interview. “All references to 3.5 Opus have vanished without a trace, and the price of 3.5 Haiku was increased the day it was released,” he said. “Claude 3.5 Haiku is significantly more expensive than both Gemini 1.5 Flash and GPT-4o mini—the excellent low-cost models from Anthropic’s competitors.”

Cheaper over time?

So far in the AI industry, newer versions of AI language models have typically been priced the same as or lower than their predecessors. Anthropic had initially indicated Claude 3.5 Haiku would cost the same as the previous version before announcing the higher rates.

“I was expecting this to be a complete replacement for their existing Claude 3 Haiku model, in the same way that Claude 3.5 Sonnet eclipsed the existing Claude 3 Sonnet while maintaining the same pricing,” Willison wrote on his blog. “Given that Anthropic claim that their new Haiku out-performs their older Claude 3 Opus, this price isn’t disappointing, but it’s a small surprise nonetheless.”

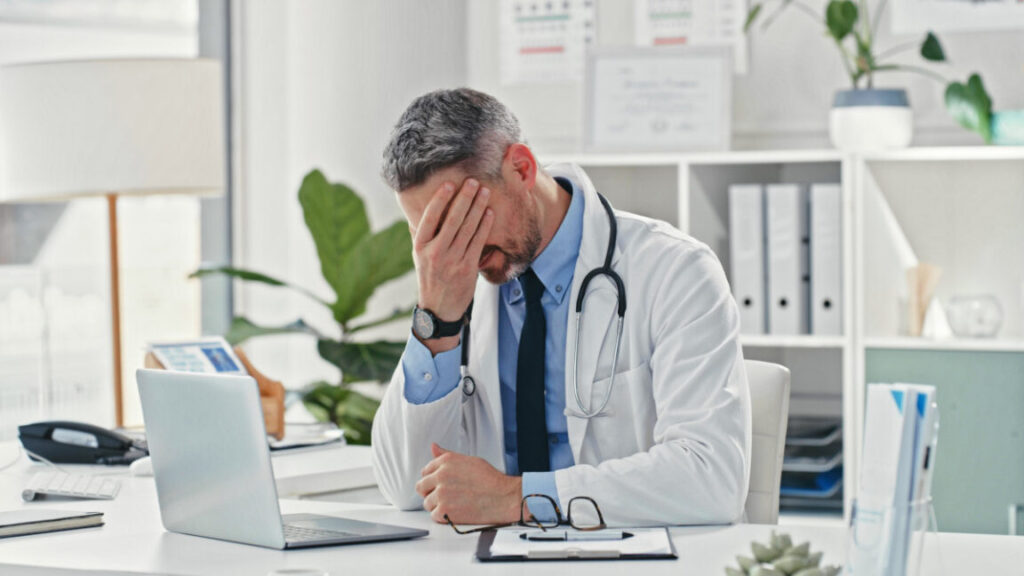

Claude 3.5 Haiku arrives with some trade-offs. While the model produces longer text outputs and contains more recent training data, it cannot analyze images, unlike its predecessor. Alex Albert, who leads developer relations at Anthropic, wrote on X that the earlier version, Claude 3 Haiku, will remain available for users who need image processing capabilities and lower costs.

The new model is not yet available in the Claude.ai web interface or app. Instead, it runs on Anthropic’s API and third-party platforms, including AWS Bedrock. Anthropic markets the model for tasks like coding suggestions, data extraction and labeling, and content moderation, though, like any LLM, it can easily make stuff up confidently.

“Is it good enough to justify the extra spend? It’s going to be difficult to figure that out,” Willison told Ars. “Teams with robust automated evals against their use-cases will be in a good place to answer that question, but those remain rare.”
