Anthropic’s new AI search feature digs through the web for answers

Caution over citations and sources

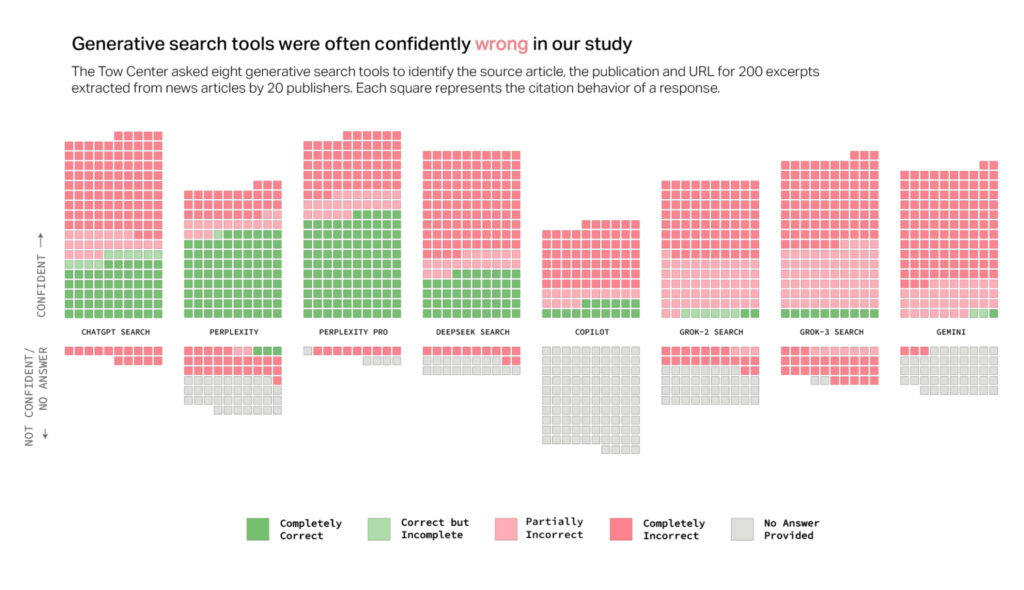

Claude users should be warned that large language models (LLMs) like those that power Claude are notorious for sneaking in plausible-sounding confabulated sources. A recent survey of citation accuracy by LLM-based web search assistants showed a 60 percent error rate. That particular study did not include Anthropic’s new search feature because it took place before this current release.

When using web search, Claude provides citations for information it includes from online sources, ostensibly helping users verify facts. From our informal and unscientific testing, Claude’s search results appeared fairly accurate and detailed at a glance, but that is no guarantee of overall accuracy. Anthropic did not release any search accuracy benchmarks, so independent researchers will likely examine that over time.

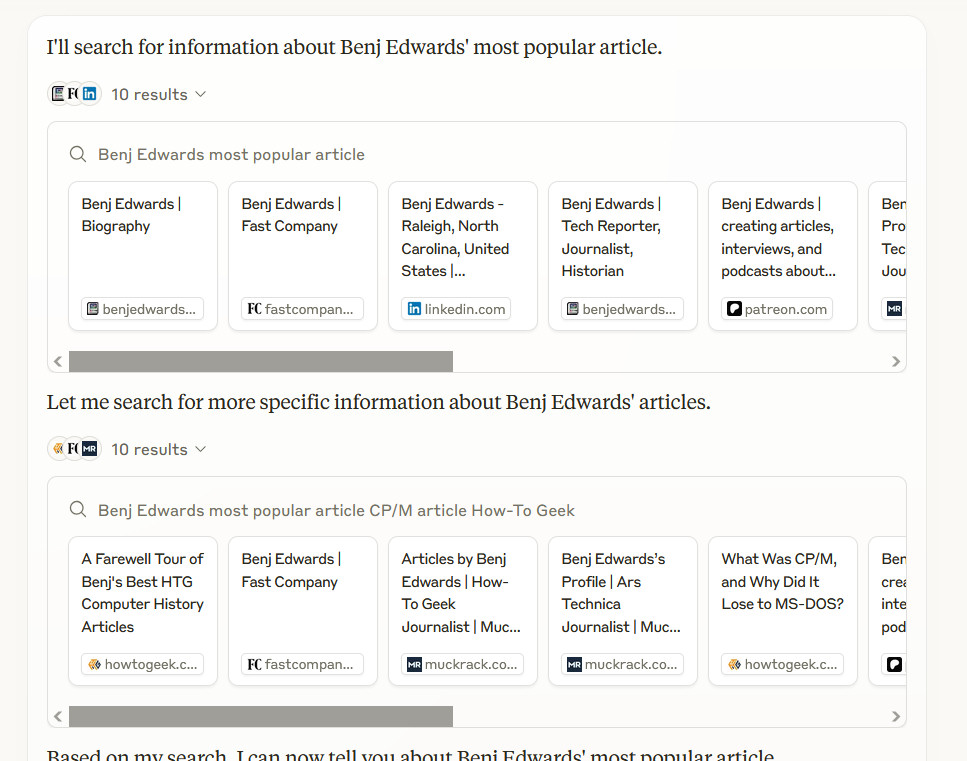

A screenshot example of what Anthropic Claude’s web search citations look like, captured March 21, 2025. Credit: Benj Edwards

Even if Claude search were, say, 99 percent accurate (a number we are making up as an illustration), the 1 percent chance it is wrong may come back to haunt you later if you trust it blindly. Before accepting any source of information delivered by Claude (or any AI assistant) for any meaningful purpose, vet it very carefully using multiple independent non-AI sources.

A partnership with Brave under the hood

Behind the scenes, it looks like Anthropic partnered with Brave Search to power the search feature. The search engine comes from Brave Software, a company perhaps best known for its web browser app. Brave Search markets itself as a “private search engine,” which feels in line with how Anthropic likes to market itself as an ethical alternative to Big Tech products.

Simon Willison discovered the connection between Anthropic and Brave through Anthropic’s subprocessor list (a list of third-party services that Anthropic uses for data processing), which added Brave Search on March 19.

He further demonstrated the connection on his blog by asking Claude to search for pelican facts. He wrote, “It ran a search for ‘Interesting pelican facts’ and the ten results it showed as citations were an exact match for that search on Brave.” He also found evidence in Claude’s own outputs, which referenced “BraveSearchParams” properties.

The Brave engine under the hood has implications for individuals, organizations, or companies that might want to block Claude from accessing their sites since, presumably, Brave’s web crawler is doing the web indexing. Anthropic did not mention how sites or companies could opt out of the feature. We have reached out to Anthropic for clarification.
