In the age of AI, we must protect human creativity as a natural resource

Ironically, our present AI age has shone a bright spotlight on the immense value of human creativity as breakthroughs in technology threaten to undermine it. As tech giants rush to build newer AI models, their web crawlers vacuum up creative content, and those same models spew floods of synthetic media that threaten to drown out the human creative spark in an ocean of pablum.

Given this trajectory, AI-generated content may soon exceed the entire corpus of historical human creative works, making the preservation of the human creative ecosystem not just an ethical concern but an urgent imperative. The alternative is nothing less than a gradual homogenization of our cultural landscape, where machine learning flattens the richness of human expression into a mediocre statistical average.

A limited resource

By ingesting billions of creations, chatbots learn to talk, and image synthesizers learn to draw. Along the way, the AI companies behind them treat our shared culture like an inexhaustible resource to be strip-mined, with little thought for the consequences.

But human creativity isn’t the product of an industrial process; it’s inherently throttled precisely because we are finite biological beings who draw inspiration from real lived experiences while balancing creativity with the necessities of life—sleep, emotional recovery, and limited lifespans. Creativity comes from making connections, and it takes energy, time, and insight for those connections to be meaningful. Until recently, a human brain was a prerequisite for making those kinds of connections, and there’s a reason why that is valuable.

Every human brain isn’t just a store of data; it’s a knowledge engine that thinks in a unique way, creating novel combinations of ideas. Instead of having one “connection machine” (an AI model) duplicated a million times, we have more than eight billion neural networks, each with a unique perspective. Relying on the diversity of thought derived from human cognition helps us escape the monolithic thinking that might emerge if everyone were to draw from the same AI-generated sources.

Today, the AI industry’s business models unintentionally echo the ways in which early industrialists approached forests and fisheries—as free inputs to exploit without considering ecological limits.

Just as pollution from early factories unexpectedly damaged the environment, AI systems risk polluting the digital environment by flooding the Internet with synthetic content. Like a forest that needs careful management to thrive or a fishery vulnerable to collapse from overexploitation, the creative ecosystem can be degraded even if the potential for imagination remains.

Depleting our creative diversity may become one of the hidden costs of AI, but that diversity is worth preserving. If we let AI systems deplete or pollute the human outputs they depend on, what happens to AI models—and ultimately to human society—over the long term?

AI’s creative debt

Every AI chatbot or image generator exists only because of human works, and many traditional artists argue strongly against current AI training approaches, labeling them plagiarism. Tech companies tend to disagree, although their positions vary. For example, in 2023, imaging giant Adobe took an unusual step by training its Firefly AI models solely on licensed stock photos and public domain works, demonstrating that alternative approaches are possible.

Adobe’s licensing model offers a contrast to companies like OpenAI, which rely heavily on scraping vast amounts of Internet content without always distinguishing between licensed and unlicensed works.

OpenAI has argued that this type of scraping constitutes “fair use” and effectively claims that competitive AI models at current performance levels cannot be developed without relying on unlicensed training data, despite Adobe’s alternative approach.

The “fair use” argument often hinges on the legal concept of “transformative use,” the idea that using works for a fundamentally different purpose from creative expression (such as identifying patterns for AI) does not violate copyright. Generative AI proponents often argue that their approach mirrors how human artists learn from the world around them.

Meanwhile, artists are expressing growing concern about losing their livelihoods as corporations turn to cheap, instantaneously generated AI content. They also call for clear boundaries and consent-driven models rather than allowing developers to extract value from their creations without acknowledgment or remuneration.

Copyright as crop rotation

This tension between artists and AI reveals a deeper ecological perspective on creativity itself. Copyright’s time-limited nature was designed as a form of resource management, like crop rotation or regulated fishing seasons that allow for regeneration. Copyright expiration isn’t a bug; its designers hoped it would ensure a steady replenishment of the public domain, feeding the ecosystem from which future creativity springs.

On the other hand, purely AI-generated outputs cannot be copyrighted in the US, potentially setting off an unprecedented explosion in public domain content, albeit content made up of smoothed-over imitations of human perspectives.

Treating human-generated content solely as raw material for AI training disrupts this ecological balance between “artist as consumer of creative ideas” and “artist as producer.” Repeated legislative extensions of copyright terms have already significantly delayed the replenishment cycle, keeping works out of the public domain for much longer than originally envisioned. Now, AI’s wholesale extraction approach further threatens this delicate balance.

The resource under strain

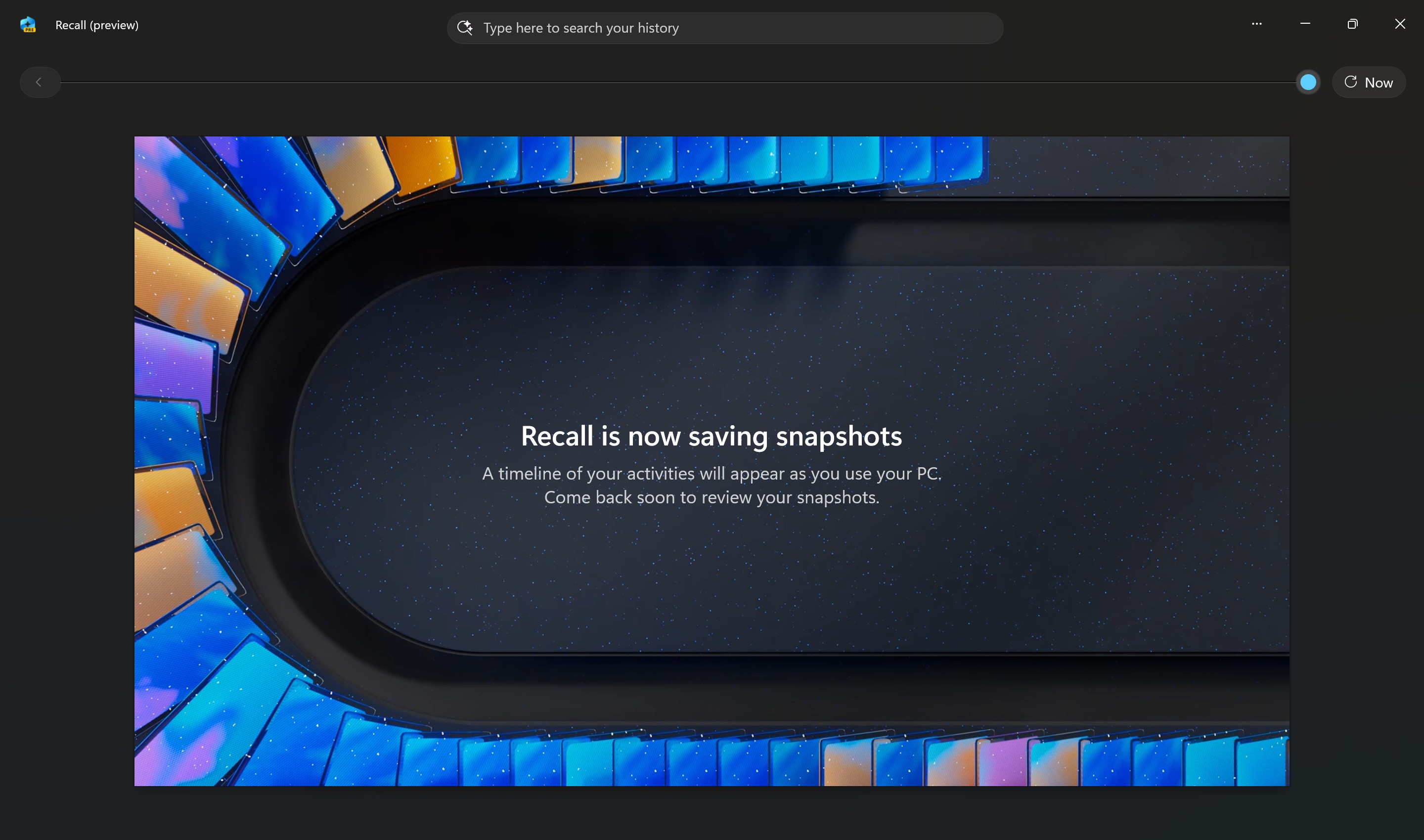

Our creative ecosystem is already showing measurable strain from AI’s impact, from tangible present-day infrastructure burdens to concerning future possibilities.

Aggressive AI crawlers already effectively function as denial-of-service attacks on certain sites, with Cloudflare documenting GPTBot’s immediate impact on traffic patterns. Wikimedia’s experience provides clear evidence of current costs: AI crawlers caused a documented 50 percent bandwidth surge, forcing the nonprofit to divert limited resources to defensive measures rather than to its core mission of knowledge sharing. As Wikimedia says, “Our content is free, our infrastructure is not.” Many of these crawlers demonstrably ignore established technical boundaries like robots.txt files.
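For readers unfamiliar with how robots.txt works, here is a minimal sketch of the check a well-behaved crawler is expected to run before fetching a page, using Python's standard `urllib.robotparser` module. The robots.txt contents, the `example.org` URL, and the `may_fetch` helper are hypothetical and exist only for illustration; `GPTBot` is the crawler name mentioned above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules permit this user agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.org/wiki/Some_Page"  # placeholder URL
    print("GPTBot allowed:", may_fetch("GPTBot", page))
    print("Browser allowed:", may_fetch("Mozilla/5.0", page))
```

Note that nothing in this mechanism enforces the rules. The check only happens if the crawler chooses to run it, which is why ignoring robots.txt is so easy.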

Beyond infrastructure strain, our information environment also shows signs of degradation. Google has publicly acknowledged rising volumes of “spammy, low-quality,” often auto-generated content appearing in search results. A Wired investigation found concrete examples of AI-generated plagiarism sometimes outranking original reporting in search results. This kind of digital pollution led Ross Anderson of Cambridge University to compare it to filling oceans with plastic—it’s a contamination of our shared information spaces.

Looking to the future, more risks may emerge. Ted Chiang’s comparison of LLMs to lossy JPEGs offers a framework for understanding potential problems, as each AI generation summarizes web information into an increasingly “blurry” facsimile of human knowledge. The logical extension of this process, what some researchers term “model collapse,” presents a risk of degradation in our collective knowledge ecosystem if models are trained indiscriminately on their own outputs. (However, this differs from carefully designed synthetic data that can actually improve model efficiency.)
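To make the "blurry facsimile" idea concrete, here is a deliberately tiny toy simulation, not a model of any real AI system. It assumes that "training" just means estimating the mean and spread of a set of numbers, and that each new generation sees only samples produced by the previous generation's estimate. The function name and all parameters are illustrative choices.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Return the spread (standard deviation) of the data at each generation."""
    rng = random.Random(seed)
    # Generation 0: a stand-in for diverse "human" data.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = [statistics.pstdev(data)]
    for _ in range(generations):
        # "Train" a model: summarize the current data as a mean and a spread.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        # The next generation is trained only on the previous model's outputs.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        spreads.append(statistics.pstdev(data))
    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_training()
    print(f"spread at generation 0:   {spreads[0]:.4f}")
    print(f"spread at generation 200: {spreads[-1]:.3e}")
```

In this toy setup, the spread of the data tends to shrink generation after generation because each small sample slightly underestimates the diversity of the one before it. Real language and image models are vastly more complex, so this is only an intuition pump for why indiscriminate training on model outputs can erode variety.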

This downward spiral of AI pollution may soon resemble a classic “tragedy of the commons,” in which organizations act from self-interest at the expense of shared resources. If AI developers continue extracting data without limits or meaningful contributions, the shared resource of human creativity could eventually degrade for everyone.

Protecting the human spark

While AI models that simulate creativity in writing, coding, images, audio, or video can achieve remarkable imitations of human works, this sophisticated mimicry currently lacks the full depth of the human experience.

For example, AI models lack a body that endures the pain and travails of human life. They don’t grow over the course of a human lifespan in real time. When an AI-generated output happens to connect with us emotionally, it often does so by imitating patterns learned from a human artist who has actually lived that pain or joy.

Even if future AI systems develop more sophisticated simulations of emotional states or embodied experiences, they would still fundamentally differ from human creativity, which emerges organically from lived biological experience, cultural context, and social interaction.

That’s because the world constantly changes. New types of human experience emerge. If an ethically trained AI model is to remain useful, researchers must train it on recent human experiences, such as viral trends, evolving slang, and cultural shifts.

Current AI solutions, like retrieval-augmented generation (RAG), address this challenge somewhat by retrieving up-to-date, external information to supplement their static training data. Yet even RAG methods depend heavily on validated, high-quality human-generated content—the very kind of data at risk if our digital environment becomes overwhelmed with low-quality AI-produced output.

This need for high-quality, human-generated data is a major reason why companies like OpenAI have pursued media deals (including a deal signed with Ars Technica parent Condé Nast in August 2024). Yet paradoxically, the same models fed on valuable human data often produce the low-quality spam and slop that floods public areas of the Internet, degrading the very ecosystem they rely on.

AI as creative support

When used carelessly or excessively, generative AI is a threat to the creative ecosystem, but we can’t wholly discount the tech as a tool in a human creative’s arsenal. The history of art is full of technological changes (new pigments, brushes, typewriters, word processors) that transform the nature of artistic production while augmenting human creativity.

Bear with me because there’s a great deal of nuance here that is easy to miss among today’s more impassioned reactions to people using AI as a blunt instrument for creating mediocrity.

While many artists rightfully worry about AI’s extractive tendencies, research published in Harvard Business Review indicates that AI tools can potentially amplify rather than merely extract creative capacity, suggesting that a symbiotic relationship is possible under the right conditions.

Inherent in this argument is the idea that responsible use of AI is reflected in the skill of the user. You can use a paintbrush to paint a wall or paint the Mona Lisa. Similarly, generative AI can mindlessly fill a canvas with slop, or a human can utilize it to express their own ideas.

Machine learning tools (such as those in Adobe Photoshop) already help human creatives prototype concepts faster, iterate on variations they wouldn’t have considered, or handle some repetitive production tasks like object removal or audio transcription, freeing humans to focus on conceptual direction and emotional resonance.

These potential positives, however, don’t negate the need for responsible stewardship and respecting human creativity as a precious resource.

Cultivating the future

So what might a sustainable ecosystem for human creativity actually involve?

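Legal and economic approaches will likely be key. Governments could legislate that AI training must be opt-in or, at the very least, provide a collective opt-out mechanism (the EU’s “AI Act” moves in this direction by requiring providers of general-purpose AI models to honor rights holders’ opt-outs).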

Other potential mechanisms include robust licensing or royalty systems, such as creating a royalty clearinghouse (like the music industry’s BMI or ASCAP) for efficient licensing and fair compensation. Those fees could help compensate human creatives and encourage them to keep creating well into the future.

Deeper shifts may involve cultural values and governance. Inspired by models like Japan’s “Living National Treasures,” where the government funds artisans to preserve vital skills and support their work, could we establish programs that similarly support human creators while also designating certain works or practices as “creative reserves,” funding the further creation of certain creative works even if the economic market for them dries up?

Or a more radical shift might involve an “AI commons”—legally declaring that any AI model trained on publicly scraped data should be owned collectively as a shared public domain, ensuring that its benefits flow back to society and don’t just enrich corporations.

Meanwhile, Internet platforms have already been experimenting with technical defenses against industrial-scale AI demands. Examples include proof-of-work challenges, slowdown “tarpits” (e.g., Nepenthes), shared crawler blocklists (“ai.robots.txt”), commercial tools (Cloudflare’s AI Labyrinth), and Wikimedia’s “WE5: Responsible Use of Infrastructure” initiative.
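As a rough illustration of the proof-of-work idea, here is a generic hashcash-style sketch. It is not how any tool named above is implemented; the challenge string, the `solve` and `verify` function names, and the difficulty setting are all assumptions made for the example. The principle is that a visitor must burn some CPU time finding a number that makes a hash start with enough zero bits, while the site can check the answer with a single hash.

```python
import hashlib
from itertools import count


def _meets_difficulty(digest: bytes, difficulty_bits: int) -> bool:
    """True if the SHA-256 digest starts with at least `difficulty_bits` zero bits."""
    return int.from_bytes(digest, "big") >> (256 - difficulty_bits) == 0


def _digest(challenge: str, nonce: int) -> bytes:
    return hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()


def solve(challenge: str, difficulty_bits: int = 16) -> int:
    """Client side: brute-force the smallest nonce that satisfies the challenge."""
    for nonce in count():
        if _meets_difficulty(_digest(challenge, nonce), difficulty_bits):
            return nonce


def verify(challenge: str, nonce: int, difficulty_bits: int = 16) -> bool:
    """Server side: checking a submitted nonce costs only one hash."""
    return _meets_difficulty(_digest(challenge, nonce), difficulty_bits)


if __name__ == "__main__":
    challenge = "demo-challenge"  # hypothetical; a real site would issue a random one
    nonce = solve(challenge, difficulty_bits=16)
    print("nonce:", nonce, "valid:", verify(challenge, nonce, difficulty_bits=16))
```

The asymmetry is the point: the cost is negligible for one person loading one page but adds up quickly for a crawler requesting millions of them. Each extra difficulty bit roughly doubles the expected work for the client.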

These solutions aren’t perfect, and implementing any of them would require overcoming significant practical hurdles. Strict regulations might slow beneficial AI development; opt-out systems burden creators, while opt-in models can be complex to track. Meanwhile, tech defenses often invite arms races. Finding a sustainable, equitable balance remains the core challenge. The issue won’t be solved in a day.

Invest in people

While navigating these complex systemic challenges will take time and collective effort, there is a surprisingly direct strategy that organizations can adopt now: investing in people. Don’t sacrifice human connection and insight to save money with mediocre AI outputs.

Organizations that cultivate unique human perspectives and integrate them with thoughtful AI augmentation will likely outperform those that pursue cost-cutting through wholesale creative automation. Investing in people acknowledges that while AI can generate content at scale, the distinctiveness of human insight, experience, and connection remains priceless.
