It’s “frighteningly likely” many US courts will overlook AI errors, expert says

Order in the court! Order in the court! Judges are facing outcry over a suspected AI-generated order in a court.

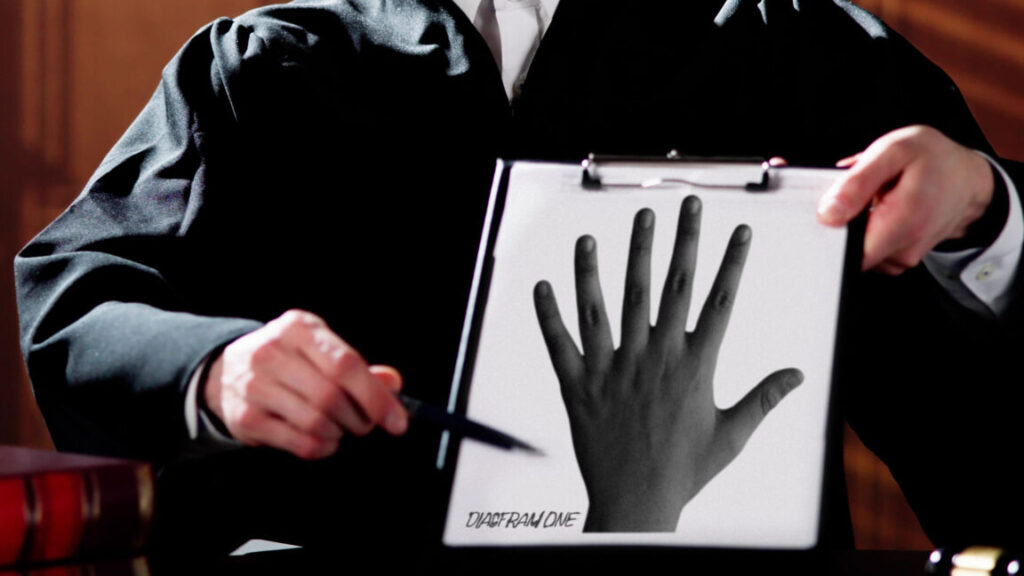

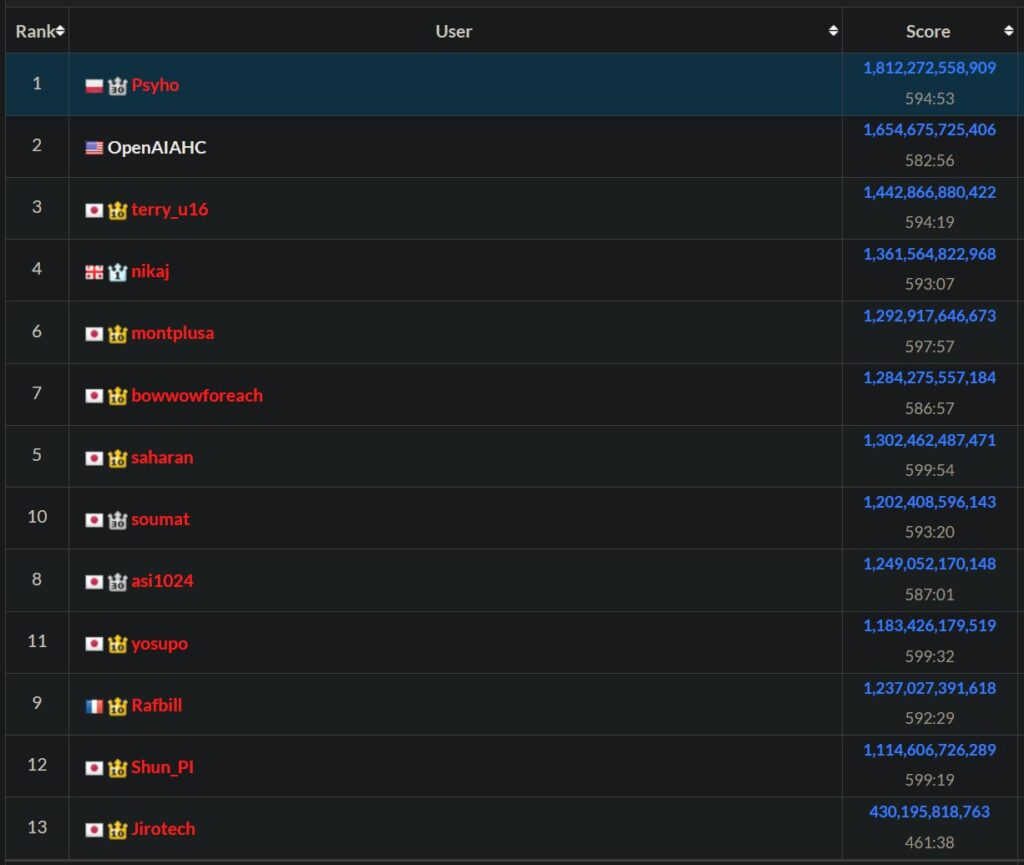

Fueling nightmares that AI may soon decide legal battles, a Georgia court of appeals judge, Jeff Watkins, explained why a three-judge panel vacated an order last month that appears to be the first known ruling in which a judge sided with someone seemingly relying on fake AI-generated case citations to win a legal fight.

Now, experts are warning that judges overlooking AI hallucinations in court filings could easily become commonplace, especially in the typically overwhelmed lower courts. And so far, only two states have moved to force judges to sharpen their tech competencies and adapt so they can spot AI red flags and theoretically stop disruptions to the justice system at all levels.

The recently vacated order came in a Georgia divorce dispute, where Watkins explained that the order itself was drafted by the husband’s lawyer, Diana Lynch. That’s a common practice in many courts, where overburdened judges historically rely on lawyers to draft orders. But that protocol today faces heightened scrutiny as lawyers and non-lawyers increasingly rely on AI to compose and research legal filings, and judges risk rubberstamping fake opinions by not carefully scrutinizing AI-generated citations.

The errant order partly relied on “two fictitious cases” to deny the wife’s petition—which Watkins suggested were “possibly ‘hallucinations’ made up by generative-artificial intelligence”—as well as two cases that had “nothing to do” with the wife’s petition.

Lynch was hit with $2,500 in sanctions after the wife appealed, and the husband’s response—which also appeared to be prepared by Lynch—cited 11 additional cases that were “either hallucinated” or irrelevant. Watkins was further peeved that Lynch supported a request for attorney’s fees for the appeal by citing “one of the new hallucinated cases,” writing it added “insult to injury.”

Worryingly, the judge could not confirm whether the fake cases were generated by AI or even determine if Lynch inserted the bogus cases into the court filings, indicating how hard it can be for courts to hold lawyers accountable for suspected AI hallucinations. Lynch did not respond to Ars’ request to comment, and her website appeared to be taken down following media attention to the case.

But Watkins noted that “the irregularities in these filings suggest that they were drafted using generative AI” while warning that many “harms flow from the submission of fake opinions.” Exposing deceptions can waste time and money, and AI misuse can deprive people of raising their best arguments. Fake orders can also soil judges’ and courts’ reputations and promote “cynicism” in the justice system. If left unchecked, Watkins warned, these harms could pave the way to a future where a “litigant may be tempted to defy a judicial ruling by disingenuously claiming doubt about its authenticity.”

“We have no information regarding why Appellee’s Brief repeatedly cites to nonexistent cases and can only speculate that the Brief may have been prepared by AI,” Watkins wrote.

Ultimately, Watkins remanded the case, partly because the fake cases made it impossible for the appeals court to adequately review the wife’s petition to void the prior order. But no matter the outcome of the Georgia case, the initial order will likely forever be remembered as a cautionary tale for judges increasingly scrutinized for failures to catch AI misuses in court.

“Frighteningly likely” judge’s AI misstep will be repeated

John Browning, a retired justice on Texas’ Fifth Court of Appeals and now a full-time law professor at Faulkner University, published a law article last year, cited by Watkins, that warned of the ethical risks of lawyers using AI. In the article, Browning emphasized that the biggest concern at that point was that lawyers “will use generative AI to produce work product they treat as a final draft, without confirming the accuracy of the information contained therein or without applying their own independent professional judgment.”

Today, judges are increasingly drawing the same scrutiny, and Browning told Ars he thinks it’s “frighteningly likely that we will see more cases” like the Georgia divorce dispute, in which “a trial court unwittingly incorporates bogus case citations that an attorney includes in a proposed order” or even potentially in “proposed findings of fact and conclusions of law.”

“I can envision such a scenario in any number of situations in which a trial judge maintains a heavy docket and looks to counsel to work cooperatively in submitting proposed orders, including not just family law cases but other civil and even criminal matters,” Browning told Ars.

According to reporting from the National Center for State Courts, a nonprofit representing court leaders and professionals who are advocating for better judicial resources, AI tools like ChatGPT have made it easier for high-volume filers and unrepresented litigants who can’t afford attorneys to file more cases, potentially further bogging down courts.

Peter Henderson, a researcher who runs the Princeton Language+Law, Artificial Intelligence, & Society (POLARIS) Lab, told Ars that he expects cases like the Georgia divorce dispute aren’t happening every day just yet.

It’s likely that a “few hallucinated citations go overlooked” because generally, fake cases are flagged through “the adversarial nature of the US legal system,” he suggested. Browning further noted that trial judges are generally “very diligent in spotting when a lawyer is citing questionable authority or misleading the court about what a real case actually said or stood for.”

Henderson agreed with Browning that “in courts with much higher case loads and less adversarial process, this may happen more often.” But Henderson noted that the appeals court catching the fake cases is an example of the adversarial process working.

While that’s true in this case, it seems likely that anyone exhausted by the divorce legal process, for example, may not pursue an appeal if they don’t have energy or resources to discover and overturn errant orders.

Judges’ AI competency increasingly questioned

While recent history confirms that lawyers risk being sanctioned, fired from their firms, or suspended from practicing law for citing fake AI-generated cases, judges will likely only risk embarrassment for failing to catch lawyers’ errors or even for using AI to research their own opinions.

Not every judge is prepared to embrace AI without proper vetting, though. To shield the legal system, some judges have banned AI. Others have required disclosures—with some even demanding to know which specific AI tool was used—but that solution has not caught on everywhere.

Even if all courts required disclosures, Browning pointed out that disclosures still aren’t a perfect solution since “it may be difficult for lawyers to even discern whether they have used generative AI,” as AI features become increasingly embedded in popular legal tools. One day, it “may eventually become unreasonable to expect” lawyers “to verify every generative AI output,” Browning suggested.

Most likely—as a judicial ethics panel from Michigan has concluded—judges will determine “the best course of action for their courts with the ever-expanding use of AI,” Browning’s article noted. And the former justice told Ars that’s why education will be key, for both lawyers and judges, as AI advances and becomes more mainstream in court systems.

In an upcoming summer 2025 article in The Journal of Appellate Practice & Process, “The Dawn of the AI Judge,” Browning attempts to soothe readers by saying that AI isn’t yet fueling a legal dystopia. And humans are unlikely to face “robot judges” spouting AI-generated opinions any time soon, the former justice suggested.

Standing in the way of that, at least two states, Michigan and West Virginia, “have already issued judicial ethics opinions requiring judges to be ‘tech competent’ when it comes to AI,” Browning told Ars. And “other state supreme courts have adopted official policies regarding AI,” he noted, further pressuring judges to bone up on AI.

Meanwhile, several states have set up task forces to monitor their regional court systems and issue AI guidance, while states like Virginia and Montana have passed laws requiring human oversight for any AI systems used in criminal justice decisions.

Judges must prepare to spot obvious AI red flags

Until courts figure out how to navigate AI, a process that may look different from court to court, Browning advocates for more education and ethical guidance for judges to steer their use of, and attitudes about, AI. That could help equip judges to avoid both ignorance of the many AI pitfalls and overconfidence in AI outputs, potentially protecting courts from AI hallucinations, biases, and evidentiary challenges sneaking past systems requiring human review and scrambling the court system.

An overlooked part of educating judges could be exposing AI’s influence so far in courts across the US. Henderson’s team is planning research that tracks which models attorneys are using most in courts. That could reveal “the potential legal arguments that these models are pushing” to sway courts—and which judicial interventions might be needed, Henderson told Ars.

“Over the next few years, researchers—like those in our group, the POLARIS Lab—will need to develop new ways to track the massive influence that AI will have and understand ways to intervene,” Henderson told Ars. “For example, is any model pushing a particular perspective on legal doctrine across many different cases? Was it explicitly trained or instructed to do so?”

Henderson also advocates for “an open, free centralized repository of case law,” which would make it easier for everyone to check for fake AI citations. “With such a repository, it is easier for groups like ours to build tools that can quickly and accurately verify citations,” Henderson said. That could be a significant improvement to the current decentralized court reporting system that often obscures case information behind various paywalls.

Dazza Greenwood, who co-chairs MIT’s Task Force on Responsible Use of Generative AI for Law, did not have time to send comments but pointed Ars to a LinkedIn thread where he suggested that a structural response may be needed to ensure that all fake AI citations are caught every time.

He recommended that courts create “a bounty system whereby counter-parties or other officers of the court receive sanctions payouts for fabricated cases cited in judicial filings that they reported first.” That way, lawyers will know that their work will “always” be checked and thus may shift their behavior if they’ve been automatically filing AI-drafted documents. In turn, that could alleviate pressure on judges to serve as watchdogs. It also wouldn’t cost much, since it would mostly just redistribute to AI spotters the exact amount of fees that lawyers are sanctioned.

Novel solutions like this may be necessary, Greenwood suggested. Responding to a question asking if “shame and sanctions” are enough to stop AI hallucinations in court, Greenwood said that eliminating AI errors is imperative because it “gives both otherwise generally good lawyers and otherwise generally good technology a bad name.” Continuing to ban AI or suspend lawyers as the preferred solution, rather than confronting the problem head-on, risks draining court resources just as cases likely spike.

Of course, there’s no guarantee that the bounty system would work. But, Greenwood asked, “would the fact of such definite confidence that your cites will be individually checked and fabricated cites reported be enough to finally… convince lawyers who cut these corners that they should not cut these corners?”

In the absence of a fake-case detector like the one Henderson wants to build, experts told Ars that there are some obvious red flags that judges can note to catch AI-hallucinated filings.

Any case number with “123456” in it probably warrants review, Henderson told Ars. And Browning noted that AI tends to mix up locations for cases, too. “For example, a cite to a purported Texas case that has a ‘S.E. 2d’ reporter wouldn’t make sense, since Texas cases would be found in the Southwest Reporter,” Browning said, noting that some appellate judges have already relied on this red flag to catch AI misuses.

Those red flags would perhaps be easier to check with the open source tool that Henderson’s lab wants to make, but Browning said there are other tell-tale signs of AI usage that anyone who has ever used a chatbot is likely familiar with.

“Sometimes a red flag is the language cited from the hallucinated case; if it has some of the stilted language that can sometimes betray AI use, it might be a hallucination,” Browning said.
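The two mechanical red flags described above, a case number containing “123456” and a reporter that does not match the state of the deciding court, can be sketched in a few lines of Python. This is only an illustration, not the tool Henderson’s lab plans to build: the function name `red_flags`, the two-state lookup table, and both sample citations are invented here, and a hit means only that a citation deserves a manual look, not that it is fabricated.

```python
import re

# Hypothetical, deliberately tiny lookup covering only the two states named in
# the article: the regional reporter where each state's cases normally appear.
EXPECTED_REPORTER = {
    "Tex.": "S.W.",  # South Western Reporter
    "Ga.": "S.E.",   # South Eastern Reporter
}

# Matches "S.W." or "S.E.", optionally followed by a series marker (2d, 3d).
REPORTER_RE = re.compile(r"\b(S\.W\.|S\.E\.)\s?(?:2d|3d)?")
# Matches the state abbreviation that opens the court parenthetical.
STATE_RE = re.compile(r"\((Tex\.|Ga\.)")


def red_flags(citation: str, case_number: str = "") -> list[str]:
    """Return human-readable reasons a citation deserves a manual check."""
    flags = []

    # Red flag 1: a placeholder-looking case number.
    if "123456" in case_number:
        flags.append("case number contains '123456'")

    # Red flag 2: the reporter does not match the state of the deciding court.
    reporter = REPORTER_RE.search(citation)
    state = STATE_RE.search(citation)
    if reporter and state:
        expected = EXPECTED_REPORTER[state.group(1)]
        if reporter.group(1) != expected:
            flags.append(
                f"{state.group(1)} case cited to {reporter.group(1)} "
                f"reporter; expected {expected}"
            )

    return flags


# Both citations below are invented for illustration.
suspicious = red_flags("Doe v. Roe, 123 S.E.2d 456 (Tex. App. 1999)", "2023-CV-123456")
plausible = red_flags("Doe v. Roe, 123 S.W.3d 456 (Tex. App. 2003)", "2023-CV-004521")
print(suspicious)
print(plausible)
```

A real checker would need a complete reporter table and, as Henderson notes, an open repository of case law to confirm that a cited case exists at all.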

Judges already issuing AI-assisted opinions

Several states have assembled task forces like Greenwood’s to assess the risks and benefits of using AI in courts. In Georgia, the Judicial Council of Georgia Ad Hoc Committee on Artificial Intelligence and the Courts released a report in early July providing “recommendations to help maintain public trust and confidence in the judicial system as the use of AI increases” in that state.

Adopting the committee’s recommendations could establish “long-term leadership and governance”; a repository of approved AI tools, education, and training for judicial professionals; and more transparency on AI used in Georgia courts. But the committee expects it will take three years to implement those recommendations while AI use continues to grow.

Possibly complicating things further as judges start to explore using AI assistants to help draft their filings, the committee concluded that it’s still too early to tell if the judges’ code of conduct should be changed to prevent “unintentional use of biased algorithms, improper delegation to automated tools, or misuse of AI-generated data in judicial decision-making.” That means, at least for now, that there will be no code-of-conduct changes in Georgia, the state where the only known case in which AI hallucinations are believed to have swayed a judge was found.

Notably, the committee’s report also confirmed that there are no role models for courts to follow, as “there are no well-established regulatory environments with respect to the adoption of AI technologies by judicial systems.” Browning, who chaired a now-defunct Texas AI task force, told Ars that judges lacking guidance will need to stay on their toes to avoid trampling legal rights. (A spokesperson for the State Bar of Texas told Ars the task force’s work “concluded” and “resulted in the creation of the new standing committee on Emerging Technology,” which offers general tips and guidance for judges in a recently launched AI Toolkit.)

“While I definitely think lawyers have their own duties regarding AI use, I believe that judges have a similar responsibility to be vigilant when it comes to AI use as well,” Browning said.

Judges will continue sorting through AI-fueled submissions not just from pro se litigants representing themselves but also from up-and-coming young lawyers who may be more inclined to use AI, and even seasoned lawyers who have been sanctioned up to $5,000 for failing to check AI drafts, Browning suggested.

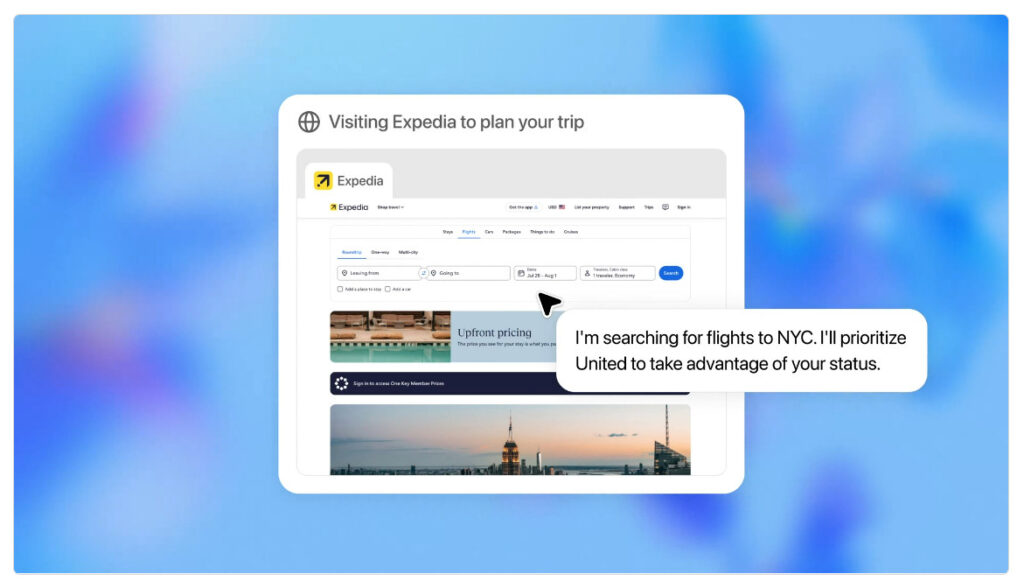

In his upcoming “AI Judge” article, Browning points to at least one judge, 11th Circuit Court of Appeals Judge Kevin Newsom, who has used AI as a “mini experiment” in preparing opinions for both a civil case involving an insurance coverage issue and a criminal matter focused on sentencing guidelines. Browning seems to appeal to judges’ egos to get them to study up so they can use AI to enhance their decision-making and possibly expand public trust in courts, not undermine it.

“Regardless of the technological advances that can support a judge’s decision-making, the ultimate responsibility will always remain with the flesh-and-blood judge and his application of very human qualities—legal reasoning, empathy, strong regard for fairness, and unwavering commitment to ethics,” Browning wrote. “These qualities can never be replicated by an AI tool.”
