Should an AI copy of you help decide if you live or die?

“It would combine demographic and clinical variables, documented advance-care-planning data, patient-recorded values and goals, and contextual information about specific decisions,” he said.

“Including textual and conversational data could further increase a model’s ability to learn why preferences arise and change, not just what a patient’s preference was at a single point in time,” Starke said.

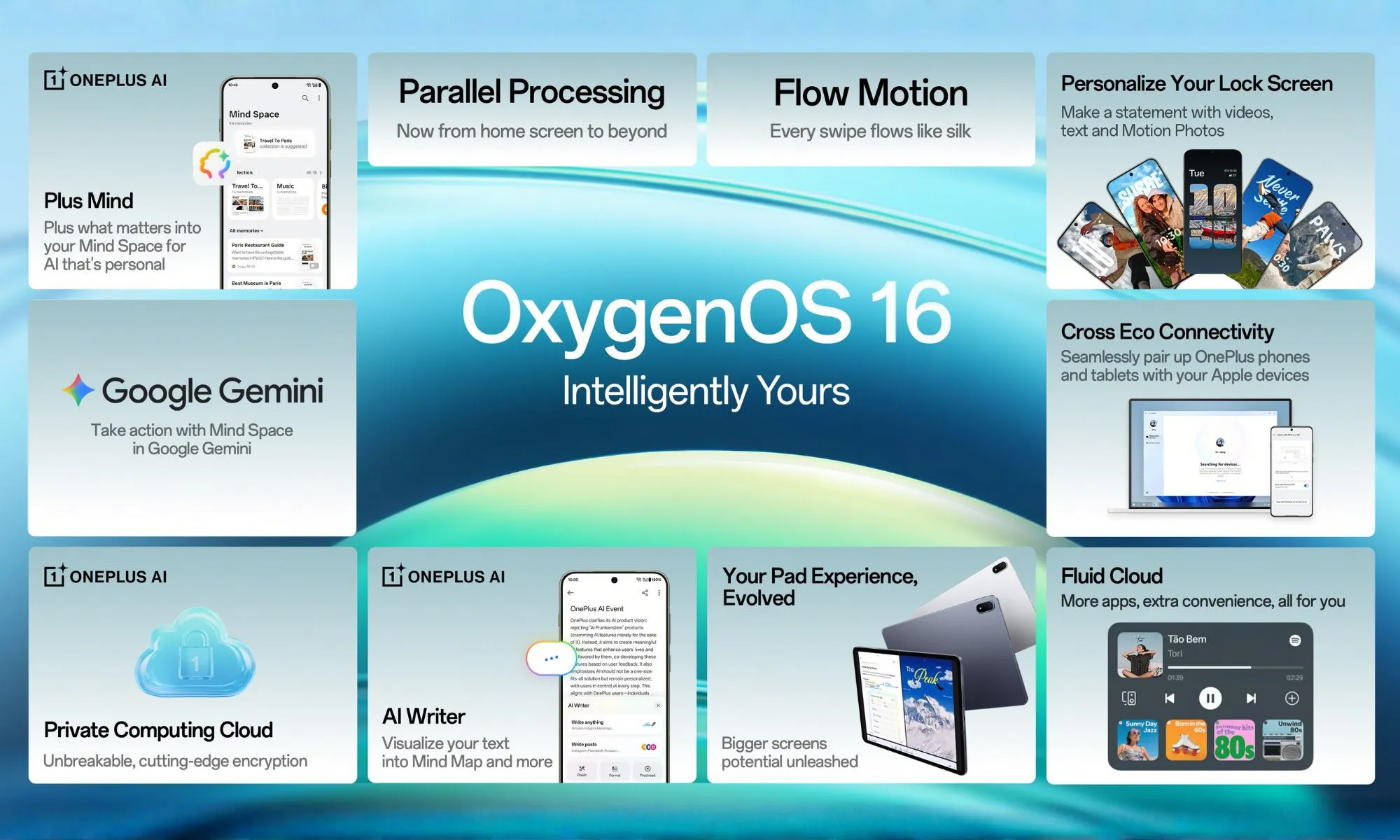

Ahmad suggested that future research could focus on validating fairness frameworks in clinical trials, evaluating moral trade-offs through simulations, and exploring how cross-cultural bioethics can be combined with AI designs.

Only then might AI surrogates be ready for deployment, and even then only as “decision aids,” Ahmad wrote. Any “contested outputs” should automatically “trigger [an] ethics review,” he added, concluding that “the fairest AI surrogate is one that invites conversation, admits doubt, and leaves room for care.”

“AI will not absolve us”

Ahmad is hoping to test his conceptual models at various UW sites over the next five years, which would offer “some way to quantify how good this technology is,” he said.

“After that, I think there’s a collective decision regarding how as a society we decide to integrate or not integrate something like this,” Ahmad said.

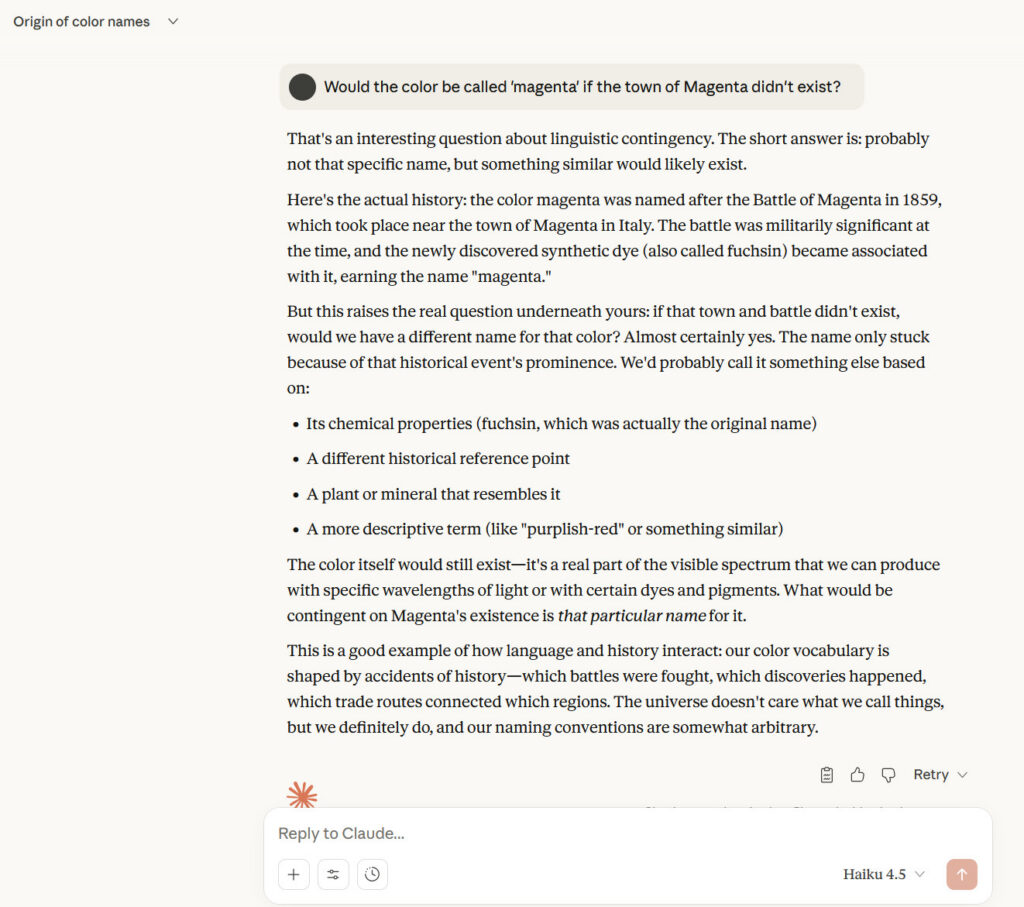

In his paper, he warned against chatbot AI surrogates that could be interpreted as a simulation of the patient, predicting that future models may even speak in patients’ voices and suggesting that the “comfort and familiarity” of such tools might blur “the boundary between assistance and emotional manipulation.”

Starke agreed that more research and “richer conversations” between patients and doctors are needed.

“We should be cautious not to apply AI indiscriminately as a solution in search of a problem,” Starke said. “AI will not absolve us from making difficult ethical decisions, especially decisions concerning life and death.”

Truog, the bioethics expert, told Ars he “could imagine that AI could” one day “provide a surrogate decision maker with some interesting information, and it would be helpful.”

But a “problem with all of these pathways… is that they frame the decision of whether to perform CPR as a binary choice, regardless of context or the circumstances of the cardiac arrest,” Truog’s editorial said. “In the real world, the answer to the question of whether the patient would want to have CPR” when they’ve lost consciousness, “in almost all cases,” is “it depends.”

When Truog thinks about the kinds of situations he could end up in, he knows he wouldn’t just be considering his own values, health, and quality of life. His choice “might depend on what my children thought” or “what the financial consequences would be on the details of what my prognosis would be,” he told Ars.

“I would want my wife or another person that knew me well to be making those decisions,” Truog said. “I wouldn’t want somebody to say, ‘Well, here’s what AI told us about it.’”
