I can’t stop shooting Oddcore’s endless waves of weird little guys

Every new semi-randomized area you clear increases your total capacity to store souls, but every visit to the portal shop increases the additional “tax” you need to spend on every purchase. This makes the decision of when to warp away to the shop a persistent quandary—do you power up as quickly as possible to increase your chances of survival, or wait until you’ll be able to purchase even more power-ups a little later?

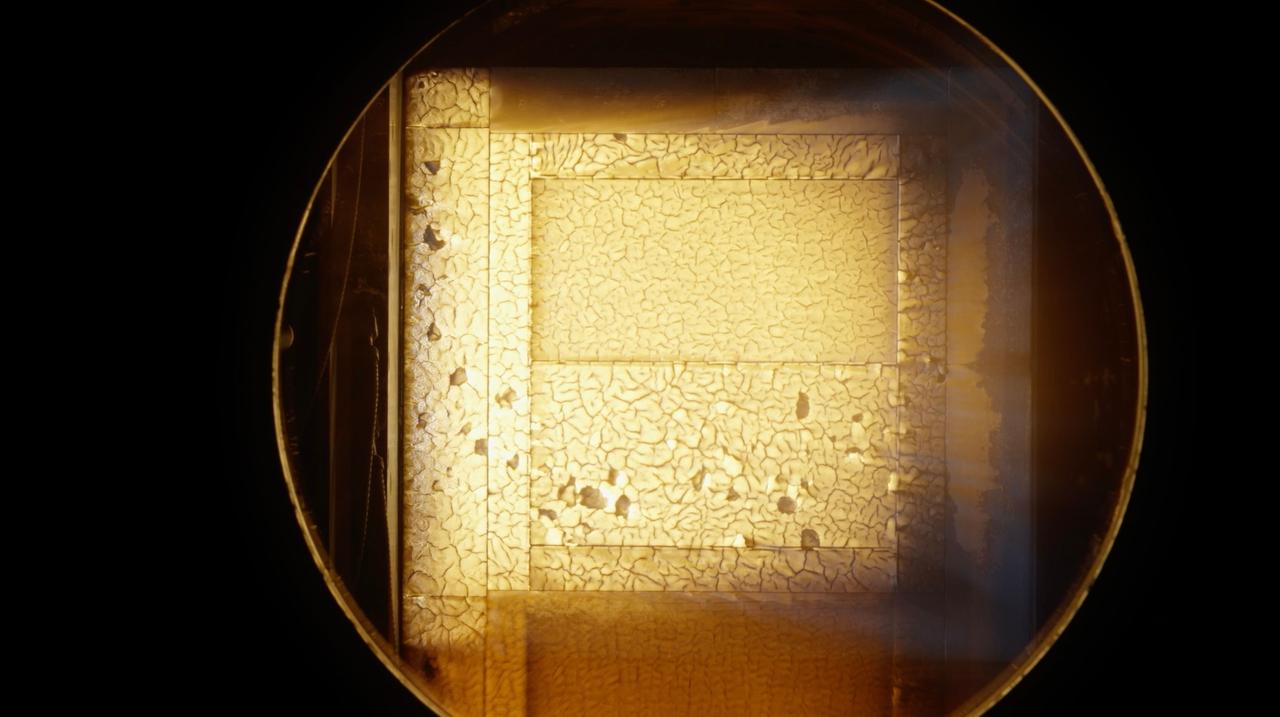

What’s around the corner?

All the while, the enemies keep coming fast and furious, growing steadily quicker, tougher, and more capable with each new zone you enter. Through it all, the tight controls, forgiving aim system, and wide variety of weapon and gadget options make every firefight fast, frenetic, and fun.

To keep things from getting too repetitive, you'll sometimes get thrown into an arena where you have to chase down frolicking golden humanoid flowers or destroy a few giant ambulatory mushrooms (you know, standard tropes of the video game world). You'll also occasionally get dropped into brief, intentionally off-putting, empty interstitial rooms that seem designed more to surprise Twitch viewers than to fit any coherent aesthetic. Other times, you'll run into "corruptions" that briefly prevent you from gaining health and/or warping away to the convenience shop for a breather.

What’s the worst that could happen? Credit: Oddcorp

Between runs, you can move around an ersatz redemption arcade to earn new weapons and gadgets and explore the miniature theme park setting, which is full of hidden crannies and unlockable play spaces. In a few hours of play, I’ve already stumbled on so many secrets by pure accident that I can only imagine unlocking them all will be a real undertaking (and I presume even more will be added as the game moves through Early Access).

The in-game leaderboards and achievements suggest that it is possible to “beat” Oddcore at some point, presumably by combining enough skill and lucky upgrades to power your way through dozens of variants in a single run. Frankly, I’m not sure I’ll ever master the game enough to reach that point. Even so, I’m happy to have a new excuse to take a brain break by shooting a bunch of weird little guys in weird little spaces for a few minutes at a time.
