Selling H200s to China Is Unwise and Unpopular

AI is the most important thing about the future. It is vital to national security. It will be central to economic, military and strategic supremacy.

This is true regardless of what other dangers and opportunities AI might present.

The good news is that America has many key advantages in AI.

America’s greatest advantage in AI is our vastly superior access to compute.

We are in danger of selling a large portion of that advantage for 30 pieces of silver.

This is on track to be done against the wishes of Congress as well as most of those in the executive branch.

Who does it benefit? It benefits China. It might not even benefit Nvidia.

Doing so would be both highly unwise and highly unpopular.

We should not sell highly capable Nvidia H200 chips to China.

If it is too late to not sell H200s, we must limit quantities, and ensure it stops there. We absolutely cannot be giving away other future chips on a similar delay.

The good news is that the stock market reaction implies this might not scale.

Bayeslord: I don’t know anyone who thinks this is a good idea.

Jordan Schneider: DOJ arrests H200 smugglers the SAME DAY Trump legalizes their export! too good.

Here it is, exactly as he wrote it on Truth Social:

Donald Trump (President of the United States): I have informed President Xi, of China, that the United States will allow NVIDIA to ship its H200 products to approved customers in China, and other Countries, under conditions that allow for continued strong National Security. President Xi responded positively! $25% will be paid to the United States of America. This policy will support American Jobs, strengthen U.S. Manufacturing, and benefit American Taxpayers. The Biden Administration forced our Great Companies to spend BILLIONS OF DOLLARS building “degraded” products that nobody wanted, a terrible idea that slowed Innovation, and hurt the American Worker. That Era is OVER! We will protect National Security, create American Jobs, and keep America’s lead in AI. NVIDIA’s U.S. Customers are already moving forward with their incredible, highly advanced Blackwell chips, and soon, Rubin, neither of which are part of this deal. My Administration will always put America FIRST. The Department of Commerce is finalizing the details, and the same approach will apply to AMD, Intel, and other GREAT American Companies. MAKE AMERICA GREAT AGAIN!

Peter Wildeford: I wonder what the “conditions that allow for continued strong National Security” will be. Seems important!

The ‘conditions that allow’ clause could be our out to at least mitigate the damage here, since no, these sales would not allow for continued strong national security.

I believe this would, if it was carried out at scale without big national security conditions attached, be extremely damaging to American national interests and national security and our ability to ‘beat China’ in any meaningful sense, in addition to any impacts it would have on AI safety and our ability to navigate and survive the transition to superintelligence.

This is happening despite strong opposition from Congress, what looks like opposition from most of those in the executive branch, deep unpopularity among experts and the entire policy community, the strong advice of America’s strongest AI champions on the software side, and unpopularity with the public. The vibes are almost entirely ‘this is a terrible decision, what are we even doing.’

The only ways I can think of for this to be a non-terrible idea are either if the Chinese somehow refuse the chips in which case it will do little harm but also little good, or (and to be fully clear on this possibility: I have zero reason to believe this to be the case) that H200s have some sort of secret remote control or backdoor.

I presume this decision comes from a failure by Trump to appreciate the strategic importance, power and cost efficiency of the H200 chips, combined with aggressive pushing of this from those including David Sacks who have repeatedly put private industry interests, especially those of Nvidia, above the interests of America.

Alec Stapp (IFP): Massive own goal to export these AI chips to China.

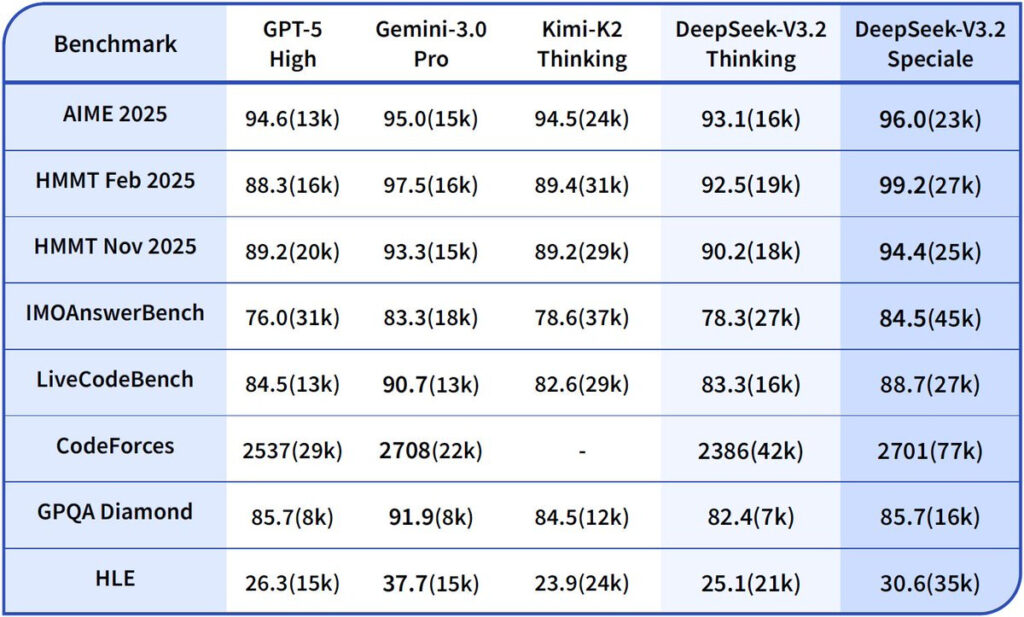

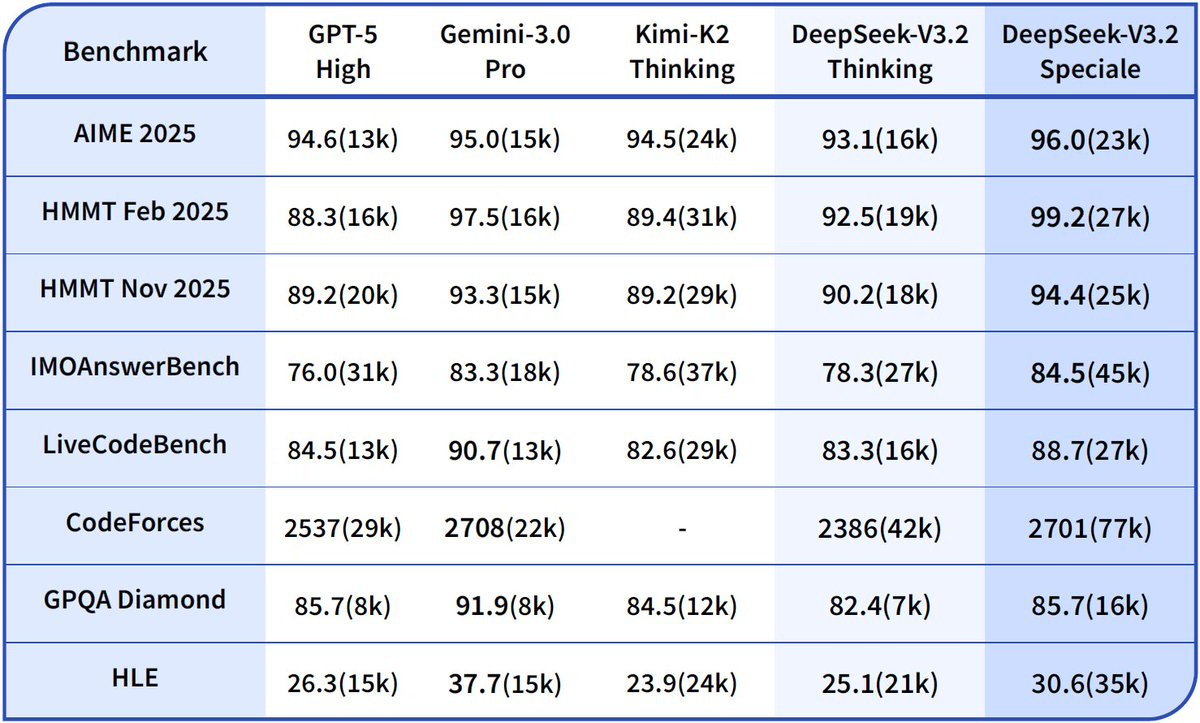

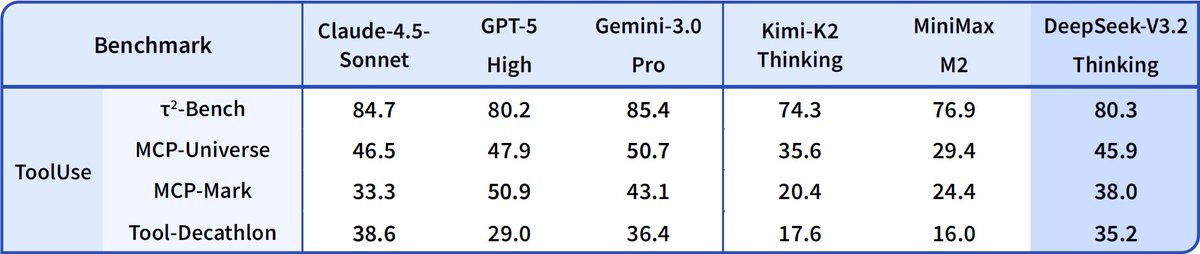

The H200 is 6x more powerful than the H20, which was previously the most powerful chip approved for export.

Our compute advantage is the main thing keeping us ahead of China in AI.

Why would we throw that away?

Chris McGuire (Council on Foreign Relations): This is the single biggest change in U.S.-China policy of the entire Administration, signaling a reversion to the cooperative policies of the 2000s and early 2010s and away from the competitive policies of Trump 1 and Biden.

It is a transformational moment for U.S. technology policy, which until now had been predicated on investing at home while holding China back.

Now we are trying to win a race against a competitor who doesn’t play by the rules.

Chris McGuire: This is a seachange in U.S. policy, and a significant strategic mistake. If the United States sells AI chips to China that are 18 months behind the frontier, it negates the biggest U.S. advantage over China in AI. Here are four reasons that this new policy helps China much more than it helps the United States:

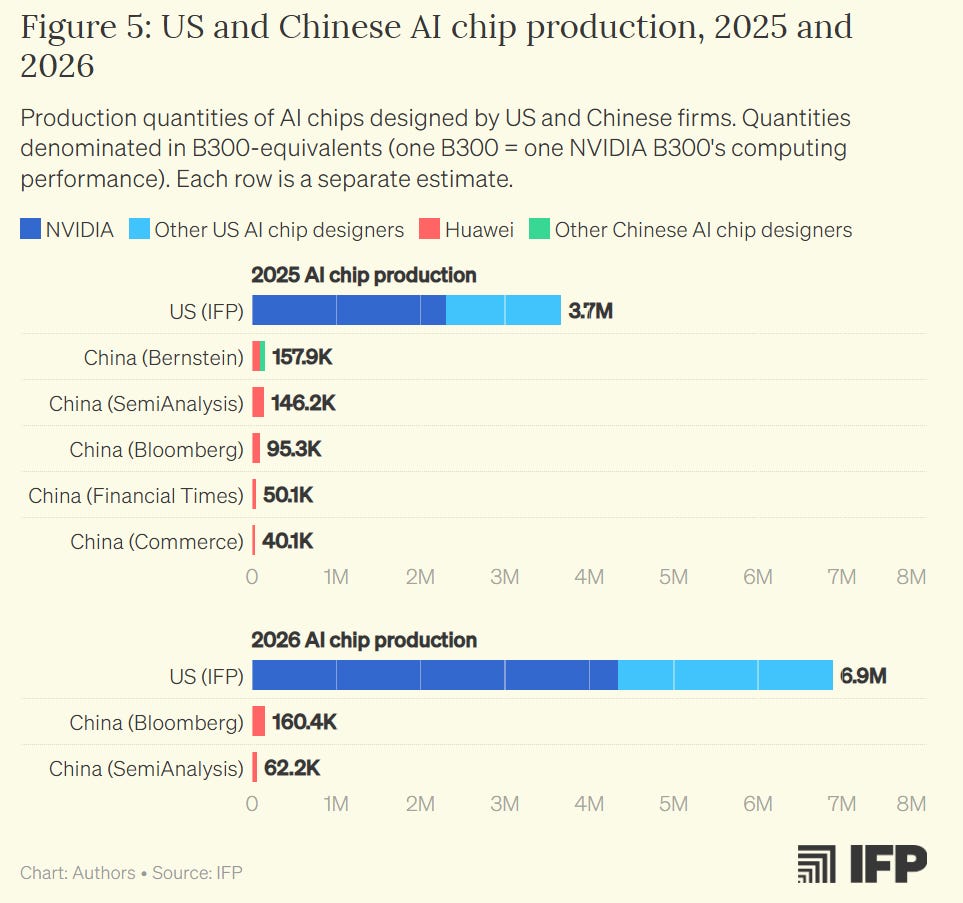

1️⃣No Chinese AI chip firm poses a strategic threat to Nvidia or any other U.S firm. China does not plan to make a chip better than the H200 until Q4 2027 at the earliest. It also is severely constrained in the number of lower-quality chips it can make. And China will continue to do everything in its power to reduce its dependency on US AI chips, even while it retains access to US chips.

2️⃣Because the U.S. lead over China in AI chips is rapidly increasing, a fixed 18 month delay will be even more beneficial to China in the coming months and years. It means the United States could start to sell Blackwell chips to China as soon as the middle of next year – despite the fact that no Chinese firm has plans to make a chip as good as the GB200 any time this decade. And Rubin chips – which are projected to be 28x (!) better than U.S. export control thresholds later in President Trump’s term.

3️⃣ Exporting large numbers of AI chips to China will provide an enormous increase to China’s aggregate AI compute capabilities; the quantity of chips that are approved will be key. Large quantity exports will also allow China to compete with U.S. firms in AI infrastructure construction globally – including with “good enough” AI data centers that use previous-generation technology but are subsidized by the Chinese government and cheaper than U.S. offerings. Right now China cannot offer any product that can compete globally with U.S. data centers. That is about to change.

4️⃣ We got nothing in exchange for this. This is a massive concession to China, reversing the most significant U.S. technology protection policy vis-a-vis China that has ever been implemented and China’s second most significant criticism of U.S. policy, behind only U.S. support for Taiwan. But the way reporting frames it, this is a unilateral U.S. concession. If the tables were turned, China would not give the United States H200s – and if they did, they certainly wouldn’t give it to us for free.

Peter Wildeford: 💯on these notes on selling 🇨🇳 the H200 chip…

– gives 🇨🇳 access to chips ~2 years ahead of what they can make

– doesn’t slow down 🇨🇳 development much

– if we allow large quantities of exports, it’s all China needs to compete with US AI + cloud

– 🇺🇸 gets nothing in return? [other than the 25% cut]

Right now we have a huge compute advantage. This gives a lot of that away.

Selling H200s would be way, way less bad than selling China the B30A.

Selling H200s would be way, way worse than selling China the H20.

Tim Fist: The H200 belongs to the previous “Hopper” generation of NVIDIA AI chips. These are still widely used for frontier AI in the US and will likely remain so for 1 to 2 years.

18 of the 20 most powerful publicly documented GPU clusters primarily use Hopper chips.

IFP dives into the technical specifications so you don’t have to. If you are taking this issue fully seriously I encourage you to read the whole thing.

Tim Fist (IFP): The US has reportedly decided to approve exports of NVIDIA’s H200 chip to China. This gives Chinese AI labs chips that outperform anything China can make until ~2028.

How big a deal this is depends on how many we export.… Why is compute advantage good?

A bigger advantage means greater US capacity to train more/more powerful models, support more and better AI and cloud companies, and deploy more AI at home and abroad.

I will summarize.

- The Nvidia H200 is six times as powerful as the Nvidia H20.
- China will be unable to produce chips superior to the H200 until Q4 2027 at the earliest. Even when it does this, it will have very little manufacturing capacity.
- H200s would let China build AI supercomputers at roughly +50% cost for training and +100%-500% cost for inference, compared to American ones built on Blackwells.
- China’s manufacturing capabilities and timeline will be entirely unaffected by these exports, because they will proceed at maximum speed either way. This is a matter of national security for them and their domestic market is gigantic.
- Every chip we export is a chip that would have gone to America instead.

That last point is important. There is demand for as many chips as we can manufacture. Every time we task a fab with producing an H200 to sell to China, it could have produced a chip for America, or sold that H200 to America.

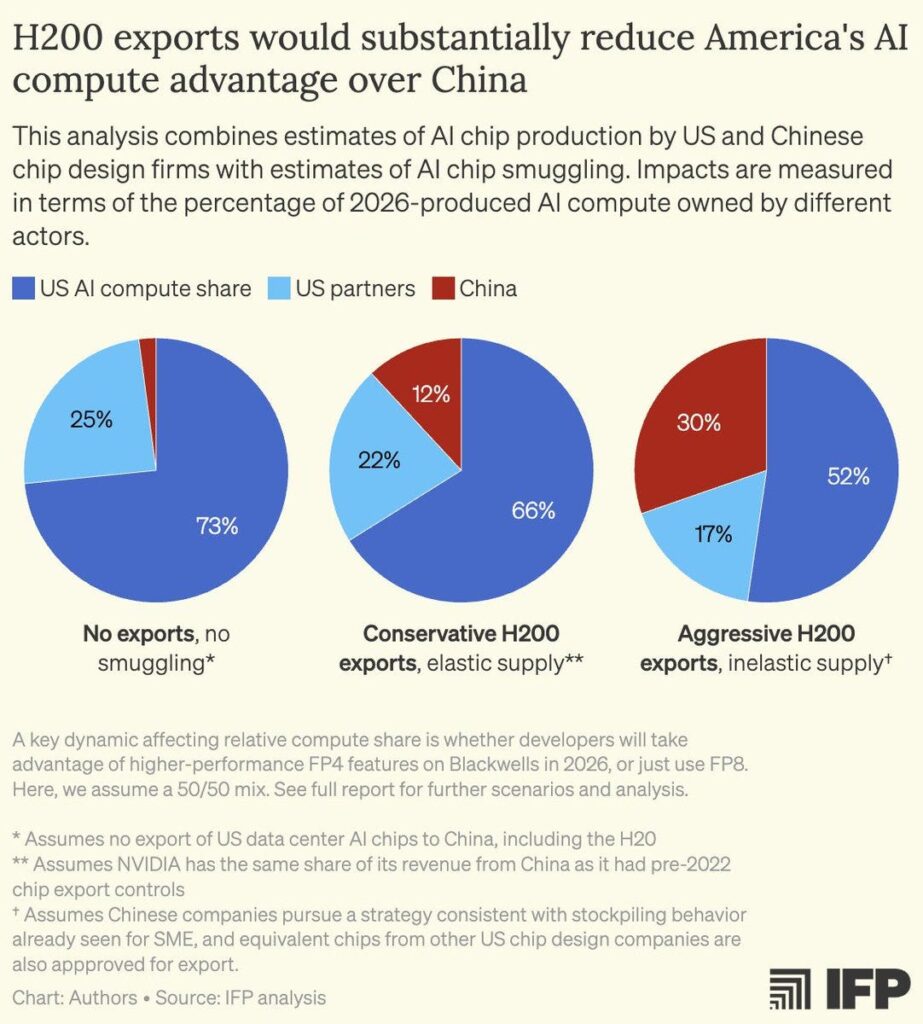

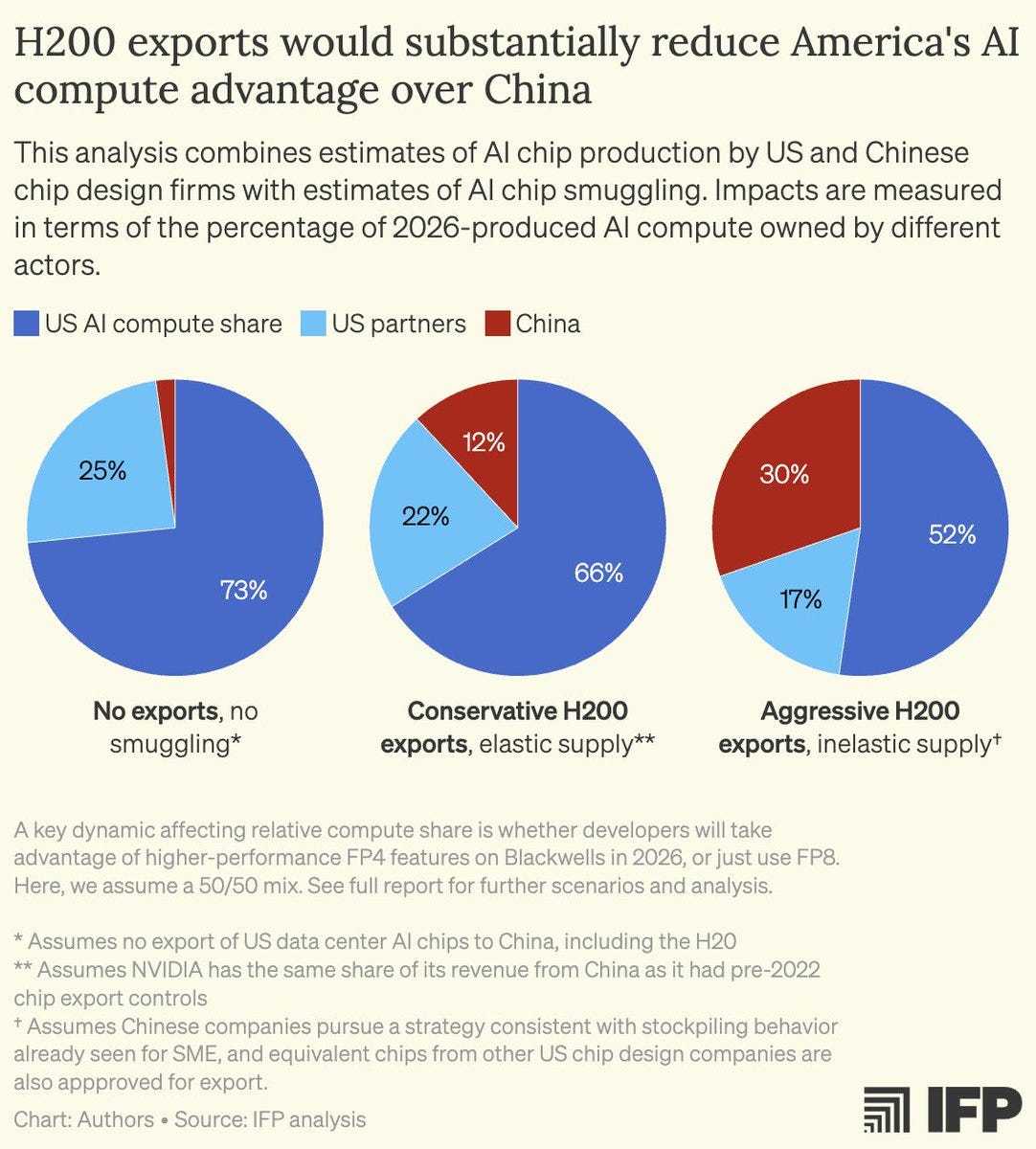

So is our massive compute manufacturing advantage. It’s big:

How much would H200 exports erode our compute advantage? Quite a lot.

Essentially no.

The vibes were universally terrible. Nobody wants this. Well, almost no one.

Drew Pavlou: Is there any steel man case for this at all?

Could it delay a Chinese manufacturing shift to their own indigenous super advanced chips?

Please tell me that there’s a silver lining.

Melissa Chen: No. This is the Iran Deal for the Trump admin.

LiquidZulu: The steel man is that socialism is bad, and it is good to let people voluntarily trade.

Samuel Hammond (FAI): There’s zero upside for the US, sorry. The most we can hope is that China’s boomer leadership blocks them for us, but I’m not betting on it. At minimum Chinese chip makers will buy H200s to strip them for HBM3E to reverse engineer and put into Huawei chips.

Josh Rogin: The Chinese market will ultimately be lost to Chinese competitors no matter what. Giving Chinese firms advanced US tech now doesn’t delay that – it just gives China a way to catch up with the United States even faster. Strategically stupid.

Tom Winter (NBC News): Shortly before this was announced the Justice Department unsealed a guilty plea as part of “Operation Gatekeeper” detailing efforts by several businessman to traffic these chips to locations in China.

They described the H100 and H200 as “among the most advanced GPUs ever developed, and their export to the People’s Republic of China is strictly prohibited.”

Peter Wildeford: Wow – this DOJ really gets it. Trump should listen.

Derek Thompson: What the WH claims its economic policy is all about: Stop listening to those egghead free-trade globalists, we’re doing protectionism for the national interest!

What our AI policy actually is: Stop worrying about the national interest, we’re doing free-trade globalism!

What unites these policies: Trump just does stuff transactionally, and none of it “makes” “strategic” “sense”

Dean Ball: DC is filled with national security and China hawks who are, if anything, accelerationists with respect to ai, who also support aggressive chip controls.

.. If you mean “the people who think AI is going to be really important, not like internet important but like really goddamn fucking important, please pay attention, oh my god why are you not paying attention for the love of christ do you not understand that computers can now think,” yes, I would agree that community is broadly positively disposed toward chip export controls.

If you think that AI is not as important as Dean’s description, or not as important on a relatively short time frame, and you’re thinking about these questions seriously, mostly you still oppose selling the H200s, because the reasons to not do this are overdetermined. It’s a bad move for America even if we know that High Weirdness is not coming within a few years and that AI will not pose an existential risk.

‘Trade is generally good’ is true enough but this is very obviously a special case where this would not be a win-win trade, as most involved in national security discussions agree, and most China hawks agree. At some point you don’t sell your rival ammunition.

The default attempted steelman is that this locks China into Nvidia and CUDA and makes them dependent on American chips, or hurts their manufacturing efforts.

Except this simply is not true. It does not lock them in. It does not make them dependent. It does not slow down their manufacturing efforts.

There are those who say ‘this is good for open source’ and what they mean is ‘this is good for Chinese AI models.’ There is that, I suppose.

The other steelman is ‘the American government is getting 25%.’ Trump loves the idea of getting a cut of deals like this, and this is better than nothing in that it likely lowers quantity traded and the money is nice, but ultimately 25% of the money is, again, chump change versus the strategic value of the chips.

Semafor tries to present a balanced view, pitching the perspective of H200s as a ‘middle ground’ between H20s and B30As and trotting out the usual strawman cases, without addressing the specifics.

One certainly hopes this isn’t being done to try and win other trade concessions such as soybean sales. Not that those things don’t matter, but the concessions available matter far less than the stakes in AI.

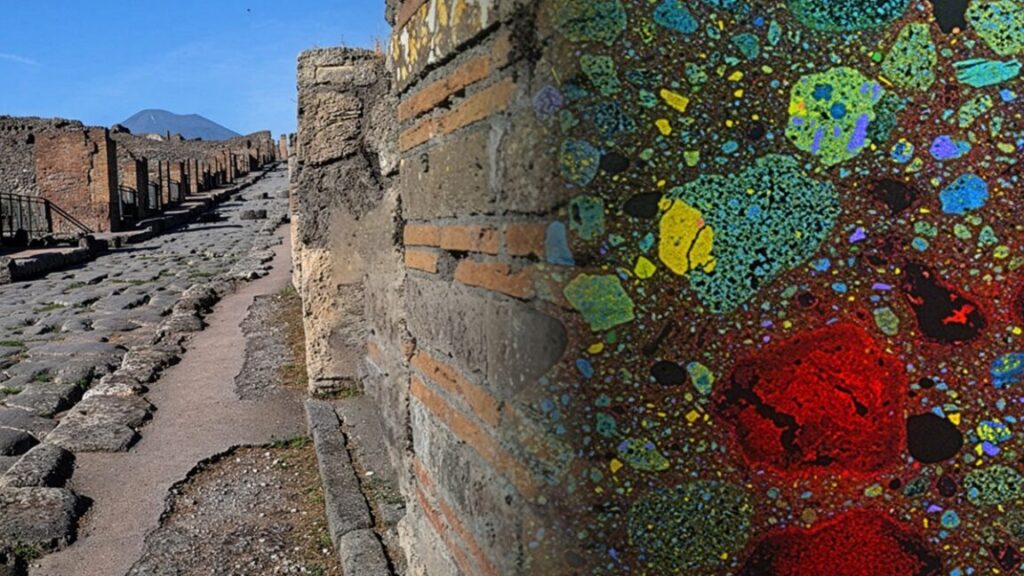

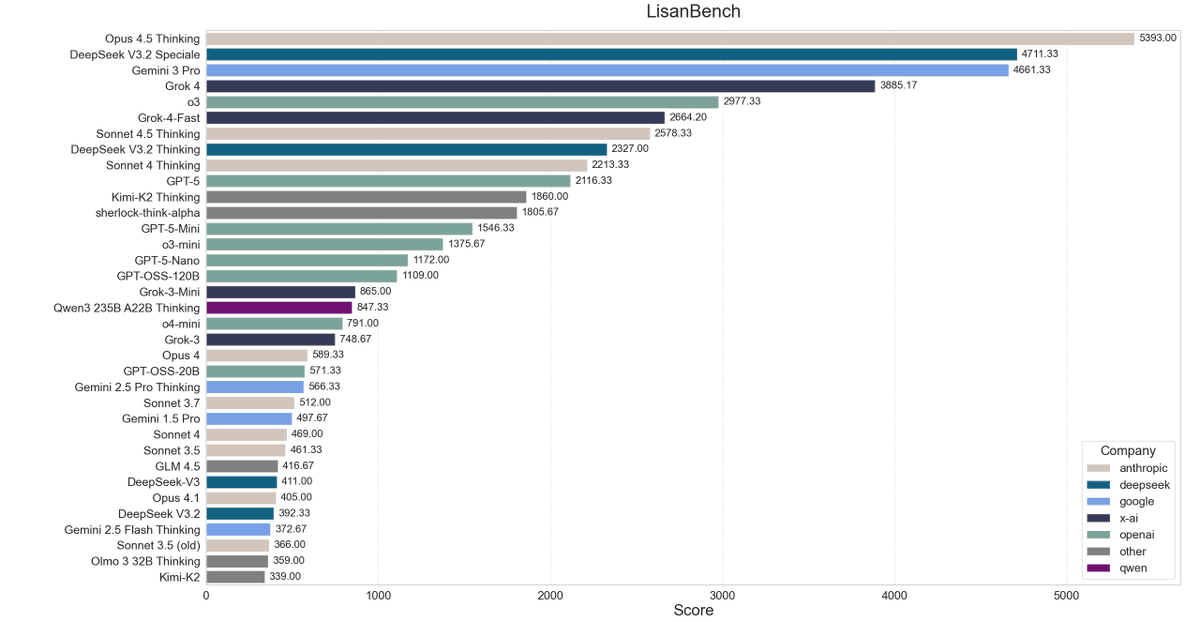

In particular, compute is a key limiting factor for DeepSeek.

DeepSeek has made this clear many times over the past two years.

DeepSeek recently came out with v3.2. Their paper makes clear that this could have been a far more capable model if they had access to more compute, and they could be serving the model far faster. DeepSeek’s training runs have, by all reports, repeatedly run into trouble because of lack of compute and attempts to use Huawei chips.

This extends to the rest of the Chinese model ecosystem. China specializes in creating and using models that are cheap to train and cheap to use, partly because that is the niche for fast followers, and also largely because they do not have the compute to do otherwise.

If we gave the Chinese AI ecosystem massive amounts of compute, they would be able to train frontier models, greatly increasing their share of inference. Their startups and AI services would be in much better positions against ours across a variety of sizes and use cases. Our commercial and cultural power would wane.

Compute is the building block of the future. We have it. They want it.

Our advantage in compute could rapidly turn into a large disadvantage. China’s greatest strength in AI is that it has essentially unlimited access to electrical power. If allowed to buy the chips, China could build unlimited data centers and eclipse us.

There’s nothing new here, but let’s go over this again.

Even if you think AI is all about soft power, cultural influence, economic power and ‘market share,’ ultimately what matters is who is using which models.

Chip sales are profitable, but the money involved is, in relative terms, chump change.

The reason ‘market share of chip sales’ is touted as a major policy goal by David Sacks and similar others is the idea of what they have dubbed the ‘tech stack,’ combining chips with an AI model, and sometimes other vertical integrations as well, such as the physical data center and cloud services. Thanks to the benefits of integration, they say, it will be a battle of an American stack (e.g. Nvidia + OpenAI) against a Chinese stack (e.g. Huawei + DeepSeek).

The whole thing is a mirage.

As an obvious example of this, notice that Anthropic is happy to use chips from three distinct stacks: Microsoft Azure + Nvidia, Amazon Web Services + Trainium and Google Cloud + TPUs, and everyone agrees that doing this was a great move modulo the related security concerns.

There are some benefits to close integration between chips and models, so yes you would design them around each other when you can.

But those gains are relatively modest. You can mostly run inference and training for any model on any sufficiently capable chip, with only modest efficiency loss. You can take a model trained on one chip and run it on another, or one from another manufacturer, and people often do.

Chinese models work much better on Nvidia chips, and when they have access to vastly more chips and more compute. They can be shifted at will. There is no stack.

There is another ‘tech stack’ concept in the idea of a company like Microsoft selling a full-stack data center project, that is shovel ready, to a nation like Saudi Arabia. That’s a sensible way to make things easy on the buyer and lock in a deal. But this has nothing to do with what would happen if you sold highly capable AI chips to China.

The argument for exporting our full ‘tech stack’ to third party nations like Saudi Arabia or the UAE was that they would otherwise make a deal to get Chinese chips and then run Chinese models. That’s silly, in that the Chinese Huawei chips are not up to the task and not available in such quantities, but on some level it makes sense.

Whereas here it makes no sense. You’re selling the Nvidia chips to literal China. They’re not going to use them to run ChatGPT or Claude. Every chip they buy helps train better Chinese models, and is one more chip they have spare for export.

The most important weapon in the AI race is compute.

If it is so important to ‘win the AI race’ and not ‘lose to China,’ the last thing we should be doing is selling highly capable AI chips to China.

If you want to sell the H200 to China, you are not prioritizing beating China.

Or rather, when you say ‘beat China’ you mean ‘maximize Nvidia’s market share of chips sold, even if this turbocharges their labs and models and inference.’

That may be David Sacks’s priority. It is not mine. See it for what it is.

Seán Ó hÉigeartaigh: The ‘AI race with China’ has been used to argue for everything from federal investment in AI to laxer environmental laws to laxer child protection laws to energy buildout to, most recently, pre-emption – by both USG and leading AI lobby groups.

But nothing has been more impactful on the ‘AI race’ by a long shot than export controls on advanced chips. If they really cared about the AI race, they’d support that. H200s getting approved for sale to China another piece of evidence that this is more about making money than anything else.

It’s a classic securitisation move: make something a matter of existential national importance, thus shielding it from democratic scrutiny.

Bonchie: Kind of hard to keep telling people that they must accept bad policy to “win the AI race against China,” when we turn around and sell them the chips they need to win the AI race.

Nvidia, down about 7% over the last month, did pop ~1.3% on the announcement. In the day since it’s given half of that back, with an overnight pop in between.

You might assume that, whoever else lost, at least Nvidia would win from this. The market is not so clear on that.

This leaves a few possibilities:

- This probably won’t happen, America will walk it back.
- This probably won’t happen, China will refuse the chips.
- This probably won’t happen de facto because of the GAIN Act or similar.
- This probably won’t happen at scale, there will be strict limits.
- This probably won’t happen at scale, the 25% tax is too large.
- This probably will happen, but at 25% tax it’s not good for Nvidia.
- This probably will happen but market was hoping for better.
- This was largely already priced in.
- Markets are weird, yo.

CNBC’s analysis here expects $3.5 billion in quarterly revenue, which would be a disaster for national security and presumably boost the stock. But then again, consider that Nvidia is capacity constrained. They can already sell all their chips. So do they want to be selling some of them with a 25% tax attached in a vainglorious quest for ‘market share’? The market might think Jensen Huang is selling out America without even getting more profit in return.

Another factor is that when the announcement was made, the Nasdaq ex-Nvidia didn’t blink, nor was there a substantial move in AMD or Intel. Selling China a lot of inference chips should be bad for American customers of Nvidia, given supply is limited, so that moves us towards either ‘this won’t happen at scale’ or ‘this was already priced in.’ I don’t give the market credit for fully pricing things like this in.

What happens next? Congress and others who think this is a no-good, very bad move for America will see how much they can mitigate the damage. Sales will only happen over time, so there are various ways to try and stop this from happening.
