The perfect New Year’s Eve comedy turns 30

There aren’t that many movies specifically set on New Year’s Eve, but one of the best is The Hudsucker Proxy (1994), Joel and Ethan Coen’s visually striking, affectionate homage to classic Hollywood screwball comedies. The film turned 30 this year, so it’s the perfect opportunity for a rewatch.

(WARNING: Spoilers below.)

The Coen brothers started writing the script for The Hudsucker Proxy when Joel was working as an assistant editor on Sam Raimi’s The Evil Dead (1981). Raimi ended up co-writing the script, as well as making a cameo appearance as a brainstorming marketing executive. The Coen brothers took their inspiration from the films of Preston Sturges and Frank Capra, among others, but the intent was never to satirize or parody those films. “It’s the case where, having seen those movies, we say, ‘They’re really fun—let’s do one!’ as opposed to, ‘They’re really fun—let’s comment upon them,’” Ethan Coen has said.

They finished the script in 1985, but at the time they were still small-time indie filmmakers. It wasn’t until the critical and commercial success of 1991’s Barton Fink that the Coen brothers had the juice in Hollywood to finally make The Hudsucker Proxy. Warner Bros. greenlit the project, and producer Joel Silver gave the brothers complete creative control, particularly over the final cut.

Norville Barnes (Tim Robbins) is an ambitious, idealistic recent graduate of a business college in Muncie, Indiana, who takes a job as a mailroom clerk at Hudsucker Industries in New York, intent on working his way to the top. That ascent happens much sooner than expected. On the same December day in 1958, the company’s founder and president, Waring Hudsucker (Charles Durning), leaps to his death from the boardroom on the 44th floor (not counting the mezzanine).

A meteoric rise

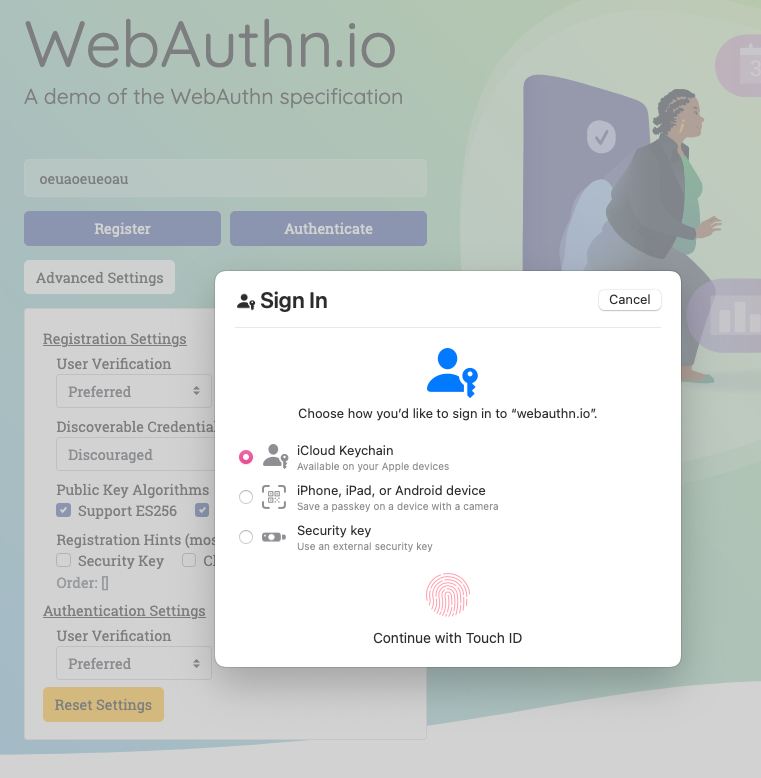

Norville Barnes (Tim Robbins) gets a job at Hudsucker Industries. Credit: Warner Bros.

The company’s bylaws dictate that Hudsucker’s shares be sold to the public after his death. To keep that from happening, board member Sidney Mussburger (Paul Newman) proposes they elect a patsy as the next president: someone so incompetent that he will spook investors and temporarily depress the stock, so the board can buy up controlling shares on the cheap. Enter Norville, who seizes the opportunity of delivering a Blue Letter to Mussburger to pitch a new product, represented by a simple circle drawn on a piece of paper: “You know… for kids!” Thinking he’s found his imbecilic patsy, Mussburger names Norville the new president.
