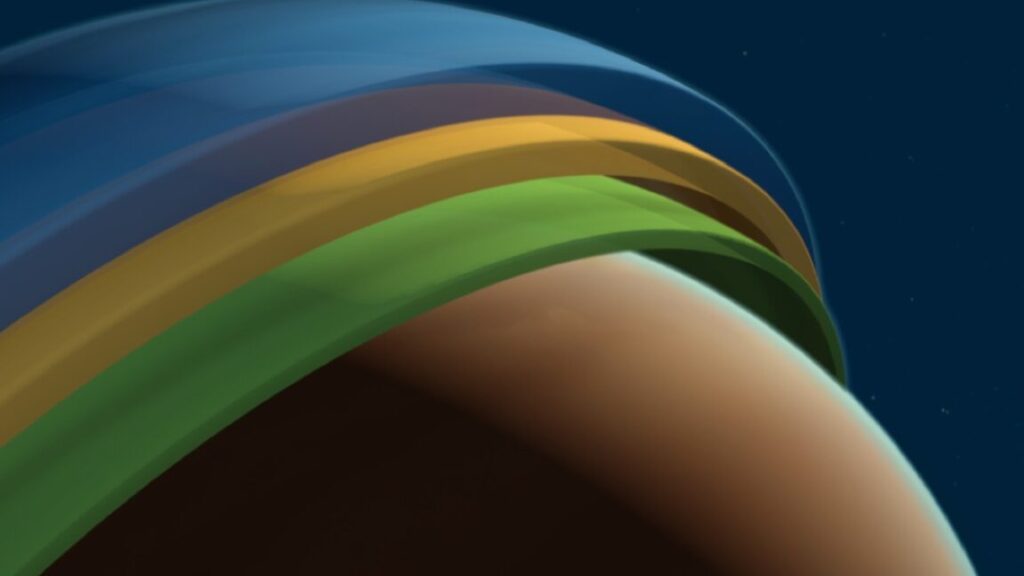

The revolution starts now with Andor S2 teaser

Diego Luna returns as Cassian in the forthcoming second season of Andor.

The first season of Andor, the Star Wars prequel series to Rogue One and A New Hope, earned critical raves for its gritty aesthetic and multilayered narrative rife with political intrigue. While ratings were a bit sluggish, they were good enough to win the series a second season, and Disney+ just dropped the first action-packed teaser trailer.

(Spoilers for S1 below.)

As previously reported, the story begins five years before the events of Rogue One, with the Empire's destruction of the homeworld of Cassian Andor (Diego Luna), and follows his transformation from a "revolution-averse" cynic into a major player in the nascent rebellion who is willing to sacrifice himself to save the galaxy. S1 left off with Cassian returning to Ferrix for the funeral of his adoptive mother, Maarva (Fiona Shaw), rescuing a friend from prison, and dodging an assassination attempt. A post-credits scene showed prisoners assembling the firing dish of the Death Star, which is still under construction.

According to the official longline, S2 "will see the characters and their relationships intensify as the horizon of war draws near and Cassian becomes a key player in the Rebel Alliance. Everyone will be tested and, as the stakes rise, the betrayals, sacrifices and conflicting agendas will become profound."

In addition to Luna, most of the main cast from S1 is returning: Genevieve O'Reilly as Mon Mothma, a senator of the Republic who helped found the Rebel Alliance; Adria Arjona as mechanic and black market dealer Bix Caleen; James McArdle as Caleen's boyfriend, Timm Karlo; Kyle Soller as Syril Karn, deputy inspector for the Preox-Morlana Authority; Stellan Skarsgård as Luthen Rael, an antiques dealer who is secretly part of the Rebel Alliance; Denise Gough as Dedra Meero, supervisor for the Imperial Security Bureau; Faye Marsay as Vel Sartha, a Rebel leader on the planet Aldhani; Varada Sethu as Cinta Kaz, another Aldhani Rebel; Elizabeth Dulau as Luthen's assistant Kleya; and Muhannad Bhaier as Wilmon, who runs the Repaak Salvyard.
