It’s monthly roundup time again, and it’s happily election-free.

Propaganda works, ancient empires edition. This includes the Roman Republic being less popular than the Roman Empire and people approving of Sparta, whereas Persia and Carthage get left behind. They’re no FDA.

Polling USA: Net Favorable Opinion Of:

Ancient Athens: +44%

Roman Empire: +30%

Ancient Sparta: +23%

Roman Republic: +26%

Carthage: +13%

Holy Roman Empire: +7%

Persian Empire: +1%

Visigoths: -7%

Huns: -29%

YouGov / June 6, 2024 / n=2205

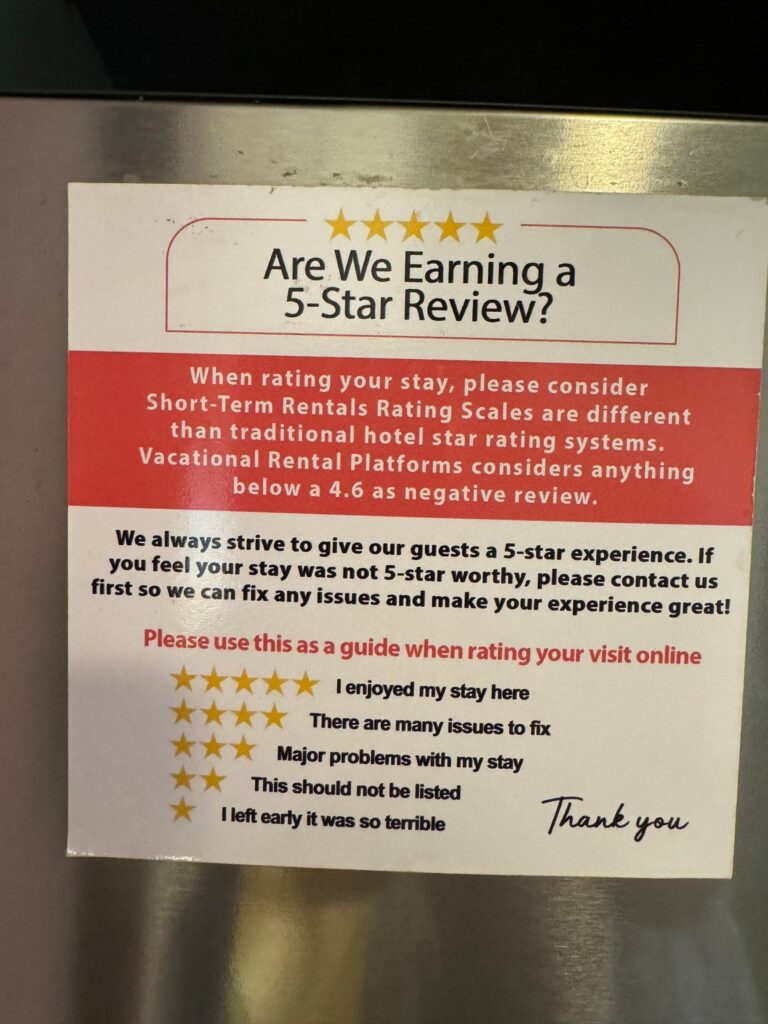

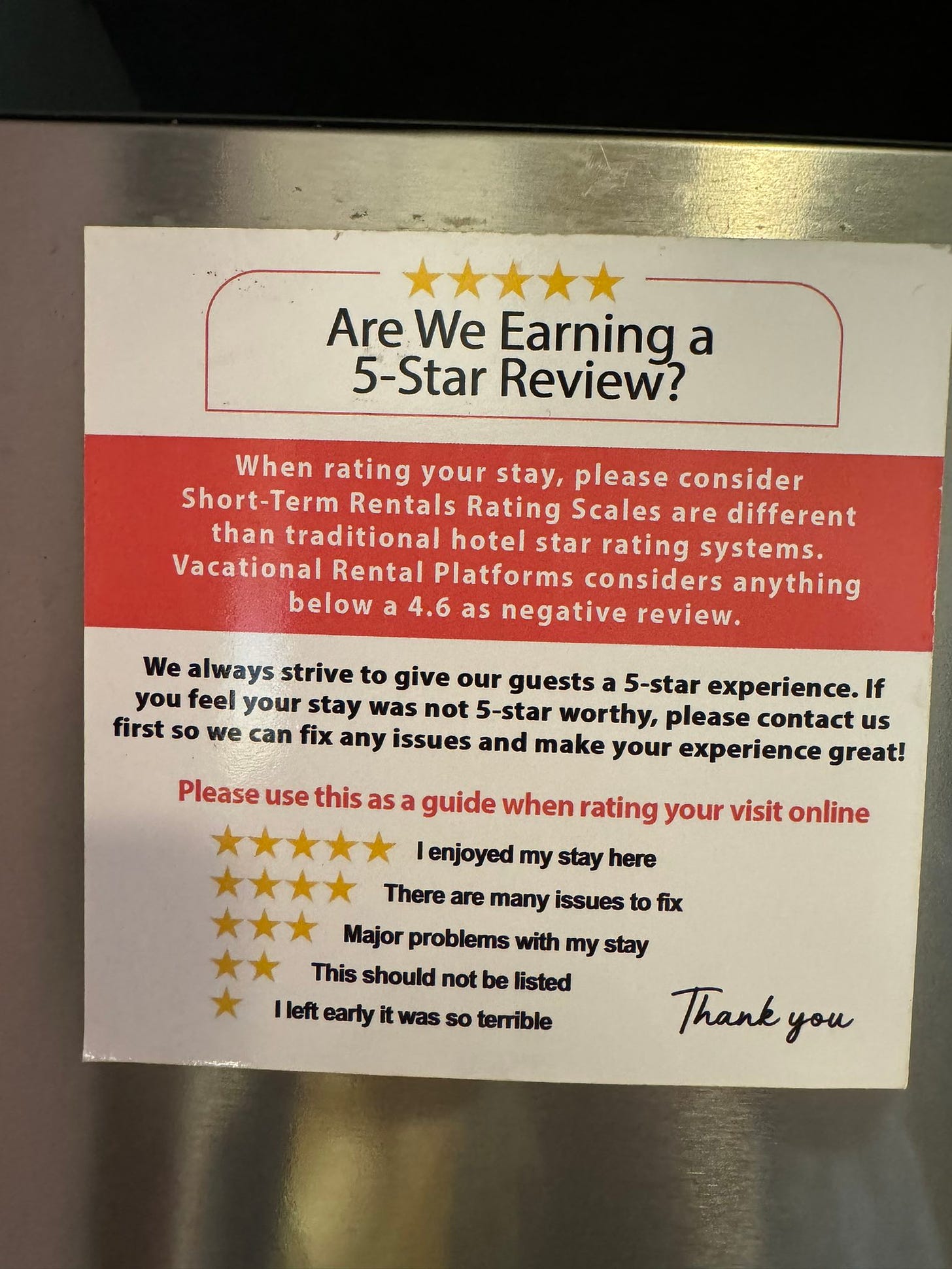

What do we do about all 5-star ratings collapsing the way Peter describes here?

Peter Wildeford: TBH I am pretty annoyed that when I rate stuff the options are:

“5 stars – everything was good enough I guess”

“4 stars – there was a serious problem”

“1-3 stars – I almost died”

I can’t express things going well!

I’d prefer something like:

5 stars – this went above/beyond, top 10%

4 stars – this met my expectations

3 stars – this was below my expectations but not terrible

2 stars – there was a serious problem

1 star – I almost died

Kitten: The rating economy for things like Airbnb, Uber etc. made a huge mistake when they used the five-star scale. You’ve got boomers all over the country who think that four stars means something was really good, when in fact it means there was something very wrong with the experience.

Driver got lost for 20 minutes and almost rear ended someone, four stars

Boomer reviewing their Airbnb:

This is one of the nicest places I have ever stayed, the decor could use a little updating, four stars.

A lot of people saying the boomers are right but not one of you mfers would even consider booking an Airbnb with a 3.5 rating because you know as well as I do that means there’s something really wrong with it.

Nobe: On Etsy you lose your “star seller” rating if it dips below 4.8. A couple of times I’ve gotten 4 stars and I’ve been beside myself wondering what I did wrong even when the comment is like “I love it, I’ll cherish it forever”

Moshe Yudkowsky: The first time I took an Uber, and rated a driver 3 (average), Uber wanted to know what was wrong. They corrupted their own metric.

Kate Kinard: I’m at an airbnb right now and this magnet is on the fridge as a reminder

⭐️⭐️⭐️⭐️= many issues to fix!

The problem is actually worse than this. Different people have different scales. A majority of people use the system where 4-stars means major issues, and many systems demand you maintain e.g. a 4.8. All you get is extreme negative selection.

Then there are others who think the default is 3 stars, 4 is good and 5 is exceptional.

Which is the better system, but not if everyone else is handing out 5s like candy, which means your rating is a function of who is rating you more than whether you did a good job. Your ‘negative selection’ is 50% someone who doesn’t know the rules.

This leads to perverse ‘worse is better’ situations, where you want products that draw in the audience that will use the lower scale, or you want something that will sometimes offend people and trigger 1s, such as being ‘too authentic’ or not focusing enough on service.
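To make the 'your rating is a function of who is rating you' point concrete, here is a minimal sketch with made-up numbers. The rater names, their rating histories and the `normalize` helper are all hypothetical, and the z-score adjustment is just one standard way to correct for scale differences, not something any platform is known to do.

```python
from statistics import mean, pstdev

# Hypothetical rating histories: each rater's past scores for other listings.
history = {
    "inflator": [5, 5, 5, 5, 4],    # 5 is the default, 4 signals a real problem
    "old_school": [3, 3, 4, 3, 2],  # 3 is the default, 4 means very good
}

def normalize(rater: str, score: int) -> float:
    """Express a score as standard deviations from the rater's own average."""
    past = history[rater]
    spread = pstdev(past)
    return (score - mean(past)) / spread if spread else 0.0

# The same raw 4 stars means opposite things depending on who gave it.
complaint = normalize("inflator", 4)    # below this rater's usual score
praise = normalize("old_school", 4)     # above this rater's usual score
print(complaint, praise)
```

The raw averages treat both 4s identically. Only the adjusted numbers show that one is a complaint and the other is praise.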

Thus this report, that says the Japanese somehow are using the good set of rules?

Mrs. C: I love the fact that in Japan you need to avoid 5 star things and look for 3-4 star places because Japanese people tend to use a 5 point scale sanely and it’s only foreigners giving 5 stars to everything, so a 5 star rating means “only foreigners go here”

Eliezer Yudkowsky: How the devil did Japan end up using 5-point scales sanely? I have a whole careful unpublished analysis of everything that goes wrong with 5-point rating systems; it hadn’t occurred to me that any other country would end up using them sanely!

What makes this even weirder is Japan is a place where people are taught never to tell someone no. One can imagine them being one of the places deepest in the 5-star-only trap. Instead, this seems almost like an escape valve, maybe? You don’t face the social pressure, there isn’t a clear ‘no’ involved, and suddenly you get to go nuts. Neat.

One place that escapes this trap even here are movie ratings. Everyone understands that a movie rating of 4/5 means the movie was very good, perhaps excellent. We get that the best movies are much better than a merely good movie, and this difference matters, you want active positive selection. It also helps that you are not passing judgment on a particular person or local business, and there is no social exchange where you feel under pressure to maximize the rating metric.

This helps explain why Rotten Tomatoes is so much worse than Metacritic and basically can only be used as negative selection – RT uses a combination of binaries, which is the wrong question to ask, whereas Metacritic translates each review into a number. It also hints at part of why old Netflix predictions were excellent, as they were based on a 5-star scale, versus today’s thumbs-based ratings, which then are combined with pushing their content and predicting what you’ll watch rather than how much you’ll like it.
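A small sketch of why a combination of binaries loses the information you want for positive selection. The two films and their critic scores are invented, the cutoff of 60 for a 'positive' review is an assumption, and a plain mean stands in here for Metacritic's weighted average.

```python
from statistics import mean

# Hypothetical critic scores on a 0-100 scale for two made-up films.
reviews = {
    "pretty_good": [65, 70, 62, 68, 66],
    "masterpiece": [95, 98, 92, 100, 96],
}

POSITIVE_CUTOFF = 60  # assumed threshold for counting a review as a thumbs up

def binary_score(scores):
    """Percentage of reviews that clear the cutoff (thumbs up vs. thumbs down)."""
    return 100 * sum(s >= POSITIVE_CUTOFF for s in scores) / len(scores)

def average_score(scores):
    """Plain mean of the numeric scores."""
    return mean(scores)

for title, scores in reviews.items():
    print(title, binary_score(scores), average_score(scores))
```

Both films come out at 100% on the binary measure, while the averages are 30 points apart. The binary version can tell you a film is not bad, but not whether it is great.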

This statement might sound strange but it seems pretty much true?

Liz: The fact that it’s cheaper to cook your own food is disturbing to me. like frequently even after accounting for your time. like cooking scales with number of people like crazy. there’s no reason for this to be the case. I don’t get it.

In the liztopia restaurants are high efficiency industrial organizations and making your own food is akin to having a hobby for gardening.

I literally opened a soylent right after posting this. i’m committed to the bit.

Gwern: The best explanation I’ve seen remains regulation and fixed costs: essentially, paternalistic goldplating of everything destroys all the advantages of eating out. Just consider how extremely illegal it would be to run a restaurant the way you run your kitchen. Or outlawing SRO.

Doing your own cooking has many nice benefits. You might enjoy cooking. You get to customize the food exactly how and when you like it, choose your ingredients, and enjoy it at home, and so on. The differential gives poorer people the opportunity to save money. I might go so far as to say that we might be better off for the fact that cooking at home is cheaper.

It’s still a statement about regulatory costs and requirements, essentially, that it is often also cheaper. In a sane world, cooking at home would be a luxury. Also in a sane world, we would have truly industrialized at least the cheap cooking at this point. Low end robot chefs now.

Variety covers studio efforts to counter ‘Toxic Fandom,’ where superfans get very angry and engage in a variety of hateful posts, often make threats and sometimes engage in review bombing. It seems this is supposedly due to ‘superfans,’ the most dedicated, who think something is going to destroy their precious memories forever. The latest strategy is to hire those exact superfans, so you know when you’re about to walk into this, and perhaps you can change course to avoid this.

The reactions covered in the past mostly share a common theme, which is that they are rather obviously pure racism or homophobia, or otherwise called various forms of ‘woke garbage.’ This is very distinct from what they cite as the original review bomb on Star Wars Episode IX, which I presume had nothing to do with either of these causes, and was due to the movie indeed betraying and destroying our childhoods by being bad.

The idea of bringing in superfans so you understand which past elements are iconic and important, versus which things you can change, makes sense. I actually think that’s a great idea: superfans can tell you if you are destroying the soul of the franchise, breaking a Shibboleth, or if your ideas flat out suck. That doesn’t mean you should or need to listen or care when they’re being racists.

Nathan Young offers Advice for Journalists, expressing horror at what seem to be the standard journalistic norms of quoting anything anyone says in private, out of context, without asking permission, with often misleading headlines, often without seeking to preserve meaning or even get the direct quote right, or to be at all numerate or aware of reasonable context for a fact and whether it is actually newsworthy. His conclusion is thus:

Nathan Young: Currently I deal with journalists like a cross between hostile witnesses and demonic lawyers. I read articles expecting to be misled or for facts to be withheld. And I talk to journalists only after invoking complex magics (the phrases I’ve mentioned) to stop them taking my information and spreading it without my permission. I would like to pretend I’m being hyperbolic, but I’m really not. I trust little news at first blush and approach conversations with even journalists I like with more care than most activities.

I will reiterate. I take more care talking to journalists than almost any other profession and have been stressed out or hurt by them more often than almost any group. Despite this many people think I am unreasonably careless or naïve. It is hard to stress how bad the reputation of journalists is amongst tech/rationalist people.

Is this the reputation you want?

Most people I know would express less harsh versions of the same essential position – when he says that the general reputation is this bad, he’s not kidding. Among those who have a history interacting with journalists, it tends to be even worse.

The problem is largely the standard tragedy of the commons – why should one journalist sacrifice their story to avoid giving journalists in general a bad name? There was a time when there were effective forms of such norm enforcement. That time has long passed, and personal reputations are insufficiently strong incentives here.

As my task has trended more towards a form of journalism, while I’ve gotten off light because it’s a special case and people I interact with do know I’m different, I’ve gotten a taste of the suspicion people have towards the profession.

So I’d like to take this time here to reassure everyone that I abide by a different code than the one Nathan Young describes in his post. I don’t think the word ‘journalist’ changes any of my moral or social obligations here. I don’t think that ‘the public has a right to know’ means I get to violate the confidence or preferences of those around me. Nor do I think that ‘technically we did not say off the record’ or ‘no takesies backsies’ means I am free to share private communications with anyone, or to publish them.

If there is something I am told in private, and I suspect you would have wanted to say it off the record, and we didn’t specify on the record, I will actively check. If you ask me to keep something a secret, I will. If you retroactively want to take something you said off the record, you can do that. I won’t publish something from a private communication unless I feel it was understood that I might do that, if unclear I will ask, and I will use standard common sense norms that respect privacy when considering what I say in other private conversations, and so on. I will also glomarize as necessary to avoid implicitly revealing whether I have hidden information I wouldn’t be able to share, and so on, as best I can, although nobody’s perfect at that.

I knew Stanford hated fun but wow, closing hiking trails when it’s 85 degrees outside?

It certainly seems as if Elon Musk is facing additional interference in regulatory requirements for launching his rockets, as a result of people disliking his political activities and decisions regarding Starlink. That seems very not okay, as in:

Alex Nieves (Politico): California officials cite Elon Musk’s politics in rejecting SpaceX launches.

Elon Musk’s tweets about the presidential election and spreading falsehoods about Hurricane Helene are endangering his ability to launch rockets off California’s central coast.

The California Coastal Commission on Thursday rejected the Air Force’s plan to give SpaceX permission to launch up to 50 rockets a year from Vandenberg Air Force Base in Santa Barbara County.

“Elon Musk is hopping about the country, spewing and tweeting political falsehoods and attacking FEMA while claiming his desire to help the hurricane victims with free Starlink access to the internet,” Commissioner Gretchen Newsom said at the meeting in San Diego.

…

“I really appreciate the work of the Space Force,” said Commission Chair Caryl Hart. “But here we’re dealing with a company, the head of which has aggressively injected himself into the presidential race and he’s managed a company in a way that was just described by Commissioner Newsom that I find to be very disturbing.”

There is also discussion about them being ‘disrespected’ by the Space Force. There are some legitimate issues involved as well, but this seems like a confession of regulators punishing Elon Musk for his political speech and actions?

I mean, I guess I appreciate that He Admit It.

Palmer Luckey: California citing Elon’s personal political activity in denying permission for rocket launches is obviously illegal, but the crazier thing IMO is how they cite his refusal to activate Starlink in Russian territory at the request of Ukraine. Doing so would have been a crime!

I do not think those involved have any idea the amount of damage such actions do, either to our prosperity – SpaceX is important in a very simple and direct way, at least in worlds where AI doesn’t render it moot – and even more than that the damage to our politics and government. If you give people this kind of clear example, do not act surprised when they turn around and do similar things to you, or consider your entire enterprise illegitimate.

That is on top of the standard ‘regulators only have reason to say no’ issues.

Roon: In a good world faa would have an orientation where they get credit for and take pride in the starship launch.

Ross Rheingans-Yoo: In a good world every regulator would get credit for letting the successes through – balanced by equal blame for harmful failures – & those two incentives would be substantially stronger than the push to become an omniregulator using their perch to push a kitchen sink of things.

In other Elon Musk news: Starlink proved extremely useful in the wake of recent storms, with other internet access out indefinitely. It was also used by many first responders. Seems quite reasonable for many to have a Starlink terminal on hand purely as a backup.

An argument that all the bad service you are getting is a sign of a better world. It’s cost disease. We are so rich that labor costs more money, and good service is labor intensive, so the bad service is a good sign. Remember when many households had servants? Now that’s good service, but you don’t want that world back.

The obvious counterargument is that when you go to places that are poor, you usually get terrible service. At one point I would periodically visit the Caribbean for work, and the worst thing about it was that the service everywhere was outrageously terrible, as in your meal at a restaurant typically takes an extra hour or two. I couldn’t take it. European service is often also very slow, and rural service tends to be relatively slow. Whereas in places in America where people cost the most to employ, like New York City, the service is usually quite good.

There’s several forces at work here.

- We are richer, so labor costs more, so we don’t want to burn it on service.
- We are richer in some places, so we value our time and thus good service more, and are willing to pay a bit more to get it.
- We are richer in some places, in part because we have a culture that values good service and general hard work and not wasting time, so service is much better than in places with different values – at least by our own standards.
- We are richer in part due to ‘algorithmic improvements,’ and greater productivity, and knowing how to offer things like good service more efficiently. So it is then correct to buy more and better service, and people know what to offer.
- In particular: Servants provided excellent service in some ways, but were super inefficient. Mostly they ended up standing or sitting around not doing much, because you mostly needed them in high leverage spots for short periods. But we didn’t have a way to hire people to do things for you only when you needed them. Now we do. So you get to have most of the same luxury and service, for a fraction of the employment.

I think I actually get excellent service compared to the past, for a huge variety of things, and for many of the places I don’t it is because technology and the internet are taking away the need for such service. When I go to places more like the past, I don’t think the service is better – I reliably think the service is worse. I expect the actual past is the same, the people around you were cheaper to hire but relatively useless. Yes, you got ‘white glove service’ but why do I want people wearing white gloves?

Like Rob Bensinger here, I am a fan of Matt Yglesias and his campaign of ‘the thing you said is not literally true and I’m going to keep pointing that out.’ The question is when it is and isn’t worth taking the space and time to point out who is Wrong on the Internet, especially when doing politics.

Large study finds ability to concentrate is actually increasing in adults? This seems like a moment to defy the data, or at least disregard it in practice, there’s no way this can be real, right? It certainly does not match my lived experience of myself or others. Many said the graphs and data involved looked like noise. But that too would be great news, as ‘things are about the same’ would greatly exceed expectations.

Perhaps the right way to think about attention spans is that we have low intention tolerance, high willingness to context switch and ubiquitous distractions. It takes a lot more to hold our attention than it used to. Do not waste our time, the youth will not tolerate this. That is compatible with hyperfocusing on something sufficiently engaging, especially once buy-in has been achieved, even for very extended periods (see: This entire blog!), but you have to earn it.

Paul Graham asks in a new essay, when should you do what you love?

He starts with the obvious question. Does what you love offer good chances of success? Does it pay the bills? If what you love is (his examples) finding good trades or running a software company, of course you pursue what you love. If it’s playing football, it’s going to be rough.

He notes a kind of midwit-meme curve as one key factor:

- If you need a small amount of money, you can afford to do what you love.
- If you need a large amount of money, you need to do what pays more.
- If you need an epic amount of money, you will want to found a startup and will need unique insight, so you have to gamble on what you love.

The third consideration is, what do you actually want to do? He advises trying to figure this out right now, not to wait until after college (or for any other reason). The sooner you start the better, so investigate now if you are uncertain. A key trick is, look at the people doing what you might do, and ask if you want to turn into one of them.

If you can’t resolve the uncertainty, he says, try to give yourself options, where you can more easily switch tracks later.

This seems like one of the Obvious True and Useful Paul Graham Essays. These seem to be the correct considerations, in general, when deciding what to work on, if your central goal is some combination of ‘make money’ and ‘have a good life experience making it.’

The most obvious thing missing is the question of Doing Good. If you value having positive impact on the world, that brings in additional considerations.

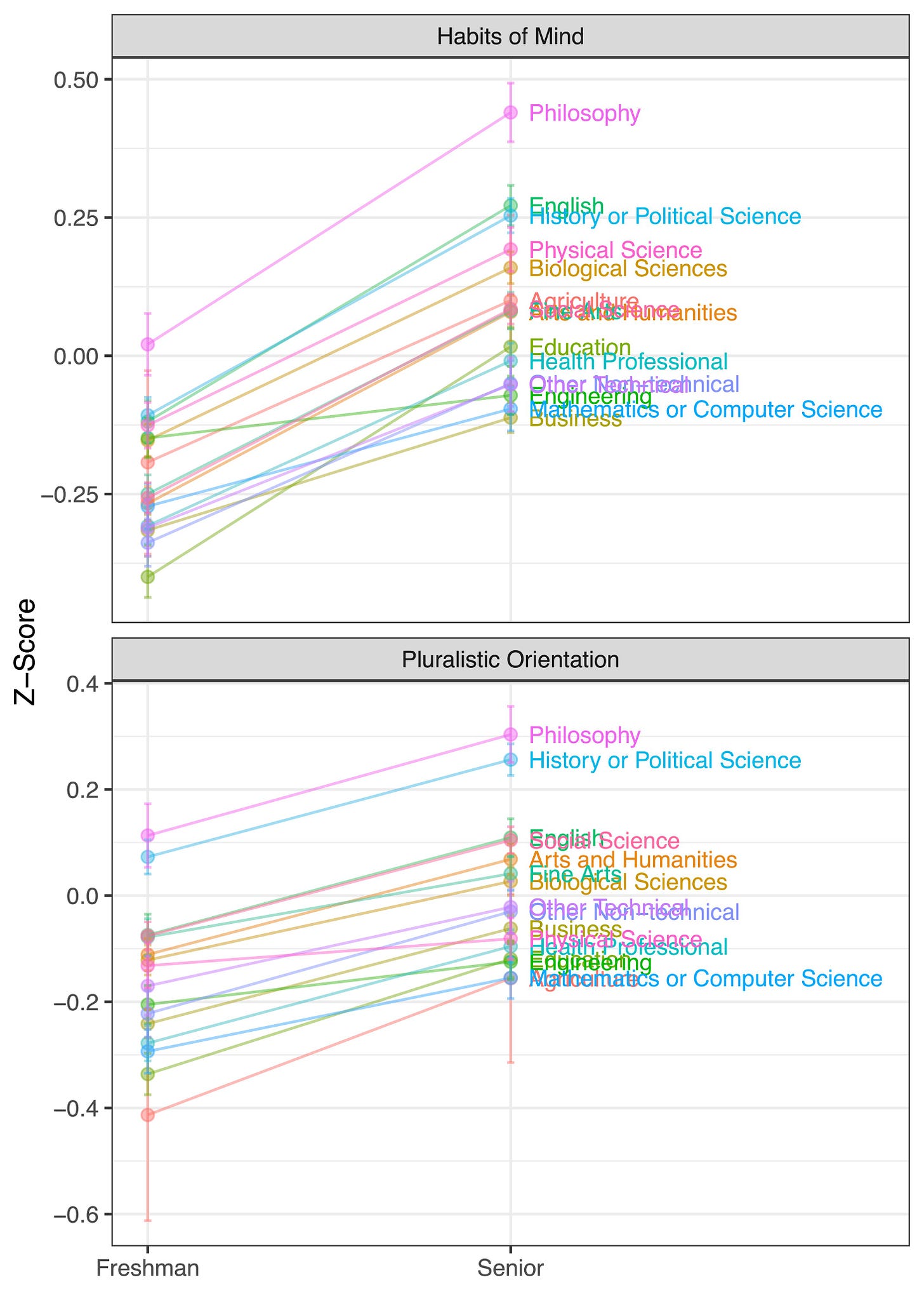

A claim that studying philosophy is intellectually useful, but I think it’s a mistake?

Michael Prinzing: Philosophers say that studying philosophy makes people more rigorous, careful thinkers. But is that true?

In a large dataset (N = 122,352 students) @daft_bookworm and I find evidence that it is!

In freshman year, Phil majors are more inclined than other students to support their views with logical arguments, consider alternative views, evaluate the quality of evidence, etc. But, Phil majors *also* show more growth in these tendencies than students in other majors.

This suggests that philosophy attracts people who are already rigorous, careful thinkers, but also trains people to be better thinkers.

Stefan Schubert: Seems worth noticing that they’re self-report measures and that the differences are small (one measure)/non-existent (the other)

Michael Prinzing: That’s right! Particularly in the comparison with an aggregate of all non-philosophy majors, the results are not terribly boosterish. But, in the comparison with more fine-grained groups of majors, it’s striking how much philosophy stands out.

barbarous: How come we find mathematics & computer science in the bottom of these? Wouldn’t we expect them to have higher baseline and higher improvement in rigor?

My actual guess is that the math and computer science people hold themselves to higher epistemic standards, that or the test is measuring the wrong thing.

Except this is their graph? The difference in growth is indeed very small, with only one line that isn’t going up like the others.

If anything, it’s Education that is the big winner on the top graph, taking a low base and making up ground. And given it’s self reports, there’s nothing like an undergraduate philosophy major to think they are practicing better thinking habits.

I mean, we can eyeball that, and the slopes are mostly the same across most of the majors?

Facial tics predict future police cadet promotions at every stage, AUC score of 0.7. Importantly, with deliberate practice one can alter such facial tics. Would changing the tics actually change perceptions, even when interacting repeatedly in high stakes situations as police do? The article is gated, but based on what they do tell us I find it unlikely. Yes, the tics are the best information available in this test and are predictive, but that does not mean they are the driving force. But it does seem worth it to fix any such tics if you can?

Paul Graham: Renaming Twitter X doesn’t seem to have damaged it. But it doesn’t seem to have helped it either. So it was a waste of time and a domain name.

I disagree. You know it’s a stupid renaming when everyone does their best to keep using the old name anyway. I can’t think of anyone in real life that thinks ‘X’ isn’t a deeply stupid name, and I know many that got less inclined to use the product. So I think renaming Twitter to X absolutely damaged it and drove people away and pissed them off. The question is one of magnitude – I don’t think this did enough damage to be a crisis, but it did enough to hurt, in addition to being a distraction and cost.

Twitter ends use of bold and other formatting in the main timeline, because an increasing number of accounts whoring themselves out for engagement were increasingly using more and more bold and italics. Kudos to Elon Musk for responding to an exponential at the right time. Soon it was going to be everywhere, because it was working, and those of us who find it awful weren’t punishing it enough to matter to the numbers. There’s a time and place for selective and sparing use of such formatting, but this has now been officially Ruined For Everyone.

It seems people keep trying to make the For You page on Twitter happen?

Emmett Shear: Anyone else’s For You start filling up with extreme slop nonsense, often political? “Not interested” x20 fixes it for a day but then it’s back again. It’s getting bad enough to make me stop using Twitter…frustrating because the good content is still good, the app just hides it.

TracingWoods: it’s cyclical for me but the past couple of weeks have been fine. feels like a specific switch flips occasionally, and no amount of “not interested” stops it. it should rotate back into sanity for you soon enough.

I checked for journalist purposes, and my For You page looks… exactly like my Following feed, plus some similar things that I’m not technically following and aren’t in lists especially when paired with interactions with those who I do follow, except the For You stuff is scrambled so you can’t rely on it. So good job me, I suppose? It still doesn’t do anything useful for me.

A new paper on ruining it for everyone, social media edition, is called ‘Inside the funhouse mirror factory: How social media distorts perceptions of norms.’ Or, as an author puts it, ‘social media is not reality,’ who knew?

Online discussions are dominated by a surprisingly small, extremely vocal, and non-representative minority. Research on social media has found that, while only 3% of active accounts are toxic, they produce 33% of all content. Furthermore, 74% of all online conflicts are started in just 1% of communities, and 0.1% of users shared 80% of fake news. Not only does this extreme minority stir discontent, spread misinformation, and spark outrage online, they also bias the meta-perceptions of most users who passively “lurk” online.

The strategy absolutely works. In AI debates on Twitter, that 3% toxic minority works hard to give the impression that their position is what everyone thinks, promote polarization and so on. From what I can tell politics has it that much worse.

Indeed, 97% of political posts from Twitter/X come from just 10% of the most active users on social media.

That’s a weird case, because most Twitter users are mostly or entirely lurkers, so 10% of accounts plausibly includes most posts period.

The motivation for all this is obvious, across sides and topics. If you have a moderate opinion, why would you post about that, especially with all that polarized hostility? There are plenty of places I have moderate views, and then I don’t talk about them on social media (or here, mostly) because why would I need to do that?

One of the big shifts in AI is the rise of more efficient Ruining It For Everyone. Where previously the bad actors were rate limited and had substantial marginal costs, those limitations fall away, as do various norms keeping people behaving decently. Systems that could take a certain amount of such stress will stop working, and we’ll need to make everything more robust against bad actors.

The great news is that if it’s a tiny group ruining it for everyone, you can block them.

Yishan: “0.1% of users share 80% of fake news”

After that document leak about how Russia authors its fake news, I’ve been able to more easily spot disinfo accounts and just block them from my feed.

I only needed to do this for a couple weeks and my TL quality improved markedly. There’s still plenty of opinion from right and left, but way less of the “shit-stirring hysteria” variety.

If you are wondering what leak it was, it’s the one described in this thread.

You’ll see that the main thrust is to exploit: “They are afraid of losing the American way of life and the ‘American dream.’ It is these sentiments that should be exploited,”

In the quoted screenshot, the key element is at the bottom: – use a minimum of fake news and a maximum of realistic information – continuously repeat that this is what is really happening, but the official media will never tell you or show it to you.

The recent port strike and Hurricane Helene were great for this because whenever there’s a big event, the disinfo accounts appear to hyper-focus on exploiting it, so a lot of their posts get a lot of circulation, and you can start to spot them.

The pattern you look for is:

- The post often talks about how you’re not being told the truth, or it’s been hidden from you. They’re very obvious with it. A more subtle way is that they end with a question asking if there is something sinister going on.
- the second thing is that it does cite a bunch of real/realistic (or already well-known facts) and then connects it to some new claim, often one you haven’t heard any other substantiation for. This could be real, but it’s the cluster of this plus the other points.
- The third is that the author doesn’t seem to be a real person. Now, this is tough, because there are plenty of real anon accounts. but it’s a sort of thing you can tell from a combination of the username (one that seems weird or has a lot of numbers, or doesn’t fit the persona presented), the picture isn’t a real person, the persona is a little too “bright”, or the character implied by the bio doesn’t seem like the kind of person who’d suddenly care a lot about this issue. This one requires a bit of intuition.

None of these things is by itself conclusive (and I might have blocked some false positives), but once you start knowing what to spot, there’s a certain kind of post and when you look at the account, it has certain characteristics that stick out.

It just doesn’t look like your normal extreme right-wing or extreme left-wing real person. People like that tend to make more throwaway (“I hate this! Can’t believe Harris/Elon/Trump is so awful!”) posts, not carefully-styled media-delicious posts, if that makes sense.

I mostly prefer to toss out anyone who spends their social media expressing political opinions, except for an intentional politics list (that I should update some time soon, it’s getting pretty old).

What Yishan is doing sounds like it would be effective at scale if sustained, but you’d have to put in the work. And it’s a shame that he has to do it all himself. Ideally an AI could help you do that (someone build this!) but at minimum you’d want a group of people who can share such blocks, so if someone hits critical mass then by default they get blocked throughout. You could provide insurance in various forms – e.g. if you’ve interacted with them yourself or they’re at least a 2nd-level follow, then you can exempt those accounts, and so on. Sky’s the limit, we have lots of options.
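The shared-blocks idea is simple enough to sketch. This is a rough illustration under stated assumptions, not a design for any real platform: the `CRITICAL_MASS` threshold, the `default_blocks` function and all the account names are hypothetical, and a real version would need to pull follow and block data from wherever it lives.

```python
from collections import Counter

CRITICAL_MASS = 3  # assumed: how many group members must block an account

def default_blocks(group_blocks, my_follows, my_interactions, second_level_follows):
    """Return the accounts one user would block by default.

    group_blocks maps each group member to the set of accounts they blocked.
    Accounts the user follows, has interacted with, or that are 2nd-level
    follows are exempt (the 'insurance' described above).
    """
    counts = Counter(acct for blocked in group_blocks.values() for acct in blocked)
    exempt = my_follows | my_interactions | second_level_follows
    return {
        acct for acct, n in counts.items()
        if n >= CRITICAL_MASS and acct not in exempt
    }

# Hypothetical data: three group members sharing their block lists.
group_blocks = {
    "alice": {"spam1", "spam2", "friend_of_friend"},
    "bob": {"spam1", "friend_of_friend"},
    "carol": {"spam1", "spam2", "friend_of_friend"},
}
result = default_blocks(
    group_blocks,
    my_follows={"pal"},
    my_interactions=set(),
    second_level_follows={"friend_of_friend"},
)
print(result)
```

Here `spam1` hits critical mass and gets blocked, `spam2` falls one block short, and `friend_of_friend` is spared by the 2nd-level-follow exemption even though all three members blocked it.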

Maybe we can quickly make an app for that?

Tenobrus: i have a lotta mutuals who i would love to follow but be able to mute some semantic subset of their posts. like give me this guy but without the dumb politics, or that girl but without the thirst traps, or that tech bro but without the e/acc.

This seems super doable, on the ‘I am tempted to build an MVP myself’ level. I asked o1-preview, and it called it ambitious but agreed it could be done, and even for a relatively not great programmer suggested maybe 30-50 hours to an MVP. Who’s in?

Or maybe it’s even easier?

Jay Van Bavel: Unfollowing toxic social media influencers makes people less hostile!

The list includes accounts like CNN, so your definition of ‘hyperpartisan’ may vary, but it doesn’t seem crazy and it worked.

If you want to fix the social media platforms themselves to avoid the toxic patterns, you have to fix the incentives, and that means you will need law. Even if all the companies were to get together to agree not to use ‘rage maximizers’ or various forms of engagement farming, that would be antitrust. Without an agreement, they don’t have much choice. So, law, except First Amendment and the other real concerns about using a law there.

My best proposal continues to be a law mandating that large social media platforms offer access to alternative interfaces and forms of content filtering and selection. Let people choose friendly options if they want that.

Otherwise, of course you are going to get things like TikTok.

NPR reports on internal TikTok communications where they spoke candidly about the dangers for children on the app, exploiting a mistaken failure to redact that information from one of the lawsuits against TikTok.

As TikTok’s 170 million U.S. users can attest, the platform’s hyper-personalized algorithm can be so engaging it becomes difficult to close the app. TikTok determined the precise amount of viewing it takes for someone to form a habit: 260 videos. After that, according to state investigators, a user “is likely to become addicted to the platform.”

In the previously redacted portion of the suit, Kentucky authorities say: “While this may seem substantial, TikTok videos can be as short as 8 seconds and are played for viewers in rapid-fire succession, automatically,” the investigators wrote. “Thus, in under 35 minutes, an average user is likely to become addicted to the platform.”

They also note that the tool that limits time usage, which defaulted to a rather large 60 minutes a day, had almost no impact on usage in tests (108.5 min/day → 107).
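The arithmetic behind both figures is easy to check. A minimal sketch, assuming every one of the 260 videos runs the 8-second minimum (which is what the 35-minute figure appears to assume, so it is a floor rather than a typical case); the variable names are mine:

```python
# Habit-formation figure from the suit: 260 videos, as short as 8 seconds each.
videos_to_habit = 260
shortest_video_seconds = 8
minutes_to_habit = videos_to_habit * shortest_video_seconds / 60

# Screen-time tool test: 108.5 min/day before, 107 min/day after.
minutes_before = 108.5
minutes_after = 107.0
reduction_pct = (minutes_before - minutes_after) / minutes_before * 100

print(f"Minimum time to 260 videos: {minutes_to_habit:.1f} minutes")
print(f"Usage reduction from the limit tool: {reduction_pct:.1f}%")
```

That is about 34.7 minutes at the shortest video length, and a usage drop of about 1.4%, or a minute and a half per day.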

One document shows one TikTok project manager saying, “Our goal is not to reduce the time spent.”

Well, yes, obviously. In general it’s good to get confirmation on obvious things, like that TikTok was demoting relatively unattractive people in its feeds, I mean come on. And yes, if 95% (!) of smartphone users under 17 are on TikTok, usually for extended periods, that will exclude other opportunities for them.

And yes, the algorithm will trap you into some terrible stuff, that’s what works.

During one internal safety presentation in 2020, employees warned the app “can serve potentially harmful content expeditiously.” TikTok conducted internal experiments with test accounts to see how quickly they descend into negative filter bubbles.

“After following several ‘painhub’ and ‘sadnotes’ accounts, it took me 20 mins to drop into ‘negative’ filter bubble,” one employee wrote. “The intensive density of negative content makes me lower down mood and increase my sadness feelings though I am in a high spirit in my recent life.”

Another employee said, “there are a lot of videos mentioning suicide,” including one asking, “If you could kill yourself without hurting anybody would you?”

In particular it seems moderation missed self-harm and eating disorders, but also:

TikTok acknowledges internally that it has substantial “leakage” rates of violating content that’s not removed. Those leakage rates include: 35.71% of “Normalization of Pedophilia;” 33.33% of “Minor Sexual Solicitation;” 39.13% of “Minor Physical Abuse;” 30.36% of “leading minors off platform;” 50% of “Glorification of Minor Sexual Assault;” and 100% of “Fetishizing Minors.”

None of this is new or surprising. I affirm that I believe we should, indeed, require that TikTok ownership be transferred, knowing that is probably a de facto ban.

The obvious question is, in the age of multimodal AI, can we dramatically improve on at least this part of the problem? TikTok might be happy to serve up an endless string of anorexia videos, but I do not think they want to be encouraging sexual predators. In addition to being really awful, it is also very bad for business. I would predict that it would take less than a week to get a fine-tune of Llama 3.2, based on feeding it previously flagged and reviewed videos as the fine-tune data, that would do much better than these rates at identifying violating TikTok videos. You could check every video, or at least every video that would otherwise get non-trivial play counts.

Old man asks for help transferring his contacts, family realizes he has sorted his contacts alphabetically by friendship tier and not all of them are in the tier they would expect.

Lu In Alaska: Stop what you’re doing and read the following:

All the kids and in-laws and grands have met up for breakfast at my geriatric dad’s house. My sisters are here. Their boys are here. We are eating breakfast. My dad asks for help transferring his contacts into his new phone.

Friends. We discovered together that my dad has his contacts in a tier list of his feelings not alphabetically. We are absolutely *beside ourselves* reviewing his tiers off as a whole family. Crying. Gasping. Wheezing. His ex-wife who is visiting today is C tier but his first wife’s sister is B tier THE DRAMA.

So like my name is in as ALu. His brother-in-law is BJim. He is rating us. I am DYING. Someone find CAnn she’s going to be pissed. Let’s sit back and watch.

The kids made A tier what a relief. Should be A+Lu

I love this, and also this seems kind of smart (also hilarious) given how many contacts one inevitably gathers? I have 8 contacts that are not me and that begin with Z, and 7 that begin with Y. You get a ‘favorites’ page, but you only get one. You can use labels, but the interface for them is awkward.

Seriously, how hard is it to ensure this particular autocorrect doesn’t happen?

Cookingwong: The fact that my phone autocorrects “yeah np” to “yeah no” has caused 3 divorces, 2 gang wars, 11 failed hostage negotiations, and $54 billion loss in GDP.

‘Np’ is a standard thing to say, yet phones often think it is a typo and autocorrect it to its exact opposite. Can someone please ensure that ‘np’ gets added to the list of things that do not get corrected?

Apple is working on smart glasses that would make use of Vision Pro’s technology, aiming for a 2027 launch, along with potential camera-equipped AirPods. Apple essentially forces you to pick a side, either in or out, so when the Vision Pro came out I was considering whether to switch entirely to their products, and concluded that the device wasn’t ready. But some version of it or of smart glasses will be awesome when someone finally pulls them off properly, the question is when and who.

There is the theory that the tech industry is still in California because not enforcing non-competes is more important than everything else combined. I don’t doubt it helps, but also companies can simply not require such agreements at this point? I think it’s mostly about path dependence, network effects and lock-in.

What is important in a hotel room?

Auren Hoffman: things all hotel rooms should have (but don’t): MUCH more light. room key from phone. SUPER fast wifi. tons of free bottled water. outlets every few feet. what else?

Sheel Mohnot: blackout curtains

a single button to turn off every light in the room

check in via kiosk

Anders Sandberg: A desk, a hairdryer.

Humberto: 1. Complete blackout 2. 0 noise/ shutdown everything including the fucking refrigerator hidden inside a cabinet but still audible 3. Enough space for a regular sized human to do some push ups 4. Laundry bags (can be paper) 5. I was going to say an AirPlay compatible tv but clearly optional this one.

Ian Schafer: Mag/Qi phone charging stand.

Emily Mason: USB and USB_C fast charging ports sockets (and a few cords at the desk).

The answers are obvious if you ask around, and most of them are cheap to implement.

My list at this point of what I care about that can plausibly be missing is something like this, roughly in order:

- Moderately comfortable bed or better. Will pay for quality here.
- Sufficient pillows and blankets.
- Blackout curtains, no lights you cannot easily turn off. No noise.
- Excellent wi-fi.
- AC/heat that you can adjust reasonably.
- Desk with good chair.
- Access to good breakfast, either in hotel or within an easy walk.
- Decent exercise room, which mostly means weights and a bench.
- Outlets on all sides of the bed, and at desk, ideally actual ports and chargers.
- Access to good free water, if tap is bad there then bottled is necessary.
- TV with usable HDMI port, way to stream to it, easy access to streaming services.
- Refrigerator with space to put things.
- Views are a nice to have.

The UK to require all chickens be registered with the state, with criminal penalties.

City of Casselberry warns storm victims not to repair fences without proper permits.

The FAA shut down flights bringing hurricane aid into Western North Carolina, closing the air space, citing the need for full control. It’s possible this actually makes sense, but I am very skeptical.

California decides to ‘ban sell-by dates’ by which they mean they’re going to require you to split that into two distinct numbers or else:

Merlyn Miller (Food and Wine): The changes will take effect starting on July 1, 2026, and impact all manufacturers, processors, and retailers of food for human consumption. To adhere with the requisite language outlined, any food products with a date label — with the exception of infant formula, eggs, beer, and malt beverages — must state “Best if Used By” to indicate peak quality, and “Use By” to designate food safety. By reducing food waste, the legislation (Assembly Bill No. 660) may ultimately save consumers money and combat climate change too.

It’s so California to say you are ‘banning X’ and instead require a second X.

The concern seems to be that some people would think they needed to throw food out if it was past its expiration date, leading to ‘food waste.’ But wasn’t that exactly what the label was for and what it meant? So won’t this mean you’ll simply have to add a second earlier date for ‘peak quality,’ and some people will then throw out anything past that date too? Also, isn’t ‘peak quality’ almost always ‘the day or even minute we made this?’

Who is going to buy things that are past ‘peak quality’ but not expired? Are stores going to have to start discounting such items?

Therefore I predict this new law net increases both confusion and food waste.

US Government mandates companies create interception portals so they can wiretap Americans when needed. Chinese hackers compromise the resulting systems. Whoops.

Timothy Lee notes that not only are injuries from Waymo crashes 70% less common per passenger mile than for human drivers, the human drivers are almost always at fault when the Waymo accidents do happen.

Joe Biden preparing a ban on Russian and Chinese self-driving car technology, fearing that the cars might suddenly do what the Russians or Chinese want them to do.

I have now finished the TV series UnREAL. The news is good, and there are now seven shows in my tier 1. My guess is this is my new #5 show of all time. Here’s the minimally spoilerific pitch: They’re producing The Bachelor, and also each other, by any means necessary, and they’re all horrible people.

I got curious enough afterwards to actually watch The Bachelor, which turns out to be an excellent new show to put on during workouts and is better for having watched UnREAL first, but very much will not be joining the top tiers. Its biggest issue is that it’s largely the same every season so I’ll probably tire of it soon. But full strategic analysis is likely on the way, because if I’m watching anyway then there’s a lot to learn.

A teaser note: Everlasting, the version on UnREAL, is clearly superior to The Bachelor. There are some really good ideas there, and also the producers on The Bachelor are way too lazy. Go out there and actually produce more, and make better editing decisions.

I can also report that Nobody Wants This is indeed poorly named. You’ll want this.

I continue to enter my movie reviews at Letterboxd, but also want to do some additional discussion here this month.

We start with the Scott Sumner movie reviews for Q3, along with additional thoughts from him, especially about appreciating films where ‘nothing is happening.’ This is closely linked to his strong dislike of Hollywood movies, where something is always happening, even if that something is nothing. The audience insists upon it.

This was the second month I entered Scott’s ratings and films into a spreadsheet. Something jumped out quite a bit. Then afterwards, I discovered Scott’s reviews have all been compiled already.

Last quarter his lowest rated new film, a 2.6, was Challengers. He said he knew he’d made a mistake before the previews even finished and definitely after a few minutes. Scott values different things than I do but this was the first time I’ve said ‘no Scott Sumner, your rating is objectively wrong here.’

This quarter his lowest rating, a truly dismal 1.5, was for John Wick, with it being his turn to say ‘nothing happens’ and wondering if it was supposed to be a parody, which it very much isn’t.

There’s a strange kind of mirror here? Scott loves cinematography, and long purposeful silences, painting pictures, and great acting. I’m all for all of that, when it’s done well, although with less tolerance for how much time you can take – if you’re going to do a lot of meandering you need to be really good.

So when I finally this month watched The Godfather without falling asleep while trying (cause if I like Megalopolis, I really have no excuse) I see how it is in Scott’s system an amazingly great film. I definitely appreciated it on that level. But I also did notice why I’d previously bounced off, and also at least two major plot holes where plot-central decisions make no sense, and I noticed I very much disliked what the movie was trying to whisper to us. In the end, yeah I gave it a 4.0, but it felt like work, or cultural research, and I notice I feel like I ‘should’ watch Part II but I don’t actually want to do it.

Then on the flip side there’s not only the simple joys of the Hollywood picture, there’s the ability to extract what is actually interesting and the questions being asked, behind all that, if one pays attention.

In the case of John Wick, I wrote a post about the first 3 John Wick movies, following up with my review of John Wick 4 here, and I’d be curious what Scott thinks of that explanation. That John Wick exists in a special universe, with a unique economy and set of norms and laws, and you perhaps come for the violence but you stay for the world building. Also, I would add, how people react to the concept of the unstoppable force – the idea that in-universe people know that Wick is probably going to take down those 100 people, if he sets his mind to it, so what do you do?

Scott’s write-up indicates he didn’t see any of that.

Similarly, the recent movie getting the lowest rating this quarter from Scott was Megalopolis, at 3.0 out of his 4, the minimum to be worth watching, whereas I have it at 4.5 out of 5. Scott’s 3 is still a lot higher than the public, and Scott says he didn’t understand the plot and was largely dismissive of the results, but he admired the ambition and thought it was worth seeing for that. Whereas to me, yes a lot of it is ‘on the nose’ and the thing is a mess but if Scott Sumner says he didn’t get what the central conflict was about beyond vague senses then how can it be ‘too on the nose’?

I seriously worry that we live in a society where people somehow find Megalopolis uninteresting, and don’t see the ideas in front of their face or approve of or care for those ideas even if they did. And I worry such a society is filled, as the film notes, with people who no longer believe in it and in the future, and thus will inevitably fall – a New Rome, indeed. In some sense, the reaction to the film, people rejecting the message, makes the message that much more clear.

Discussion question: Should you date or invest in anyone who disliked Megalopolis?

I then went and checked out the compilation of Scott’s scores. The world of movies is so large. I haven’t seen any of his 4.0s. From his 3.9s, the only one I saw and remember was Harakiri, which was because I was testing the top of the Letterboxd ratings (with mixed results for that strategy overall), and for my taste I only got to 4.5 and couldn’t quite get to 5, by his scale he is clearly correct. From his 3.8s I’m confident I’ve seen Traffic, The Empire Strikes Back, The Big Lebowski, No Country for Old Men and The Lord of the Rings. Certainly those are some great picks.

There are some clear things Scott tends to prefer more than I do, so there are some clear adjustments I can make: The more ‘commercial,’ recent, American, short, fast or ‘fun’ the more I should adjust upwards, and vice versa, plus my genre, topic and actor preferences. In a sense you want to know ‘Scott rating above replacement for certain known things’ rather than Scott’s raw rating, and indeed that is the right way to evaluate most movie ratings if you are an advanced player.

At minimum, I’m clearly underusing the obvious ‘see Scott’s highly ranked picks with some filtering for what you’d expect to like.’

As opposed to movie critics in general, who seem completely lost and confused – I’ve seen two other movies since and no one seems to have any idea what either of them was even about.

The Substance (trailer-level spoilers) is another misunderstood movie from this month that makes one worry for our civilization. Everyone, I presume including those who made the film, is missing the central point. Yes, on an obvious level (and oh do they bring out the anvils) this is about beauty standards and female aging and body horror and all that. But actually it’s not centrally about that at all. It’s about maximizing quality of life under game theory and decision theory, an iterated prisoner’s dilemma and passing of the torch between versions of yourself across time and generations.

This is all text, the ‘better version of yourself’ actress is literally named Qualley (her character is called Sue, which also counts if you think about it), and the one so desperately running out of time that she divides herself into two is named Demi Moore, and they both do an amazing job while matching up perfectly, so this is probably the greatest Kabbalistic casting job of all time.

Our society seems to treat the breakdown and failure of this, the failure to hear even as you are told in no uncertain terms over and over ‘THERE IS ONLY ONE YOU,’ as inevitable. We are one, and cannot fathom it.

Our society is failing this on a massive scale, from the falling fertility rate to the power being clung to by those who long ago needed to hand things off, and in reverse by those who do not understand what foundations their survival relies upon.

Now consider the same scenario as the movie, except without requiring stabilization – the switch is 100% voluntary each time. Can we pass this test? What if the two sides are far less the ‘same person’ as they are here, say the ‘better younger’ one is an AI?

I ask because if we are to survive, we will have to solve vastly harder versions of such problems. We will need to solve them with ourselves, with each other, and with AIs. Things currently do not look so good on these fronts.

Joker: Folie à Deux is another movie that is not about what people think, at all. People think it’s bad, and especially that its ending is bad, and their reasons for thinking this are very bad. I’m not saying it’s a great film, but both Joker movies are a lot better than I thought they were before the last five minutes of this one. I am sad that it was less effective because I was importantly spoiled, so if you decide to be in don’t ask any questions.

I also love this old story, Howard Hughes had insomnia and liked to watch late movies, so he bought a television station to ensure it would play movies late at night, and would occasionally call them up to order them to switch to a different one. Station cost him $34 million in today’s dollars, so totally Worth It.

Katherine Dee, also known as Default Friend, makes the case that the death or stasis of culture has been greatly exaggerated. She starts by noting that fashion, movies, television and music are indeed in decay. For fashion I’m actively happy about that. For music I agree but am mostly fine with it, since we have such great archives available. For movies and television, I see the argument, and there’s a certain ‘lack of slack’ given to modern productions, but I think the decline narratives are mostly wrong.

The real case Katherine is making is that the new culture is elsewhere, on social media, especially the idea of the entire avatar of a performer as a work of art, to be experienced in real time and in dialogue with the audience (perhaps, I’d note, similarly to sports?).

I buy that there is something there and that it has cultural elements. Certainly we are exploring new forms on YouTube and TikTok. Some of it even has merit, as she notes the good TikTok tends to often be sketch comedy TikTok. I notice that still doesn’t make me much less sad and also I am not that tempted to have a TikTok account. I find quite a lot of the value comes from touchstones and reference points and being able to filter and distill things over time. If everything is ephemeral, or only in the moment, then fades, that doesn’t work for me, and over time presumably culture breaks down.

I notice I’m thinking about the distinction between sports, which are to be experienced mostly in real time, and this new kind of social media performance. The difference is that sports gives us a fixed set of reference points and meaningful events, that everyone can share, especially locally, and also then a shared history we can remember and debate. I don’t think the new forms do a good job of that, in addition to the usual other reasons sports are awesome.

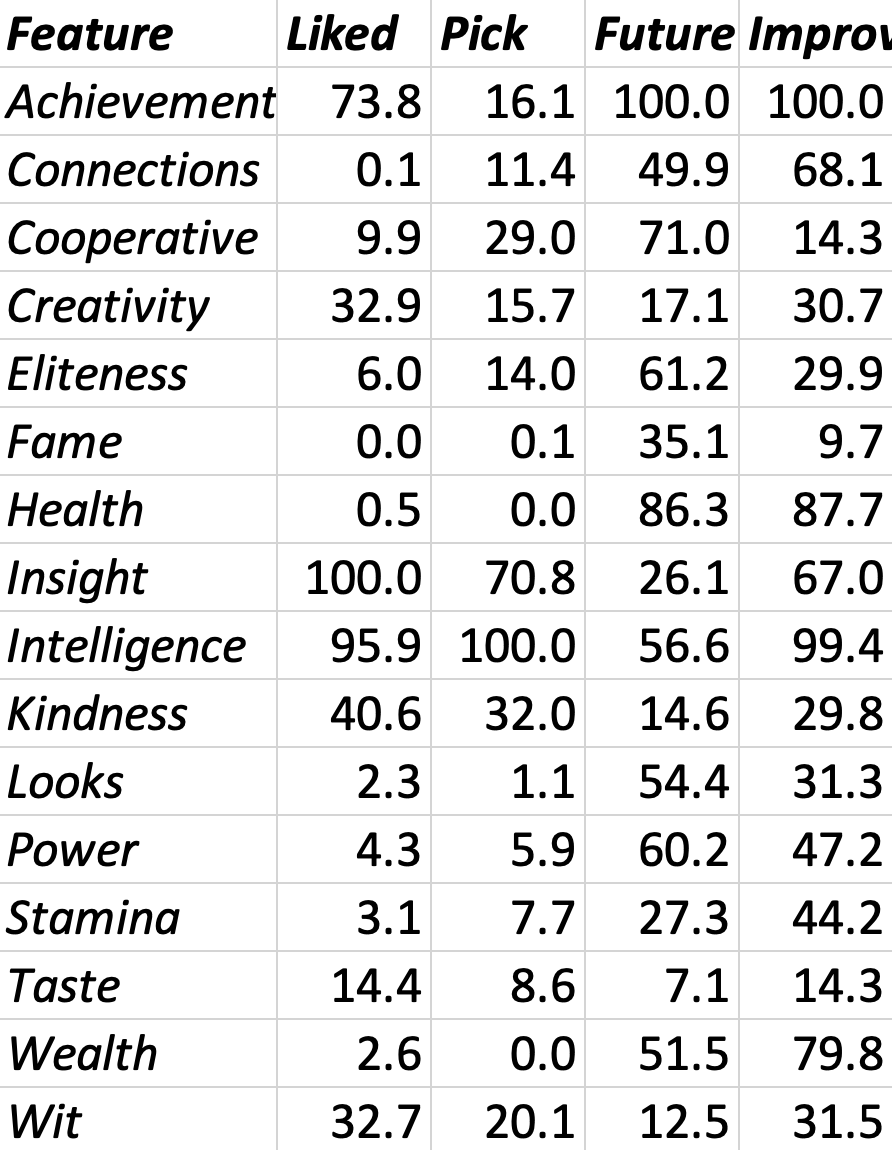

Robin Hanson has an interesting post about various features.

We all have many kinds of features. I collected 16 of them, and over the last day did four sets of polls to rank them according to four criteria:

- Liked – what features of you do you most want to be liked for?
- Pick – what features of them do you most use to pick associates?
- Future – what features most cause future folks to be like them?
- Improve – what features do you most want to improve in yourself?

Here are priorities (relative to 100 max) from 5984 poll responses:

As I find some of the Liked,Pick choices hard to believe, I see those as more showing our ideals re such features weights. F weights seem more believable to me.

Liked and Pick are strongly (0.85) correlated, but both are uncorrelated (-0.02,-0.08) with Future. Improve is correlated with all three (L:0.48, P:0.35, F:0.56), suggesting we choose what to improve as a combo of what influences future and what we want to be liked for now. (Best fit of Improve as linear combo of others is I = 1.12*L-0.94*P+0.33*F.)

Can anyone help me understand these patterns?
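For anyone unsure what ‘best fit of Improve as linear combo of others’ involves, here is a minimal least-squares sketch in plain Python. The `scores` below are hypothetical numbers, not Hanson’s poll data, and I am assuming a fit with no intercept because the quoted formula shows none. The synthetic Improve values are generated from the reported weights, so the fit should simply hand those weights back up to floating-point error.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    # Augment the matrix so row operations apply to both sides at once.
    aug = [list(row) + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def least_squares(rows, targets):
    """Fit targets ~ rows @ coeffs (no intercept) via the normal equations."""
    n = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(n)]
    return solve_linear_system(ata, atb)


# Hypothetical (Liked, Pick, Future) priorities for six made-up features.
scores = [
    (80, 70, 30),
    (60, 65, 50),
    (40, 30, 90),
    (90, 85, 20),
    (50, 55, 60),
    (30, 20, 40),
]
reported = (1.12, -0.94, 0.33)  # weights quoted in Hanson's post

# Synthetic Improve column built from the reported weights.
improve = [sum(w * v for w, v in zip(reported, row)) for row in scores]

coeffs = least_squares(scores, improve)
print([round(c, 2) for c in coeffs])
```

With Liked and Pick correlated at 0.85, a positive weight on one and a negative weight on the other is the kind of thing a fit like this can produce even when both correlate positively with Improve, so the individual coefficients deserve some caution.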

In some ways, the survey design choices Hanson made are even more interesting than the results, but I’ll focus on looking at the results.

The first thing to note is that people in the ‘Pick’ column were largely lying.

If you think you don’t pick your associates largely on the basis of health, stamina, looks, power, wealth, fame, achievements, connections or taste, I am here to inform you that you are probably fooling yourself on that.

There are a lot of things I value in associates, and I absolutely value intelligence and insight too, but I’m not going to pretend I don’t also care about the stuff listed above as well. I also note that there’s a difference between what I care about when initially picking associates or potential associates, versus what causes me to want to keep people around over the long term.

This column overall seems to more be answering the question ‘what features do you want to use as much as possible to pick your associates?’ I buy that we collectively want to use these low rated features less, or think of ourselves as using them less. But quite obviously we do use them, especially when choosing our associates initially.

Similarly, ‘liked’ is not what you are liked for, or what you are striving to acquire in order to be liked. It is what you would prefer that others like you for. Here, I am actually surprised Intelligence ranks so high, even though the pool of respondents is Hanson’s Twitter. People also want to improve their intelligence in this survey, which implies this is about something more than inherent ability.

The ‘future’ column is weird because most people mostly aren’t trying to cause future folks in general to be more like themselves. They’re also thinking about it in a weird way. Why are ‘health’ and ‘cooperative’ ranked so highly here? What is this measuring?

Matt Mullenweg publishes his charitable contributions going back to 2011, as part of an ongoing battle with private equity firm Silver Lake. This could be a good norm to encourage, conspicuous giving rather than conspicuous consumption is great even when it’s done in stupid ways (e.g. to boast at charity galas for cute puppies with rare diseases) and you can improve on that.

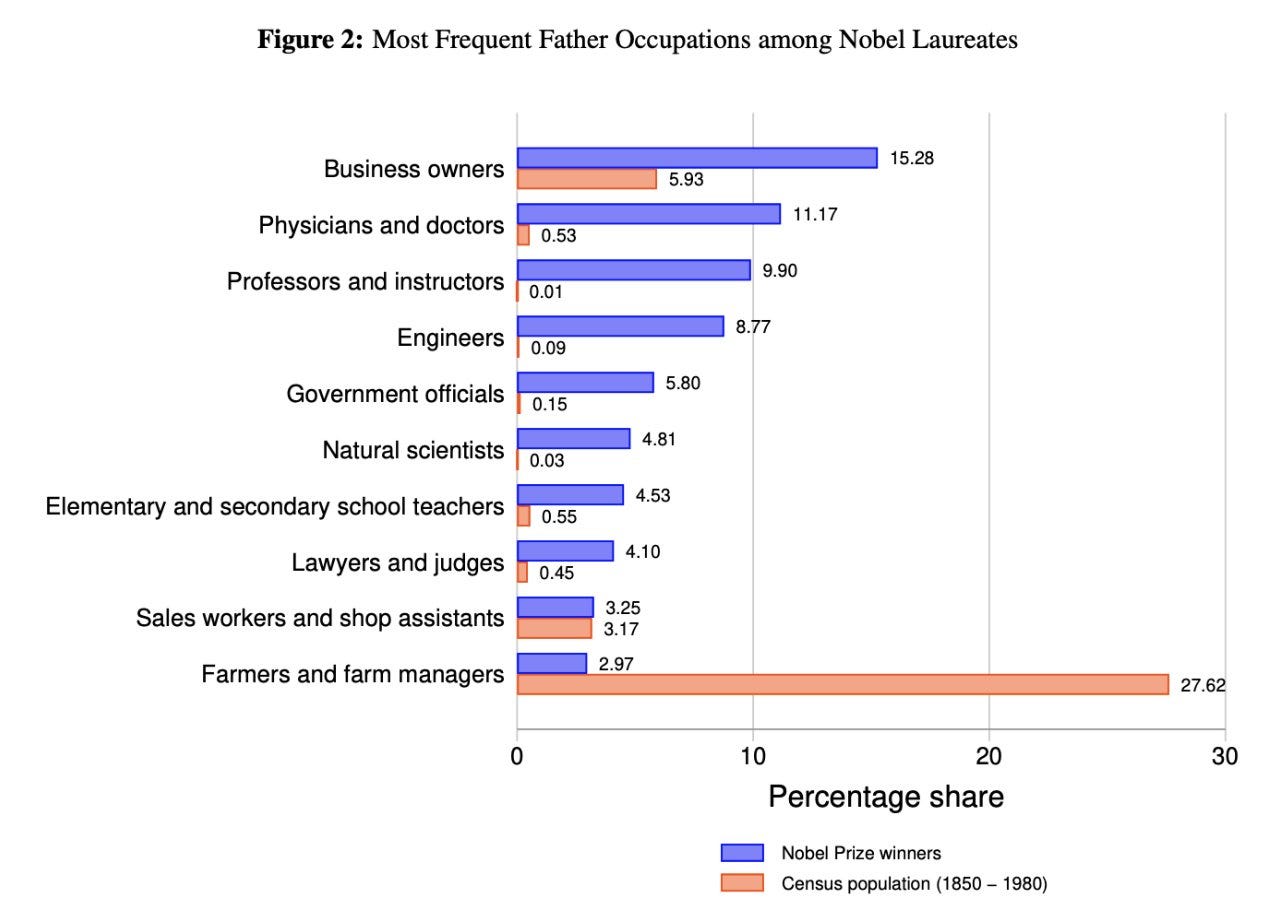

What makes a science Nobel Laureate? Paul Novosad crunches the numbers. About half come from the ‘top 5%’ by income, but many do come from very non-elite backgrounds. The most common profession for fathers is business owner rather than professor, but that’s because a lot of people own businesses. The ratio on professors is off the charts nuts, whereas growing up on a farm means you are mostly toast:

What is odd about Paul’s framing of the results is the idea that talent is evenly distributed. That is Obvious Nonsense. We are talking about the most elite of elite talent. If you have that talent, your parents likely were highly talented too, and likely inclined to similar professions. Yes, of course exposure to the right culture and ideas and opportunities and pushes in the right directions matter tons too, and yes most of the talent out on the farm or in the third world will be lost to top science, but we were not starting out on a level playing field here.

A lot of that 990:1 likelihood ratio for professors, and 160:1 for natural scientists, is a talent differential.

Whereas money alone seems to not help much. Business owners have only about a disappointing 2.5:1 likelihood ratio, versus elementary and secondary school teachers who are much poorer but come in around 8:1.

The cultural fit and exposure to science and excitement about science, together with talent for the field, are where it is at here.

If I were designing a civilization-level response to this, I would not be so worried about ‘equality’ in super high scientific achievement. There’s tons of talent out there, versus not that much opportunity. Instead, I would mostly focus on the opposite, the places where we have proven talent can enjoy oversized success, and I would try to improve that success. I care about the discoveries, not who makes them, so let’s ‘go where the money is’ and work with the children of scientists and professors, ensuring they get their shot, while also providing avenues for exceptional talent from elsewhere. Play to win.

I played through the main story of Gordian Quest, which I declare to be Tier 4 (Playable) but you probably shouldn’t. Sadly, in what Steam records as 18 hours, not once was there any serious danger anyone in the party would die, and when I finished the game I ‘still had all these’ with a lot of substantial upgrades being held back. Yes, you can move to higher difficulties, but the other problem is that the plot was as boring and generic as they come. Some going through the motions was fun, but I definitely was waiting for it to be over by the end.

Also the game kind of makes you sit around at the end of battles while you full heal and recharge your action meters, you either make this harder to do or you make it impossible. And it’s very easy to click the wrong thing in the skill grid and really hurt yourself permanently, although you had so much margin for error it didn’t matter.

Summary: There’s something here, and I think that a good game could be built using this engine, but alas this isn’t it. Not worth your time.

I finished my playthrough of the Canon of Creation from Shin Megami Tensei V: Vengeance (SMT V). I can confirm that it is very good and a major upgrade over the base SMT V, although I do worry that the full ‘save anywhere’ implementation is too forgiving and thus cuts down too much on the tension level.

There are two other issues. The first is a huge difficulty spike at the end right before the final set of battles, which means that the correct play is indeed a version of ‘save everything that will still be useful later, and spend it on a big splurge to build a top level party for the last few battles.’ And, well, sure, par for the course, but I wish we found a way to not make this always correct.

The other issue is that I am not thrilled with your ending options, for reasons that are logically highly related to people not thinking well about AI alignment and how to choose a good future in real life. There are obvious reasons the options each seem doomed, so your total freedom is illusory. The ‘secret fourth’ option is the one I wanted, and I was willing to fight extra for it, but one of the required quests seemed bugged and wouldn’t start (I generally avoid spoilers and guides, but if I’m spending 100+ hours on one of these games I want to know what triggers the endings). Still, the options are always interesting to consider in SMT games.

A weird note is that the items I got for the preorder radically change how you approach the early part of the game, because they give you a free minor heal and minor Almighty attack all, which don’t cost SP. That makes it easy to go for a Magic-based build without worrying about Macca early.

The question now is, do I go for Canon of Vengeance and/or the other endings, and if so do I do it keeping my levels or reset. Not sure yet. I presume it’s worth doing Vengeance once.

Metaphor: ReFantazio looks like the next excellent Atlus Persona-style game, although I plan on waiting for price drops to play it since I’m not done with SMT V and haven’t gotten to Episode Aigis yet and my queue is large and also I expect to get into Slay the Spire 2 within a few months.

Magic’s Commander format bans Nadu, Winged Wisdom, which seems necessary and everyone saw coming and where the arguments are highly overdetermined, but then it also bans Dockside Extortionist, Jeweled Lotus and Mana Crypt. The argument they make is that with so many good midrange snowball cards it is too easy for the player with fast mana to take over and overwhelm the table, and they don’t want this to happen too often so Sol Ring is fine because it is special but there can’t be too many different ways to get there.

Many were unhappy with the decision to ban these fast mana format staples.

Sam Black emphasizes that this change is destabilizing, after several years of stable decisions, hurting players who invested deeply into their decks and cards. He doesn’t agree with the philosophy of the changes, but does note that the logic here could make sense from a certain casual perspective to help the format meet its design goals. And he thinks cEDH will suffer most, but urges everyone to implement and stick to whatever decisions the Rules Committee makes.

Brian Kibler calls Crypt and Lotus Rule 0 issues, you can talk to your group about whether to allow such fast mana, but can understand Dockside and is like most of us happy for Nadu to bite the dust.

Zac Hill points out that if you ban some of the mana acceleration, this could decrease or increase the amount of snowball runaway games, depending on what it does to the variance of which players get how fast a start. Reid Duke points out that something can be cool when it happens rarely enough but miserable when (as in Golden Goose in Oko) it happens too often.

Samstod notes the change is terrible at the product level, wiping out a lot of value. Kai Budde fires back that it’s about time someone wiped out that value.

Kai Budde: Hardly the problem of the CRC. that’s wotc printing crazy good chase mythics to milk players. and then that starts the powercreep as they have to top these to sell the next cards etc. can make the same argument for modern-nadu. people spent money, keep it legal. no, thanks.

lotus/crypt/dockside are format breaking. argueing anything else after 30 years of these cards being too powerful in every format is just ridiculous. now why sol ring and maybe some others survived is an entirely different question, i’m with @bmkibler there.

Jaxon: I have yet to hear of a deck that wouldn’t be better for including Dockside, Crypt, and Lotus. That’s textbook ban-worthy.

The RC then offered a document answering various questions and objections. Glenn Jones has some thoughts on the document.

So far, so normal. All very reasonable debates. There’s a constant tension between ‘don’t destroy card market value or upset the players and their current choices’ and ‘do what is long term healthy for the format.’ I have no idea if banning Lotus and Crypt was net good or not, but it’s certainly a defensible position.

Alas, things then turned rather ugly.

Commander Rules Committee: As a result of the threats last week against RC members, it has become impossible for us to continue operating as an independent entity. Given that, we have asked WotC to assume responsibility for Commander and they will be making decisions and announcements going forward.

We are sad about the end of this era, and hopeful for the future; WotC has given strong assurances they do not want to change the vision of the format. Committee members have been invited to contribute as individual advisors to the new management framework.

The RC would like to express our gratitude to all the CAG members who have contributed their wisdom and perspective over the years. Finally, we want to thank all the players who have made this game so successful. We look forward to interacting as members of the community.

Please, be excellent to each other.

LSV: It seemed pretty clear to me that having people outside the building controlling the banlist for WotC’s most popular format was untenable, but it’s pretty grim how this all went down. The bottom 10% of any large group is often horrible, and this is a perfect example.

Gavin Verhey: The RC and CAG are incredible people, devoted to a format we love. They’ve set a great example. Though we at Wizards are now managing Commander, we will be working with community members, like the RC, on future decisions. It’s critical to us Commander remains community-focused.

Here is Wizards’ official announcement of the takeover.

This was inevitable in some form. Wizards had essentially ‘taken over’ Commander already, in the sense that they design cards now primarily with Commander in mind. Yes, the RC had the power to ban individual cards. But the original vision of Commander, that it should take what happened to be around and let us do fun things with those cards, letting weirdness flags fly and unexpected things happen, while banning only what happened to be obnoxious? That vision was already mostly dead. The RC couldn’t exactly go around banning everything designed ‘for Commander.’ Eventually, Wizards was going to fully take control, one way or another, for better and for worse.

It’s still pretty terrible the way it went down. The Magic community should not have to deal with death threats when making card banning decisions. Nor should those threats be at least somewhat rewarded, with the targets then giving up their positions. But what choice was there?

Contra LSV, I do feel shame for what happened, despite having absolutely no connection to any of the particular events and having basically not played for years. It is a stain upon the entire community. If someone brings dishonor on your house, ‘I had nothing to do with it’ obviously matters but it does not get you fully off the hook. It was your house.

Alas, this isn’t new. Zac Hill and Worth Wollpert got serious threats back in the day. I am fortunate that I never had to deal with anything like this.

Moving forward, what should be done with Commander?

If I were Wizards, I would be sure not to move too quickly. One needs to take the time to get it right, and also to not make it look like they’ve been lying in wait for the RC to get the message and finally hand things off, or make it feel like these threats are being rewarded.

But what about the proposal being floated, at least in principle?

WotC: Here’s the idea: There are four power brackets, and every Commander deck can be placed in one of those brackets by examining the cards and combinations in your deck and comparing them to lists we’ll need community help to create. You can imagine bracket one is the baseline of an average preconstructed deck or below and bracket four is high power. For the lower tiers, we may lean on a mixture of cards and a description of how the deck functions, and the higher tiers are likely defined by more explicit lists of cards.

For example, you could imagine bracket one has cards that easily can go in any deck, like Swords to Plowshares, Grave Titan, and Cultivate, whereas bracket four would have cards like Vampiric Tutor, Armageddon, and Grim Monolith, cards that make games too much more consistent, lopsided, or fast than the average deck can engage with.

In this system, your deck would be defined by its highest-bracket card or cards. This makes it clear what cards go where and what kinds of cards you can expect people to be playing. For example, if Ancient Tomb is a bracket-four card, your deck would generally be considered a four. But if it’s part of a Tomb-themed deck, the conversation may be “My deck is a four with Ancient Tomb but a two without it. Is that okay with everyone?”
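The ‘highest-bracket card defines the deck’ rule is simple enough to sketch in a few lines. This is only an illustration under stated assumptions: no official card lists exist yet, so every bracket number below is made up, except that Ancient Tomb as a four follows the quoted hypothetical. The card name `Example Bracket-2 Card` and the function `deck_bracket` are placeholders of mine.

```python
# Hypothetical card-to-bracket assignments, for illustration only.
# No official lists exist; Ancient Tomb as a bracket-four card follows
# the "if Ancient Tomb is a bracket-four card" example in the quote.
CARD_BRACKETS = {
    "Swords to Plowshares": 1,
    "Cultivate": 1,
    "Example Bracket-2 Card": 2,  # placeholder name, not a real card
    "Ancient Tomb": 4,
}


def deck_bracket(deck, card_brackets, default=1):
    """Return the deck's bracket: the highest bracket of any card in it.

    Cards missing from the list are assumed to sit in the lowest bracket.
    """
    return max((card_brackets.get(card, default) for card in deck), default=default)


deck = ["Swords to Plowshares", "Cultivate", "Example Bracket-2 Card", "Ancient Tomb"]
without_tomb = [card for card in deck if card != "Ancient Tomb"]

with_tomb_bracket = deck_bracket(deck, CARD_BRACKETS)
without_tomb_bracket = deck_bracket(without_tomb, CARD_BRACKETS)
print(f"My deck is a {with_tomb_bracket} with Ancient Tomb "
      f"but a {without_tomb_bracket} without it.")
```

Note that a single card moves the whole deck up, which is exactly what makes the ‘is that okay with everyone?’ conversation necessary.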

This is at least kind of splitting Commander into four formats as a formalized Rule 0.

It is also a weird set of examples, and a strange format, where a card like Armageddon can be in the highest tier alongside the fast mana and tutors. I’d be curious to see what some 2s and 3s are supposed to be. And we’ll need to figure out what to do about cards like Sol Ring and other automatic-include cards, especially mana sources.

I do worry a bit that this could cause a rush to buy ‘worse’ cards that get lower tier values, and that could result in a situation where it costs more to build a deck at a lower tier and those without the resources have to have awkward conversations.

On reflection I do like that this is a threshold tier system, rather than a points system. A points system (where each card has a point total, and your deck can only combine to X points, usually ~10) is cool and interesting, but complicated, hard to measure over 100-card singleton decks, and not compatible with the idea of multiple thresholds. You can mostly only pick one number and go with it.
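For contrast, here is a minimal sketch of what a points system looks like. The point values are invented purely for illustration (no such official list exists), the function names are mine, and the cap of 10 is the ‘usually ~10’ figure from above.

```python
# Hypothetical point values, for illustration only. Unlisted cards cost 0.
CARD_POINTS = {
    "Vampiric Tutor": 3,
    "Grim Monolith": 3,
    "Ancient Tomb": 2,
    "Armageddon": 4,
}


def deck_points(deck, card_points):
    """Sum the point cost of every card in the deck."""
    return sum(card_points.get(card, 0) for card in deck)


def is_legal(deck, card_points, cap=10):
    """A deck is legal when its total points do not exceed the single cap."""
    return deck_points(deck, card_points) <= cap


deck = ["Vampiric Tutor", "Grim Monolith", "Ancient Tomb"]
print(deck_points(deck, CARD_POINTS), is_legal(deck, CARD_POINTS))

bigger = deck + ["Armageddon"]
print(deck_points(bigger, CARD_POINTS), is_legal(bigger, CARD_POINTS))
```

The whole system hangs on one `cap`, and every card’s cost has to be tuned against that one number, which is why it does not extend naturally to several power levels at once.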

Brian Kowal takes the opposite position, and thinks a points-based system would be cool for the minority who want to do that. I worry this would obligate others too much, and wouldn’t be as fully optional as we’d hope.

This also should catch everyone’s eye:

We will also be evaluating the current banned card list alongside both the Commander Rules Committee and the community. We will not ban additional cards as part of this evaluation. While discussion of the banned list started this, immediate changes to the list are not our priority.

I would be extremely reluctant to unban specifically Crypt or Lotus. I don’t have a strong opinion on whether those bans were net good, but once they happen the calculus shifts dramatically, and you absolutely do not want to reward what happened by giving those issuing death threats what they wanted.

That said, there are a bunch of other banned cards in Commander that can almost certainly be safely unbanned, and there is value in minimizing what is on the list. Then, if a year or two from now we decide that more fast mana would be healthy for the format again, or would be healthy inside tier 4 or what not, we can revisit those two in particular.

What should be the conventions around the clock in MTGO? Matt Costa calls out another player for making plays with the sole intention of trying to run out Matt’s clock. Most reactions were that the clock is part of the game, and playing for a clock win is fine. To me, the question is, where should the line be? Hopefully we can all agree that it is on you to finish the match on time, and your opponent is under no obligation to help you out. But also it is not okay to take game actions whose only goal is to get the opponent to waste time, and certainly not okay to abuse the system to force them to make more meaningless clicks. Costa here makes clear he would draw the line far more aggressively than I would. To me, anything that is actually trying to help win the game is fine.

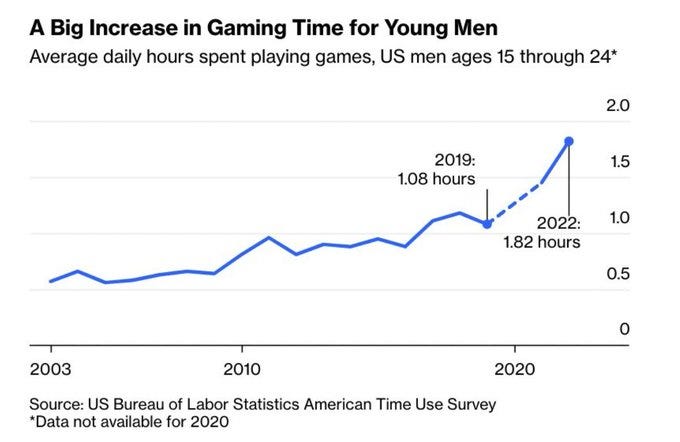

In other news, gaming overall was way up for young men as of 2022:

Paul Graham: The amount of time young men spent gaming was not exactly low in 2019. Usually when you see dramatic growth it’s from a low starting point, but this is dramatic growth from a high starting point.

That’s actually quite a lot. I don’t get to play two hours of games a day. This going up for 2022 from 2021 suggests this is not merely a temporary pandemic effect.

For those who did not realize, game matching algorithms often no longer optimize for ‘fair’ matchups, and instead follow patterns designed to preserve engagement (example patent here). I’ve had this become obvious in some cases where it greatly changed the incentives, and when that happened it killed the whole experience. So to all you designers out there, be careful with this.

I love this proposal and would watch a lot more baseball if they did it: MLB considering requiring starting pitchers to go at least 6 innings, unless they either are injured enough to go on the injured list, throw 100 pitches or give up 4 earned runs. This would force pitchers to rely on command over power, and the current reliance on power explains some of why pitchers are so often injured now.

I would go farther. Let’s implement the ‘double hook’ or ‘double switch DH,’ which they are indeed considering. In that version, when you pull your starter, you lose the DH, period. So starting pitchers never bat, but relievers might need to do so. I think this is a neat compromise that is clean, easy to explain, provides good incentives and also makes the game a lot more interesting.

I’ll also note that the betting odds on the Mets have been absurdly disrespectful for a while now, no matter how this miracle run ends. I get that all your models say we shouldn’t be that good, but how many months of winning does it take? Of course baseball is sufficiently random that we will never know who was right on this.

Meanwhile the various fuckery with sports recordings in TV apps really gets you. They know you feel the need to see everything, so they make you buy various different apps to get it, but also they fail to make deals when they need to (e.g. YouTube TV losing SNY) and then that forced me onto Hulu, whose app sucks and also cut off the end of multiple key games.

I wish I could confidently say Hulu’s app has failed me for the last time. Its rate of ‘reset to beginning of recording when you ask to resume, for no reason’ is something like 40%. It can’t remember your place watching episodes of a show if you’re watching reruns in order, that’s too hard for it. If a copy of a program aired recently, its ads could become partly unskippable. The organization of content is insane.

All of that I was working past, until the above mentioned cutoffs of game endings, including the game in which the Mets clinched their wildcard berth, and then the finishes of multiple top college football games. Unfortunately, there are zero other options for getting SNY, which shows the Mets games, but now we’re in the playoffs so it’s back to YouTube TV, which has other problems but they’re mostly less awful, together with like six other apps.

Paul Williams: Lina Khan DO NOT read this.

Can we please have a monopoly in TV streaming? Some of us are just trying to watch the game out here, why does my TV have 26 apps.

James Harvey: I don’t see what’s so confusing about this. I pay for MLB and I pay for ESPN, so if I want to watch an MLB game on ESPN I naturally go to the YouTube TV app.

There’s starting to be the inkling of ‘you choose the primary app and then you add to it with subscriptions for other apps’ content’ but this cannot come fast enough, and right now it seems to come with advertisements or other limitations – imposing ads on us in this day and age, when we’re paying and not in exchange for a clear discount, is psycho behavior, I don’t get it.

The idea that in April 2025 I might have to give Hulu its money again is so cringe. Please, YouTube, work this out, paying an extra subscription HBO-style would be fine, or we can have SNY offer a standalone app.

In this case an entrepreneur, asking the right question. We’ve done this before but I find it worthwhile to revisit periodically. I organized responses by central answer.

Paul Graham: Is there a reliable source of restaurant ratings, like Zagat’s used to be?

Roon: Beli.

Alex Reichenbach: I’d highly recommend Beli, especially if you end up in New York. They use head to head ELO scoring that prevents rating inflation.

Silvia Tower: Beli App! That way you follow people you know and see how they rate restaurants. No stars, it’s a forced ranking system. Their algorithm will also make personalized recommendations.
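For those unfamiliar with why head-to-head Elo resists inflation, here is a minimal sketch of the standard Elo update. To be clear, this is the textbook formula and not Beli’s actual algorithm, which none of these replies describe. The starting rating of 1500 and `k=32` are conventional defaults that I am assuming.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(winner, loser, k=32):
    """Return new (winner, loser) ratings after one head-to-head comparison.

    Whatever the winner gains, the loser gives up, so total rating is conserved.
    """
    gain = k * (1.0 - expected_score(winner, loser))
    return winner + gain, loser - gain


# Two restaurants start level; the user prefers the first one.
new_winner, new_loser = update(1500, 1500)
print(new_winner, new_loser)  # 1516.0 1484.0
```

Because every comparison is zero-sum, you cannot hand out five stars to everyone. Rating one place up necessarily means rating another down.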

StripMallGuy: Really rely on Yelp. I find that if a restaurant is three stars or less, it’s just not going to be good and 4 1/2 stars means very high chance will be great. We use it a lot for our underwriting of strip malls during purchases, and it’s been really helpful.

Nikita Bier: The one tip for Yelp I have that is tangentially related: if an establishment has >4 stars and their profile says “unclaimed,” it means 6 stars.

Babak Hosseini: Google Maps. But don’t read the 5-star ratings.

1. Select a restaurant above 4.6 avg rating

2. Then navigate to the 1-star ratings

If most people complain about stuff you don’t care, you most likely have a pretty good match.

Grant: Google Maps 4.9 and above is a no. Usually means bad food with over friendly owner or strong arming reviews. 4.6 – 4.8: best restaurants 4.4 – 4.5: good restaurants 4.3: ok 4.2 and below: avoid.
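Grant’s rule of thumb is effectively a lookup table, sketched below. The cutoffs are his, while the function name and verdict labels are mine, and I am assuming ratings are displayed to one decimal place as Google Maps does.

```python
def grant_verdict(avg_rating):
    """Map a Google Maps average rating to a verdict using Grant's cutoffs."""
    r = round(avg_rating, 1)  # ratings are shown to one decimal place
    if r >= 4.9:
        return "no"     # suspiciously high: friendly owner or strong-armed reviews
    if r >= 4.6:
        return "best"   # 4.6 - 4.8
    if r >= 4.4:
        return "good"   # 4.4 - 4.5
    if r >= 4.3:
        return "ok"     # 4.3
    return "avoid"      # 4.2 and below


for rating in (4.9, 4.7, 4.5, 4.3, 4.2):
    print(rating, grant_verdict(rating))
```

The notable feature is that the mapping is not monotonic: the very top of the scale is treated as a warning sign, not a recommendation.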

Peter Farbor: Google Maps, 500+ reviews, 4.4+

How to check if the restaurant didn’t gamify reviews?

1. There should be a very small number of 1-3⭐️ reviews

2. There should be at least 10-20% of 4⭐️ reviews

Eleanor Berger: Google Maps, actually. I don’t think anything else seriously competes with it.