Qubit that makes most errors obvious now available to customers

Can a small machine that makes error correction easier upend the market?

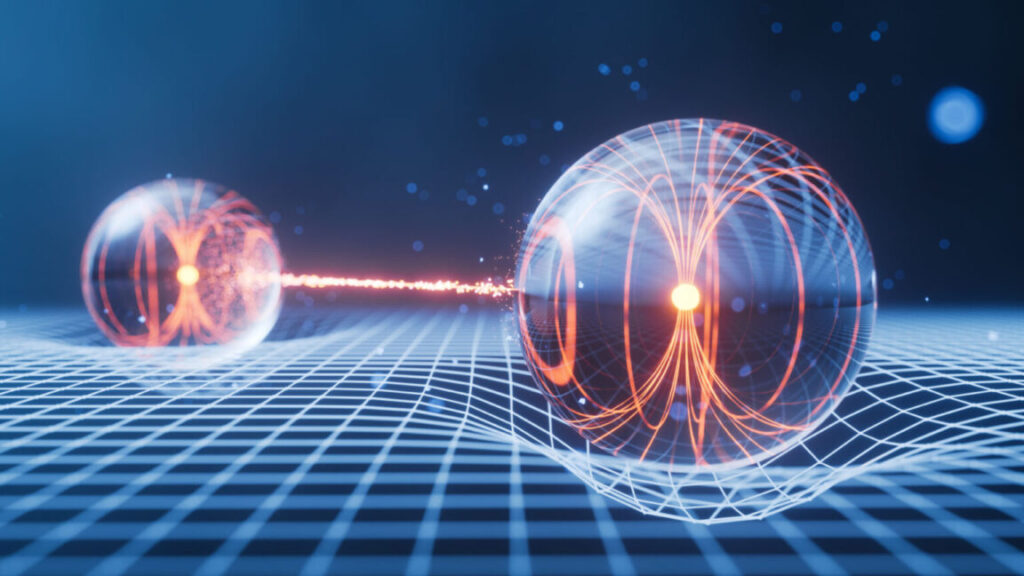

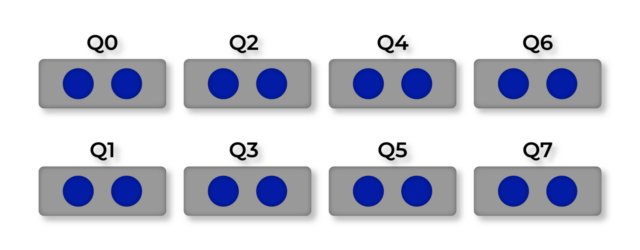

A graphic representation of the two resonance cavities that can hold photons, along with a channel that lets the photon move between them. Credit: Quantum Circuits

We’re nearing the end of the year, and there is typically a flood of announcements regarding quantum computers around now, in part because some companies want to live up to promised schedules. Most of these involve evolutionary improvements on previous generations of hardware. But this year, we have something new: the first company to market with a new qubit technology.

The technology is called a dual-rail qubit, and it is intended to make the most common form of error trivially easy to detect in hardware, thus making error correction far more efficient. And, while tech giant Amazon has been experimenting with them, a startup called Quantum Circuits is the first to give the public access to dual-rail qubits via a cloud service.

While the tech is interesting on its own, it also provides us with a window into how the field as a whole is thinking about getting error-corrected quantum computing to work.

What’s a dual-rail qubit?

Dual-rail qubits are variants of the hardware used in transmons, the qubits favored by companies like Google and IBM. The basic hardware unit links a loop of superconducting wire to a tiny cavity that allows microwave photons to resonate. This setup allows the presence of microwave photons in the resonator to influence the behavior of the current in the wire and vice versa. In a transmon, microwave photons are used to control the current. But there are other companies that have hardware that does the reverse, controlling the state of the photons by altering the current.

Dual-rail qubits use two of these systems linked together, allowing photons to move from one resonator to the other. Using the superconducting loops, it’s possible to control the probability that a photon will end up in the left or right resonator. The actual location of the photon will remain unknown until it’s measured, allowing the system as a whole to hold a single bit of quantum information: a qubit.

This has an obvious disadvantage: You have to build twice as much hardware for the same number of qubits. So why bother? Because the vast majority of errors involve the loss of the photon, and that’s easily detected. “It’s about 90 percent or more [of the errors],” said Quantum Circuits’ Andrei Petrenko. “So it’s a huge advantage that we have with photon loss over other errors. And that’s actually what makes the error correction a lot more efficient: The fact that photon losses are by far the dominant error.”

Petrenko said that, without doing a measurement that would disrupt the storage of the qubit, it’s possible to determine if there is an odd number of photons in the hardware. If that isn’t the case, you know an error has occurred—most likely a photon loss (gains of photons are rare but do occur). For simple algorithms, this would be a signal to simply start over.
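The detection idea can be sketched in a few lines of Python. This is a deliberately classical toy, not Quantum Circuits’ software: it ignores superposition entirely, the function names are hypothetical, and the 90 percent photon-loss share is simply taken from Petrenko’s estimate. It only shows why counting whether the photon number is odd flags a lost photon while missing errors that leave the photon in place.

```python
import random

# Occupation of the (left, right) resonators. A healthy dual-rail qubit
# holds exactly one photon, so only these two patterns are valid.
LEFT = (1, 0)
RIGHT = (0, 1)

# Assumption: share of errors that are photon losses, from the quote above.
PHOTON_LOSS_SHARE = 0.9


def photon_count_is_odd(occupation):
    """Toy stand-in for the hardware check: is the total photon number odd?"""
    return sum(occupation) % 2 == 1


def apply_random_error(occupation, rng):
    """Apply one error event and return (new_occupation, kind)."""
    if rng.random() < PHOTON_LOSS_SHARE:
        return (0, 0), "photon_loss"  # the photon has left both resonators
    return occupation, "other"        # e.g. a phase flip: photon count unchanged


def fraction_flagged(trials=100_000, seed=0):
    """Fraction of error events that the odd-photon check would flag."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(trials):
        state = rng.choice([LEFT, RIGHT])
        state, _kind = apply_random_error(state, rng)
        if not photon_count_is_odd(state):
            flagged += 1
    return flagged / trials


if __name__ == "__main__":
    print(f"Errors flagged by the check: {fraction_flagged():.1%}")
```

In this toy model, the flagged fraction simply tracks the photon-loss share. A gained photon, which would show up as `(1, 1)`, also fails the check because two is an even number.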

But this does not eliminate the need for error correction if we want to do more complex computations that can’t make it to completion without encountering an error. There’s still the remaining 10 percent of errors, which are primarily phase flips, a type of error that is distinct to quantum systems. Bit flips are even rarer in dual-rail setups. Finally, simply knowing that a photon was lost doesn’t tell you everything you need to know to fix the problem; error-correction measurements of other parts of the logical qubit are still needed.
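A bit of arithmetic shows why restarting only works for short computations. The sketch below assumes that errors strike independently at a fixed rate per operation and that every error is detected, which is more generous than real hardware. The 0.1 percent error rate is a hypothetical figure chosen for illustration, not a number from Quantum Circuits.

```python
def success_probability(error_rate_per_step, steps):
    """Chance that a run of `steps` operations finishes with no error at all."""
    return (1.0 - error_rate_per_step) ** steps


def expected_runs(error_rate_per_step, steps):
    """Average number of attempts needed before one run completes cleanly."""
    return 1.0 / success_probability(error_rate_per_step, steps)


if __name__ == "__main__":
    error_rate = 0.001  # hypothetical per-operation error rate
    for steps in (100, 1_000, 10_000):
        runs = expected_runs(error_rate, steps)
        print(f"{steps:>6} operations: about {runs:,.1f} attempts per clean run")
```

Under these assumptions, a 100-operation program almost always finishes on the first or second try, while a 10,000-operation program would need tens of thousands of attempts. That is the point at which detecting errors is no longer enough and correcting them becomes necessary.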

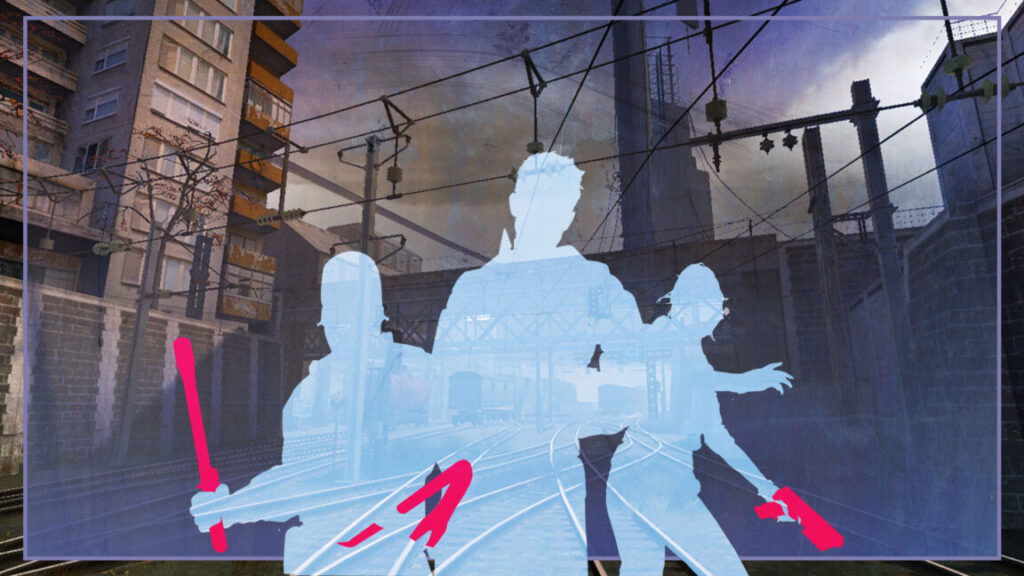

The layout of the new machine. Each qubit (gray square) involves a left and right resonance chamber (blue dots) that a photon can move between. Each of the qubits has connections that allow entanglement with its nearest neighbors. Credit: Quantum Circuits

In fact, the initial hardware that’s being made available is too small to even approach useful computations. Instead, Quantum Circuits chose to link eight qubits with nearest-neighbor connections in order to allow it to host a single logical qubit that enables error correction. Put differently: this machine is meant to enable people to learn how to use the unique features of dual-rail qubits to improve error correction.

One consequence of having this distinctive hardware is that the software stack that controls operations needs to take advantage of its error detection capabilities. None of the other hardware on the market can be directly queried to determine whether it has encountered an error. So, Quantum Circuits has had to develop its own software stack to allow users to actually benefit from dual-rail qubits. Petrenko said that the company also chose to provide access to its hardware via its own cloud service because it wanted to connect directly with the early adopters in order to better understand their needs and expectations.

Numbers or noise?

Given that a number of companies have already released multiple revisions of their quantum hardware and have scaled them into hundreds of individual qubits, it may seem a bit strange to see a company enter the market now with a machine that has just a handful of qubits. But amazingly, Quantum Circuits isn’t alone in planning a relatively late entry into the market with hardware that only hosts a few qubits.

Having talked with several of them, I can see a logic to what they’re doing. What follows is my attempt to convey that logic in a general form, without focusing on any single company’s case.

Everyone agrees that the future of quantum computation is error correction, which requires linking together multiple hardware qubits into a single unit termed a logical qubit. To get really robust, error-free performance, you have two choices. One is to devote lots of hardware qubits to the logical qubit, so you can handle multiple errors at once. Or you can lower the error rate of the hardware, so that you can get a logical qubit with equivalent performance while using fewer hardware qubits. (The two options aren’t mutually exclusive, and everyone will need to do a bit of both.)

The two options pose very different challenges. Improving the hardware error rate means diving into the physics of individual qubits and the hardware that controls them. In other words, getting lasers that have fewer of the inevitable fluctuations in frequency and energy. Or figuring out how to manufacture loops of superconducting wire with fewer defects or handle stray charges on the surface of electronics. These are relatively hard problems.

By contrast, scaling qubit count largely involves being able to consistently do something you already know how to do. So, if you already know how to make good superconducting wire, you simply need to make a few thousand instances of that wire instead of a few dozen. The electronics that will trap an atom can be made in a way that will make it easier to make them thousands of times. These are mostly engineering problems, and generally of similar complexity to problems we’ve already solved to make the electronics revolution happen.

In other words, within limits, scaling is a much easier problem to solve than errors. It’s still going to be extremely difficult to get the millions of hardware qubits we’d need to error correct complex algorithms on today’s hardware. But if we can get the error rate down a bit, we can use smaller logical qubits and might only need 10,000 hardware qubits, which will be more approachable.
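To get a feel for the trade-off between error rate and qubit count, here is a rough sketch using a commonly quoted rule of thumb for the surface code. None of this comes from the companies discussed here: the choice of the surface code, the 1 percent threshold, the 0.1 prefactor, the `2d² - 1` qubit count, and the target logical error rate are all assumptions, and the figures it produces are illustrative rather than estimates for any real machine.

```python
# Assumed rule of thumb: logical_error ~ PREFACTOR * (p / THRESHOLD) ** ((d + 1) / 2)
THRESHOLD = 1e-2  # assumed threshold error rate for the code
PREFACTOR = 0.1   # assumed constant in front of the scaling law


def logical_error_rate(physical_error_rate, distance):
    """Heuristic logical error rate for a code of the given (odd) distance."""
    return PREFACTOR * (physical_error_rate / THRESHOLD) ** ((distance + 1) / 2)


def smallest_distance(physical_error_rate, target_logical_rate):
    """Smallest odd distance whose heuristic logical error rate meets the target."""
    if physical_error_rate >= THRESHOLD:
        raise ValueError("above threshold, adding qubits does not help")
    distance = 3
    while logical_error_rate(physical_error_rate, distance) > target_logical_rate:
        distance += 2
    return distance


def hardware_qubits_per_logical(distance):
    """Data plus measurement qubits in one rotated surface-code patch (assumed layout)."""
    return 2 * distance * distance - 1


if __name__ == "__main__":
    target = 1e-12  # hypothetical logical error rate needed by a long algorithm
    for p in (2e-3, 2e-4):
        d = smallest_distance(p, target)
        print(f"physical error {p:.0e}: distance {d}, "
              f"{hardware_qubits_per_logical(d)} hardware qubits per logical qubit")
```

With these assumed constants, cutting the hardware error rate from 0.2 percent to 0.02 percent shrinks each logical qubit from 1,921 hardware qubits to 337. The exact numbers depend heavily on the assumptions, but the direction of the effect is what the argument above relies on.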

Errors first

And there’s evidence that even the early entries in quantum computing have reasoned the same way. Google has been working on iterations of the same chip design since its 2019 quantum supremacy announcement, focusing on understanding the errors that occur on improved versions of that chip. IBM made hitting the 1,000-qubit mark a major goal but has since been focused on reducing the error rate in smaller processors. Someone at a quantum computing startup once told us it would be trivial to trap more atoms in its hardware and boost the qubit count, but there wasn’t much point in doing so given the error rates of the qubits on the then-current generation machine.

The new companies entering this market now are making the argument that they have a technology that will either radically reduce the error rate or make handling the errors that do occur much easier. Quantum Circuits clearly falls into the latter category, as dual-rail qubits are entirely about making the most common form of error trivial to detect. The former category includes companies like Oxford Ionics, which has indicated it can perform single-qubit gates with a fidelity of over 99.9991 percent. Or Alice & Bob, which stores qubits in the behavior of multiple photons in a single resonance cavity, making them very robust to the loss of individual photons.

These companies are betting that they have distinct technology that will let them handle error rate issues more effectively than established players. That will lower the total scaling they need to do, and scaling will be an easier problem overall—and one that they may already have the pieces in place to handle. Quantum Circuits’ Petrenko, for example, told Ars, “I think that we’re at the point where we’ve gone through a number of iterations of this qubit architecture where we’ve de-risked a number of the engineering roadblocks.” And Oxford Ionics told us that if they could make the electronics they use to trap ions in their hardware once, it would be easy to mass manufacture them.

None of this should imply that these companies will have it easy compared to a startup that already has experience with both reducing errors and scaling, or a giant like Google or IBM that has the resources to do both. But it does explain why, even at this stage in quantum computing’s development, we’re still seeing startups enter the field.

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
