Where’s Security Going in 2025?


Where’s Security Going in 2025? Read More »

Perhaps counterintuitively, sediment layers are more likely to remain intact on the seafloor than on land, so they can provide a better record of the region’s history. The seafloor is a more stable, oxygen-poor environment, reducing erosion and decomposition (two reasons scientists find far more fossils of marine creatures than land dwellers) and preserving finer details.

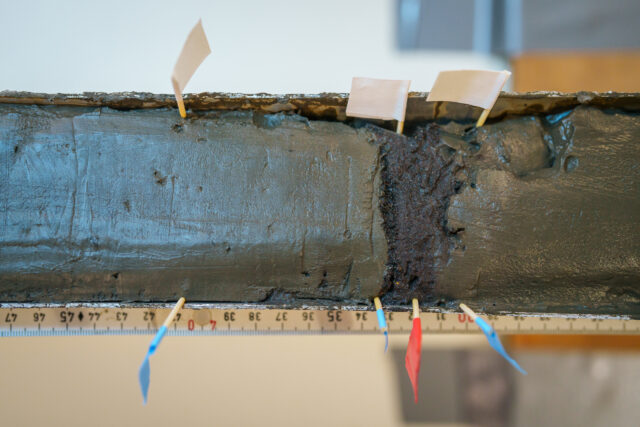

A close-up view of a core sample taken by a vibracorer. Scientists mark places they plan to inspect more closely with little flags. Credit: Alex Ingle / Schmidt Ocean Institute

Samples from different areas vary dramatically in time coverage, going back only to 2008 for some and potentially more than 15,000 years for others because of wildly different sedimentation rates. Scientists will use techniques like radiocarbon dating to determine the ages of sediment layers in the core samples.
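To get a feel for how different those sedimentation rates would have to be, here is a rough back-of-the-envelope sketch. The core length and sampling year are assumptions made for illustration (the article gives neither), and the carbon-14 fraction is an invented example, not expedition data. The age formula is the standard conventional radiocarbon age, which uses the Libby mean life of 8,033 years.

```python
import math

LIBBY_MEAN_LIFE_YEARS = 8033  # standard constant for conventional radiocarbon ages


def sedimentation_rate_cm_per_year(core_length_cm: float, years_covered: float) -> float:
    """Average accumulation rate implied by a core spanning `years_covered` years."""
    return core_length_cm / years_covered


def radiocarbon_age_years(fraction_modern: float) -> float:
    """Conventional radiocarbon age from the measured fraction of modern carbon-14."""
    if not 0 < fraction_modern <= 1:
        raise ValueError("fraction_modern must be in (0, 1]")
    return -LIBBY_MEAN_LIFE_YEARS * math.log(fraction_modern)


CORE_LENGTH_CM = 300.0  # hypothetical core length, not from the article
SAMPLING_YEAR = 2024    # assumed collection year, not from the article

# Same core length, two very different time spans
fast = sedimentation_rate_cm_per_year(CORE_LENGTH_CM, SAMPLING_YEAR - 2008)
slow = sedimentation_rate_cm_per_year(CORE_LENGTH_CM, 15_000)

print(f"fast site: {fast:.2f} cm/yr")
print(f"slow site: {slow:.3f} cm/yr")
print(f"fast/slow ratio: {fast / slow:.1f}")

# Illustrative measurement: a layer retaining 15.5 percent of modern carbon-14
print(f"radiocarbon age: {radiocarbon_age_years(0.155):.0f} years")
```

Under these assumptions, the two sites would differ in average accumulation rate by nearly three orders of magnitude, which is why cores of similar length can cover such different stretches of history.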

ROV SuBastian spotted a helmet jellyfish during the expedition. These photophobic (light avoidant) creatures glow via bioluminescence. Credit: Schmidt Ocean Institute

Microscopic analysis of the sediment cores will also help the team analyze the way the eruption affected marine creatures and the chemistry of the seafloor.

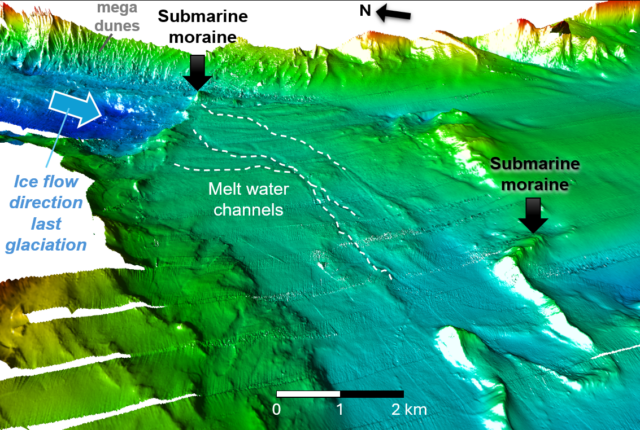

“There’s a wide variety of life and sediment types found at the different sites we surveyed,” said Alastair Hodgetts, a physical volcanologist and geologist at the University of Edinburgh, who participated in the expedition. “The oldest place we visited—an area scarred by ancient glacier movement—is a fossilized seascape that was completely unexpected.”

In a region beyond the dunes, ocean currents have kept the seafloor clear of sediment. That preserves seabed features left by the retreat of ice sheets at the end of the last glaciation. Credit: Rodrigo Fernández / CODEX Project

This feature, too, tells scientists about the way the water moves. Currents flowing over an area that was eroded long ago by a glacier sweep sediment away, keeping the ancient terrain visible.

“I’m very interested in analyzing seismic data and correlating it with the layers of sediment in the core samples to create a timeline of geological events in the area,” said Giulia Matilde Ferrante, a geophysicist at Italy’s National Institute of Oceanography and Applied Geophysics, who co-led the expedition. “Reconstructing the past in this way will help us better understand the sediment history and landscape changes in the region.”

In this post-apocalyptic scene, captured June 20, 2008, a thick layer of ash covers the town of Chaitén as the volcano continues to erupt in the background. Around 5,000 people evacuated, and resettlement efforts didn’t begin until the following year. Credit: Javier Rubilar

The team has already gathered measurements of the amount of sediment the eruption delivered to the sea. Now they’ll work to determine whether older layers of sediment record earlier, unknown events similar to the 2008 eruption.

“Better understanding past volcanic events, revealing things like how far away an eruption reached, and how common, severe, and predictable eruptions are, will help to plan for future events and reduce the impacts they have on local communities,” Watt said.

Ashley writes about space for a contractor for NASA’s Goddard Space Flight Center by day and freelances as an environmental writer. She holds master’s degrees in space studies from The University of North Dakota and science writing from The Johns Hopkins University. She writes most of her articles with a baby on her lap.

Exploring an undersea terrain sculpted by glaciers and volcanoes Read More »

Big Mama VPN tied to network which offers access to residential IP addresses.

In the hit virtual reality game Gorilla Tag, you swing your arms to pull your primate character around—clambering through virtual worlds, climbing up trees and, above all, trying to avoid an infectious mob of other gamers. If you’re caught, you join the horde. However, some kids playing the game claim to have found a way to cheat and easily “tag” opponents.

Over the past year, teenagers have produced video tutorials showing how to side-load a virtual private network (VPN) onto Meta’s virtual reality headsets and use the location-changing technology to get ahead in the game. Using a VPN, according to the tutorials, introduces a delay that makes it easier to sneak up and tag other players.

While the workaround is likely to be an annoying but relatively harmless bit of in-game cheating, there’s a catch. The free VPN app that the video tutorials point to, Big Mama VPN, is also selling access to its users’ home internet connections—with buyers essentially piggybacking on the VR headset’s IP address to hide their own online activity.

This technique of rerouting traffic, which is best known as a residential proxy and more commonly happens through phones, has become increasingly popular with cybercriminals who use proxy networks to conduct cyberattacks and use botnets. While the Big Mama VPN works as it is supposed to, the company’s associated proxy services have been heavily touted on cybercrime forums and publicly linked to at least one cyberattack.

Researchers at cybersecurity company Trend Micro first spotted Meta’s VR headsets appearing in its threat intelligence residential proxy data earlier this year, before tracking down that teenagers were using Big Mama to play Gorilla Tag. An unpublished analysis that Trend Micro shared with WIRED says its data shows that the VR headsets were the third most popular devices using the Big Mama VPN app, after devices from Samsung and Xiaomi.

“If you’ve downloaded it, there’s a very high likelihood that your device is for sale in the marketplace for Big Mama,” says Stephen Hilt, a senior threat researcher at Trend Micro. Hilt says that while Big Mama VPN may be being used because it is free, doesn’t require users to create an account, and apparently doesn’t have any data limits, security researchers have long warned that using free VPNs can open people up to privacy and security risks.

These risks may be amplified when that app is linked to a residential proxy. Proxies can “allow people with malicious intent to use your internet connection to potentially use it for their attacks, meaning that your device and your home IP address may be involved in a cyberattack against a corporation or a nation state,” Hilt says.

“Gorilla Tag is a place to have fun with your friends and be playful and creative—anything that disturbs that is not cool with us,” a spokesperson for Gorilla Tag creator Another Axiom says, adding they use “anti-cheat mechanisms” to detect suspicious behavior. Meta did not respond to a request for comment about VPNs being side-loaded onto its headsets.

Big Mama is made up of two parts: There’s the free VPN app, which is available on the Google Play store for Android devices and has been downloaded more than 1 million times. Then there’s the Big Mama Proxy Network, which allows people (among other options) to buy shared access to “real” 4G and home Wi-Fi IP addresses for as little as 40 cents for 24 hours.

Vincent Hinderer, a cyber threat intelligence team manager who has researched the wider residential proxy market at Orange Cyberdefense, says there are various scenarios where residential proxies are used, both for people who are having traffic routed through their devices and also those buying and selling proxy services. “It’s sometimes a gray zone legally and ethically,” Hinderer says.

For proxy networks, Hinderer says, one end of the spectrum is where networks could be used as a way for companies to scrape pricing details from their competitors’ websites. Other uses can include ad verification or people scalping sneakers during sales. They may be considered ethically murky but not necessarily illegal.

At the other end of the scale, according to Orange’s research, residential proxy networks have broadly been used for cyber espionage by Russian hackers, in social engineering efforts, as part of DDoS attacks, phishing, botnets, and more. “We have cybercriminals using them knowingly,” Hinderer says of residential proxy networks generally, with Orange Cyberdefense having frequently seen proxy traffic in logs linked to cyberattacks it has investigated. Orange’s research did not specifically look at uses of Big Mama’s services.

Some people can consent to having their devices used in proxy networks and be paid for their connections, Hinderer says, while others may be included because they agreed to it in a service’s terms and conditions—something research has long shown people don’t often read or understand.

Big Mama doesn’t make it a secret that people who use its VPN will have other traffic routed through their networks. Within the app it says it “may transport other customer’s traffic through” the device that’s connected to the VPN, while it is also mentioned in the terms of use and on a FAQ page about how the app is free.

The Big Mama Network page advertises its proxies as being available to be used for ad verification, buying online tickets, price comparison, web scraping, SEO, and a host of other use cases. When a user signs up, they’re shown a list of locations proxy devices are located in, their internet service provider, and how much each connection costs.

This marketplace, at the time of writing, lists 21,000 IP addresses for sale in the United Arab Emirates, 4,000 in the US, and tens to hundreds of other IP addresses in a host of other countries. Payments can only be made in cryptocurrency. Its terms of service say the network is only provided for “legal purposes,” and people using it for fraud or other illicit activities will be banned.

Despite this, cybercriminals appear to have taken a keen interest in the service. Trend Micro’s analysis claims Big Mama has been regularly promoted on underground forums where cybercriminals discuss buying tools for malicious purposes. The posts started in 2020. Similarly, Israeli security firm Kela has found more than 1,000 posts relating to the Big Mama proxy network across 40 different forums and Telegram channels.

Kela’s analysis, shared with WIRED, shows accounts called “bigmama_network” and “bigmama” posted across at least 10 forums, including cybercrime forums such as WWHClub, Exploit, and Carder. The ads list prices, free trials, and the Telegram and other contact details of Big Mama.

It is unclear who made these posts, and Big Mama tells WIRED that it does not advertise.

Posts from these accounts also said, among other things, that “anonymous” bitcoin payments are available. The majority of the posts, Kela’s analysis says, were made by the accounts around 2020 and 2021. However, an account called “bigmama_network” continued posting on the clearweb Blackhat World SEO forum until October this year, where it claimed its Telegram account had been deleted multiple times.

In other posts during the last year, according to the Kela analysis, cybercrime forum users have recommended Big Mama or shared tips about the configurations people should use. In April this year, security company Cisco Talos said it had seen traffic from the Big Mama Proxy, alongside other proxies, being used by attackers trying to brute force their way into a variety of company systems.

Big Mama has few details about its ownership or leadership on its website. The company’s terms of service say that a business called BigMama SRL is registered in Romania, although a previous version of its website from 2022, and at least one live page now, lists a legal address for BigMama LLC in Wyoming. The US-based business was dissolved in April and is now listed as inactive, according to the Wyoming Secretary of State’s website.

A person using the name Alex A responded to an email from WIRED about how Big Mama operates. In the email, they say that information about free users’ connections being sold to third parties through the Big Mama Network is “duplicated on the app market and in the application itself several times,” and people have to accept the terms and conditions to use the VPN. They say the Big Mama VPN is officially only available from the Google Play Store.

“We do not advertise and have never advertised our services on the forums you have mentioned,” the email says. They say they were not aware of the April findings from Talos about its network being used as part of a cyberattack. “We do block spam, DDOS, SSH as well as local network etc. We log user activity to cooperate with law enforcement agencies,” the email says.

The Alex A persona asked WIRED to send it more details about the adverts on cybercrime forums, details about the Talos findings, and information about teenagers using Big Mama on Oculus devices, saying they would be “happy” to answer further questions. However, they did not respond to any further emails with additional details about the research findings and questions about their security measures, whether they believe someone was impersonating Big Mama to post on cybercrime forums, the identity of Alex A, or who runs the company.

During its analysis, Trend Micro’s Hilt says that the company also found a security vulnerability within the Big Mama VPN, which could have allowed a proxy user to access someone’s local network if exploited. The company says it reported the flaw to Big Mama, which fixed it within a week, a detail Alex A confirmed.

Ultimately, Hilt says, there are potential risks whenever anyone downloads and uses a free VPN. “All free VPNs come with a trade-off of privacy or security concerns,” he says. That applies to people side-loading them onto their VR headsets. “If you’re downloading applications from the internet that aren’t from the official stores, there’s always the inherent risk that it isn’t what you think it is. And that comes true even with Oculus devices.”

This story originally appeared on wired.com.

VPN used for VR game cheat sells access to your home network Read More »

Did OpenAI’s big holiday event live up to the billing?

Over the past 12 business days, OpenAI has announced a new product or demoed an AI feature every weekday, calling the PR event “12 days of OpenAI.” We’ve covered some of the major announcements, but we thought a day-by-day rundown might be useful for people seeking a comprehensive view of the event.

The timing and rapid pace of these announcements, particularly in light of Google’s competing releases, illustrate the intensifying competition in AI development. What might normally have been spread across months was compressed into just 12 business days, giving users and developers a lot to process as they head into 2025.

Humorously, we asked ChatGPT what it thought about the whole series of announcements, and it was skeptical that the event even took place. “The rapid-fire announcements over 12 days seem plausible,” wrote ChatGPT-4o, “But might strain credibility without a clearer explanation of how OpenAI managed such an intense release schedule, especially given the complexity of the features.”

But it did happen, and here’s a chronicle of what went down on each day.

On the first day of OpenAI, the company released its full o1 model, making it available to ChatGPT Plus and Team subscribers worldwide. The company reported that the model operates faster than its preview version and reduces major errors by 34 percent on complex real-world questions.

The o1 model brings new capabilities for image analysis, allowing users to upload and receive detailed explanations of visual content. OpenAI said it plans to expand o1’s features to include web browsing and file uploads in ChatGPT, with API access coming soon. The API version will support vision tasks, function calling, and structured outputs for system integration.

OpenAI also launched ChatGPT Pro, a $200-per-month subscription tier that provides “unlimited” access to o1, GPT-4o, and Advanced Voice features. Pro subscribers receive an exclusive version of o1 that uses additional computing power for complex problem-solving. Alongside this release, OpenAI announced a grant program that will provide ChatGPT Pro access to 10 medical researchers at established institutions, with plans to extend grants to other fields.

Day 2 wasn’t as exciting. OpenAI unveiled Reinforcement Fine-Tuning (RFT), a model customization method that will let developers modify “o-series” models for specific tasks. The technique reportedly goes beyond traditional supervised fine-tuning by using reinforcement learning to help models improve their reasoning abilities through repeated iterations. In other words, OpenAI created a new way to train AI models that lets them learn from practice and feedback.

OpenAI says that Berkeley Lab computational researcher Justin Reese tested RFT for researching rare genetic diseases, while Thomson Reuters has created a specialized o1-mini model for its CoCounsel AI legal assistant. The technique requires developers to provide a dataset and evaluation criteria, with OpenAI’s platform managing the reinforcement learning process.
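The two inputs described above, a dataset and evaluation criteria, can be pictured with a toy sketch. This is a generic illustration of scoring model outputs with a grader, not OpenAI's API or its grader format; the dataset, the `stand_in_model` function, and the exact-match criterion are all hypothetical. In reinforcement learning, a score like this serves as the reward signal that training tries to increase.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    prompt: str
    reference: str  # the answer the grader treats as correct


def exact_match_grader(candidate: str, reference: str) -> float:
    """Toy evaluation criterion: 1.0 for a case-insensitive match, else 0.0."""
    return 1.0 if candidate.strip().lower() == reference.strip().lower() else 0.0


def mean_reward(
    model: Callable[[str], str],
    dataset: list[Example],
    grader: Callable[[str, str], float],
) -> float:
    """Average grader score of a model's answers over the whole dataset."""
    scores = [grader(model(ex.prompt), ex.reference) for ex in dataset]
    return sum(scores) / len(scores)


# Hypothetical data for illustration only
dataset = [
    Example("Capital of France?", "Paris"),
    Example("2 + 2 = ?", "4"),
]


def stand_in_model(prompt: str) -> str:
    """Placeholder for a real model call; gets one of the two prompts right."""
    return "paris" if "France" in prompt else "5"


print(mean_reward(stand_in_model, dataset, exact_match_grader))
```

A real grader would likely award partial credit rather than a strict 0 or 1, but the shape is the same: the developer defines what counts as a good answer, and the training process handles the rest.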

OpenAI plans to release RFT to the public in early 2025 but currently offers limited access through its Reinforcement Fine-Tuning Research Program for researchers, universities, and companies.

On day 3, OpenAI released Sora, its text-to-video model, as a standalone product now accessible through sora.com for ChatGPT Plus and Pro subscribers. The company says the new version operates faster than the research preview shown in February 2024, when OpenAI first demonstrated the model’s ability to create videos from text descriptions.

The release moved Sora from research preview to a production service, marking OpenAI’s official entry into the video synthesis market. The company published a blog post detailing the subscription tiers and deployment strategy for the service.

On day 4, OpenAI moved its Canvas feature out of beta testing, making it available to all ChatGPT users, including those on free tiers. Canvas provides a dedicated interface for extended writing and coding projects beyond the standard chat format, now with direct integration into the GPT-4o model.

The updated canvas allows users to run Python code within the interface and includes a text-pasting feature for importing existing content. OpenAI added compatibility with custom GPTs and a “show changes” function that tracks modifications to writing and code. The company said Canvas is now on chatgpt.com for web users and also available through a Windows desktop application, with more features planned for future updates.

On day 5, OpenAI announced that ChatGPT would integrate with Apple Intelligence across iOS, iPadOS, and macOS devices. The integration works on iPhone 16 series phones, iPhone 15 Pro models, iPads with A17 Pro or M1 chips and later, and Macs with M1 processors or newer, running their respective latest operating systems.

The integration lets users access ChatGPT’s features (such as they are), including image and document analysis, directly through Apple’s system-level intelligence features. The feature works with all ChatGPT subscription tiers and operates within Apple’s privacy framework. Iffy message summaries remain unaffected by the additions.

Enterprise and Team account users need administrator approval to access the integration.

On the sixth day, OpenAI added two features to ChatGPT’s voice capabilities: “video calling” with screen sharing support for ChatGPT Plus and Pro subscribers and a seasonal Santa Claus voice preset.

The new visual Advanced Voice Mode features work through the mobile app, letting users show their surroundings or share their screen with the AI model during voice conversations. While the rollout covers most countries, users in several European nations, including EU member states, Switzerland, Iceland, Norway, and Liechtenstein, will get access at a later date. Enterprise and education users can expect these features in January.

The Santa voice option appears as a snowflake icon in the ChatGPT interface across mobile devices, web browsers, and desktop apps, with conversations in this mode not affecting chat history or memory. Don’t expect Santa to remember what you want for Christmas between sessions.

OpenAI introduced Projects, a new organizational feature in ChatGPT that lets users group related conversations and files, on day 7. The feature works with the company’s GPT-4o model and provides a central location for managing resources related to specific tasks or topics—kinda like Anthropic’s “Projects” feature.

ChatGPT Plus, Pro, and Team subscribers can currently access Projects through chatgpt.com and the Windows desktop app, with view-only support on mobile devices and macOS. Users can create projects by clicking a plus icon in the sidebar, where they can add files and custom instructions that provide context for future conversations.

OpenAI said it plans to expand Projects in 2025 with support for additional file types, cloud storage integration through Google Drive and Microsoft OneDrive, and compatibility with other models like o1. Enterprise and education users will receive access to Projects in January.

On day 8, OpenAI expanded its search features in ChatGPT, extending access to all users with free accounts while reportedly adding speed improvements and mobile optimizations. Basically, you can use ChatGPT like a web search engine, although in practice it doesn’t seem to be as comprehensive as Google Search at the moment.

The update includes a new maps interface and integration with Advanced Voice, allowing users to perform searches during voice conversations. The search capability, which previously required a paid subscription, now works across all platforms where ChatGPT operates.

On day 9, OpenAI released its o1 model through its API platform, adding support for function calling, developer messages, and vision processing capabilities. The company also reduced GPT-4o audio pricing by 60 percent and introduced a GPT-4o mini option that costs one-tenth of previous audio rates.

OpenAI also simplified its WebRTC integration for real-time applications and unveiled Preference Fine-Tuning, which provides developers new ways to customize models. The company also launched beta versions of software development kits for the Go and Java programming languages, expanding its toolkit for developers.
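For a sense of what function calling and developer messages look like in practice, here is a minimal sketch of a request body. It assumes the field names of OpenAI's Chat Completions request format as publicly documented; the `get_weather` tool, its schema, and the message text are hypothetical. The code only builds and prints the JSON, so nothing is sent over the network.

```python
import json


def build_o1_request(question: str) -> dict:
    """Assemble a Chat Completions-style request body (no network call is made)."""
    return {
        "model": "o1",
        "messages": [
            # A "developer" message carries instructions from the application author
            {"role": "developer", "content": "Answer briefly and call tools when needed."},
            {"role": "user", "content": question},
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",  # hypothetical tool, for illustration
                    "description": "Look up current weather for a city.",
                    # JSON Schema describing the arguments the model may supply
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
    }


payload = build_o1_request("Do I need an umbrella in Paris today?")
print(json.dumps(payload, indent=2))
```

With function calling, the model does not run `get_weather` itself. It can reply with the function name and arguments, and the application is responsible for executing the call and returning the result.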

On Wednesday, OpenAI did something a little fun and launched voice and messaging access to ChatGPT through a toll-free number (1-800-CHATGPT), as well as WhatsApp. US residents can make phone calls with a 15-minute monthly limit, while global users can message ChatGPT through WhatsApp at the same number.

OpenAI said the release is a way to reach users who lack consistent high-speed Internet access or want to try AI through familiar communication channels, but it’s also just a clever hack. As evidence, OpenAI notes that these new interfaces serve as experimental access points, with more “limited functionality” than the full ChatGPT service, and still recommends existing users continue using their regular ChatGPT accounts for complete features.

On Thursday, OpenAI expanded ChatGPT’s desktop app integration to include additional coding environments and productivity software. The update added support for JetBrains IDEs like PyCharm and IntelliJ IDEA, VS Code variants including Cursor and VSCodium, and text editors such as BBEdit and TextMate.

OpenAI also included integration with Apple Notes, Notion, and Quip while adding Advanced Voice Mode compatibility when working with desktop applications. These features require manual activation for each app and remain available to paid subscribers, including Plus, Pro, Team, Enterprise, and Education users, with Enterprise and Education customers needing administrator approval to enable the functionality.

On Friday, OpenAI concluded its twelve days of announcements by previewing two new simulated reasoning models, o3 and o3-mini, while opening applications for safety and security researchers to test them before public release. Early evaluations show o3 achieving a 2727 rating on Codeforces programming contests and scoring 96.7 percent on AIME 2024 mathematics problems.

The company reports o3 set performance records on advanced benchmarks, solving 25.2 percent of problems on Epoch AI’s FrontierMath evaluations and scoring above 85 percent on the ARC-AGI test, which is comparable to human results. OpenAI also published research about “deliberative alignment,” a technique used in developing o1. The company has not announced firm release dates for either new o3 model, but CEO Sam Altman said o3-mini might ship in late January.

OpenAI’s December campaign revealed that OpenAI had a lot of things sitting around that it needed to ship, and it picked a fun theme to unite the announcements. Google responded in kind, as we have covered.

Several trends from the releases stand out. OpenAI is heavily investing in multimodal capabilities. The o1 model’s release, Sora’s evolution from research preview to product, and the expansion of voice features with video calling all point toward systems that can seamlessly handle text, images, voice, and video.

The company is also focusing heavily on developer tools and customization, so it can continue to have a cloud service business and have its products integrated into other applications. Between the API releases, Reinforcement Fine-Tuning, and expanded IDE integrations, OpenAI is building out its ecosystem for developers and enterprises. And the introduction of o3 shows that OpenAI is still attempting to push technological boundaries, even in the face of diminishing returns in training LLM base models.

OpenAI seems to be positioning itself for a 2025 where generative AI moves beyond text chatbots and simple image generators and finds its way into novel applications that we probably can’t even predict yet. We’ll have to wait and see what the company and developers come up with in the year ahead.

12 days of OpenAI: The Ars Technica recap Read More »

The Federal Aviation Administration temporarily banned drones over parts of New Jersey yesterday and said “the United States government may use deadly force against” airborne aircraft “if it is determined that the aircraft poses an imminent security threat.”

The FAA issued 22 orders imposing “temporary flight restrictions for special security reasons” until January 17, 2025. “At the request of federal security partners, the FAA published 22 Temporary Flight Restrictions (TFRs) prohibiting drone flights over critical New Jersey infrastructure,” an FAA statement said.

Each NOTAM (Notice to Air Missions) affects a specific area. “No UAS [Unmanned Aircraft System] operations are authorized in the areas covered by this NOTAM” unless they have clearance for specific operations, the FAA said. Allowed operations include support for national defense, law enforcement, firefighting, and commercial operations “with a valid statement of work.”

“Pilots who do not adhere to the following proc[edure] may be intercepted, detained and interviewed by law enforcement/security personnel,” the FAA said. Violating the order could result in “civil penalties and the suspension or revocation of airmen certificates,” and criminal charges, the FAA said.

The New Jersey orders affect areas in Evesham, Hamilton, Bridgewater, Cedar Grove, Metuchen, North Brunswick Township, Camden, Gloucester City, Westampton, South Brunswick, Edison, Branchburg, Sewaren, Jersey City, Harrison, Elizabeth, Bayonne, Winslow, Burlington, Clifton, Hancocks Bridge, and Kearny.

The latest notices follow numerous sightings of objects that appeared to be drones, which worried New Jersey residents and prompted state and federal officials to investigate and issue several public statements. The FAA last month imposed temporary flight restrictions at the Picatinny Arsenal, an Army research and manufacturing facility, and a Bedminster golf course owned by President-elect Donald Trump.

On December 16, the US Department of Homeland Security, the FBI, the FAA, and the Department of Defense issued a joint statement. The “FBI has received tips of more than 5,000 reported drone sightings in the last few weeks with approximately 100 leads generated,” but evidence so far suggests “the sightings to date include a combination of lawful commercial drones, hobbyist drones, and law enforcement drones, as well as manned fixed-wing aircraft, helicopters, and stars mistakenly reported as drones,” the statement said. “We have not identified anything anomalous and do not assess the activity to date to present a national security or public safety risk over the civilian airspace in New Jersey or other states in the northeast.”

US temporarily bans drones in parts of NJ, may use “deadly force” against aircraft Read More »

Without tuned power profiles, a separate but related feature called the Intel Application Performance Optimizer (APO) couldn’t kick in, reducing performance by between 2 and 14 percent.

Current BIOS updates for motherboards contain optimized performance and power settings that “were not consistently toggled” in early BIOS versions for those boards. This could also affect performance by between 2 and 14 percent.

The fifth and final fix for the issues Intel has identified is coming in a later BIOS update that the company plans to release “in the first half of January 2025.” The microcode updates in that BIOS update should provide “another modest performance improvement in the single-digit range,” based on Intel’s performance testing across 35 games. When that microcode update (version 0x114) has been released, Intel says it plans to release another support document with more detailed performance comparisons.

If a long Intel support document detailing a multi-stage series of fixes for elusive performance issues is giving you deja vu, you’re probably thinking about this other, more serious problem with 13th- and 14th-generation Core CPUs from earlier this year. In that case, the issue was that the CPU could request more voltage than it could handle, eventually leading to degraded performance and crashes.

These voltage requests could permanently damage the silicon, so Intel extended the warranties of most 13th- and 14th-gen Core CPUs from three years to five. The company also worked with motherboard makers to release a string of BIOS updates to keep the problems from happening again. A similar string of BIOS updates will be necessary to fix the problems with the Core Ultra 200S chips.

Intel is testing BIOS updates to fix performance of its new Core Ultra 200S CPUs Read More »

Arm and Qualcomm’s dispute over Qualcomm’s Snapdragon X Elite chips is continuing in court this week, with executives from each company taking the stand and attempting to downplay the accusations from the other side.

If you haven’t been following along, the crux of the issue is Qualcomm’s purchase of a chip design firm called Nuvia in 2021. Nuvia was originally founded by ex-Apple chip designers to create high-performance Arm chips for servers, but Qualcomm took an interest in Nuvia’s work and acquired the company to help it create high-end Snapdragon processors for consumer PCs instead. Arm claims that this was a violation of its licensing agreements with Nuvia and is seeking to have all chips based on Nuvia technology destroyed.

According to Reuters, Arm CEO Rene Haas testified this week that the Nuvia acquisition is depriving Arm of about $50 million a year, on top of the roughly $300 million a year in fees that Qualcomm already pays Arm to use its instruction set and some elements of its chip designs. This is because Qualcomm pays Arm lower royalty rates than Nuvia had agreed to pay when it was still an independent company.

For its part, Qualcomm argued that Arm was mainly trying to push Qualcomm out of the PC market because Arm had its own plans to create high-end PC chips, though Haas claimed that Arm was merely exploring possible future options. Nuvia founder and current Qualcomm Senior VP of Engineering Gerard Williams III also testified that Arm’s technology comprises “one percent or less” of Qualcomm’s finished chip designs, minimizing Arm’s contributions to Snapdragon chips.

Although testimony is ongoing, Reuters reports that a jury verdict in the trial “could come as soon as this week.”

If it succeeds, Arm could potentially halt sales of all Snapdragon chips with Nuvia’s technology in them, which at this point includes both the Snapdragon X Elite and Plus chips for Windows PCs and the Snapdragon 8 Elite chips that Qualcomm recently introduced for high-end Android phones.

Arm says it’s losing $50M a year in revenue from Qualcomm’s Snapdragon X Elite SoCs Read More »

In light of other recent discussions, Scott Alexander recently attempted a unified theory of taste, proposing several hypotheses. Is it like physics, a priesthood, a priesthood with fake justifications, a priesthood with good justifications, like increasingly bizarre porn preferences, like fashion (in the sense of trying to stay one step ahead in an endless cycling for signaling purposes), or like grammar?

He then got various reactions. This will now be one of them.

My answer is that taste is all of these, depending on context.

Scott Alexander is very suspicious of taste in general, since people keep changing what is good taste and calling each other barbarians for taste reasons, and the experiments are unkind, and the actual arguments about taste look like power struggles.

Here’s another attempt from Zac Hill, which in some ways gets closer.

Zac Hill: ACX is so close to getting it right on ‘taste’, but then dismisses the closest (“grammar”) conclusion in favor of a much more elementary interpretation (“priesthood of esoterica”).

Art works by leveraging the mechanics of a given medium to create meaningful experiences for an audience. This is in turn bounded by three things:

—> The nature of what those mechanics are capable of emphasizing

—> The artist’s facility with those mechanics

—> The audience’s ability to meaningfully perceive how the artist is deploying the mechanics.

The standard ‘highbrow/lowbrow’ distinction is basically just a slider on the third variable. Similar to improving at an instrument with practice, the process of considered experience and reflection of art allows a broader and more textured perception and integration of deployed mechanics.

Menswear Guy is great because he lays this process bare for a medium most of us lack familiarity with; Ebert is the same way; Gardner had this effect on me for literature. One value of the critic is explicating some of these mechanical grammars/vocabularies so we don’t have to derive them independently.

The point is that artistic “quality” can in some sense be ‘empirically/objectively’ derived by understanding those three core variables in greater resolution. Obviously this is in practice impossible, which is why discursive reflection rules. But the process is not mysterious.

If we are going with one answer from Scott’s list, it is obviously grammar. The real answer is it is all of them at different times and places.

He points to one key aspect of grammar, which is that you can have different internally consistent grammars, and they are all valid in their own way, but within each you need to follow their logic and spirit, and there is better and worse Quality.

Languages also work this way overall. Or cuisines. So do artistic styles.

So does taste. You can ‘have taste’ or ‘not have taste’ within any type of taste, in addition to having the taste to prefer the right kinds of taste, by being able to properly sort things by Quality, and create and engineer high Quality yourself, and have the preference and appreciation for and ability to notice high Quality.

Sometimes, yes, taste is rotating in the sense of fashion, where everyone is trying to stay one step ahead in the status game, but also there is a skill of doing that while also ‘having taste’ both in general and within each context, which is also being tested.

Also, yes, as we saw in From Bauhaus To Our House, sometimes the underlying logic from which taste is being drawn is, as in modern architecture, a literal socialist conspiracy intended to make our lives worse, with a competition to see who can convince more people to suffer more.

And all of this is being combined, in places like the AI Art Test, with other preferences that are not about taste. And sometimes people are trying to apply the wrong kind of ‘good taste’ test to something in a different grammar, or saying that taste should apply on the meta level between the grammars.

Thus, ‘having no taste’ can mean any combination of these (with some overlap):

Too little ability to distinguish, in a context, what is good versus bad taste.

Choosing taste preferences that are, at a meta level, considered bad taste.

Choosing types of things where Quality and taste have relatively low impact.

Failing to appreciate and take joy in good taste and high Quality.

Caring more about non-Quality aspects, thus often choosing low Q over high Q.

Caring about things ‘people with good taste’ think you shouldn’t care about.

Not playing along with today’s status game or power trip.

Failing to appreciate complex historical context that explains why a particular thing has been done before and is therefore bad, or is commenting on something else and therefore good, or other such things (see the Lantz discussion later).

Within a given grammar and context, I will stand up for taste Platonism and physics. I believe that, for all practical purposes, yes, there is a right answer to the Quality level of a given work, to whether liking it reflects good taste.

That doesn’t mean you have to then care about it in every instance. You certainly get to rank things for other reasons too. My Letterboxd ratings (from 0.5-5 stars) are meant to largely reflect this Platonic form of Quality. And sometimes I think ‘oh this is going to be a 2-3 star movie’ and decide that’s what I want to watch today, anyway. When I differ from the critics, both high and low, it’s generally because of aspects they think you’re ‘not supposed to’ care about, that are ‘outside of taste’ to them and that they think shouldn’t matter if you’re in good enough taste, but that I think should count, and matter for Quality.

I think you greatly benefit from good taste if and only if you are not a snob about it.

As in, you can develop the ability to appreciate what is good, without having disdain for things that are bad. Ebert can appreciate and understand both the great movie and the popcorn flick, so his taste means he wins. But if it meant he turned up his nose at the popcorn flicks, now it’s not clear, and maybe he loses.

Ideally, one has the ability to appreciate all the subtle things that make things in good taste, without recoiling in horror when someone has a bad color scheme or what not.

Never, ever tell anyone to Stop Having Fun, Guys.

In Sympathetic Opposition’s Contra Scott on Taste, there seems to be the assumption that Scott is right that having taste and noticing Quality means noticing flaws and thus having the experience of low Quality things be worse. Their response is to say, if you have taste, then you can search out and experience higher Quality, so it’s fine.

They cite C.S. Lewis and endorse my #4 in what bad taste primarily is – that good taste is the ability to experience the sublime in things. To which I say:

You can have this, while still appreciating low Quality things too.

Also often the failure to experience the sublime in things that people traditionally think are in bad taste, and of low quality, is a Skill Issue.

In particular, I think one skill I have is the ability to experience the sublime and aesthetic pleasure when consuming media that, objectively, is low Quality and in bad taste, that ‘objectively’ sucks, by isolating the elements that have it from the parts that suck, despite noticing the parts that suck.

When done properly, that makes it better, not worse.

I also think that SO hints at the distinction between ‘good taste’ and ‘good.’

When I peevishly, shittily started a twitter fight about the mask picture by saying “it seems like people only find this image beautiful if they agree w the belief it expresses, which in my opinion is a sign of a bad piece of art,” people who disagreed with me said stuff like:

people are talking about it, which makes it good

it makes people feel things, which makes it good

it communicates a difficult concept, which makes it good

Literally no one defended it by saying anything about the image itself. No one was like “Look at the lines, the composition, the colors. I could just stare at it and drink it in.”

…

If these people aren’t having a direct aesthetic experience with this image, then it is just not possible to do so.

Which is why they weren’t saying the picture is good as in ‘in good taste.’ They are saying it is ‘good’ as in ‘fun’ or as in ‘is useful.’

Are they having a ‘direct aesthetic experience’ of its details? Not in the sense SO is thinking, presumably, but they are having a conceptual experience, and they are using it as a tool that serves a purpose. Several stars.

What is going on with AI art? It’s not good as in taste. But it’s good as in pretty.

And for a lot of people, that’s what they want.

This is also my response to Scott’s response to SO. He says:

I think that distinguishing this from the deep love and transformation of highbrow art risks assuming the conclusion – the guy who says Harry Potter changed his life is deluded or irrelevant, but the guy who says Dostoyevsky did has correctly intuited a deep truth. But we believe this precisely because we know Dostoyevsky is tasteful and Rowling isn’t – I would prefer a defense of taste which is less tautological.

So I would say, you can say that you got great aesthetic pleasure from Dostoyevsky’s prose, or you appreciated his deep understanding of character, or other neat things like that. If you say those things about Rowling then I’m going to laugh.

But Rowling still spun a good yarn (over and over again) in more basic ways, there is a lot more demand for that product than there is for Dostoyevsky’s product, and there’s no reason you can’t love Harry Potter or let it change your life. It’s fine. There are things there worth finding.

We also have Frank Lantz contra Scott on taste. It’s quite something to see your past self quoted like this:

Frank Lantz: Art skepticism seems to be a common stance among a lot of rationalist and rationalist-adjacent thinkers. This general attitude ranges from Scott’s sincere attempt to carefully think through his skepticism (he followed up his AI art quiz with a post about modern architecture and a discussion of artistic taste) to Zvi Mowshowitz proudly declaring he would never set foot inside the MoMa and bluntly proclaiming that “an entire culture is being defrauded by aesthetics”.

I care about this because I like these thinkers, and I think they’re missing something important and valuable about art. I would like to be able to defend art, fine art, modern art, as a project, in terms that they would find convincing, but I haven’t figured out how to do that yet.

Perhaps, as a preliminary sketch of such a defense, I would start by calling attention to the dynamic nature of art – its necessary and unavoidable restlessness. Every work of art is both embedded within a process of perception, reaction, evaluation, and interpretation, and also an intervention into this process.

While I stand by my statements there, and I still wouldn’t set foot in the MoMa, and you can see above what I think about modern architecture regarding From Bauhaus To Our House, that doesn’t mean I am against art or appreciating art, in general.

Lantz’s most important contribution to this discussion, as I see it, is to point out that art and taste are largely in response to the desire to avoid the boring and predictable and what has already been done while also matching expectations, and that a lot of artistic choices and good taste emerge from the detailed context of what had existed before and also what came after.

And I think all of that really is legitimate, and investing in understanding that context can pay off, and that earlier works very much are enriched and ‘get a free pass’ in various ways by the fact that they came earlier, and they were original and innovative at the time, and in what they then led to, and so on. There’s an elegant, important dance going on there.

Sometimes.

Other times, taste is functionally being fashion, or it is being a priesthood, and for Modern Art I strongly suspect it’s best classified (in Scott’s taxonomy) as bizarre porn, except in a bad way and as buildings displayed on the street.

I’m going to double down that most – not all, but most – of all this modern ‘conceptual art’ is rather bogus and masturbatory, and mostly a scam or a status game or at best some kind of weird in-group abstract zero-sum contest of one-upmanship, at worst a ‘speculative market in tax-avoidant ultra-luxury hyper-objects, obscene wealth and abject, hipster coolness,’ and also a giant fyou to humanity, and I want it kept locked behind the doors of places like MoMa so I can choose to not set foot in there.

I’m definitely doubling down on Modern Architecture.

I’m not bad at aesthetics, you’re bad at aesthetics, in that you stopped believing in them at all, and tried to darvo and gaslight the rest of us into thinking it was our fault.

Or, I’m not the one who doesn’t care about aesthetics, you’re the one who doesn’t care about aesthetics, and you’re gaslighting the rest of us.

You’re pretending to do grammar when you’re obviously doing something else.

I’m not skeptical of art. I’m skeptical of your particular art, which happens to be the dominant thing called ‘Art’ in some circles. Whereas the people I think of as ‘artists’ today that I admire tend to work with video, or audio, or games, or text, or make visual art within one of those contexts that the capital-A Art World would scoff at.

(Yes, the AI poem most liked in the AI poetry study is horrible slop, and I don’t think you need problematic assumptions to explain why, it’s generic slop with no there there, it’s not particular, it doesn’t make interesting choices, it is just a series of cliche phrases, see, that’s it, I did it, no LLM consult required.)

And in other places, like the stuff Sarah Constantin is describing in Naming the Nameless (interesting historical note: Sarah did eventually leave the Bay, and I think she’s happier for it and that this essay is related to why), they are weaponizing a certain kind of aesthetics as a form of, essentially, fraud and associative vibe-based marketing and an attempt to control people’s perceptions of things like ‘cool’ in ways that falsify their true preferences, for reasons political, personal and commercial. And they are attempting to attack us with the resulting paradox spirits when we try to call them out on this. Yes.

I also think there’s an implicit claim that if you are in good enough taste, enough ‘part of a project,’ then you don’t have to be accessible, you don’t have to stand outside the ‘project,’ and you don’t have to have aesthetic or other value absent your place in that project.

I think that’s very wrong. Doing all of that is also part of your job as an artist and creator. You can sacrifice it in some situations, and certainly you shouldn’t always be able to come in ‘in the middle’ of everything, but this very much counts against you, and reduces not only the reach of the work but also its value even to those who can handle it, because you’re operating without key constraints and that too is important context – you now owe us a worthwhile payoff.

As a writer, I have to continuously strike the balance of accessibility versus repetition, of knowing people don’t read these posts in any particular order. I make trade-offs that I’m learning to improve over time, and everyone else has to make them as well.

This concept seems important:

If you pay close attention to how your own taste operates, you can sometimes catch yourself deciding to like a thing. Sometimes this is because your friends like it, or some cool person you want to impress likes it. But other times it’s because part of you, a good part, a part you trust, recognizes something in the thing, a missing piece for a new person you are in the process of becoming.

You say to yourself “I want to like this thing because it is the kind of thing that the kind of person I want to be likes”. And you put yourself in the right posture to like the thing, build the necessary literacy, make the ritual gestures. But you can’t make yourself like something. Often, your existing preferences, as they already are, stubbornly refuse to budge. And sometimes they don’t.

If you pay close attention to this process, you will eventually see this as the terrain of artistic taste.

Weird to say ‘catch yourself’ as if it’s something to avoid or be ashamed about. I attempt to like things all the time. Ceteris paribus, I would prefer to like as many things (and people!) as possible, while keeping them in proper rank order. Find the good in it. But you don’t want to engage in preference falsification. You don’t want to pretend, especially to yourself, to like things you don’t like or especially things you despise, because you’re ‘supposed’ to like them, or it would benefit you to like them.

I went far enough down the rabbit hole to find this:

Frank Lantz: Wow, I just realized that in the comments to that post, Zvi actually makes the following comment: “The post is explaining why she and an entire culture is being defrauded by aesthetics. That is it used to justify all sorts of things, including high prices and what is cool, based on things that have no underlying value.”

So this attitude I thought was more implicit is actually a deliberate, considered position. I find that kind of exciting, honestly. How would I go about convincing Zvi to change his mind on this question?

In turn, I love Lantz’s attitude here that he finds this exciting, and it’s a lot of why I’m giving him so much consideration.

I presume this post should provide a lot of information on how one might go about convincing Zvi to change his mind on this, and what exactly it is that you might want to change my mind about?

Convince me, essentially, that there is a worthwhile and Platonic there there.

If Lantz wants to take a crack at convincing me, maybe even in person in NYC (and potentially even literally at MoMa), I’d be down, on the theory that given story value it’s hard for that to be an unsuccessful failure.

How do you fit 32 terabytes of storage into a hard drive? With a HAMR.

Seagate has been experimenting with heat-assisted magnetic recording, or HAMR, since at least 2002. The firm has occasionally popped up to offer a demonstration or make yet another “around the corner” pronouncement. The press has enjoyed myriad chances to celebrate the wordplay of Stanley Kirk Burrell, but new qualification from large-scale customers might mean HAMR drives will be actually available, to buy, as physical objects, for anyone who can afford the most magnetic space possible. Third decade’s the charm, perhaps.

HAMR works on the principle that, when heated, a disk’s magnetic materials can hold more data in smaller spaces, such that you can fit more overall data on the drive. It’s not just putting a tiny hot plate inside an HDD chassis; as Seagate explains in its technical paper, “the entire process—heating, writing, and cooling—takes less than 1 nanosecond.” Getting from a physics concept to an actual drive involved adding a laser diode to the drive head, optical steering, firmware alterations, and “a million other little things that engineers spent countless hours developing.” Seagate has a lot more about Mozaic 3+ on its site.

Seagate’s rendering of how its unique heating laser head allows for 3TB per magnetic platter in Mozaic drives. Credit: Seagate

Drives based on Seagate’s Mozaic 3+ platform, in standard drive sizes, will soon arrive with wider availability than its initial test batches. The drive maker said in a financial filing earlier this month (PDF) that it had completed qualification testing with several large-volume customers, including “a leading cloud service provider,” akin to Amazon Web Services, Google Cloud, or the like. Volume shipments are likely soon to follow.

After decades of talk, Seagate seems ready to actually drop the HAMR hard drives Read More »

The Supreme Court petition was filed by the New York State Telecommunications Association, CTIA-The Wireless Association, NTCA-The Rural Broadband Association, USTelecom, ACA Connects-America’s Communications Association, and the Satellite Broadcasting and Communications Association. Cable lobby group NCTA filed a brief supporting the petition.

New York Attorney General Letitia James defended the state law in a Supreme Court brief filed in October. The brief said that when New York enacted its law, the Pai-era FCC “had classified broadband as an information service subject to Title I of the Communications Act. Under Title I, Congress gave the FCC only limited regulatory authority—leaving ample room for States to regulate information services.”

Multiple appeals courts have found “that federal law does not broadly preempt state regulations of Title I information services,” and “Congress has expressed no intent—much less the requisite clear and manifest intent—to preempt state regulation of Title I information services,” the New York brief said. “Applicants’ field preemption claim fails because, far from imposing a pervasive federal regulatory regime on Title I information services, Congress instead gave the FCC only limited authority over information services. Congress thus left the States’ traditional police powers over information services largely untouched.”

It’s unclear when New York might start enforcing its law. The state law was approved in 2021 and required ISPs to offer $15 broadband plans with download speeds of at least 25Mbps, with the $15 being “inclusive of any recurring taxes and fees such as recurring rental fees for service provider equipment required to obtain broadband service and usage fees.”

The law also said ISPs could instead choose to comply by offering $20-per-month service with 200Mbps speeds. Price increases would be capped at 2 percent per year, and state officials would periodically review whether minimum required speeds should be raised.
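As a rough illustration of how a 2 percent annual cap bounds prices over time, the sketch below compounds the maximum allowed increase each year. It assumes the cap applies to the prior year's price and that an ISP raises the price by the full amount every year; the function name `max_capped_price` is hypothetical, and the law's actual mechanics may differ.

```python
def max_capped_price(base_price: float, years: int, cap: float = 0.02) -> float:
    """Upper bound on a monthly price after `years` of maximum annual increases.

    Assumption (not from the statute): the cap compounds on the prior
    year's price, and the provider takes the full increase every year.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return round(base_price * (1 + cap) ** years, 2)


# The two plan tiers described in the article: $15 and $20 per month.
for tier in (15.0, 20.0):
    ceilings = [max_capped_price(tier, y) for y in range(0, 6)]
    print(f"${tier:.0f} plan, years 0-5: {ceilings}")
```

Under these assumptions, even five straight years of maximum increases would add less than $2 a month to the $15 plan.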

Residents who meet income eligibility requirements would qualify for the plans. ISPs with 20,000 or fewer subscribers would be allowed to apply for exemptions from the law.

The New York attorney general’s Supreme Court brief argued that public-interest factors “weigh heavily in favor of allowing” the law, and that it won’t create the economic problems that telco groups warned of. “The three largest broadband providers in New York are already offering an affordable broadband product to low-income consumers irrespective of the ABA, and smaller broadband providers can seek an exemption from the ABA’s requirements,” the brief said.

Big loss for ISPs as Supreme Court won’t hear challenge to $15 broadband law Read More »

Ars has reached out to the lead author, Megan Liu, but has not received a response. Liu works for the environmental health advocacy group Toxic-Free Future, which led the study.

The study highlighted that flame retardants used in plastic electronics may, in some instances, be recycled into household items.

“Companies continue to use toxic flame retardants in plastic electronics, and that’s resulting in unexpected and unnecessary toxic exposures,” Liu said in a press release from October. “These cancer-causing chemicals shouldn’t be used to begin with, but with recycling, they are entering our environment and our homes in more ways than one. The high levels we found are concerning.”

BDE-209, aka decabromodiphenyl ether or deca-BDE, was a dominant component of TV and computer housings before it was banned by the European Union in 2006 and some US states in 2007. China only began restricting BDE-209 in 2023. The flame retardant is linked to carcinogenicity, endocrine disruption, neurotoxicity, and reproductive harm.

The presence of such toxic compounds in household items is important for noting the potential hazards in the plastic waste stream. However, in addition to finding levels that were an order of magnitude below safe limits, the study also suggested that the contamination is not very common.

The study examined 203 black plastic household products, including 109 kitchen utensils, 36 toys, 30 hair accessories, and 28 food serviceware products. Of those 203 products, only 20 (10 percent) had any bromine-containing compounds at levels that might indicate contamination from bromine-based flame retardants, like BDE-209. Of the 109 kitchen utensils tested, only nine (8 percent) contained concerning bromine levels.

“[A] minority of black plastic products are contaminated at levels >50 ppm [bromine],” the study states.

But that’s just bromine compounds. Overall, only 14 of the 203 products contained BDE-209 specifically.
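The proportions above are easy to check. The short sketch below recomputes them from the counts reported in the study; the helper name `share` is just for illustration.

```python
# Product counts by category, as reported in the study summary above.
categories = {
    "kitchen utensils": 109,
    "toys": 36,
    "hair accessories": 30,
    "food serviceware": 28,
}
total = sum(categories.values())


def share(count: int, whole: int) -> float:
    """Return `count` as a percentage of `whole`, rounded to one decimal place."""
    return round(100 * count / whole, 1)


bromine_share = share(20, total)                          # products above 50 ppm bromine
utensil_share = share(9, categories["kitchen utensils"])  # utensils above 50 ppm bromine
bde209_share = share(14, total)                           # products containing BDE-209

print(total, bromine_share, utensil_share, bde209_share)
```

Run as written, this prints a total of 203 products and shares of roughly 9.9, 8.3, and 6.9 percent, consistent with the rounded figures in the text.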

The product that contained the highest level of bromine compounds was a disposable sushi tray at 18,600 ppm. Given that heating is a significant contributor to chemical leaching, it’s unclear what exposure risk the sushi tray poses. Of the 28 food serviceware products assessed in the study, the sushi tray was one of only two found to contain bromine compounds. The other was a fast food tray that was at the threshold of contamination with 51 ppm.

Huge math error corrected in black plastic study; authors say it doesn’t matter Read More »