The game is asymmetrical. Life is not fair. Doesn’t matter. Play to win the game.

Ah, the ultimate ick source. A man expressing his emotions is kind of the inverse of the speech in Barbie about how it’s impossible to be a woman.

It’s easy. A man must be in touch with and transparent about his emotions, but must also be in conscious control over them, without repressing them, while choosing strategically when to be and not be transparent about them, and ultimately be fine, such that we will also be fine if all that information we shared can then be shared with others or used as a weapon.

Express your emotions. No, not those, and definitely not like that. But do it.

That’s the goal. If you say that’s not fair? Well, life isn’t fair, I report the facts rather than make the rules, and the whole thing sounds like a you problem.

Kryssy La Reida: Serious question for men…what is it that makes you feel like you are in a relationship with a woman that you can’t express your feelings to? Or why would you even want to be in one where you don’t have a safe space to do so? If your partner isn’t that safe landing space for those emotions what is the point? Or are you just conditioned to not express them?

Eric Electrons: Many women will often say they want vulnerability in a man, no matter how calmly and respectfully delivered, but treat us differently once they get it, use it against us when upset, joke about it, or share it without our consent to friends and family.

It takes time and experience to truly identify a woman who is trustworthy and knowledgeable enough to handle expressing your feelings to as a man. Especially if she’s a significant other.

Philosophi_Cat: It’s true that many women say they want a man who’s “in touch with his emotions,” yet get the “ick” when he expresses them. This can be bewildering for men, but the issue lies in how emotion is expressed, not in the fact of feeling it.

Yes, women tend to value a man who has emotional fluency and depth, someone who can articulate his inner world and empathise with hers.

But they recoil when a man loses his composure, collapses emotionally and turns to her for emotional containment. The moment she has to soothe or stabilise him, when she must step into the role of his therapist or mother, the polarity between them collapses. She no longer feels his strength; she feels his need. And that is where the “ick” arises from.

So this is the key distinction: Emotional depth does not mean emotional dumping.

A man can speak openly about his struggles while remaining self-possessed and anchored in his own centre. He might say, “I’ve had a rough week and I’m working through some frustration, but I’ll be fine,” rather than dissolving into self-pity or seeking reassurance. He shares what’s real without burdening her with it. His emotions are contained by his own form.

That’s what women respond to: emotional transparency grounded in composure. It signals a robust and stable inner centre. It shows he can hold complexity without being consumed by it.

By contrast, many men, fearing that any show of feeling will make them appear weak, over-correct by suppressing or hiding their emotions entirely.

Robin Hanson: So men, feel emotions only if you fully command them. If you are instead vulnerable to them, you are “ick”. Got that?

Robin’s imprecise here. It’s not that you can’t feel the emotions, it’s that you can’t talk about or too obviously reveal them, and especially can’t burden her with them. Unless, that is, you are sufficiently justified in not commanding them in a given situation, in which case you can do it.

As with many other similar things this creates a skill issue. Yes, we’d all (regardless of gender) like to be fully in touch with and in command of our emotions and in a place where we can own and share all of it securely and all that, while also having a good sense of when it’s wise to not share. That’s asking a lot, so most people need practical advice on how to handle it all.

When you sense potential trouble and the wrong move can cause permanent damage up to and including the ick, risk aversion and holding things back is the natural play. But if you always do that you definitely fail, and you also don’t fail fast. A lot of risk aversion is aversion to failing fast when you should welcome failing fast, which is the opposite of the correct strategy.

So you’ll need to start getting command and ownership of at least some of those emotions, and you should err on the side of sharing more, faster, if you’re in a position to handle it. But it’s also fine to hold other stuff back or be vague about it, especially negative stuff.

You’ll be told that the above is overthinking things. It isn’t.

It is extremely attractive to feel and openly show your emotions and get away with it, if you do it successfully, largely because it is so difficult to do it successfully.

Taylor: wish men understood how attractive it is when they can feel & openly show their emotions instead of acting like a sociopathic brick wall.

Pay Roll Manager Here: Any man reading her tweet, please do not believe her.

Geoffrey Miller: Sexual selection through mate choice can shape traits faster than any other evolutionary process can. If women were really attracted to men who cried a lot, men would have evolved, very quickly, to cry a lot.

Robin Hanson: And if women who actually knew what they wanted and said so honestly were attractive to men, that would have evolved very quickly too, right?

On the evolutionary level, yes, selection is rapid, but even if the effect were extreme, that plays out over centuries and millennia, not decades. So the ‘if this were attractive, evolution would have handled it’ argument only holds for traits that would have been attractive in older worlds very different from our own, in cultures radically different from our own, where selection operated very differently.

Also, a lot of your reproductive success in those worlds (and ours) is about intersexual dynamics and resource acquisition, and several other factors, so a trait can be attractive but still get aggressively selected against.

So while yes, obviously evolution can offer some very big hints and should be considered, you cannot assume that this is predictive of what works now.

‘Can feel and openly show emotions’ is not one thing. There are some ways that being able to feel and openly show emotions is attractive and winning. In other ways and situations it is very much not. Like when you train an LLM, there are a bunch of correlated things that will tend to move in correlated ways, and modifying one thing also modifies other things.

So yes, when you see the player in the clip talk about missing his family, that is (mostly) a good use of feeling and showing emotions at this point (although it wouldn’t have gone as great in the same spot even a few decades ago, I am guessing). But that doesn’t mean that showing that level of emotion and crying on a regular basis will go that well.

This is a skill issue. Being the brick wall is not a universally optimal play. But it is a safer play in any given situation. In many situations it is indeed correct, and at low levels of related skill it is better than the alternatives even more often.

First best is to be in touch with your emotions but be high skilled in managing them and knowing when and how to communicate them to others, and so on. Second best is to be cautious. Long term, yes, you want to pick up this skill.

This is speculative. The theory is that it is fine to mix and match and combine and defy stereotypes, but only via embodying more things rather than fewer things.

Sasha Chapin: So I have a theory that for most people, men and women, peak attractiveness in a hetero context involves high-budget androgyny

Low-budget androgyny: not inhabiting either gendered energy

High-budget androgyny: inhabiting your own fully, and a bit of the other

I notice that the men I know who get the most laid or have the least trouble securing a relationship are men you could see boxing or fishing, but could also do a convincing impromptu tarot reading or w/e

Julie Fredrickson: I’ve generally dressed in a normie feminine manner and leaned into long hair and cosmetics because all my interests are masculine coded. Would this fall into high budget androgyny?

Sasha Chapin: Yes.

Andrew Rettek: I’ve noticed this androgyny binary before, this is a good description of it.

Sarah Constantin: this is in the Bem Sex Role Inventory, which i think is from the 60s. distinguishes “androgynous” (both masculine & feminine) from “undifferentiated” (neither masculine nor feminine.)

i’m pretty undifferentiated lol

but so are Tolkienesque elves so how bad can it be.

I’ve said it before, but it’s important so it bears repeating, because it is one of the fundamentals. Obviously it is not this simple or easy, but on the margin it helps quite a lot. You want to give people the unexpected compliments that mean something:

Ibrahim: telling a hot girl shes pretty is like someone telling YOU, presumably a smart twitter tech guy, ur smart.

if someone told you ur smart ud prob just say thanks.

maybe itll feel slightly good, but it wont leave much of an impression on u, bc no shit ur smart; ur a swe.

same thing for hot girls.

if someone says they r pretty, they drgaf, bc like its so obvious and that compliment doesnt mean that much to them.

give people compliments they dont usually get to stand out. call ur local tech guy hot and call ur local hot girl smart

Alberto Fuentes: Calling a nerd Guy “hot” is a clear indication of the beginning of a scam.

Ibrahim: sigh you’re right.

Heed Alberto’s warning as well, of course: don’t overdo it, and stay wary.

If you’re not sure what kind of compliment someone prefers, you can run tests. Or, in theory, you could just ask?

Ben Hsieh: for awhile i was on this completely psychotic kick where i’d relate this advice (tell pretty girls that they’re smart and smart girls that they’re pretty) and then ASK the girl which compliment they’d prefer

no idea where my head was on that, it’s a wonder i never got stabbed.

Lydia Laurenson: that’s actually hilarious… I feel like I might laugh pretty hard if someone said that to me

Ben Hsieh: …well, which compliment do you prefer?

Lydia Laurenson: 😹 aren’t you married and monogamous

Ben Hsieh: yes, though who can tell how. I will say i’m confident my wife would be totally fine knowing i was asking this question, specifically on condition that it’s not to her.

Lydia Laurenson: my initial answer in any standard social context would be: laugh, deflect, make joke, deflect

my genuine answer: I feel slightly happier when men tell me I’m pretty than that I’m smart. I guess this indicates that I think I’m smarter than I am pretty, and that is true.

Zvi Mowshowitz: new level of ask culture unlocked, I love it.

Lydia Laurenson: it’s a real brain twister whether it’s a neg or not.

I think it’s entirely context dependent whether it’s centrally a neg. Your delivery of the line would matter a lot.

Ruby: The whole “men should pay on first dates” thing gives me the ick and I’ve been trying to put my finger on why. I think it’s some combination of:

- I’m less attracted to men who blindly follow social scripts.
- I have no interest in unequal partnership (except for kinky reasons) and I want my date to appreciate that.
- I dislike the kind of feminism that demands a bunch of advantages for women without accepting the corresponding responsibilities, and it disappoints me when women don’t reject this.

This is not to say that I’m against men ever paying, I just wish we’d reject it as this weird value-laden social norm. My preferred scenario would be to randomize it each time, perhaps weighted more towards the person who is wealthier

Aella: Yeah when I let a guy pay on a date I feel myself putting one foot into escort world where I become suddenly aware that our relationship is transactional.

The transactionalism worry is real as a downside. Despite this, I believe men should very clearly pay on most first dates, for at least five good (related) reasons.

- The man is usually the one doing the asking.
- The man is usually the one doing the planning.
- The man does not want her to have the distraction or bad vibes of thinking about money or having not paid. This is a lot of vibe improvement at low cost.
- The man wants the woman to say yes more often both to the first and subsequent dates, and have positive memories and a positive experience.
- The man wants to send a costly signal of several things.

Thus, given other dynamics present, the man should usually pay for the first date. This is a relatively acceptable way to make the date market clear more often and improve outcomes.

To the extent that the above justifications are broken, such as when the woman is initiating and suggesting the activity, that is an exception.

Consider an obvious example where one party should pay: If you are invited over to someone’s house for dinner, very obviously they should usually not send you a bill, no matter what relationship you have with them or what else you might do or not do. Similarly, I am a big fan of the rule I have with some of my friends that whoever travels the farthest does not pay for dinner.

Very obviously, if you have someone like Ruby or Aella where the vibes run the other way, and not paying hurts their experience? Then you should split or they should pay. It is on her to let him know this. He should reach for and request the check in a way that indicates intention to pay, but in a soft way that is interruptible.

Jeremiah Johnson: So Cartoons Hate Her tested the ‘Men are turned off when women have high paying jobs’ hypothesis. It turns out that’s just completely untrue – greater percentages of men swipe right when the same woman is listed as having a higher-paying job.

Ella: This seems to also dispel the common meme that men “swipe right on literally anything”, given that she at most got a 71% rate (and as low as a 58% rate) despite being in my estimate quite conventionally pretty.

Not only did she get more matches presenting as a higher earner, the matches were on average higher earners themselves.

Lila Krishna pushes back that CHH was asking the wrong question. Yes, you can get as many or more initial swipes, but that is not the goal. The problems come later if you don’t want to give up your ambitions, she says, with personality clashes and emasculation and resentment and the male expectation that you’ll still do all the chores and childcare which rules out deep work.

The ability to be ambitious is valued, she asserts, but actually still being ambitious isn’t. See Taylor Tomlinson’s bit about how she wants to marry a stay-at-home dad, but not a man who wants to be one, so she needs to find a successful man and destroy him, and she’s kind of into him resenting her for it.

Which is all entirely compatible with CHH’s findings, but also means that you’re strictly better off making more money rather than less.

Shut Thefu: Don’t have my car today so requested a colleague if he can drop me on the way.

His exact reply, “sorry dear, my wife may not appreciate it.” Men, it is really that simple.

Aella: Are the monogs ok

J: But yet you wanted to put him in that position. 🤔

Unfair: If dropping a colleague on your way is such an issue with your partner, you’re in a toxic relationship that will end badly one way or the other

Sammy: is it not entirely offensive to people that their partners are out there implying their relationship can’t survive a simple favor lmao?

“sorry my marriage is weaker than a car ride home with a woman. for some reason this is neither embarrassing nor sexist”

Books By J: I was confused too when I saw the post earlier lol

Could never be me. I don’t care if my husband drives a coworker home? Just let me know? Communicate? So I know you’ll be a little late so I’m not worried you are in a ditch somewhere?

Being worried that someone who is a little late is dead in a ditch? That’s also paranoia.

I don’t think this is automatically one of those ‘that which can be destroyed by the truth should be’ or ‘if I can take your man he was never your man’ situations. Circumstances drive behavior, and yes it is entirely plausible that you could have a good thing worth preserving that would be put at risk if you put yourself in the wrong situation, even one that is in theory entirely innocent. Opportunity and temptation are not good things in these spots and a tiny chance of huge downside can be worth avoiding.

Most couples most of the time do not need to worry on this level, and certainly having to worry that way is a bad sign, but play to win.

Also from the same person recently:

Shut Thefu: I just asked my boyfriend today what he’s most afraid of and I thought he was gonna say “I’m afraid of losing you” but he literally said “YOU” 😭😭

And for fun:

Now that I actually have a comfortable amount of money, I can say that it does indeed buy happiness. The guilt free occasional food delivery, being able to afford going out w friends, buying something that makes life easier, health appointments!, hiring a service. Y’ALL THEY LIED

She’s somehow cracked the Twitter code, she has 1584 followers and half her posts recently break a million views. It’s amazing.

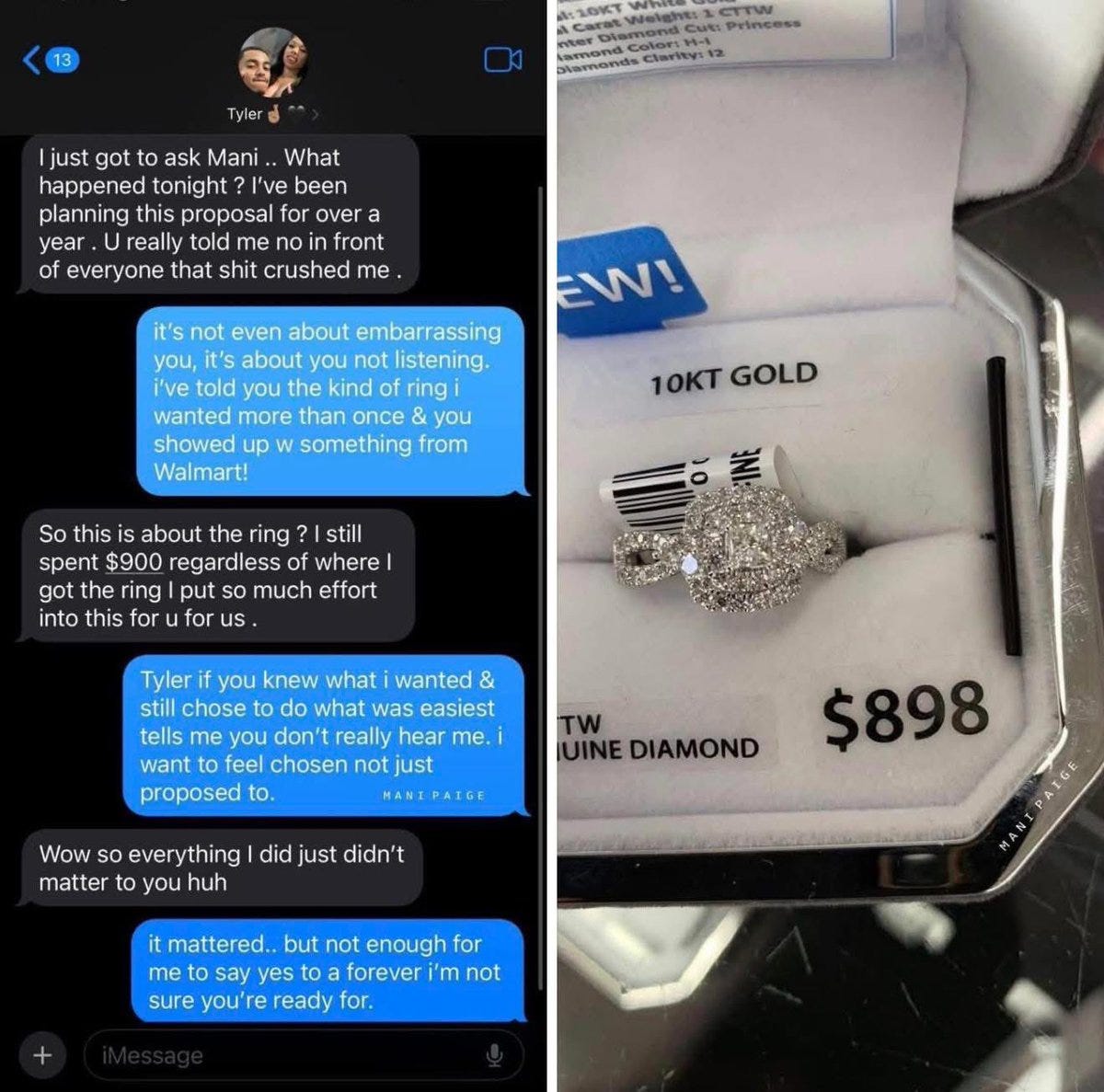

There’s a note saying this was engagement bait but it’s a scenario either way.

At least one bullet was dodged here. The question is which one, or was it both?

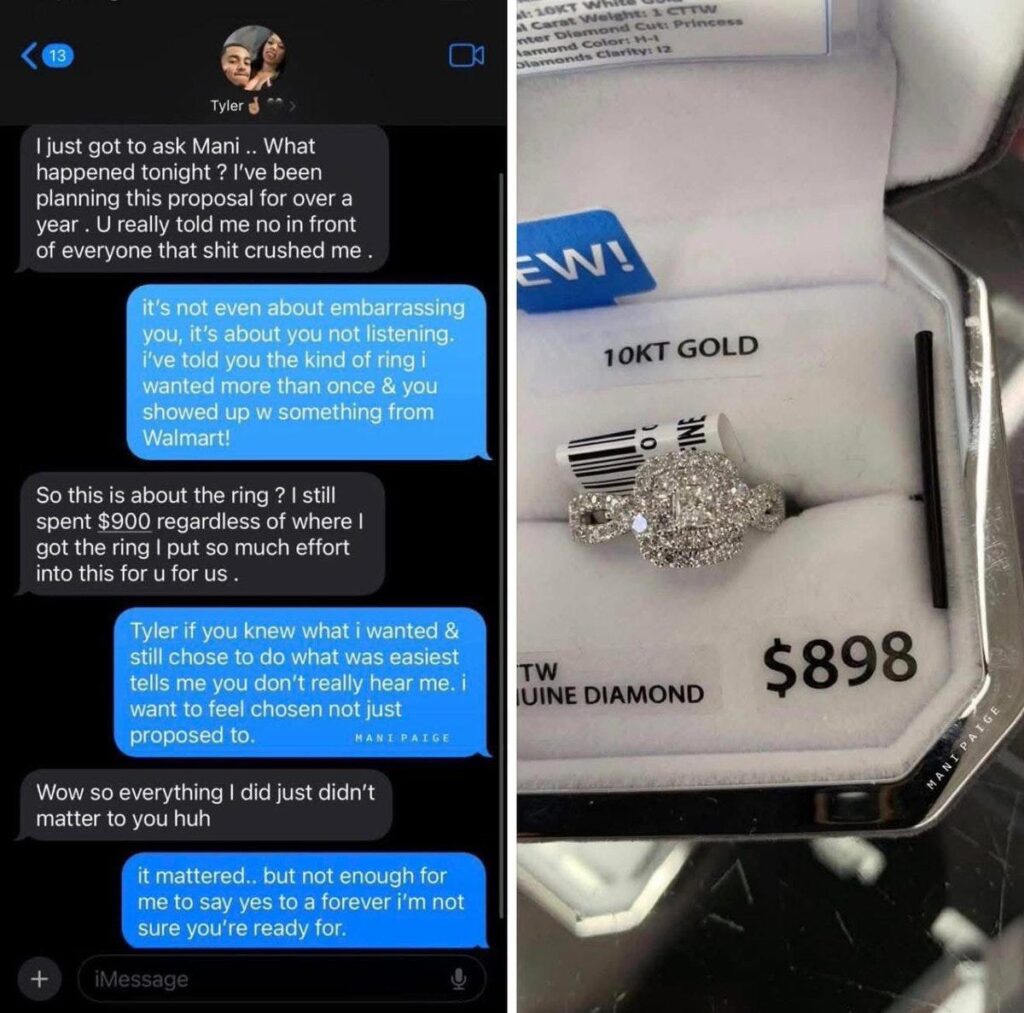

Shannon Hill: This poor dude planned his proposal and she said NO in front of all of their family and friends because the ring was only $900 and came from Walmart.

Personally, I think he dodged a bullet.

I’d say that if this is real both of them dodged a bullet. It’s a terrible match. She was willing to crush him in front of everyone over this and then doubled down, saying that she didn’t feel chosen even though he’s been planning this for a year. In particular, she also said ‘something from Walmart’ rather than saying it was the wrong type of diamond, which I’d respect a bunch more.

But also, dude, look, this is your moment, you planned this for a year, you don’t leave the freaking price tag and Walmart label in the box, what the hell are you doing. And yeah, you can say that doesn’t matter, but that type of thing matters a lot to her.

Nikita Bier: If you get upgraded on a flight but your girlfriend/wife doesn’t, do you downgrade and both suffer or do you take the upgrade? I need an answer in 5 minutes.

(Even) Robin Hanson: You give it to her.

donnie: what’s the purpose of the trip?

Nikita Bier: Honeymoon.

Matthew Yglesias (being right):

- If it’s your girlfriend you should either take the upgrade and break up with her soon, or else quietly decline it and propose soon.
- If it’s your wife, you as a gentleman offer her the upgrade and she’ll probably decline and let you take it.

2 The Great: My legs are so long I literally cannot sit straight in domestic economy. I got offered the upgrade. I offered it to my 5’4” now ex-girlfriend to be polite and she took it.

Matt Yglesias: What’re you gonna do.

What you are going to do is realize that if she takes the upgrade, that information is far more valuable than the upgrade. Hence the now ex part of the girlfriend.

There are situations in which sitting apart is not worth the upgrade, and a honeymoon plausibly counts as one, but they are rare. Mostly you want to offer her the upgrade. The value of doing that (and the value you lose by not doing it) greatly exceeds the actual experiential benefits even if she says yes, and she often says no, in which case you also get valuable information.

One of the big classics.

Peter Hague: Wife:

Me: ?

Wife: I don’t want !

How do you get past this dynamic?

Yes, I get the idea that she wants emotional support. But I want to solve problems – why isn’t that equally valid?

Gretta Duleba: No standard worksheet but I’d suggest asking a question such as

– How much of this feels under your control vs. not?

– What is it about this situation that feels especially tricky?

– What’s the best case scenario here? Worst case?

– What kind of support would help you the most?

Uncatherio: Wait ~3 mins between giving emotional support and offering

Both sides of the Tweed: Wife:

Me:

Wife:

This really is husbanding 101.

Russel Hogg: Everyone here is giving you a solution but you seem to just want us to share your pain!

Most of you already know this, but perhaps a better framing is helpful here?

So I would reply: Wife’s problem is not it is , and so you are trying to solve the wrong problem using the wrong methods based on a wrong model of the world derived from poor thinking and unfortunately all of your mistakes have failed to cancel out.

You need to offer a to at which point the problem changes to , which may or may not then be . As in, no problem.

Hello, human resources.

Chronically Drawing (Reddit): I have no idea how I feel about what he told me. I want to think it’s cute that he cared this much, but it’s just coming off as creepy and I feel lied to.

He got drunk because we were celebrating my first successful day at my clinicals and he ended up saying something along the lines of “could you believe we wouldn’t be this happy if I hadn’t watched you for so long?” To which I was confused and didn’t know what he meant.

Well, I had worked at a local library for two years before we met, during college, and apparently he saw me there but didn’t actually talk to me. He just would watch me and listen in on my conversations with the people I was checking out and with my coworkers to figure out what I liked. Then he apparently followed me and found the coffee shop I frequented. All this time I thought we had a sweet first meeting story. He accidentally bumped into me, apologized, and offered to buy me coffee for the trouble.

He told me what he was ordering and it was the exact same thing I always get and I thought it was an amazing coincidence, I joked that it was fate and we spent like an hour talking over coffee. I feel so stupid. Apparently it was similar to a scene in a book that I had read and told my coworker I had thought was cute.

I’m just so frustrated, like why would you do this? And how much of our year and a half relationship is a lie.

The original response thread I saw this from said this was romantic and asked ‘you thought a meet cute was organic in 2025?’ Yes, meet cutes can still be organic, or involve a lot less stalking and deception.

The main problem with mostly harmless versions of such things is that they strongly correlate with and predict future very much not harmless versions of those and other things. Which is exactly what happened in this case. He got abusive and threatening and clearly was a physical danger to her, she had to flee her apartment. Fortunately it sounds like she’s okay.

A potential rule to live by here would be: don’t do anything you wouldn’t think the other person would be fine with in a hypothetical. Another obvious one is: if you think this would make people think you were a crazy stalker person if they found out, then don’t do it, even if you think it wouldn’t mean that.

You don’t want the rule to be ‘would be fine with it if they knew everything,’ because knowing can ruin the effect. For example, one sometimes needs to Perform Vacation (or another occasion or action) and present as if one is happy in context, and they want you to do this if needed but it wouldn’t work if they outright knew you were faking it.

There certainly is also a class of ‘do your research’ strategies where you would be okay with someone doing this as long as they kept it to themselves how they found out.

As many noted, the (often far more intensive) gender flipped version of this is common, and guys are remarkably often entirely fine with it (including many cases where it goes way too far and they shouldn’t be, but also many cases in which it is totally fine). This is not ‘fair’ but the logic follows.