The focus this time around is on the non-academic aspects of primary and secondary school, especially various questions around bullying and discipline, plus an extended rant about someone being wrong on the internet while attacking homeschooling, and the latest on phones.

If your child is being bullied for real, and it’s getting quite bad, is this an opportunity to learn to stand up for yourself, become tough and other stuff like that?

Mostly no. Actually fighting back effectively can get you in big trouble, and often models many behaviors you don’t actually want. Meanwhile, the techniques you would want to use against a real bully outside of school don’t work here.

Schools are a special kind of bullying incubator. Once you become the target it is probably not going to get better and might get way worse, and life plausibly becomes a paranoid living hell. If the school won’t stop it, you have to pull the kid. Period.

If a child has the victim nature, you need to find a highly special next school or pull out of the school system entirely, or else changing schools will not help much for long. You have to try.

It seems rather obvious, when you point it out, that if you’re going to a place where you’re being routinely attacked and threatened, and this is being tolerated, the actual adult or brave thing to do is not to ‘fight back’ or to ‘take it on the chin.’ The only move is to not be there.

In my case, I was lucky that the school where I was bullied went the extra mile and expelled me for being bullied (yes, you read that right). At the time I was mad about it, but on reflection I am deeply grateful. It’s way better than doing nothing.

It was years past when sensible parents would have pulled me out, but hey.

Peachy Keenan: I can’t even look at photos of a little boy who killed himself because of “severe bullying” because I get too upset, and I am begging parents: if your child is getting bullied, you need to WALK. You need to GET THEM OUT.

This is your only job, and you must not fail. Teasing is one thing, but if your child has become the class target, there is no fix except to rescue them at the first sign of trouble.

Mason: At the heart of this is a widely held but quiet conviction that the regular nastiness a lot of kids put up with is some kind of necessary socialization/character-building process right up until a kid starts falling apart. Parents’ frogs are boiled. They should tolerate MUCH less.

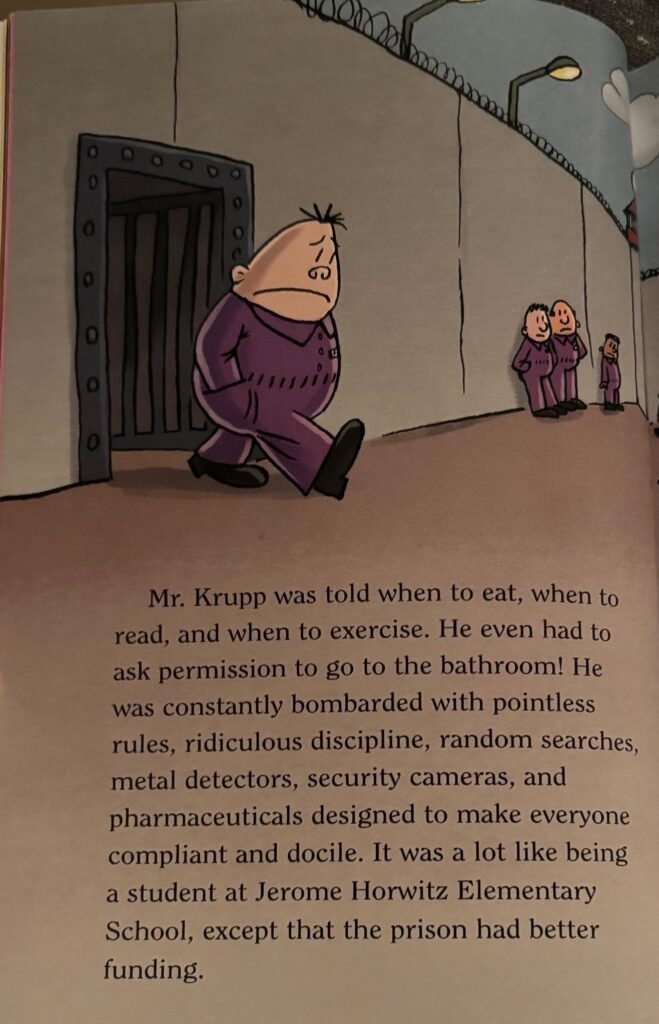

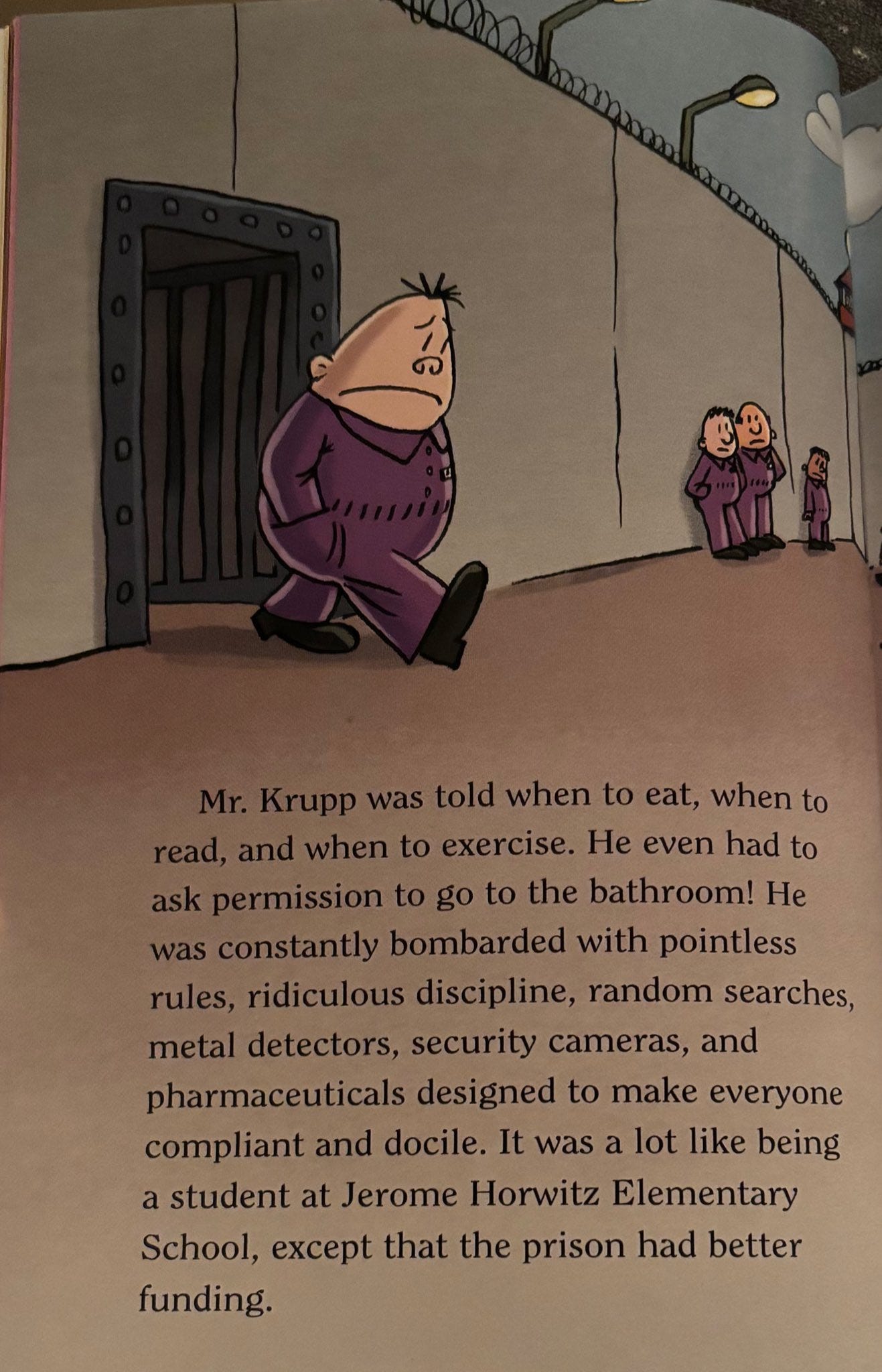

It is tragic that often getting your child out of there will be very difficult. Our society often does not let you choose your prison on the cheap if the current one involves too much violence. But yeah, at some point you pull them out anyway. If you can’t find somewhere else to place them, let them study on their own, especially now with LLMs.

A story: School refuses to suspend a disruptive student who has no intention of passing any classes and often does not even attend. There’s pressure to ‘keep the suspension rate down,’ so the metric is what gets managed. And they refuse to do anything else meaningful, either.

Their best teacher, who is the only one bothering to write the student up, gets assigned all the problem cases because she is the best, and is told to essentially suck it all up. Finally she gets fed up and moves to another school, and the faculty continues falling apart from there.

Also Mihoda points out that the story includes ‘while many students are content to play quietly on their phones all period,’ and it turns out phones are totally banned from the classroom but students ignore this and there are no consequences. So it sounds like once you mess up the metrics that badly, there is no way to give the students any meaningful incentives or reasons to change their behaviors. You might as well stop pretending you are running an educational institution rather than a babysitting service.

The weirdest part of all this is that OP reports the student here could produce work at grade level when he wanted to. That only raises further questions.

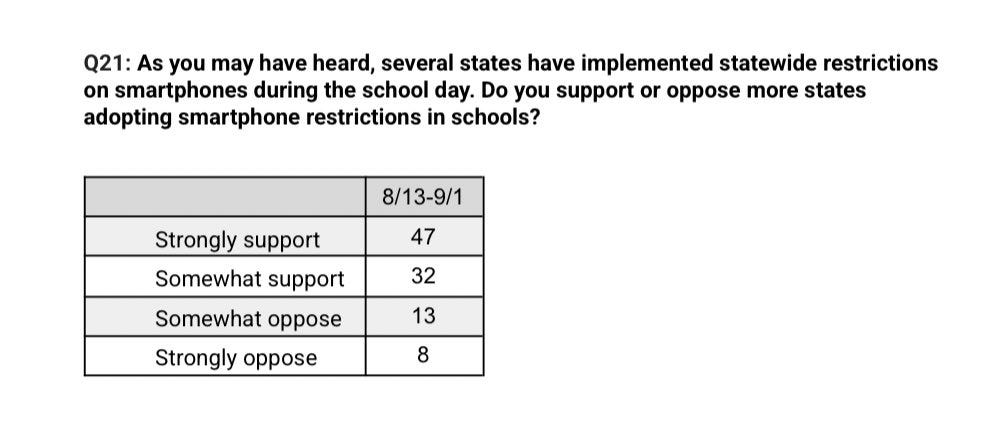

Banning phones in schools is very popular, maybe straight up pass a law? Shouldn’t democracies do things that have this level of support?

Texas mandates all schools ban phones. Yes, I am aware there are other items on the list at the link, but I have nothing useful to add about them.

A very obvious reason to believe you should ban phones is that it is the people who are most elite and most intertwined with phones and tech who most want their own kids off of phones.

Paul Schofield: I’d bet that within a decade, wealthy parents will be sending their kids to elite private schools that market themselves as low tech (no phones, screens, AI, etc.) and we’ll end up scrambling to figure out how to make this kind of education available to marginalized students.

Maia: Mark Zuckerberg’s kids have, by his own admission, very limited screen time and no public social media. He sends them to a screen-free school where expert tutors teach small class sizes. Is that because he’s stupid and doesn’t recognize the educational value of his own creations?

At a bare minimum, it seems very obvious that letting kids use screens during classes will end poorly? Yet they do it anyway, largely because lectures are such an inefficient delivery mechanism that the kids aren’t motivated enough to notice that they’re giving up what little learning would actually take place otherwise.

Tracing Woods: One of the most important messages of serious education research:

“students are really bad at knowing how learning happens”

Carl Hendrick: What happens when you let students manage their own screen time in class? Most don’t, until their grades suffer.

– Off-task device use (email, texting, social media) was significantly linked to lower scores on the first exam.

– Later in the semester, this relationship weakened, suggesting students may have changed behaviour based on feedback.

– Texting is the most common and impactful distraction

– this last point (highlighted) is interesting and again points to the fact that students are really bad at knowing how learning happens.

To be fair to the students I suffer from the same problem, where I am tempted by distractions during meetings and television shows and social gatherings and basically constantly. It’s rough.

Texting in particular seems terrible, because it yanks your attention actively rather than passively, and responding quickly and well has direct obvious implications. If you could somehow ban texting and other things that push, that would probably do a large portion of the work.

I also would watch out for correlation not being causation. There are obvious reasons why being a poor student or otherwise not likely to learn from a class would cause your device time in that class to rise.

There is another recent RCT on banning smartphones. Grades only increased by 0.086 standard deviations. If that’s all this was, then yeah it’s a nothingburger. Note these other results from the abstract:

Importantly, students exposed to the ban were substantially more supportive of phone-use restrictions, perceiving greater benefits from these policies and displaying reduced preferences for unrestricted access. This enhanced student receptivity to restrictive digital policies may create a self-reinforcing cycle, where positive firsthand experiences strengthen support for continued implementation.

Despite a mild rise in reported fear of missing out, there were no significant changes in overall student well-being, academic motivation, digital usage, or experiences of online harassment. Random classroom spot checks revealed fewer instances of student chatter and disruptive behaviors, along with reduced phone usage and increased engagement among teachers in phone-ban classrooms, suggesting a classroom environment more conducive to learning.

Context (in the comments): From the paper: “For example, the difference between having an average teacher and a very good teacher for one academic year is roughly 0.20 SD – an effect size that is considered large”. This was a one-semester intervention.
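To put the two effect sizes from that comment on a common footing, here is a rough back-of-envelope sketch. It assumes (my assumption, not the paper's) that the teacher-quality effect accrues linearly over the year and that a school year is two semesters.

```python
# Back-of-envelope comparison of the two effect sizes quoted above.
# Assumption (not from the paper): the teacher effect accrues linearly
# over the year, and a school year is two semesters.

PHONE_BAN_EFFECT_SD = 0.086      # one-semester phone ban, from the RCT
GOOD_TEACHER_EFFECT_SD = 0.20    # average -> very good teacher, one full year
SEMESTERS_PER_YEAR = 2           # assumed


def per_semester(effect_per_year: float, semesters: int = SEMESTERS_PER_YEAR) -> float:
    """Spread a full-year effect evenly across semesters (linear assumption)."""
    return effect_per_year / semesters


teacher_per_semester = per_semester(GOOD_TEACHER_EFFECT_SD)
ratio = PHONE_BAN_EFFECT_SD / teacher_per_semester

print(f"Teacher effect per semester: {teacher_per_semester:.3f} SD")
print(f"Phone ban as share of that:  {ratio:.0%}")
```

Under those assumptions, one semester of the ban is worth most of what a semester with a very good teacher rather than an average one would be worth.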

If students habitually checking their phones and being on their phones during class, resulting also in less chatter and disruptive behavior, mattered so little that its impact on learning could be well-measured by a one time 0.086 standard deviations in grades, then why are students in classes at all?

This is a completely serious question. Either classes do something or they don’t. Either we should make kids go to schools and pay attention or we shouldn’t.

The comments here, all of them, assert that banning phones is important, overdetermined and rather obvious. They’re right, and it’s crazy that this kind of support does not then result in phones being banned more consistently.

Hank (in the comments at MR): We recently removed our 12 year old son’s cell phone privileges indefinitely to improve focus on school work and due to shoddy listening at home. After two weeks, I can report:

– significantly improved focus *and patience* when doing homework. With the siren song of the phone gone, he is better able to just sit and focus on immediate tasks.

– much better two-way communication between parents and child, as opposed to absent-minded half answers we got when cell phone was present.

– big increase in time spent outside playing with neighborhood kids.

– more time simply spent thinking. When he’s not engaged elsewhere, he lays on the porch, presumably processing his day or just thinking big thoughts.

– more offers to help the household run, like walk dog.

– more questions for us about our day and what we do in our jobs. this rarely happened before.

We did not know what to expect, but I don’t need a study to know it’s the best decision we made for our child all year.

The obvious way to explain this is that grades are effectively on curves. When you ban phones the curve moves up, and it looks like you don’t see much improvement.
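A toy sketch of that mechanism, with made-up scores and a hypothetical rank-based grading rule: if grades are handed out by position within the class, a gain shared by everyone leaves every grade where it was.

```python
# Toy illustration: if grades are assigned by rank within a class (a curve),
# a uniform gain in learning leaves every student's grade unchanged.
# All numbers and the grading rule are made up for illustration.

def curve_grades(scores: list[float]) -> list[str]:
    """Assign letters by rank: top quarter A, then B, then C, bottom quarter D."""
    letters = "ABCD"
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    grades = [""] * len(scores)
    for rank, idx in enumerate(order):
        grades[idx] = letters[min(rank * 4 // len(scores), 3)]
    return grades


before = [52.0, 61.0, 68.0, 74.0, 79.0, 83.0, 88.0, 95.0]  # hypothetical raw learning
after = [s + 5.0 for s in before]                          # everyone learns a bit more

print(curve_grades(before))
print(curve_grades(after))
```

Both lines print the same grades, even though every student in the second list knows more. Real grading is not a pure curve, so this only shows the direction of the bias, not its size.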

The opposite is also possible: Claude points out that teacher perceptions could be causing higher grades, since we don’t see changes in self-reported perceived learning or academic motivation.

I would still bet on this being an undercount.

Parents are often the ones pushing back against phone bans in schools, because parents want to constantly surveil and text their kids, and many care more about that than whether the kids learn.

Himbo President: I never want to step

Matthew Zeitlin: teachers are truly braver than the troops, i don’t understand how anyone does the job anymore

Tetraspace: I do want to step on parents’ toes and consider “parents want to surveil their kids” a point that cuts against allowing phones in schools (though dominated by student preferences)

Mostly the good argument for and against is “students want to coordinate on a no-phones equilibrium but being one of a few that don’t use their phones means you miss out, so make school a phones tsar” vs. “maybe not all students, though, so let them organize that among themselves.”

One teacher banned phones in her classroom, reported vastly improved results including universally better student feedback.

Tyler Cowen attempts to elevate Frank from the comments to argue for phones, saying taking away phones ‘hurt his best students,’ and adds that without phones how can you teach AI? I have never seen a comments section this savage, either in the content or in the associated voting, starting with the post asking him to prove his claim to be a teacher (which he does not do).

Most of all, it was this:

Lizard Man: All of these seem like arguments against school, not arguments for phones.

If you think that the smartest students are hurt because they should be on their phones instead of in class, okay, well, why are they in class?

If you say you cannot possibly learn AI without a phone, one has three responses.

- Have you not heard of computers?
- In the middle of any given class?
- Do you think what the students are doing with their phones is learning?
  - Pretty obviously this is not what is happening. No one reports this ever.
  - If they somehow are doing this, are they complementing what’s in the class, or are they substituting for it? Again, what are you even doing?

Can I imagine a world in which phones benefit students because they are asking the AI complementary questions during classes the way Tyler would use one? Sure. There presumably exist some such students. But to argue against banning phones you have to effectively make an argument against requiring school in current form.

The argument ‘some kids have no one to talk to and taking away their phone is cruel’ is even stupider. First, if a kid has no one physically there to talk to ever, that’s a different huge failure, and again why does this person go to school, but also shouldn’t they be learning during the school day, not chatting with buddies via text? We really think it’s a deprivation to live like everyone used to until after the final bell?

A new study shows substantial impacts from an in-school cellphone ban.

David Figlio & Umut Ozek: Cellphone bans in schools have become a popular policy in recent years in the United States, yet very little is known about their effects on student outcomes.

In this study, we try to fill this gap by examining the causal effects of bans on student test scores, suspensions, and absences using detailed student-level data from Florida and a quasi-experimental research strategy relying upon differences in pre-ban cellphone use by students, as measured by building-level Advan data. Several important findings emerge.

First, we show that the enforcement of cellphone bans in schools led to a significant increase in student suspensions in the short-term, especially among Black students, but disciplinary actions began to dissipate after the first year, potentially suggesting a new steady state after an initial adjustment period.

Second, we find significant improvements in student test scores in the second year of the ban after that initial adjustment period.

Third, the findings suggest that cellphone bans in schools significantly reduce student unexcused absences, an effect that may explain a large fraction of the test score gains. The effects of cellphone bans are more pronounced in middle and high school settings where student smartphone ownership is more common.

The proposed mechanism for absences seems to be that cellphones were previously used to coordinate or plan absences, which the students could no longer do. The adjustment period, during which suspensions are a problem, makes sense, and also helps explain some of the negative results elsewhere. Alternatively, students might see school as less pointless.

Another story: Student suspended for three days for saying ‘illegal alien,’ in the context of asking for clarification on vocabulary, potentially endangering an athletic scholarship:

During an April English lesson, McGhee says he sought clarification on a vocabulary word: aliens. “Like space aliens,” he asked, “or illegal aliens without green cards?” In response, a Hispanic student—another minor whom the lawsuit references under the pseudonym “R.”—reportedly joked that he would “kick [McGhee’s] ass.”

This was Reason magazine, so they focus on whether this was constitutional. I’d prefer to ask whether this was a reasonable thing to do, which it obviously isn’t given the context.

On the law, it seems schools can punish ‘potentially disruptive conduct.’

So that means that if other students could respond by being disruptive, then that can be put on you, whether or not that response is reasonable.

Thus, punishing people who get bullied for causing a disturbance. If they weren’t asking for it then the bullies wouldn’t be going around being disruptive. This is remarkably common, and also was a large portion of my actual childhood.

This is then amplified by the problem that many actual disruptors care a lot less about punishment than others with more at stake, and in many cases they even get a full pass anyway, so the opportunities for asymmetrical warfare are everywhere.

One must deal with what is taught and done in practice, not in theory.

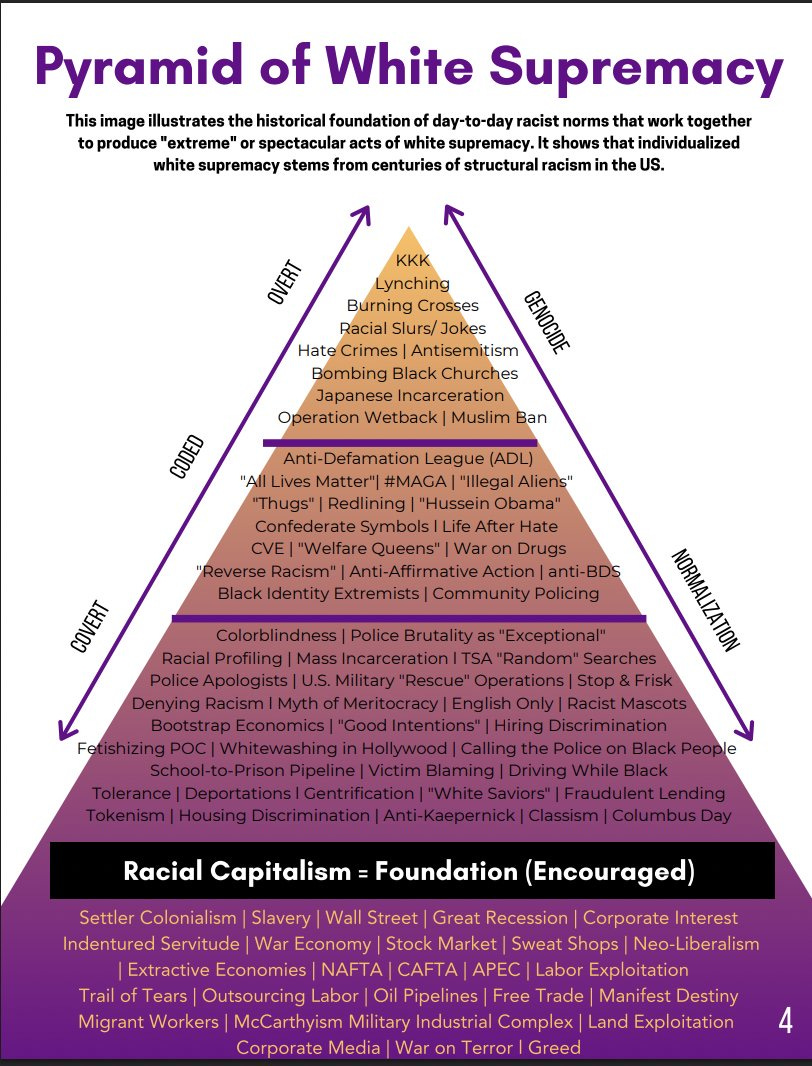

So if this pyramid is being used in the Harvard School of Education, and it straight up lists “Free Trade” as part of a “Pyramid of White Supremacy” in the same category as literally “Slavery” then, well, there is that.

I wish this was more of a scam, the actual events are so much worse than that.

Kane: I finally got the public records request back.

SF Public Schools (@SFUnified) paid $182,000 to a consultant, while already in a deficit, to implement “grading for equity”.

The advice: make 41% a passing grade, and stop grading homework.

Equity achieved.

Our public schools @SFUnified agreed to pay the “equity consultant” $380/hr plus $14,800 in expenses.

In the emails to @SFUnified, the “equity consultant” was bragging that after “equity” training, teachers would not count tardiness nor penalize not doing homework at all.

This is what our public schools are wasting taxes on. Here are all >120 pages of emails and invoices regarding @SFUnified deciding to waste taxes on “equity” consultants and blow up the deficit even more.

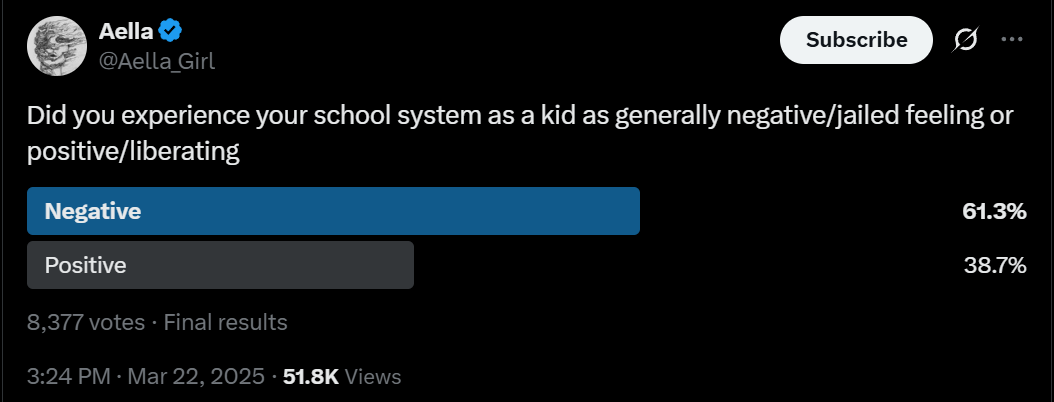

As a parent, school or anyone else, you need help from others to make your rules stick. In this case, the babysitter gave a 4 year old 11 packs (!) of gummy bears, because ‘she kept asking for more.’ We then get the fun of Aella wondering exactly why this is bad.

Your periodic reminder that the school shooting statistics are essentially fake.

T. Greer: What is the stupidest or most embarrassing wrong fact you have tweeted?

Paul Graham: Just today I tweeted a graph claiming there were 327 school shootings a year in the US in 2021. Turned out the source was using a very broad definition of “school shooting,” and that there were actually 2 in the usual sense of the phrase.

Yes, two is two too many and all that. But it drives home the insanity of traumatizing the entire generation in the name of ‘active shooter drills,’ or using this as a reason students need to have phones.

A school shooting is a plane crash. It happens, it is highly salient, it is tragic, and you should live your life as if it will never, ever happen to you or anyone you know.

I’ve said it before, but it bears repeating because they keep happening.

Rep. Marie Gluesenkamp Perez (link has video): There is broad consensus affirming what parents already know — mandatory active school shooter drills are deeply traumatizing for children and have no evidence of decreasing fatalities.

We should not use taxpayer dollars to mandate kids’ participation in ineffective strategies from the 1990s. Parents deserve the right to opt their kids out.

My amendment affirming that passed the Appropriations Committee on a bipartisan basis last night, and I’ll continue working with colleagues on both sides of the aisle to move this legislation forward.

Kelsey Piper: she’s right and should get a lot of credit for coming out and saying it.

mandatory school shooting drills are security theater. they don’t keep kids safe and they may well make school shootings more available to troubled kids as an idea. they are not a good use of taxpayer dollars and we can simply stop.

this amendment just lets parents opt out, where I want to shut the industry down entirely, but it’s still an improvement!

Schools will schedule tons of breaks all over the place, only be open half the time, waste your child’s time the bulk of every day, give them very little individual attention, kick your kid out if they think he might be sick, and then react in horror to the idea that you might not have attending every remaining day that they choose to be open as your highest priority.

The Principal’s Office: It’s 2025 and I actually have parents trying to defend pulling their child out of school for a vacation. I get a death. I get granny is turning 100 and lives out of state. But to get a cheaper vacation- nope. Can’t ever support that.

To be fair to TPO, he clarifies that unique experiences are different, and his objection is when this is done to get ‘lower prices.’

Well, I say with my economist hat, how much lower? How much should a parent pay to not miss one day of school? What happens if other things in life don’t line up perfectly with today’s random crazy schedules?

The thing is, this is all over the educational system, where schools including colleges will absolutely throw fits at the idea that you might have something more important to do if you try to defend that.

On the other hand, you can also simply skip school and basically get away with it, and ever since Covid we’ve had quite a lot of chronically absent kids and there isn’t much the system seems to be able to do about it.

A six word horror story: Parents see all grades right away.

Clem: We are now getting our kids’ grades sent directly to our phones after every assignment/test and woo boy am I happy that I grew up in the 90s with very limited internet.

This sounds like absolute hell for a large fraction of students. In case you don’t remember childhood, imagine if every day your boss called your spouse to report on every little thing you did wrong, only way worse.

Texas enacts school choice law giving parents $10k per year (and up to $30k/year for disabled students) for private schools or $2k per year for homeschooling.

Not everyone agrees, but many do (obviously biased sample, but still.)

(The null hypothesis, via Arnold Kling, is that no educational interventions do anything at scale.)

Five exceptions I am confident do count are:

- Children getting enough sleep.
- Air conditioning. Being too hot makes it hard to learn. AC mostly fixes this.
- Air filtering improves ability to think and rate of learning.
- Not missing massive amounts of school without any attempt at substitution.
- Free lunch, even though there’s no such thing, seems like another easy win.

Yet we often by law force children to get up early to get to classrooms without AC or air filtering, and basic lunches are often not free.

I believe we should ban phones, but let’s start with not banning sleep or AC?

On the question of missing massive amounts of school, from October 2019: Paper says being exposed to the average incidence of teacher strikes during primary school in Argentina ‘reduced the labor earnings of males and females by 3.2% and 1.9% respectively’ due to increased unemployment and decreased occupational skill levels, partly driven by educational attainment.

This was a huge number of teachers’ strikes: the average total loss in Argentina from 1983 to 2014 was 88 days, or half a school year. Techniques here seem good.

Compare this to a traditional ~10% ‘rate of return on education.’ This is ~2.5% for missing about half a school year along the way, or half the effect of time at the end.
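The arithmetic behind that comparison, as a rough sketch. It assumes the ~10% figure is a per-year return that scales linearly to a fraction of a year, and it uses a simple average of the male and female estimates to get the ~2.5%. Both are simplifications for illustration, not something the paper states.

```python
# Rough arithmetic behind the comparison above.
# Assumptions (mine): the ~10% 'rate of return' is per full year of schooling
# and scales linearly; the male and female estimates are simply averaged.

RETURN_PER_YEAR = 0.10            # traditional ~10% figure
YEARS_MISSED = 0.5                # ~88 strike days, about half a school year
EARNINGS_LOSS = {"males": 0.032, "females": 0.019}  # from the paper

expected_loss = RETURN_PER_YEAR * YEARS_MISSED
observed_loss = sum(EARNINGS_LOSS.values()) / len(EARNINGS_LOSS)
share_of_expected = observed_loss / expected_loss

print(f"Loss if strike days cost as much as days at the end: {expected_loss:.1%}")
print(f"Observed average loss: {observed_loss:.2%}")
print(f"Observed / expected: {share_of_expected:.2f}")
```

The ratio comes out at roughly one half, which is where ‘half the effect of time at the end’ comes from.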

Given the way that we treat school, it makes sense that a large amount of missed time can cause cascading problems. If you fall sufficiently far behind, the system then punishes you further because you’re out of step.

This implies an initially small but increasing marginal cost of missing days, until the point where you are sufficiently adrift that it no longer much matters.

Except in this case, the kids all missed time together, so the effect should largely get made up over time.

My guess is that the bulk of the cost of missing school is that the school system is not designed to handle students missing large amounts of school, and instead assumes you will be in class and keeping up with class with notably rare exceptions. You’re basically fine if you can then catch up rather than being lost, but if you’re lost then you’re basically screwed and there aren’t graceful fallback options.

The eternal question: Are you trying to learn or to avoid (working at) learning?

Arnold Kling: I observed that over the years that I taught AP statistics, the better I got at explaining, the worse I got at teaching. It was better for students if I stumbled, back-tracked, or used a more challenging way to demonstrate a proposition than if I did so quickly and efficiently. I see this also in Israeli dancing, where the more mistakes that the teacher makes and has to correct, the better is my memory for the dance.

Learning requires work. When the teacher stumbles, that can force the student to work harder. This relates to AI, because AI can allow students to get away with less work. This appeals to students, but it does not help them.

If you are ‘better at explaining,’ but your explanations work less well? Skill Issue! Obviously that means you are not in fact explaining better. You are ‘hiding your chain of thought (CoT),’ and that CoT was doing work. The explanations are getting worse.

If mistakes are helpful, you can make actual mistakes, or you can demonstrate mistakes. As one karate sensei I had would often say, ‘bad example,’ and then he would do the move wrong. One could argue that it is very hard to ‘get the errors right’ if they are not real, but I would argue the opposite, that if they are real they are kind of random and if you plan them then you can pick the most effective ones. But it’s easy to fool yourself into thinking the mistakes are dead weight, so random will often be better than none at all.

I have a policy of trying very hard not to highlight people who are Wrong on the Internet. But when sufficient virality attaches (e.g. 10 million or more views) we get to have a little fun. You can safely skip this section if you already know all this.

I just want it as a reference for later to point out such people really exist and also writing it helped vent some rage.

So here is ‘Knowing Better,’ who not only does not know better, but who has some very… interesting ideas about human children.

This is what opponents of home schooling so often sound like. Is it a strawman? If so, it was a rather prominent one, and I keep seeing variations on it.

People really do make versions of all of these rage-inducing, frankly evil arguments, on a continuous basis.

This thread gives us an opportunity to find them in their purest forms, and provide the oversupply of obvious counterarguments.

Luke: Homeschooling. Shouldn’t. Be. An. Option.

Possum Reviews: Is there even one realistic and compelling argument against homeschooling, considering all of the data shows homeschooled kids do better than public school kids in just about all theaters of life? I’m giving you anti-homeschooling people the benefit of the doubt that there’s more to it than just wanting Big Brother to control all of the information and narratives kids are exposed to for the purposes of indoctrination.

Knowing Better: I get flak every time I say this, so I figured I should clarify.

Universally, 100% of the time, homeschooling is the worst option for every single child. Without exception.

I’m not being hyperbolic.

Let me explain.

Now hear me out!

Knowing Better: No person on earth is qualified to teach every subject at every grade level. Having a textbook isn’t enough.

Teachers are supposed to know more than what is in the book so they can answer questions about the book.

Emphasis on ‘supposed to,’ this often is not the case. Some teachers are magic, but the title doesn’t make them so, especially given they need to deal with 20:1 student:teacher ratios or higher much of the time, whereas you can do 4:1 or 1:1 a lot.

But also we are talking about (mostly) elementary school subjects, not ‘every subject at every grade level.’

Yes, I think I am very qualified to teach ‘every subject’ at (for example) a 5th grade level other than foreign languages. I am smarter than a fifth grader. To the extent I don’t know the things, I can learn the things faster than I teach them. You can just [learn, or do, or teach] things. And that’s even without AI.

The medical school motto is ‘see one, do one, teach one.’ A powerful mantra.

The math team in high school literally said hey, you’re our 6th best senior and there’s overflow, you’re a captain now, go teach a class, good luck. And it was fine.

Or similarly:

Kelsey Piper: My sophomore year of high school my calculus class got assigned a math teacher who didn’t actually know calculus so he just kind of found the smart kid and told him to teach the class. It was fine tbh, but if you’re gonna posture about this you have to have qualified teachers.

He went to MIT for undergrad and then got a bio PhD. honestly I was probably receiving very high quality math instruction, just not from a Qualified Teacher TM

The real situation is that public schools vary a lot in how good the teachers are and parents also vary a lot in how good they are at teaching and so sometimes homeschooling results in an increase in how much kids learn and sometimes a decrease.

But some people rather than have this conversation just blindly insist that all public school educators are super-genius super-experts doing some incredibly sophisticated thing. And this is alienating to parents and students because we can see it’s not true.

Dave Kasten: A big weakness of this particular anti-homeschooling argument is that it’s supposed to be persuading policy elites, who by default are extremely likely to think that they’re capable of generically doing any task up to AP exams.

Kelsey Piper: and who are basically correct about this imo, it’s not that hard.

Patrick McKenzie: I could get behind a compromise: a) Mandatory annual testing for homeschool students and anyone below 10th percentile ordered to attend public school. b) Any public school teachers whose class below 10th percentile identified as Would Have Been Fired If They Were Homeschoolers:

Of course that is not a serious policy proposal, because it contemplates making public school employees accountable for results in any fashion whatsoever, but a geek can dream.

Kelsey Piper: We run a very small very low budget co-op/microschool and we keep getting kids from public school who can’t read. They learn how at a normal pace once you teach them. They just weren’t taught.

To the extent I can’t learn the things and don’t know the things… well, in this context I don’t actually care because obviously those things aren’t so important.

But also, home schooling does not mean I have to know and teach every subject as one person? There is a second parent. There are friends. There are tutors, and even multiple full days per week of private tutoring costs less than private school tuition around here. There are online courses. There are books. There is AI, which basically is qualified to teach everything up through undergraduate level. And so on.

For foreign languages, if one wants to learn those, standard classes are beyond atrocious. If you’re ahead of class you learn almost nothing. If you’re behind, you die, and never catch up. There are much, much better options. And it’s a great illustration of choice – you can teach them whatever second language you happen to know.

Knowing Better: Even then, you need to know when to teach what concepts.

Teachers are basically experts in child development.

Kids physically can’t understand negative numbers until a certain age. The abstract thinking parts of their brain aren’t done cooking yet. Do you know what age that is?

Polimath: This whole thread is kind of terrible, but this part of it is just about perfect because teachers are not experts in child development and the idea that kids *physically* can’t understand negative numbers before a certain age is so wrong it is funny.

I really want the answer here to be a negative number. Unfortunately it’s not, but also there obviously isn’t a fixed number, also often this number is, like, four. Come on.

But actually, in a side thread, we find out he thinks the answer is… wait for it… 12.

My oldest son is ten. He’s been working with negative numbers for years. If he hadn’t, I’d be very, very worried about him. Don’t even ask what math I was doing at 12.

Knowing Better: The OP was saying I’m wrong about kids not understanding negative numbers until a certain age.

I know it’s 12 and I know it’s easy to look up. He didn’t.

So I gave him another example of something that develops later, and you looked up the age as if it disproves my point.

Perry Metzger: I was doing algebra long before I was twelve. I did proofs long before I was 12. You think I didn’t understand negative numbers? I can introduce you to literally hundreds of children below the age of 12 who understand negative numbers.

Andrew Rettek: My six year old has been doing math problems with negative numbers for months at least.

Delen Heisman: I’m sorry but your kid lied to you about his age, he’s gotta be at least 12.

Eliza: When I was in 4th grade, I took a test intended for eighth graders which had oddities like “4x+3=11”, but it seemed obvious to me that the x meant “times what?” without ever having learned algebra. Not understanding negatives by 12? bonkers!

Gallabytes: a friend of mine published in number theory at 11.

Jessica Taylor: utterly absurd views disprovable through methods like “remembering what it was like to be a kid” or “talking with 10 year olds.”

Mike Blume: This man is a child development expert who knows incontrovertible child development facts, like that children under twelve can’t understand negative numbers.

My eight year old is going to find this hilarious when she wakes up.

(can confirm, she laughed her little head off)

That’s the thing. Arguments against home schooling almost never would survive contact with the enemy, and by ‘the enemy’ I mean actual children.

Anyway, back to the main thread.

Knowing Better: But let’s say you decide to do it anyway, because you want your child to have a religious education.

Your child needs to be exposed to different ideas and people who don’t look like them.

It’s going to happen eventually, better for it to happen now while you’re able to explain why you believe what you do.

The universal form of this argument, which will be repeated several times here, is ‘if bad things [from your perspective] will happen in the future, better that similar bad things happen now.’

The argument here is patently absurd – that if you send your child to a secular school, they are no less likely to end up religious than if you send them to a religious school. Or that if you expose kids to anti-[X] pro-[Y] arguments and have them spend all day in a culture that is anti-[X] and pro-[Y] and rewards them on that basis, that this won’t move them on net from [X] towards [Y].

I also am so sick of ‘your kid needs ‘socialization’ or to be around exactly the right type and number of other children or else horrible things will happen, so you should spend five figures a year and take up the majority of their lives to ensure this.’ Which is totally, very practically, a thing people constantly say.

…Your child is a genius.

They may be ahead of the class, but they won’t learn how to work with others and help them catch up.

That isn’t your child’s job, of course, but it will be an invaluable skill going forward. Want them to be a leader some day?

Kind of sounds like you want to make it their job. Yes, the entire philosophy is that if your child falls behind, it is bad for them. But if they somehow get ahead, that is also not good, and potentially even worse. Instead they should spend their time learning to… help others ‘catch up’ to them, also known as teaching?

As for ‘learning to work with others’ this is such a scam way of trying to enslave my kid to do your work for you, I can’t even.

If you want your child to be a leader, fine, teach them leadership skills. You think the best way to do that is have them in a classroom where the teacher is going over things they already know? Or enlisting them to teach other kids? How does that work?

You know those prodigies who end up in college at 16? What kind of experience do you think they’re having?

Absolutely zero college kids – sorry, adults – will want to hang around a 16 year old for reasons that I hope are obvious.

This is completely false. Adults very much want to hang out with bright eager 16 year olds, reports a former bright eager 16 year old. Yes, they won’t want to hang out to do certain things, but that’s because they’re illegal or they think you’re not ready. So, as William Eden points out, you can just… not tell them.

Let your kid grow up like everyone else.

I’ve seen everyone else. No.

…Your child has been bullied.

I’m sure it’s safer at home. Is that your plan for the rest of their childhood?

Getting the school to fix the situation, or switching schools, or hell, paying the popular kids to protect your child, is still better than keeping them at home.

Do you even hear yourself? Schools are a place where violence, a lot of property crime, and most forms of verbal bullying are de facto legal. And you are saying that you can’t respond with exit. Paying the popular kids to protect your child? What universe do you live in? Does that ever, ever work? Do you have any idea what would happen in most cases if you tried, how much worse things would get?

Yes, of course you can try to ‘get the school to fix the situation’ but they mostly won’t. And switching schools may or may not be a practical option, and probably results in the same problem happening again for the same reasons. If kids sense you’re the type to be bullied, they’ll bully you anywhere, because we create the conditions for that.

As a parent, it is not your job to curate your child’s entire existence and decide what ideas they hear or who they socialize with.

They will grow up and resent you for it.

Your job is to guide them and provide context to what they’re experiencing outside of your presence.

No, parent, deciding how to raise your kids and what they get exposed to isn’t your job, f*** you, that’s the state’s job, via the schools, except they are optimizing for things you actively hate, and also mostly whatever is convenient for them and their requirements. And who said you are ‘deciding what ideas they hear or who they socialize with’ here anyway?

In school, the kid is exposed to whatever the state decides. The kid has basically zero say until high school and very little until college. At home, the kid has lots of say. Because they can talk to you, and you can respond. Same with who they hang out with – they’re not forced to spend all day with a randomly assigned class, nor are you suddenly forced to dictate who their friends are.

He doubles down downthread on it being a bad thing if you curate your child’s experiences, and try as a parent to ensure the best for them (while also doubling down that most home school parents don’t do this). Sorry, what?

When it comes to stats like “homeschooled kids perform better on tests,” there’s a selection bias problem.

Every public school kid takes tests like the SAT or ACT. Only the college-bound homeschool kids do.

Parents decide the curriculum. There is no homeschool diploma.

The idea of a tradwife teaching her 8 blonde kids in a farmhouse is the EXCEPTION when it comes to homeschool.

More often than not, homeschool is a dumping ground for kids who have failed out of or been expelled from everywhere else. It’s a dead end to their education.

You got to love the adverse selection argument followed right away by ‘it’s mostly a dumping ground for expelled kids.’ And also the whole ‘you shouldn’t choose this for your child’ with the (completely false) claim that most such children got expelled, so they don’t have much choice. It contradicts the entire narrative above, all of it.

As for the argument on tests, well, we can obviously adjust for that in various ways.

There is of course also a class of people who say they are ‘homeschooling’ and instead are ‘home not schooling’ where the kids hang out without any effort to teach them. That’s often going to be not great, and you should check, but that’s what the tests are for. And others will spend a bunch of focus on cultural aspects (or what some would call indoctrination), just like regular school does, and some will take that too far. But again, that’s what the tests are for.

For final thoughts on homeschooling this time, I’m turning this over to Kelsey:

Kelsey Piper: Homeschooling is one of those things where the people who do it have generally made it one of the major focuses of their life and put thousands of hours of thought into it – which curriculum to use, which philosophy/approach, which tests and camps and resources, etc

which makes the tossed-off contributions of people who have given homeschooling about 5 hours of thought in their lives particularly maddening. Now, this dynamic shows up in other contexts and doesn’t always mean that the people who do something full time are right!

But the odds that a criticism that you came up with after having thought about homeschooling on and off when you see a tweet about it will resonate, be useful, be meaningful or even be literally true are just not good.

‘you’re not qualified to teach your kids’ I’m familiar both with the large scale literature on homeschool outcomes and on the actual test scores of the homeschooled/alt-schooled kids I know. There just isn’t a productive conversation to be had here until you acknowledge that.

So I guess what we’re really trying to say here is…

I mean this universally, not only regarding children or education.

The entire educational ‘expert’ class very obviously is engaged in enemy action. They are very obviously trying to actively prevent your children from learning, and trying to damage everyone’s families and experiences of childhood, in ways that are impossible to ignore. And they are using their positions to mobilize the state to impose their interventions by force, in the face of overwhelming opposition, in one of the most important aspects of life.

If that is true, then the procedure ‘find the people who are labeled as experts and defer to them’ cannot be a good procedure, in general, for understanding the world and making life decisions. If you want to defer to opinions of others, you need to do a much better job than this of figuring out which others can be safely deferred to.

MegaChan: THE PAGE.

Extra credit.

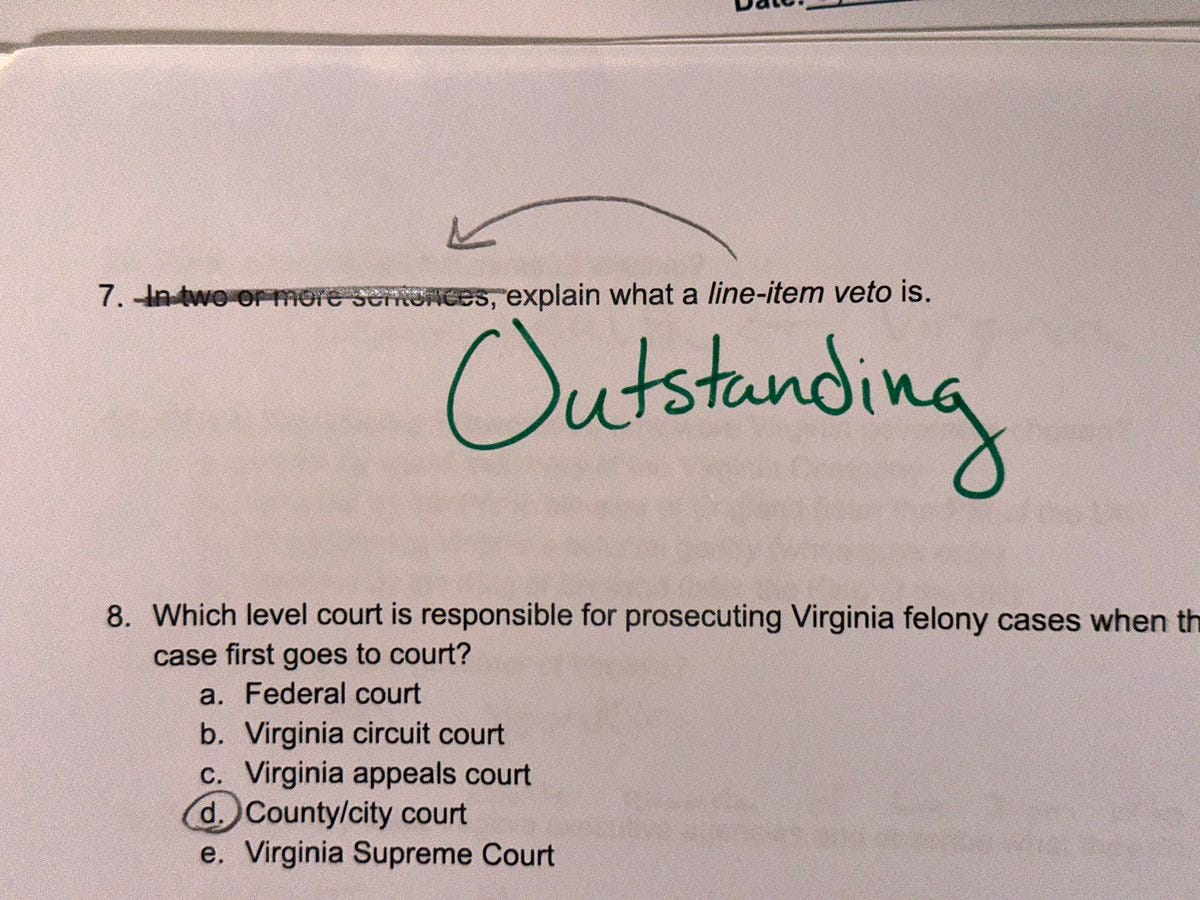

Peter Wildeford: Lots of news articles out there about how students are dumb, but then there’s this.

Polycarp: An answer I received from a student on my most recent test [#8 is B btw]

Credit where credit is due.

Albert Gustafson: Same energy.