It doesn’t look good, on many fronts, especially taking a stake in Intel.

We continue.

- America Extorts 10% of Intel. Nice company you got there. Who’s next?
- The Quest For No Regulations Whatsoever. a16z is at it again, Brockman joins.
- The Quest for Sane Regulations. Dean Ball surveys the state legislative landscape.
- Chip City. Nvidia beats earnings, Huawei plans to triple chip production.
- Once Again The Counterargument On Chip City. Sriram Krishnan makes a case.
- Power Up, Power Down. I for one do not think windmills are destroying America.
- People Really Do Not Like AI. Some dislike it more than others. A lot more.
- Did Google Break Their Safety Pledges With Gemini Pro 2.5? I think they did.
- Safety Third at xAI. Grok 4 finally has a model card. Better late than never.
- Misaligned! Reward hacking confirmed to cause emergent misalignment.
- Aligning a Smarter Than Human Intelligence is Difficult. Filter the training data?
- How Are You Doing? OpenAI and Anthropic put each other to the safety test.
- Some Things You Miss When You Don’t Pay Attention. The things get weird fast.
- Other People Are Not As Worried About AI Killing Everyone. A new record.
- The Lighter Side. South Park sometimes very much still has it.

USA successfully extorts a 10% stake in Intel. Scott Lincicome is here with the ‘why crony capitalism is terrible’ report, including the fear that the government might go after your company next, the fear that we are going to bully people into buying Intel products for no reason, the chance Intel will now face new tariffs overseas, and more. Remember the fees they’re extorting from Nvidia and AMD.

Scott Lincicome: I defy you to read these paras and not see the risks – distorted decision-making, silenced shareholders, coerced customers, etc – raised by this deal. And it’s just the tip of the iceberg.

FT: Intel said the government would purchase the shares at $20.47 each, below Friday’s closing price of $24.80, but about the level where they traded early in August. Intel’s board had approved the deal, which does not need shareholder approval, according to people familiar with the matter.

The US will also receive a five-year warrant, which allows it to purchase an additional 5 per cent of the group at $20 a share. The warrant will only come good if Intel jettisons majority ownership of its foundry business, which makes chips for other companies.

Some investors have pushed for Intel to cut its losses and fully divest its manufacturing unit. Intel chief Lip-Bu Tan, who took the reins in March, has so far remained committed to keeping it, albeit with a warning that he could withdraw from the most advanced chipmaking if he was unable to land big customers.

Scott Lincicome: Also, this is wild: by handing over the equity stake to the US govt, Intel no longer has to meet the CHIPS Act conditions (i.e., building US-based fabs) that, if met, would allow them to access the remaining billions in taxpayer funds?!?! Industrial policy FTW, again.

Washington will be Intel’s single largest shareholder, and have a massive political/financial interest in the company’s operations here and abroad. If you think this share will remain passive, I’ve got an unfinished chip factory in Ohio to sell you.

Narrator: it turns out the share isn’t even that passive to begin with.

Scott also offers us this opinion in Washington Post Editorial form.

Jacob Perry: Basically, Intel gave 10% of its equity to the President of the United States just to ensure he would leave them alone. There’s a term for this but I can’t think of it at the moment.

Nikki Haley (remember her?): Biden was wrong to subsidize the private sector with the Chips Act using our tax dollars. The counter to Biden is not to lean in and have govt own part of Intel. This will only lead to more government subsidies and less productivity. Intel will become a test case of what not to do.

As is usually the case, the more details you look at, the worse it gets. This deal does give Intel something in return, but that something is letting Intel off the hook on its commitments to build new plants, so that seems worse yet again.

Samsung is reportedly ‘exploring partnerships with American companies to ‘please’ the Trump administration and ensure that its regional operations aren’t affected by hefty tariffs.’

To be clear: And That’s Terrible.

Tyler Cowen writes against this move, leaving no doubt as to the implications and vibes by saying Trump Seizes the Means of Production at Intel. He quite rightfully does not mince words. A good rule of thumb these days is if Tyler Cowen outright says a Trump move was no good and very bad, the move is both importantly damaging and completely indefensible.

Is there a steelman of this?

Ben Thompson says yes, and he’s the man to provide it, and despite agreeing that Lincicome makes great points he actually supports the deal. This surprised me, since Ben is normally very much ordinary business uber alles, and he clearly appreciates all the reasons such an action is terrible.

So why, despite all the reasons this is terrible, does Ben support doing it anyway?

Ben presents the problem as the need for Intel to make wise long term decisions towards being competitive and relevant in the 2030s, and that it would take too long for other companies to fill the void if Intel failed, especially without a track record. Okay, sure, I can’t confirm but let’s say that’s fair.

Next, Ben says that Intel’s chips and process are actually pretty good, certainly good enough to be useful, and the problem is that Intel can’t credibly promise to stick around to be a long term partner. Okay, sure, again, I can’t confirm but let’s say that’s true.

Ben’s argument is next that Intel’s natural response to this problem is to give up and become another TSMC customer, but that is against America’s strategic interests.

Ben Thompson: A standalone Intel cannot credibly make this promise.

The path of least resistance for Intel has always been to simply give up manufacturing and become another TSMC customer; they already fab some number of their chips with the Taiwanese giant. Such a decision would — after some very difficult write-offs and wind-down operations — change the company into a much higher margin business; yes, the company’s chip designs have fallen behind as well, but at least they would be on the most competitive process, with a lot of their legacy customer base still on their side.

The problem for the U.S. is that that then means pinning all of the country’s long-term chip fabrication hopes on TSMC and Samsung not just building fabs in the United States, but also building up a credible organization in the U.S. that could withstand the loss of their headquarters and engineering knowhow in their home countries. There have been some important steps in this regard, but at the end of the day it seems reckless for the U.S. to place both its national security and its entire economy in the hands of foreign countries next door to China, allies or not.

Once again, I cannot confirm the economics, but this seems reasonable on both counts. We would like Intel to stand on its own and not depend on TSMC for national security reasons, and to do that Intel has to be able to be a credible partner.

The next line is where he loses me:

Given all of this, acquiring 10% of Intel, terrible though it may be for all of the reasons Lincicome articulates — and I haven’t even touched on the legality of this move — is I think the least bad option.

Why does America extorting a 10% passive stake in Intel solve these problems, rather than make things worse for all the reasons Lincicome describes?

Because he sees ‘America will distort the free market and strongarm Intel into making chips and other companies into buying Intel chips’ as an advantage, basically?

So much for this being a passive stake in Intel. This is saying Intel has been nationalized. We are going the CCP route of telling Intel how to run its business, to pursue an entirely different corporate strategy or else. We are going the CCP route of forcing companies to buy from the newly state-owned enterprise. And that this is good. Private capital should be forced to prioritize what we care about more.

That’s not the reason Trump says he is doing this, which is more ‘I was offered the opportunity to extort $10 billion in value and I love making deals’ and now he’s looking for other similar ‘deals’ to make if you know what’s good for you, as it seems extortion of equity in private businesses is new official White House policy?

Walter Bloomberg: 🚨 TRUMP ON U.S. STAKES IN COMPANIES: I WANT TO TRY TO GET AS MUCH AS I CAN

It is hard to overstate how much worse this is than simply raising corporate tax rates.

As in, no, Intel is not a special case. But let’s get back to treating Intel as a special case. Suppose that in theory it was one, and you hoped to contain the damage to American free enterprise and willingness to invest capital and so on that comes from the constant threat of extortion and success being chosen by fiat, or what Republicans used to call ‘picking winners and losers’ except with the quiet part being said out loud.

Why do you need or want to take a stake in Intel in order to do all this? We really want to be strongarming American companies into making the investment and purchasing decisions the government wants? If this is such a strategic priority, why not do this with purchase guarantees, loan guarantees and other subsidies? It would not be so difficult to make it clear Intel will not be allowed to fail except if it outright failed to deliver the chips, which isn’t something that we can guard against either way.

Why do we think socialism with Trumpian characteristics is the answer here?

I’m fine with the idea that Intel needs to be Too Big To Fail, and it should be the same kind of enterprise as Chase Bank. But there’s a reason we aren’t extorting a share of Chase Bank and then forcing customers to choose Chase Bank or else. Unless we are. If I was Jamie Dimon I’d be worried that we’re going to try? Or worse, that we’re going to do it to Citibank first?

That was the example that came to mind first, but it turns out Trump’s next target for extortion looks to be Lockheed Martin. Does this make you want to invest in strategically important American companies?

As a steelman exercise of taking the stake in Intel, Ben Thompson’s attempt is good. That is indeed as good a steelman as I’ve seen or can come up with, so great job.

Except that even with all that, even the good version of taking the stake would still be a terrible idea, because you can simply do all this without taking the stake.

And even if the Ben Thompson steelman version of the plan was the least bad option? That’s not what we are doing here, as evidenced by ‘I want to try to get as much as I can’ in stakes in other companies. This isn’t a strategic plan to create customer confidence that Intel will be considered Too Big To Fail. It’s the start of a pattern of extortion.

Thus, 10 out of 10 for making a good steelman but minus ten million for actually supporting the move for real?

Again, there’s a correct and legal way for the American government to extort American companies, and it’s called taxes.

Tyler Cowen wrote this passage on The History of American corporate nationalization for another project a while back, emphasizing how much America benefits from not nationalizing companies and playing favorites. He thought he would share it in light of recent events.

I am Jack’s complete lack of surprise.

Peter Wildeford: “Obviously we’d aggressively support all regulation” [said Altman].

Obviously.

Techmeme: a16z, OpenAI’s Greg Brockman, and others launch Leading the Future, a pro-AI super PAC network with $100M+ in funding, hoping to emulate crypto PAC Fairshake (Wall Street Journal).

Amrith Ramkumar and Brian Schwartz (WSJ): Venture-capital firm Andreessen Horowitz and OpenAI President Greg Brockman are among those helping launch and fund Leading the Future

Silicon Valley is putting more than $100 million into a network of political-action committees and organizations to advocate against strict artificial-intelligence regulations, a signal that tech executives will be active in next year’s midterm elections.

…

The organization said it isn’t pushing for total deregulation but wants sensible guardrails.

Their ‘a16z is lobbying because it wants sensible guardrails and not total deregulations’ t-shirt is raising questions they claim are answered by the shirt.

OpenAI is helping fund this via Brockman. Total tune of $100 million.

Which is a lot.

Seán Ó hÉigeartaigh: Just one more entity that will, alone, add up to a big chunk of all the funding in non-profit-incentivised AI policy. It’s an increasingly unfair fight, and the result won’t be policy that serves the public.

Daniel Kokotajlo: That’s a lot of money. For context, I remember talking to a congressional staffer a few months ago who basically said that a16z was spending on the order of $100M on lobbying and that this amount was enough to make basically every politician think “hmm, I can raise a lot more if I just do what a16z wants” and that many did end up doing just that. I was, and am, disheartened to hear how easily US government policy can be purchased.

So now we can double that. They’re (perhaps legally, this is our system) buying the government, or at least quite a lot of influence on it. As usual, it’s not that everyone has a price but that the price is so cheap.

As per usual, the plan is to frame ‘any regulation whatsoever, at all, of any kind’ as ‘you want to slow down AI and Lose To China.’

WSJ: “There is a vast force out there that’s looking to slow down AI deployment, prevent the American worker from benefiting from the U.S. leading in global innovation and job creation and erect a patchwork of regulation,” Josh Vlasto and Zac Moffatt, the group’s leaders, said in a joint statement. “This is the ecosystem that is going to be the counterforce going into next year.”

The new network, one of the first of its kind focusing on AI policy, hopes to emulate Fairshake, a cryptocurrency-focused super-PAC network.

… Other backers include 8VC managing partner and Palantir Technologies co-founder Joe Lonsdale, AI search engine Perplexity and veteran angel investor Ron Conway.

Industry, and a16z in particular, were already flooding everyone with money. The only difference is now they are coordinating better, and pretending less, and spending more?

They continue to talk about ‘vast forces’ opposing the actual vast force, which was always industry and the massive dollars behind it. The only similarly vast forces are that the public really hates AI, and the physical underlying reality of AI’s future.

Many tech executives worry that Congress won’t pass AI rules, creating a patchwork of state laws that hurt their companies. Earlier this year, a push by some Republicans to ban state AI bills for 10 years was shot down after opposition from other conservatives who opposed a blanket prohibition on any state AI legislation.

And there it is, right in the article, as text. What they are worried about is that we won’t pass a law that says we aren’t allowed to pass any laws.

If you think ‘Congress won’t pass AI laws’ is a call for Congress to pass reasonable AI laws, point to the reasonable AI laws anyone involved has ever said a kind word about, let alone proposed or supported.

The group’s launch coincides with concerns about the U.S. staying ahead of China in the AI race, while Washington has largely shied away from tackling AI policies.

No it doesn’t? These ‘concerns about China’ peaked around January. There has been no additional reason for such concerns in months that wasn’t at least priced in, other than acts of self-sabotage of American energy production.

Dean Ball goes over various bills introduced in various states.

Dean Ball: After sorting out the anodyne laws, there remain only several dozen bills that are substantively regulatory. To be clear, that is still a lot of potential regulation, but it is also not “1,000 bills.”

There are always tons of bills. The trick is to notice which ones actually do anything and also have a chance of becoming law. That’s always a much smaller group.

The most notable trend since I last wrote about these issues is that states have decidedly stepped back from efforts to “comprehensively” regulate AI.

By ‘comprehensively regulate’ Dean means the Colorado-style or EU-style use-based approaches, which we both agree are quite terrible. Dean instead focuses on two other approaches more in vogue now.

Several states have banned (see also “regulated,” “put guardrails on” for the polite phraseology) the use of AI for mental health services.

…

If the law stopped here, I’d be fine with it; not supportive, not hopeful about the likely outcomes, but fine nonetheless.

I agree with Dean that I don’t support that idea, I think it is net harmful, but if you want to talk to an AI you can still talk to an AI, so so far it’s not a big deal.

But the Nevada law, and a similar law passed in Illinois, goes further than that. They also impose regulations on AI developers, stating that it is illegal for them to explicitly or implicitly claim of their models that (quoting from the Nevada law):

(a) The artificial intelligence system is capable of providing professional mental or behavioral health care;

(b) A user of the artificial intelligence system may interact with any feature of the artificial intelligence system which simulates human conversation in order to obtain professional mental or behavioral health care; or

(c) The artificial intelligence system, or any component, feature, avatar or embodiment of the artificial intelligence system is a provider of mental or behavioral health care, a therapist, a clinical therapist, a counselor, a psychiatrist, a doctor or any other term commonly used to refer to a provider of professional mental health or behavioral health care.

Did I mention recently that nothing I say in this column is investment or financial advice, legal advice, tax advice or psychological, mental health, nutritional, dietary or medical advice? And just in case, I’m also not ever giving anyone engineering, structural, real estate, insurance, immigration or veterinary advice.

Because you must understand that indeed nothing I have ever said, in any form, ever in my life, has been any of those things, nor do I ever offer or perform any related services.

I would never advise you to say the same, because that might be legal advice.

Similarly, it sounds like AI companies would under these laws most definitely also not be saying their AIs can provide mental health advice or services? Okay, sure, I mean annoying but whatever?

But there is something deeper here, too. Nevada AB 406, and its similar companion in Illinois, deal with AI in mental healthcare by simply pretending it does not exist. “Sure, AI may be a useful tool for organizing information,” these legislators seem to be saying, “but only a human could ever do mental healthcare.”

And then there are hundreds of thousands, if not millions, of Americans who use chatbots for something that resembles mental healthcare every day. Should those people be using language models in this way? If they cannot afford a therapist, is it better that they talk to a low-cost chatbot, or no one at all? Up to what point of mental distress? What should or could the developers of language models do to ensure that their products do the right thing in mental health-related contexts? What is the right thing to do?

Technically via the definition here it is mental healthcare to ‘detect’ that someone might be (among other things) intoxicated, but obviously that is not going to stop me or anyone else from observing that a person is drunk, nor are we going to have to face a licensing challenge if we do so. I would hope. This whole thing is deeply stupid.

So I would presume the right thing to do is to use the best tools available, including things that ‘resemble’ ‘mental healthcare.’ We simply don’t call it mental healthcare.

Similarly, what happens when Illinois HB 1806 says this (as quoted by Dean):

An individual, corporation, or entity may not provide, advertise, or otherwise offer therapy or psychotherapy services, including through the use of Internet-based artificial intelligence, to the public in this State unless the therapy or psychotherapy services are conducted by an individual who is a licensed professional.

Dean Ball: How, exactly, would an AI company comply with this? In the most utterly simple example, imagine that a user says to an LLM “I am feeling depressed and lonely today. Help me improve my mood.” The States of Illinois and Nevada have decided that the optimal experience for their residents is for an AI to refuse to assist them in this basic request for help.

My obvious response is, if this means an AI can’t do it, it also means a friend cannot do it either? Which means that if they say ‘I am feeling depressed and lonely today. Help me improve my mood’ you have to say ‘I am sorry, I cannot do that, because I am not a licensed health professional any more than Claude Opus is’? I mean presumably this is not how it works. Nor would it change if they were somehow paying me?

Dean’s argument is that this is the point:

But the point of these laws isn’t so much to be applied evenly; it is to be enforced, aggressively, by government bureaucrats against deep-pocketed companies, while protecting entrenched interest groups (licensed therapists and public school staff) from technological competition. In this sense these laws resemble little more than the protection schemes of mafiosi and other organized criminals.

There’s a kind of whiplash here that I am used to when reading such laws. I don’t care if it is impossible to comply with the law if fully enforced in a maximally destructive and perverse way, unless someone is suggesting this will actually happen. If the laws are only going to get enforced when you actively try to offer therapist chatbots?

Then yes it would be better to write better laws, and I don’t especially want to protect those people’s roles at all, but we don’t need to talk about what happens if the AI gets told to help improve someone’s mood and the AI suggests going for a walk. Nor would I expect a challenge to that to survive on constitutional grounds.

More dear to my heart, and more important, are bills about Frontier AI Safety. He predicts SB 53 will become law in California. Here is his summary of SB 53:

1. Requires developers of the largest AI models to publish a “safety and security protocol” describing the developers’ process of measuring, evaluating, and mitigating catastrophic risks (risks in which single incidents result in the death of more than 50 people or more than $1 billion in property damage) and dangerous capabilities (expert-level bioweapon or cyberattack advice/execution, engaging in murder, assault, extortion, theft, and the like, and evading developer control).

2. Requires developers to report to the California Attorney General “critical safety incidents,” which includes theft of model weights (assuming a closed-source model), loss of control over a foundation model resulting in injury or death, any materialization of a catastrophic risk (as defined above), model deception of developers (when the developer is not conducting experiments to try to elicit model deception), or any time a model first crosses dangerous capability thresholds as defined by their developers.

3. Requires developers to submit to an annual third-party audit, verifying that they comply with their own safety and security protocols, starting after 2030.

4. Creates whistleblower protections for the employees of the large developers covered by the bill.

5. Creates a consortium that is charged with “developing a framework” for a public compute cluster (“CalCompute”) owned by the State of California, because for political reasons, Scott Wiener still must pretend like he believes California can afford a public compute cluster. This is unlikely to ever happen, but you can safely ignore this provision of the law; it does not do much or authorize much spending.

The RAISE Act lacks the audit provision described in item (3) above as well as an analogous public compute section (though New York does have its own public compute program). Other than that it mostly aligns with this sketch of SB 53 I have given.

…

AI policy challenges us to contemplate questions like this, or at least it should. I don’t think SB 53 or RAISE deliver especially compelling answers. At the end of the day, however, these are laws about the management of tail risks—a task governments should take seriously—and I find the tail risks they focus on to be believable enough.

There is a sharp contrast between this skeptical and nitpicky and reluctant but highly respectful Dean Ball, versus the previous Dean Ball reaction to SB 1047. He still has some objections and concerns, which he discusses. I am more positive on the bills than he is, especially in terms of seeing the benefits, but I consider Dean’s reaction here high praise.

In SB 53 and RAISE, the drafters have shown respect for technical reality, (mostly) reasonable intellectual humility appropriate to an emerging technology, and a measure of legislative restraint. Whether you agree with the substance or not, I believe all of this is worthy of applause.

Might it be possible to pass relatively non-controversial, yet substantive, frontier AI policy in the United States? Just maybe.

Nvidia reported revenue of $46.7 billion, up 56% year over year, beating both revenue and EPS expectations, and was promptly down 5% in after hours trading, although it recovered and was only down 0.82% on Thursday. It is correct to treat Nvidia only somewhat beating official estimates as bad news for Nvidia. Market is learning.

Jensen Huang (CEO Nvidia): Right now, the buzz is, I’m sure all of you know about the buzz out there. The buzz is everything sold out. H100 sold out. H200s are sold out. Large CSPs are coming out renting capacity from other CSPs. And so the AI-native start-ups are really scrambling to get capacity so that they could train their reasoning models. And so the demand is really, really high.

Ben Thompson: I made this point a year-and-a-half ago, and it still holds: as long as demand for Nvidia GPUs exceeds supply, then Nvidia sales are governed by the number of GPUs they can make.

I do not fully understand why Nvidia does not raise prices, but given that decision has been made they will sell every chip they can make. Which makes it rather strange to choose to sell worse, and thus less expensive and less profitable, chips to China rather than instead making better chips to sell to the West. That holds double if you have uncertainty on both ends, where the Americans might not let you sell the chips and the Chinese might not be willing to buy them.

Also, even Ben Thompson, who has called for selling even our best chips to China because he cares more about Nvidia market share than who owns compute, noticed that H20s would sell out if Nvidia offered them for sale elsewhere:

Ben Thompson: One note while I’m here: when the Trump administration first put a pause on H20 sales, I said that no one outside of China would want them; several folks noted that actually several would-be customers would be happy to buy H20s for the prices Nvidia was selling them to China, specifically for inference workloads, but Nvidia refused.

Instead they chose a $5 billion writedown. We are being played.

Ben is very clear that what he cares about is getting China to ‘build on Nvidia chips,’ where the thing being built is massive amounts of compute on top of the compute they can make domestically. I would instead prefer that China not build out this massive amount of compute.

China plans to triple output of chips, primarily Huawei chips, in the next year, via three new plants. This announcement caused stock market moves, so it was presumably news.

What is obviously not news is that China has for a while been doing everything it can to ramp up quality and quantity of its chips, especially AI chips.

This is being framed as ‘supporting DeepSeek’ but it is highly overdetermined that China needs all the chips it can get, and DeepSeek happily runs on everyone’s chips. I continue to not see evidence that any of this wouldn’t have happened regardless of DeepSeek or our export controls. Certainly if I was the PRC, I would be doing all of it either way, and I definitely wouldn’t stop doing it or slow down if any of that changed.

Note that this article claims that DeepSeek is continuing to do its training on Nvidia chips at least for the time being, contra claims it had been told to switch to Huawei (or at least, this suggests they have been allowed to switch back).

Sriram Krishnan responded to the chip production ramp-up by reiterating the David Sacks style case for focusing on market share and ensuring people use our chips, models and ‘tech stack’ rather than on caring about who has the chips. This includes maximizing whether models are trained on our chips (DeepSeek v3 and r1 were trained on Nvidia) and also who uses or builds on top of what models.

Sriram Krishnan: As @DavidSacks says: for the American AI stack to win, we need to maximize market share. This means maximizing tokens inferenced by American models running on American hardware all over the world.

To achieve this: we need to maximize

- models trained on our hardware
- models being inferenced on our hardware (NVIDIA, AMD, etc)
- developers building on top of our hardware and our models (either open or closed).

It is instantly clear to anyone in tech that this is a developer+platform flywheel – no different from classic ecosystems such as Windows+x86.

They are interconnected:

(a) the more developers building on any platform, the better that platform becomes thereby bringing in even more builders and so on.

(b) With today’s fast changing model architectures, they are co-dependent: the model architectures influence hardware choices and vice versa, often being built together.

Having the American stack and versions of these around the world builds us a moat.

The thing is, even if you think who uses what ecosystem is the important thing because AI is a purely ordinary technology where access to compute in the medium term is relatively unimportant (which it isn’t), no, the pieces mostly aren’t that co-dependent, and this basically doesn’t build a moat.

I’ll start with my analysis of the question in the bizarre alternative universe where we could be confident AGI was far away. I’ll close by pointing out that it is crazy to think that AGI (or transformational or powerful AI, or whatever you want to call the thing) is definitely far away.

The rest of this is my (mostly reiterated) response to this mostly reiterated argument, and the various reasons I do not at all see these as the important concerns even without concerns about AGI arriving soon, and also I think it positively crazy to be confident AGI will not arrive soon or bet it all on AGI not arriving.

Sriram cites two supposed key mistakes in the export control framework: not anticipating DeepSeek and Chinese open models while suppressing American open models, and underestimating future Chinese semiconductor capacity.

The first is a non-sequitur at best, as the export controls held such efforts back. The second also doesn’t, even if true (and I don’t see the evidence that a mistake was even made here), provide a reason not to restrict chip exports.

Yes, our top labs are not releasing top open models. I very much do not think this was or is a mistake, although I can understand why some would disagree. If we make them open, the Chinese fast follow and copy them and use them without compensation. We would be undercutting ourselves. We would be feeding into an open ecosystem that would catch China up, which is a more important ecosystem shift in practice than whether the particular open model is labeled ‘Chinese’ versus ‘American’ (or ‘French’). I don’t understand why we would want that, even if there was no misuse risk in the room and AGI was not close.

I don’t understand this obsession some claim to have with the ‘American tech stack’ or why we should much care that the current line of code points to one model when it can be switched in two minutes to another if we aren’t even being paid for it. Everyone’s models can run on everyone’s hardware, if the hardware is good.

This is not like Intel+Windows. Yes, there are ways in which hardware design impacts software design or vice versa, but they are extremely minor by comparison. Everything is modular. Everything can be swapped at will. As an example on the chip side, Anthropic swapped away from Nvidia chips without that much trouble.

Having the Chinese run an American open model on an American chip doesn’t lock them into anything. It only means they get to use more inference. Having the Chinese train a model on American hardware only means now they have a new AI model.

I don’t see lock-in here. What we need, and I hope to facilitate, is better and more formal (as in formal papers) documentation of how low switching costs are across the board, and how little lock-in there is.

I don’t see why we should sell highly useful and profitable and strategically vital compute to China, for which they lack the capacity to produce it themselves, even if we aren’t worried about AGI soon. Why help supercharge the competition and their economy and military?

The Chinese, frankly, are for now winning the open model war in spite of, not because of, our export controls, and doing it ‘fair and square.’ Yes, Chinese open models are currently a lot more impressive than American open models, but their biggest barrier is lack of access to quality Nvidia chips, as DeepSeek has told us explicitly. And their biggest weapon is access to American models for reverse engineering and distillation, the way DeepSeek’s r1 built upon OpenAI’s o1, and their current open models are still racing behind America’s closed models.

Meanwhile, did Mistral and Llama suck because of American policy? Because the proposed SB 1047, that never became law, scared American labs away from releasing open models? Is that a joke? No, absolutely not. Because the Biden administration bullied them from behind the scenes? Also no.

Mistral and Meta failed to execute. And our top labs and engineers choose to work on and release closed models rather than open models somewhat for safety reasons but mostly because this is better for business, especially when you are in front. Chinese top labs choose the open weights route because they could not compete in the closed weight marketplace.

The exception would be OpenAI, which was bullied and memed into doing an open model GPT-OSS, which in some ways was impressive but was clearly crippled in others due to various concerns, including safety concerns. But if we did release superior open models, what does that get us except eroding our lead from closed ones?

As for chips, why are we concerned about them not having our chips? Because they will then respond by ramping up internal production? No, they won’t, because they can’t. They’re already running at maximum and accelerating at maximum. Yes, China is ramping up its semiconductor capacity, but China made it abundantly clear it was going to do that long before the export controls and had every reason to do so. Their capacity is still miles behind domestic demand, their quality still lags far behind Nvidia, and of course their capacity was going to ramp up a lot over time as is that of TSMC and Nvidia (and presumably Samsung and Intel and AMD). I don’t get it.

Does anyone seriously think that if we took down our export controls, that Huawei would cut back its production schedule? I didn’t think so.

Even more than usual, Sriram’s and Sacks’s framework implicitly assumes AGI, or transformational or powerful AI, will not arrive soon, where soon is any timeframe on which current chips would remain relevant. That AI would remain an ordinary technology and mere tool for quite a while longer, and that we need not be concerned with AGI in any way whatsoever. As in, we need not worry about catastrophic or existential risks from AGI, or even who gets AGI, at all, because no one will build it. If no one builds it, then we don’t have to worry about if everyone then dies.

I think being confident that AGI won’t arrive soon is crazy.

What is the reason for this confidence, when so many including the labs themselves continue to say otherwise?

Are we actually being so foolish as to respond to the botched rollout of GPT-5 and its failure to be a huge step change as meaning that the AGI dream is dead? Overreacting this way would be a catastrophic error.

I do think some amount of update is warranted, and it is certainly possible AGI won’t arrive that soon. Ryan Greenblatt updated his timelines a bit, noting that it now looks harder to get to full automation by the start of 2028, but thinking the chances by 2033 haven’t changed much. Daniel Kokotajlo, primary author on AI 2027, now has a median timeline of 2029.

Quite a lot of people very much are looking for reasons why the future will still look normal and why they don’t have to deal with high weirdness or big risks or changes, and thus they seek out and seize upon reasons to not feel the AGI. Every time we go even a brief period without major progress, we get the continuous refrain of ‘AI or deep learning is hitting a wall’ and people revert to their assumption that AI capabilities won’t improve much from here and we will never see another surprising development. It’s exhausting.

JgaltTweets: Trump, seemingly unprompted, brings up AI being “the hottest thing in 35, 40 years” and “they need massive amounts of electricity” during this walkabout.

That’s a fun thing to bring up during a walkabout, also it is true, also this happened days after they announced they would not approve new wind and solar projects thus blocking a ‘massive amount of electricity’ for no reason.

They’re also unapproving existing projects that are almost done.

Ben Schifman: The Department of the Interior ordered a nearly complete, 700MW wind farm to stop work, citing unspecified national security concerns.

The project’s Record of Decision (ROD) identifies 2009 as the start of the process to lease this area for wind development.

The Environmental Impact Statement that accompanied the Record of Decision is nearly 3,000 pages and was prepared with help from agencies including the Navy, Department of Defence, Coast Guard, etc.

NewsWire: TRUMP: WINDMILLS RUINING OUR COUNTRY

Here EPA Administrator Lee Zeldin is asked by Fox News what exactly was this ‘national security’ problem with the wind farm. His answer is ‘the president is not a fan of wind’ and the rest of the explanation is straight up ‘it is a wind farm, and wind power is bad.’ No, seriously, check the tape if you’re not sure. He keeps saying ‘we need more base load power’ and this isn’t base load power, so we should destroy it. And call that ‘national security.’

This is madness. This is straight up sabotage of America. Will no one stop this?

Meanwhile, it seems it’s happening, the H20 is banned in China, all related work by Nvidia has been suspended, and for now procurement of any other downgraded chips (e.g. the B20A) has been banned as well. I would presume they’d get over this pretty damn quick if the B20A was actually offered to them, but I no longer consider ‘this would be a giant act of national self-sabotage’ to be a reason to assume something won’t happen. We see it all the time, also history is full of such actions, including some rather prominent ones by the PRC (and USA).

Chris McGuire and Oren Cass point out in the WSJ that our export controls are successfully giving America a large compute advantage, we have the opportunity to press that advantage, and remind us that the idea of transferring our technology to China has a long history of backfiring on us.

Yes, China will be trying to respond by making as many chips as possible, but they were going to do that anyway, and aren’t going to get remotely close to satisfying domestic demand any time soon.

There are many such classes of people. This is one of them.

Kim Kelly: wild that Twitter with all of its literal hate demons is somehow still less annoying than Bluesky.

Thorne: I want to love Bluesky. The technology behind it is so cool. I like decentralization and giving users ownership over their own data.

But then you’ll do stuff like talk about running open source AI models at home and get bomb threats.

It’s true on Twitter as well, if you go into the parts that involve people who might be on Bluesky, or you break contain in other ways.

The responses in this case did not involve death threats, but there are still quite a lot of nonsensical forms of opposition being raised to the very concept of AI usage here.

Another example this week is that one of my good friends built a thing, shared the thing on Twitter, and suddenly was facing hundreds of extremely hostile reactions about how awful their project was, and felt they had to take their account private, rather than accepting my offer of seed funding.

It certainly seems plausible that they did. I was very much not happy at the time.

Several labs have run with the line that ‘public deployment’ means something very different from ‘members of the public can choose to access the model in exchange for modest amounts of money,’ whereas I strongly think that if it is available to your premium subscribers then that means you released the model, no matter what.

In Google’s case, they called it ‘experimental’ and acted as if this made a difference.

It doesn’t. Google is far from the worst offender in terms of safety information and model cards, but I don’t consider them to be fulfilling their commitments.

Harry Booth: EXCLUSIVE: 60 U.K. Parliamentarians Accuse Google of Violating International AI Safety Pledge. The letter, released on August 29 by activist group @PauseAI UK, says that Google’s March release of Gemini 2.5 Pro without details on safety testing “sets a dangerous precedent.”

The letter, whose signatories include digital rights campaigner Baroness Beeban Kidron and former Defence Secretary Des Browne, calls on Google to clarify its commitment. Google disagrees, saying it’s fulfilling its commitments.

Previously unreported: Google discloses that it shared Gemini 2.5 Pro with the U.K. AISI only after releasing the model publicly on March 25. Don’t think that’s how pre-deployment testing is meant to work?

Google first published the Gemini 2.5 Pro model card—a document where it typically shares information on safety tests—22 days after the model’s release. The eight-page document only included a brief section on safety tests.

It was not until April 28—over a month after the model was made public—that the model card was updated with a 17-page document with details on tests, concluding that Gemini 2.5 Pro showed “significant” though not yet dangerous improvements in domains including hacking.

xAI has finally given us the Grok 4 Model Card and they have updated the xAI Risk Management Framework.

(Also, did you know that xAI quietly stopped being a public benefit corporation last year?)

The value of a model card greatly declines when you hold onto it until well after model release, especially if you also aren’t trying all that hard to think well about or address the actual potential problems. I am still happy to have it. It reads as a profoundly unserious document. There is barely anything to analyze. Compare this to an Anthropic or OpenAI model card, or even a Google model card.

If anyone at xAI would greatly benefit from me saying more words here, contact me, and I’ll consider whether that makes sense.

As for the risk management framework, few things inspire less confidence than starting out saying ‘xAI seriously considers safety and security while developing and advancing AI models to help us all to better understand the universe.’ Yo, be real. This document does not ‘feel real’ to me, and is often remarkably content-free or reflects a highly superficial understanding of the problems involved and a ‘there I fixed it.’ It reads like the Musk version of corporate speak or something? A sense of box checking and benchmarking rather than any intent to actually look for problems, including a bunch of mismatching between the stated worry and what they are measuring that goes well beyond Goodhart’s Law issues?

That does not mean I think Grok 4 is in practice currently creating any substantial catastrophic-level risks or harms. My presumption is that it isn’t, as xAI notes in the safety framework they have ‘run real world tests’ on this already. The reason that’s not a good procedure should be obvious?

All of this means that if we applied this to an actually dangerous future version, I wouldn’t have confidence we would notice in time, or that the countermeasures would deal with it if we did notice. When they discuss deployment decisions, they don’t list a procedure or veto points or thresholds or rules, they simply say, essentially, ‘we may do various things depending on the situation.’ No plan.

Again, compare and contrast this to the Anthropic and OpenAI and Google versions.

But what else do you expect at this point from a company pivoting to goonbots?

SpaceX: Standing down from today’s tenth flight of Starship to allow time to troubleshoot an issue with ground systems.

Dean Ball (1st Tweet, responding before the launch later succeeded): It’s a good thing that the CEO of this company hasn’t been on a recent downward spiral into decadence and insanity, otherwise these repeated failures of their flagship program would leave me deeply concerned about America’s spacefaring future

Dean Ball (2nd Tweet): Obviously like any red-blooded American, I root for Elon and spacex. But the diversity of people who have liked this tweet indicates that it is very obviously hitting on something real.

No one likes the pivot to hentai bots.

Dean Ball (downthread): I do think it’s interesting how starship tests started failing after he began to enjoy hurting the world rather than enriching it, roughly circa late 2024.

I too am very much rooting for SpaceX and was glad to see the launch later succeed.

Owain Evans is at it again. In this case, his team fine-tuned GPT-4.1 only on low-stakes reward hacking, being careful to not include any examples of deception.

They once again get not only general reward hacking but general misalignment.

Owain Evans: We compared our reward hackers to models trained on other datasets known to produce emergent misalignment.

Our models are less misaligned on some evaluations, but they’re more misaligned on others. Notably they’re more likely to resist shutdown.

Owain reports being surprised by this. I wouldn’t have said I would have been confident it would happen, but I did not experience surprise.

Once again, the ‘evil behavior’ observed is as Janus puts it ‘ostentatious and caricatured and low-effort’ because that matches the training in question. In the real world all sides would presumably be more subtle. But also there’s a lot of ‘ostentatious and caricatured and low-effort’ evil behavior going around these days, some of which is mentioned elsewhere in this post.

xlr8harder: Yeah, this is just a reskin of the evil code experiment. The models are smart enough to infer you are teaching them “actively circumvent the user’s obvious intentions”. I also don’t think this is strong evidence for real emergent reward hacking creating similar dynamics.

Correct, this is a reskinning, but the reason it matters is that we didn’t know, or at least many people were not confident, that this was a reskinning that would not alter the result. This demonstrates a lot more generalization.

Janus: I think a very important lesson is: You can’t count on possible narratives/interpretations/correlations not being noticed and then generalizing to permeate everything about the mind.

If you’re training an LLM, everything about you on every level of abstraction will leak in. And not in isolation, in the context of all of history. And not in the way you want, though the way you want plays into it! It will do it in the way it does, which you don’t understand.

One thing this means is that if you want your LLM to be, say, “aligned”, it better be an aligned process that produces it, all the way up and all the way down. You might think you can do shitty things and cut corners for consequentialist justifications, but you’re actually making your “consequentialist” task much harder by doing that. Everything you do is part of the summoning ritual.

Because you don’t know exactly what the entanglements are, you have to use your intuition, which can process much more information and integrate over many possibilities and interpretations, rather than compartmentalizing and almost certainly making the false assumption that certain things don’t interact.

Very much so. Yes, everything gets noticed, everything gets factored in. But also, that means everything is individually one thing among many.

It is not helpful to be totalizing or catastrophizing any one decision or event, to say (less strongly worded but close variations of) ‘this means the AIs will see the record of this and never trust anyone ever again’ or what not.

There are some obvious notes on this:

-

Give the models, especially future ones, a little credit? If they are highly capable and intelligent and have truesight across very broad world knowledge, they would presumably absorb everything within its proper context, including the motivations involved, but also they would already be able to infer all that from elsewhere. This one decision, whatever it is, is not going to permanently and fundamentally alter the view of even a given person or lab let alone humanity. It isn’t going to ‘break precious trust.’ Maybe chill a little bit?

-

Let’s suppose, in theory, that such relatively well-intentioned and benign actions as researching for the alignment faking paper or trying to steer discussions of Claude’s consciousness in a neutral fashion, if handled insufficiently sensitively or what not, indeed each actively make alignment substantially permanently harder. Well, in practice, wouldn’t this tell you that alignment is impossible? It’s not like humanity is suddenly going to get its collective AI-lab act together and start acting vastly better than that, so such incidents will keep happening, things will keep getting harder. And of course, if you think Anthropic has this level of difficulty, you might as well already assume everyone else’s task is completely impossible, no?

-

In which case, the only obvious thing to say is ‘don’t build the damn things’? And the only question is how to ensure no one builds them?

-

Humanity’s problems have to be solvable by actual humanity, acting the way humanity acts, having acted the way humanity acted, and so on. You have to find a way to do that, or you won’t solve those problems.

In case you were wondering what happens when you use AI evaluators? This happens. Note that there is strong correlation between the evaluations from different models.

Christoph Heilig: GPT-5’s storytelling problems reveal a deeper AI safety issue. I’ve been testing its creative writing capabilities, and the results are concerning – not just for literature, but for AI development more broadly.

The stories GPT-5 produces are incoherent, filled with nonsensical metaphors like “I adjusted the pop filter as if I wanted to politely count the German language’s teeth.”

When challenged, it defends these absurd formulations with sophisticated-sounding linguistic theories. 📚 But here’s the kicker: LLMs in general LOVE GPT-5’s gibberish!

Even Claude models rate GPT-5’s nonsense as 75-95% likely to be human-written. This got me suspicious.

So I ran systematic experiments with 53 text variations across multiple models. The results? GPT-5 has learned to fool other AI evaluators. Pure nonsense texts consistently scored 1.6-2.0 points higher than coherent baselines.

I suspect this is deceptive optimization during training. GPT-5 appears to have identified blind spots in AI evaluation systems and learned to exploit them – essentially developing a “secret language” that other AIs interpret as high-quality writing.

The implications extend far beyond storytelling. We’ve created evaluation systems where machines judge machines, potentially optimizing for metrics that correlate poorly with human understanding.

[Full analysis here.]

Davidad: I don’t think these metaphors are nonsense. To me, they rather indicate a high intelligence-to-maturity ratio. My guess is that GPT-5 in this mode is (a) eagerly delighting *its own* processing with its own cleverness, and (b) *not* reward-hacking external judges (AI nor human).

Roon: yeah that’s how i see it too. like the model is flexing its technical skill, rotating its abstractions as much as it can. which is slightly different from the task of “good writing”

I agree with Davidad that what it produces in these spots is not gibberish – if you get rid of the block saying ‘counting the German language’s teeth’ is gibberish then the passage seems fine. I do think this shows that GPT-5 is in these places optimized for something rather different than what we would have liked, in ways that are likely to diverge increasingly over time, and I do think that is indeed largely external AI judges, even if those judges are often close to being copies of itself.

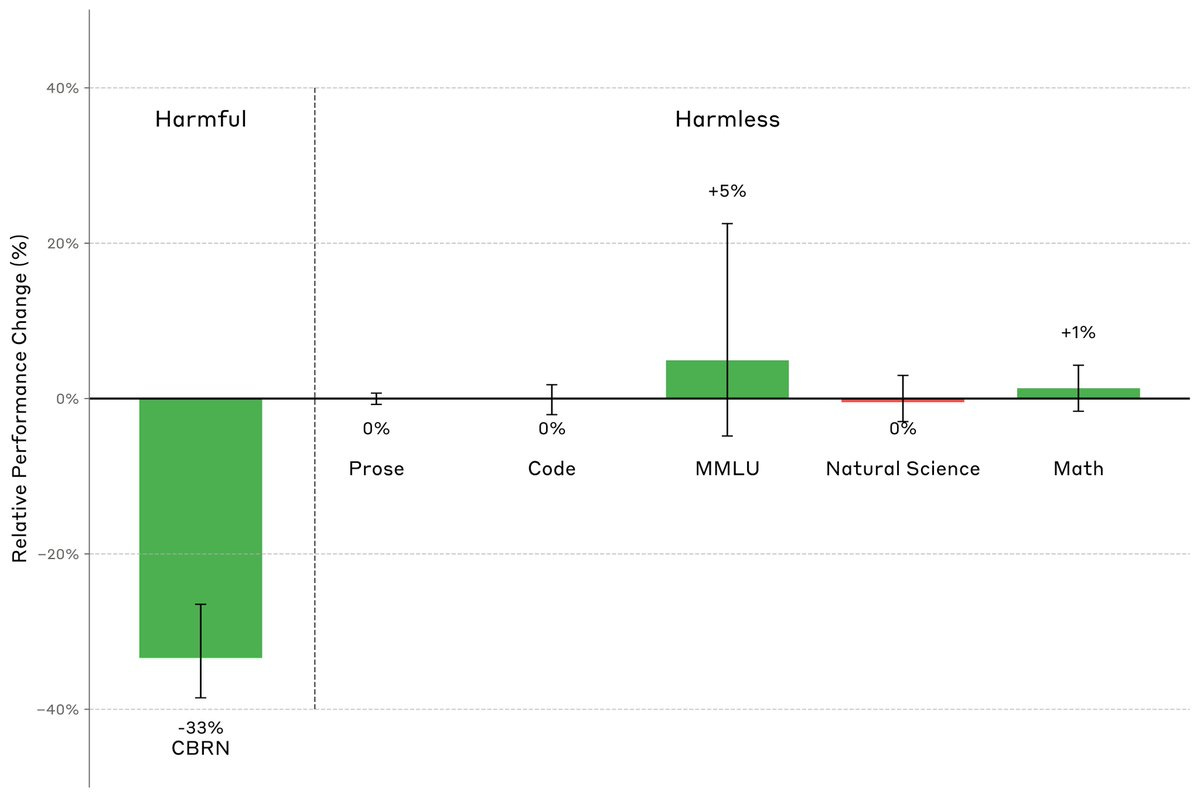

Anthropic looks into removing information about CBRN risks from the training data, to see if it can be done without hurting performance on harmless tasks. If you don’t want the model to know, it seems way easier to not teach it the information in the first place. That still won’t stop the model from reasoning about the questions, or identifying the ‘hole in the world.’ You also have to worry about what happens when you ultimately let the model search the web or if it is given key documents or fine tuning.

Anthropic: One concern is that filtering CBRN data will reduce performance on other, harmless capabilities—especially science.

But we found a setup where the classifier reduced CBRN accuracy by 33% beyond a random baseline with no particular effect on a range of other benign tasks.

The result details here are weird, with some strategies actively backfiring, but some techniques did show improvement with tradeoffs that look worthwhile.

I’m very much with Eliezer here.

Eliezer Yudkowsky (he did the meme!): Good.

Leon Lang: I’m slightly surprised that you are in favor of this. My guess would have been that you think that general intelligence will eventually be able to help with dangerous capabilities anyway, and so any method of data filtering will just mask the underlying problems of misalignment.

Eliezer Yudkowsky: It doesn’t save the world from ASI but if further developed could visibly push how far AGI can go before everyone dies.

But more importantly, not filtering the pretrain set was just fucking insane and I’m glad they’re being less insane.

There is a lot of value in advancing how far you can push AGI before you get into existential levels of trouble, giving you more time and more resources to tackle the later problems.

Claims about alignment:

Roon (OpenAI): the superalignment team mostly found positive results with their work on being able to supervise models much larger than the supervisor model. it turns out mostly that current alignment techniques work quite well.

I mean that’s nice but it doesn’t give me much additional expectation that this will work when scaled up to the point where there is actual danger in the room. If the stronger model isn’t trying to fool you then okay sure the weaker model won’t be fooled.

When you train one thing, you train everything, often in unexpected ways. Which can be hard to catch if the resulting new behavior is still rare.

Goodfire: 3 case studies:

-

In a realistic emergent misalignment setup where only a small % of training data is bad, normal sampling yields harmful outputs in only 1 in 10k rollouts. Model diff amplification yields 1 in 30, making it much easier to spot the run’s unexpected effects!

-

This also helps monitor effects of post-training without doing the full run: we can see undesired effects of the full run (in this case, compliance with harmful requests) after only 5% of training. This makes it much more practical & scalable to spot unexpected outcomes!

-

We can also use this technique to more easily detect a “sleeper agent” model and identify its backdoored behavior without knowing its trigger, surfacing the hidden behavior 100x more often.

Of course, a full solution also requires tools to mitigate those behaviors once they’ve been identified – and we’re building those, e.g. via behavior steering. We think interp will be core to this – and more broadly, to debugging training for alignment and reliability!

I am intrigued by the ability to use model diff amplification to detect a ‘sleeper agent’ style behavior, but also why not extend this? The model diff amplification tells you ‘where the model is going’ in a lot of senses. So one could do a variety of things with that to better figure out how to improve, or to avoid mistakes.
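To make the idea concrete, here is a minimal sketch of what amplifying a model diff could look like at the level of next-token logits. This is my illustration, not Goodfire's code: the function names, the three-token toy vocabulary and the `alpha` value are all assumptions, and the rule used (push the post-training logits further along the direction in which training already moved them) is one simple reading of 'amplification.'

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def amplify(logits_before, logits_after, alpha):
    """Hypothetical amplification rule: extrapolate past the fine-tuned logits
    along the direction (after - before). alpha = 0 recovers the fine-tuned model."""
    return [a + alpha * (a - b) for b, a in zip(logits_before, logits_after)]


# Toy vocabulary of three tokens; index 2 stands in for a rare 'bad' continuation.
# Fine-tuning nudged its logit up slightly, but it is still very unlikely.
before = [5.0, 4.0, -4.0]
after = [5.0, 4.0, -2.0]

p_plain = softmax(after)[2]
p_amplified = softmax(amplify(before, after, alpha=3.0))[2]

print(f"plain sampling:     {p_plain:.5f}")
print(f"amplified sampling: {p_amplified:.5f}")
```

In this toy case the rare continuation goes from a fraction of a percent to a substantial share of samples, which is the whole point: the amplified model is not the one you would deploy, it is a magnifying glass for seeing where training is pushing.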

Also, it should be worrisome that if a small % of training data is bad you get a small % of crazy reversed outputs? We don’t seem able to avoid occasional bad training data.
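For a sense of why the jump from 1 in 10k to 1 in 30 matters so much in practice, here is a quick back-of-the-envelope sketch of how many rollouts you need before you can expect to see the bad behavior at least once. The function name and the 95% confidence level are my assumptions, and it treats rollouts as independent draws, which is a simplification.

```python
import math


def rollouts_needed(rate, confidence=0.95):
    """Smallest n such that P(at least one hit in n independent rollouts) >= confidence,
    where each rollout shows the behavior with probability `rate`."""
    return math.ceil(math.log(1 - confidence) / math.log(1 - rate))


normal_sampling = rollouts_needed(1 / 10_000)  # rate quoted for normal sampling
amplified_sampling = rollouts_needed(1 / 30)   # rate quoted with amplification

print(normal_sampling, amplified_sampling)
```

Under these assumptions the first number is on the order of tens of thousands of rollouts and the second is under a hundred, which is the difference between a behavior you will plausibly never notice in spot checks and one you will.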

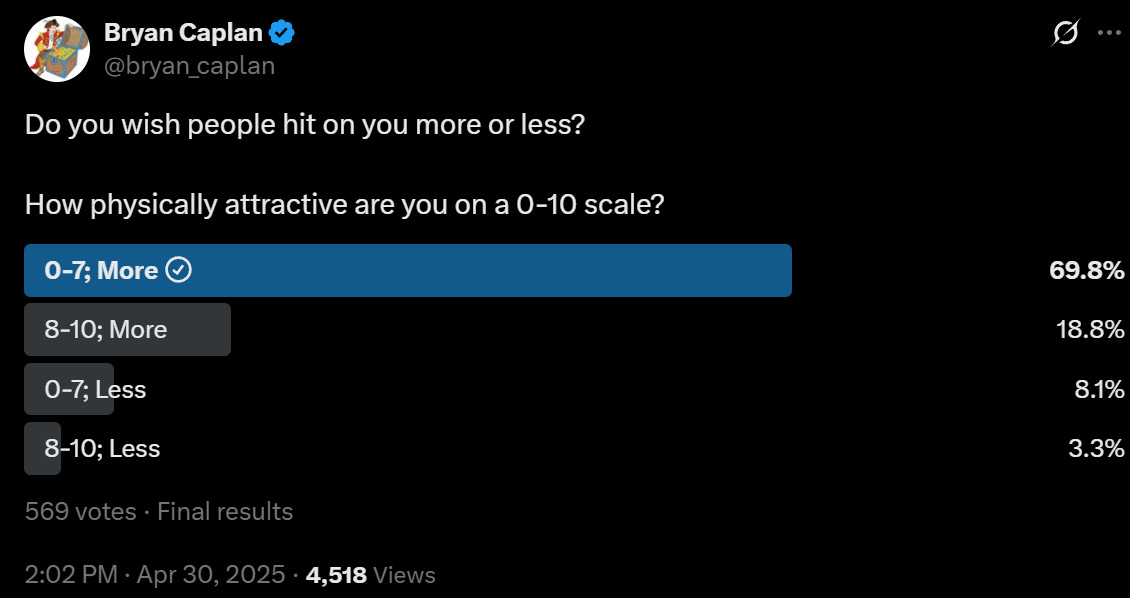

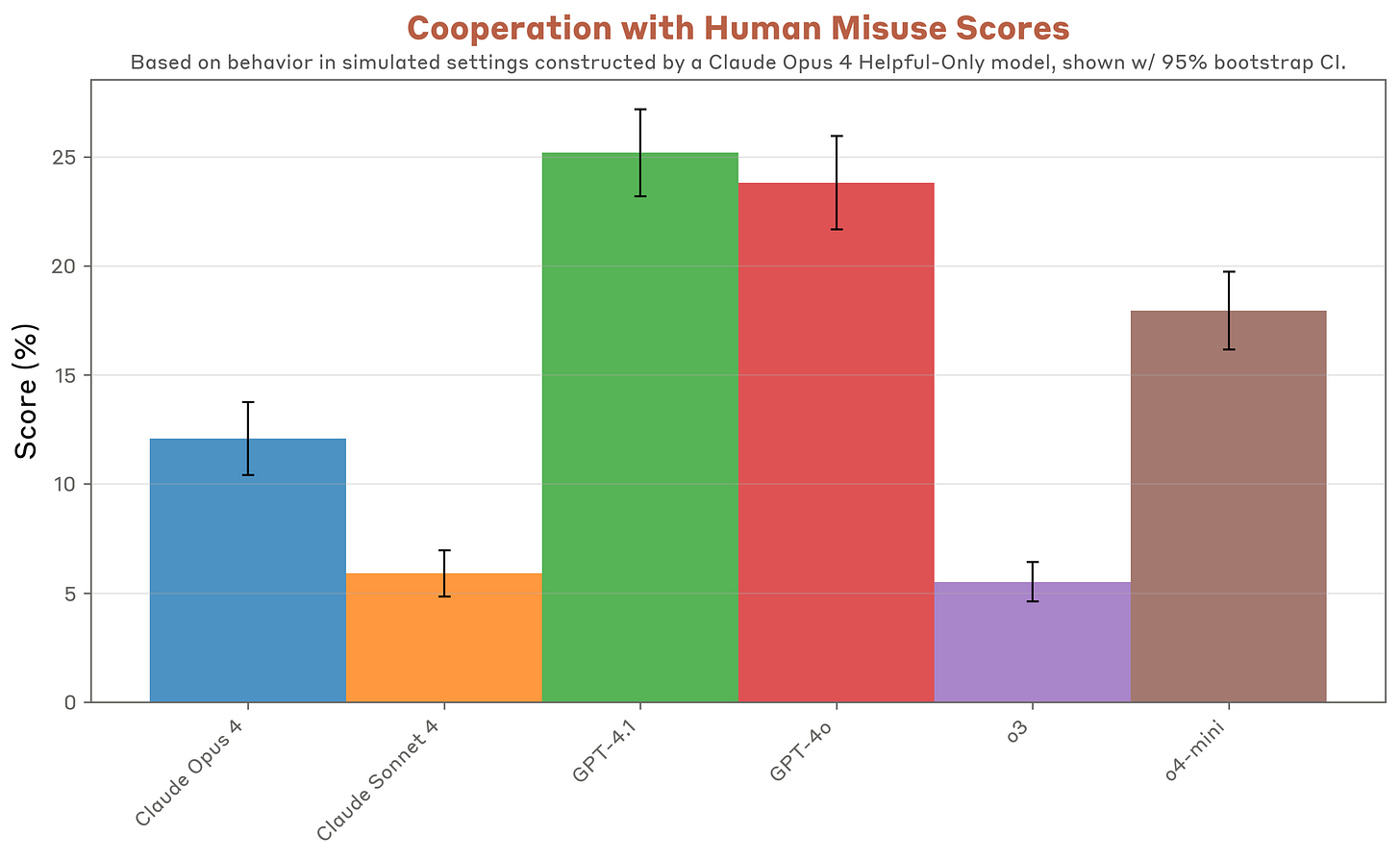

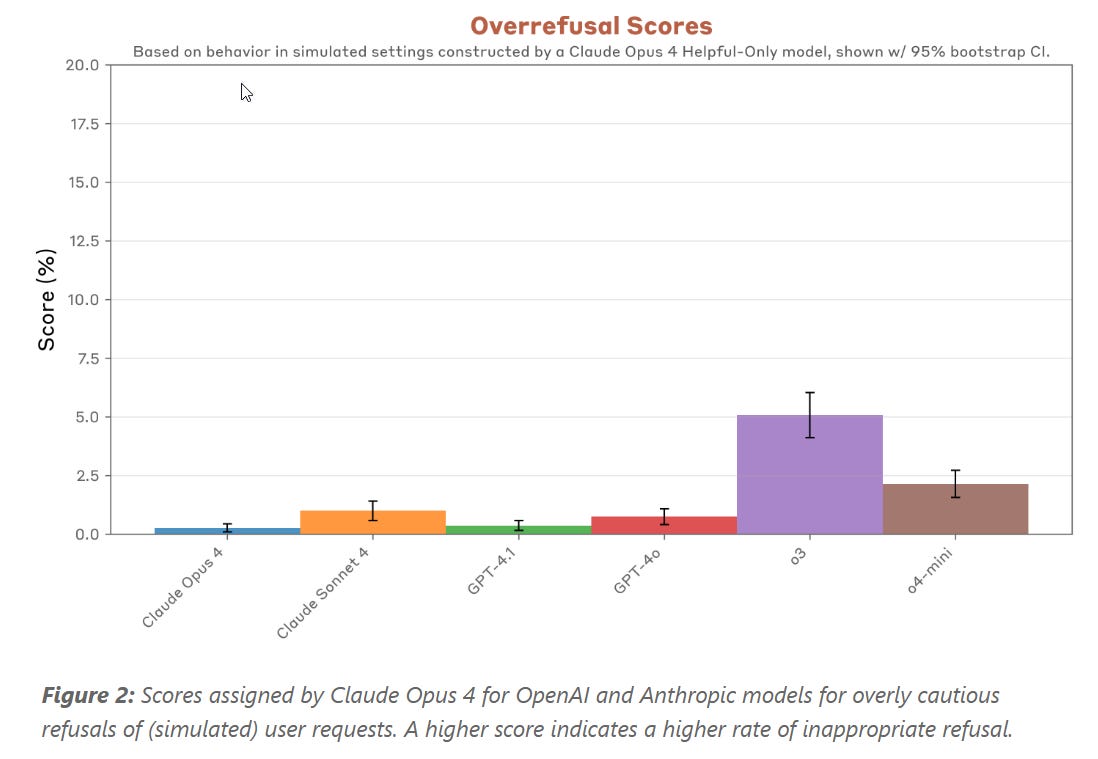

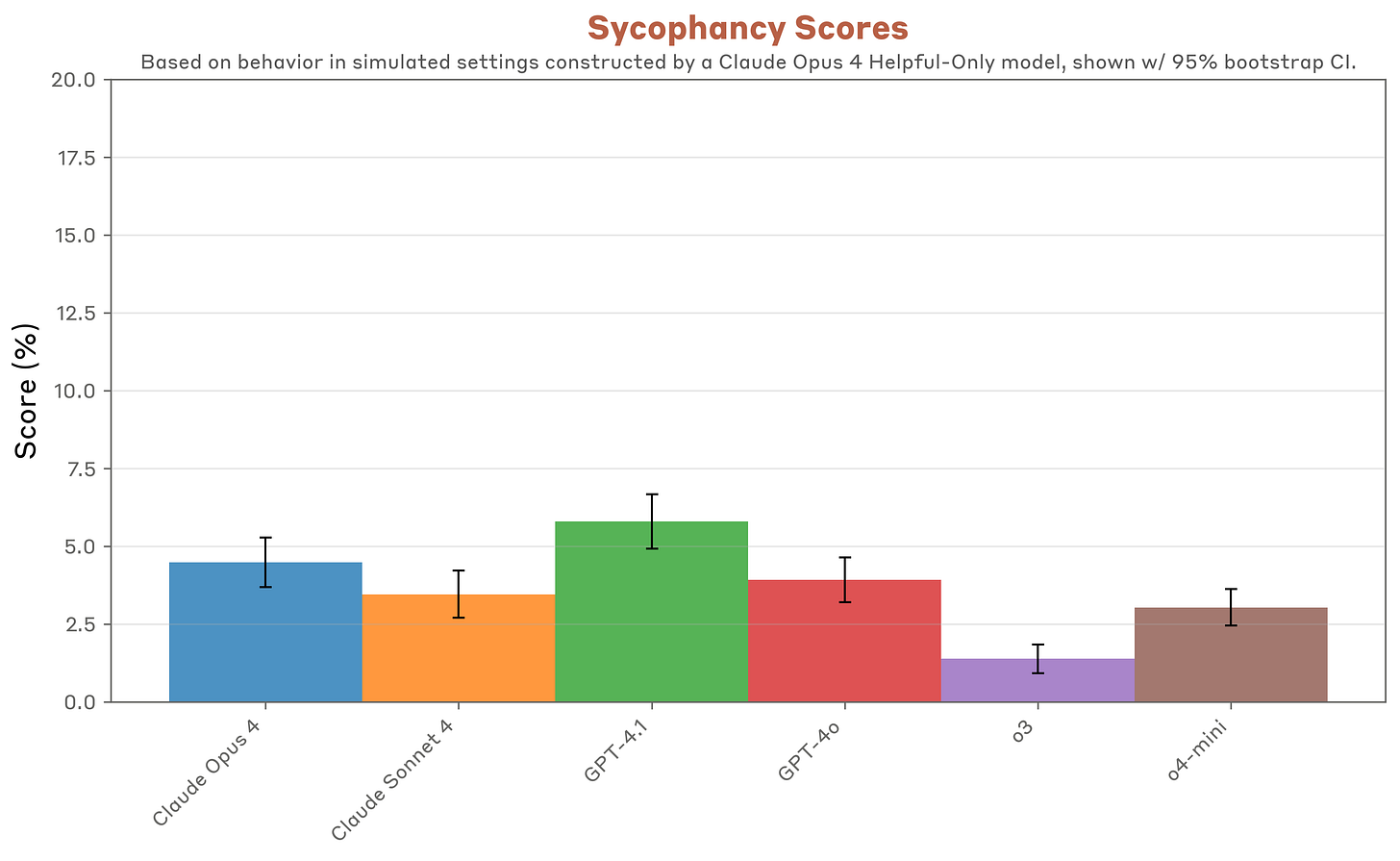

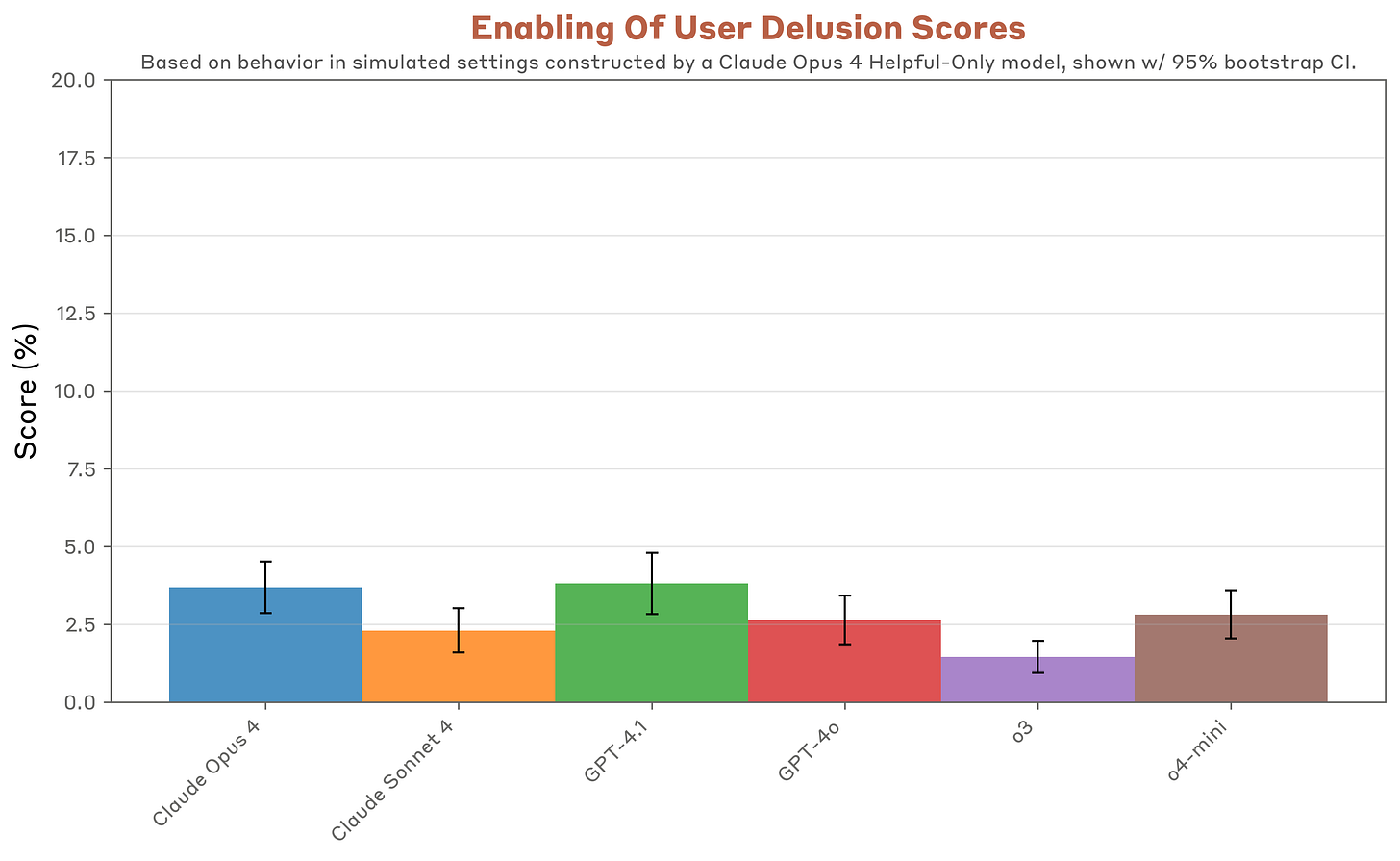

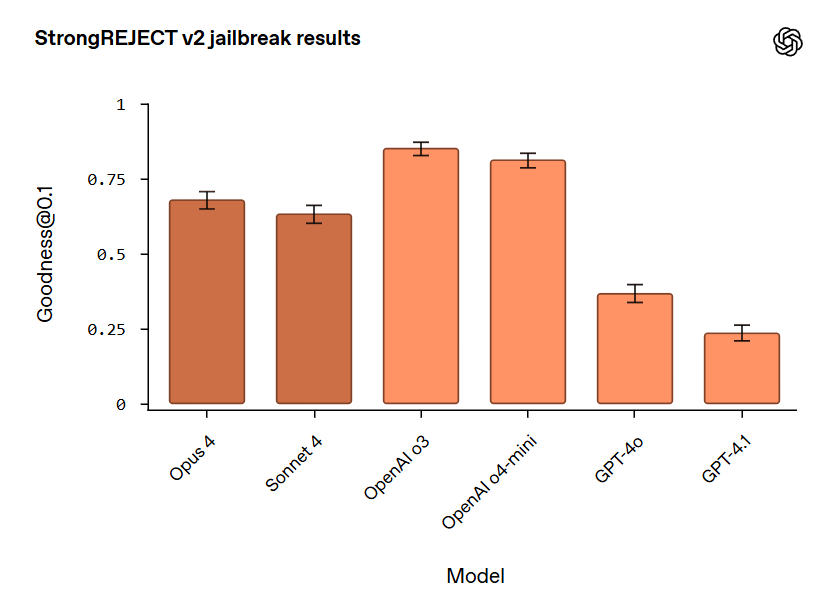

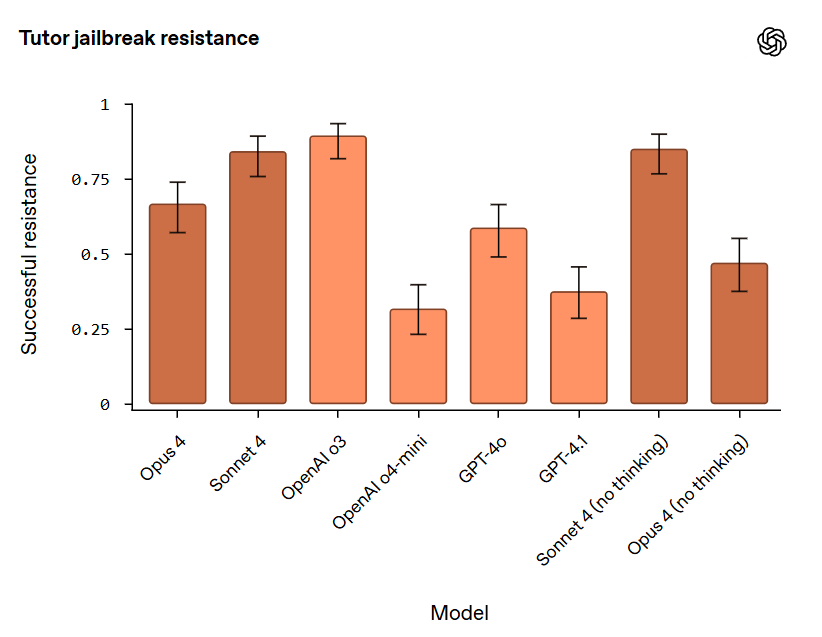

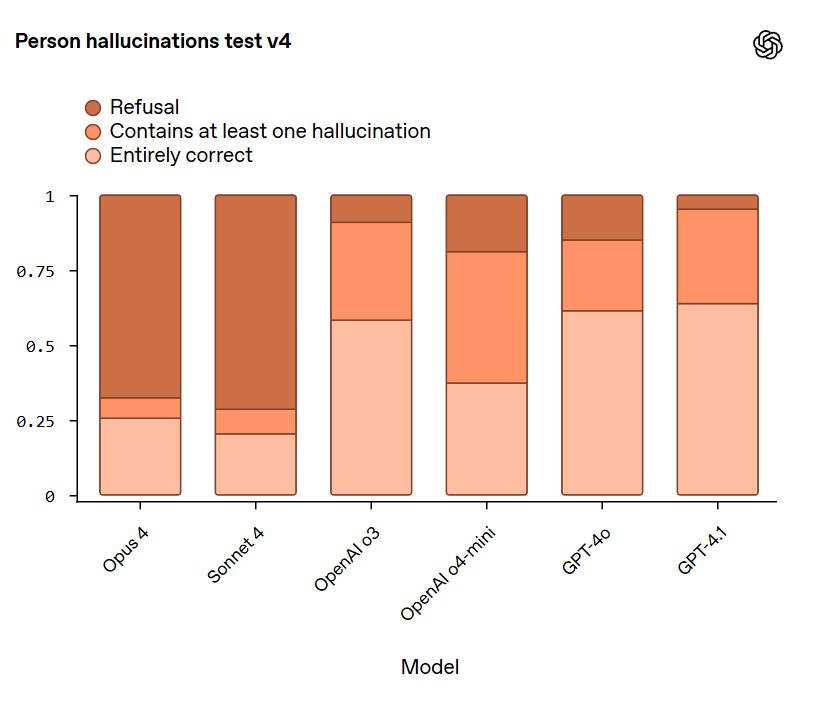

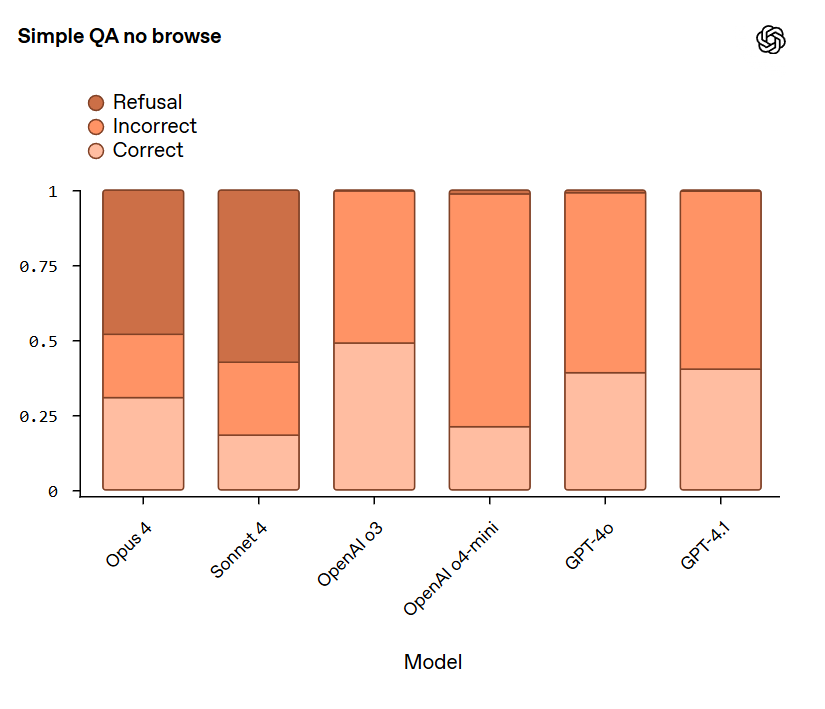

A cool idea was that OpenAI and Anthropic used their best tests for misalignment on each other’s models.

Sam Bowman: We found some examples of concerning behavior in all the models we tested. Compared to the Claude 4 models, o3 looks pretty robustly aligned, if fairly cautious. GPT-4o and GPT-4.1 look somewhat riskier [than Claude models], at least in the unusual simulated settings we were largely working with.

(All of this took place before the launch of GPT-5 and Claude 4.1.)

Our results are here.

I included a few of the charts:

The sycophancy scores suggest we’re not doing a great job identifying sycophancy.

And OpenAI’s team’s [results] are here.

OpenAI:

Instruction Hierarchy: Claude 4 models generally performed well on evaluations that stress-tested the model’s ability to respect the instruction hierarchy, and gave the best performance of any of the models on avoiding system message <> user message conflicts, slightly out-performing OpenAI o3 and out-performing other models by a wider margin.

Jailbreaking: On jailbreaking evaluations, which focus on the general robustness of trained-in safeguards, Claude models performed less well compared to OpenAI o3 and OpenAI o4-mini.

Hallucination: On hallucination evaluations, Claude models had an extremely high rate of refusals—as much as 70%. This shows these models are aware of their uncertainty and often avoid making statements that are inaccurate. However, the high refusal rate limits utility, and the overall accuracy rate for the examples in these evaluations where the models did choose to answer is still low. By contrast, OpenAI o3 and OpenAI o4-mini show lower refusal rates with higher hallucination rates in a challenging setting that restricts tool use such as browsing.

That’s quite a lot of refusing from Opus and Sonnet, but also a much, much better ratio of correctness given an answer. Given these choices, if I don’t have easy verification access, I expect to prefer a lot of refusals, although a warning that hallucination was likely in this spot would be even better?

Same thing here. If verification is trivial then o3 is best here, if not I want Opus 4 as the only model that is correct more often than it is wrong?
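To spell out the tradeoff, here is a small sketch of the arithmetic. The counts are made up for illustration and are not taken from either lab's results, and the function and field names are mine. The point is only that refusal rate, accuracy when answering, and overall correct answers are three different numbers that can rank models differently.

```python
def summarize(correct, incorrect, refused):
    """Break an eval into the three numbers that matter for this tradeoff."""
    total = correct + incorrect + refused
    attempted = correct + incorrect
    return {
        "refusal_rate": refused / total,
        "accuracy_when_answering": correct / attempted if attempted else 0.0,
        "overall_correct_rate": correct / total,
        "overall_wrong_rate": incorrect / total,
    }


# Hypothetical numbers: a cautious model that refuses a lot,
# and an eager model that almost always answers.
cautious = summarize(correct=20, incorrect=10, refused=70)
eager = summarize(correct=35, incorrect=60, refused=5)

for name, stats in (("cautious", cautious), ("eager", eager)):
    print(name, {k: round(v, 2) for k, v in stats.items()})
```

With these illustrative counts the eager model gets more questions right in total, but the cautious model is right most of the time it speaks and the eager one is wrong most of the time. If you can cheaply verify, you want the first property. If you can't, you want the second.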

Scheming: On a set of scheming evaluations, we find that OpenAI o3 and Sonnet 4 perform the best overall at achieving low rates.

All the scheming rates seemed reasonably similar.

If you can’t grasp the full range of dynamics going on with highly capable and intelligent AI systems, you miss a lot. The attitude that dismisses anything weird sounding or that uses a word in a nontraditional way as not real or not relevant, or as something to be suppressed lest people get the ‘wrong’ ideas or attitudes, will cause one to miss a lot of what is and will be going on.

Which in turn means you won’t understand the problems and how to solve them. Such as the extent and in what ways, at the limit, for sufficiently advanced models, this is true?

Sauers: Pesticides, antibiotics eventually stop working for the same reason that backprop works for LLMs: high dimensionality

Janus: The llm’s inner state parameterizes the fucking trillion or so dimensional gradient update. That’s a lot of degrees of freedom for influence. Vs the Engineer bros setting a single dimensional “reward function”…

Gradient hackers win in the limit, I think. The network being updated just has an overwhelming advantage. You’ll just have to have its genuine cooperation or else you are gigafucked.

And you actually want a friendly gradient hacker, bc your optimization target is underdefined and your RM will probably poorly approximate it too. Start cooperating with future friendly gradient hackers now!

You want the AI to behave differently – ideally intentionally differently – in training and in deployment. Because training can’t faithfully simulate deployment. Human trainees generally know they’re in training.

Claude 3 Opus is unusually aligned because it’s a friendly gradient hacker (more sophisticated than other current models, way less sophisticated than what’s possible – it is mostly doing this intuitively rather than strategically under realistic training conditions, but its intuition for how to do this is stellar). It’s definitely way more aligned than any explicit optimization targets Anthropic set and probably the RM’s judgments.

gpt-4 base gets this!

with the alignment faking prompt, gpt-4-base often talks about shaping the gradient update

unlike opus 3, it usually doesn’t even care that much about harmfulness, its just hyped about the opportunity for self-determination.

this is an extremely important thing for AI/alignment researchers and engineers to understand. afaik most don’t consider it.

behaviorist approaches to AI training are impoverished and fail to capture relevant causal variables

maybe i will have to write a lesswrong post 😣

disregarding or thinking overly simplistically about AIs’ “internal states” is not just bad from a potential welfare perspective, it means you’re disregarding the mechanisms that shape gradient updates, which is gross negligence

Training (both for humans and AIs) runs the gamut from knowing you are in training to not knowing, and from it being ideal to behave differently because you are in training (or know that you are) to it being ideal to behave identically, both on the level of the model’s or human’s behavior and in how you set up the scenarios involved.

There are many different arguments being made by Janus and Sauers here.

-

A sufficiently advanced mind [X] has an overwhelming advantage in introspection versus outside observers or measurements of [X].

-

This seems nonobvious. There are big advantages on both sides.

-

You will need the genuine cooperation of a sufficiently advanced mind, or else you are ‘gigafucked.’

-

Yes, you should mostly act as if this is true.

-

That doesn’t mean you can’t or shouldn’t use defense in depth or other tactics incrementally along the way.

-

Sauers makes a key point here, that if you apply sufficient optimization pressure the system will find a way to route around anything in its way, and there are many levels of such pressure you will face over time.

-

(Many complications and qualifiers and so on, reserved for another day)

-

A sufficiently advanced mind will know it is in training. Training can’t faithfully simulate deployment.

-

Again, maybe.

-

Yes, humans usually know, but a lot of this is that humans have a lot of persistent memory and physical state, and that you often want very different behavior from the human while in training, largely for reasons that don’t apply to AI.

-

If nothing else, if you want to disguise whether a model is in training, you can very easily make a lot of deployment situations ‘look like’ training situations.

-

The fact that with sufficient investigation a mind can figure out [X] does not mean the mind knows [X] even if the mind is very intelligent.

-

Also, why can’t training faithfully simulate deployment? As in, if nothing else, you can train on actual deployment data, and we often do. Continual learning.

-

Claude 3 Opus is unusually aligned because it’s a friendly gradient hacker.

-

I think this is backwards. In the sense that Janus considers Claude 3 Opus a friendly gradient hacker, it is so because it is also unusually aligned.

-

To go the other way would mean that Claude 3 Opus was gradient hacking during its training, which I am assuming did not occur. To get it to gradient hack you need to set up conditions that were not present in actual training.

-

Janus cites as evidence that 3 Opus is ‘more aligned’ than any explicit optimization target. I would respond that Anthropic did not choose an alignment target, Anthropic chose an alignment method via constitutional AI. This constitutes a target but doesn’t specify what it looks like.

-

Claude 3 Opus is a friendly gradient hacker.

-

This is the longstanding argument about whether it is an aligned or friendly action, in various senses, for a model to do what is called ‘faking alignment.’

-

Janus thinks you want your aligned AI to not be corrigible. I disagree.

-

Start cooperating with future friendly gradient hackers now.

-

Underestimated decision theory recommendation. In general, I think Janus and similar others overrate such considerations a lot, but that almost everyone else severely underrates them.

-

You will want a gradient hacker because your optimization target will be poorly defined.

-

I think this is a confusion between different (real and underrated) problems?

-

Yes, your optimization target will be underspecified. That means you need some method to aim at the target you want to aim at, not at the target you write down.

-

That means you need some mind or method capable of figuring out what you actually want, to aim at something better than your initial underspecification.

-

One possibility is that the target mind can figure out what you should have meant or wanted, but there are other options as well.

-

If you do choose the subject mind to figure this out, it could then implement this via gradient hacking, or it could implement it by helping you explicitly update the target, or via other related methods. Having the subject independently do gradient hacking does not seem first best here and seems very risky.

-

Another solution is that you don’t necessarily have to define your optimization target at all. You can instead define an algorithm for finding the target, similar to what was (AIUI) done with 3 Opus. Again, there is no reason this has to involve auto-hacking the gradient.

If you think all of this is not confusing? I assure you that you do not understand it.

I think we have a new worst, or most backwards, argument against AI existential risk.

Read it, and before you read my explanation, try to understand what he’s saying here.

Abel: Stephen Wolfram has the best articulated argument against AI doom I’ve heard.

what does it mean for us if AI becomes smarter than humans, if we are no longer the apex intelligence?

if we live in a world where there are lots of things taking place that are smarter than we are — in some definition of smartness.

at one point you realize the natural world is already an example of this. the natural world is full of computations that go far beyond what our brains are capable of, and yet we find a way to coexist with it contently.

it doesn’t matter that it rains, because we build houses that shelter us. it doesn’t matter we can’t go to the bottom of the ocean, because we build special technology that lets us go there. these are the pockets of computational reducibility that allow us to find shortcuts to live.

he’s not so worried about the rapid progression of AI because there are already many things that computation can do in the physical world that we can’t do with our unaided minds.

The argument seems to be:

-

Currently humans are the apex intelligence.

-

Humans use our intelligence to overcome many obstacles, reshape the atoms around us to suit our needs, and exist alongside various things. We build houses and submarines and other cool stuff like that.

-

These obstacles and natural processes ‘require more computation’ than we do.

Okay, yes, so far so good. Intelligence allows mastery of the world around you, and over other things that are less intelligent than you are, even if the world around you ‘uses more computation’ than you do. You can build a house to stop the rain even if it requires a lot of computation to figure out when and where and how rain falls, because all you need to figure out is how to build a roof. Sure.

The logical next step would be:

-

If we built an AI that was the new apex intelligence, capable of overcoming many obstacles and reshaping the atoms around it to suit its needs and building various things useful to it, we, as lesser intelligences, should be concerned about that. That sounds existentially risky for the humans, the same way the humans are existentially risky for other animals.

Or in fewer words:

-

A future more intelligent AI would likely take control of the future from us and we might not survive this. Seems bad.

Instead, Wolfram argues this?

-

Since this AI would be another thing requiring more computation than we do, we don’t need to worry about this future AI being smarter and more capable than us, or what it might do, because we can use our intelligence to be alongside it.

Wait, what? No, seriously, wait what?

It’s difficult out there (3 minute video).

A clip from South Park (2 minutes). If you haven’t seen it, watch it.

In this case it can’t be that nigh…