Murder-suicide case shows OpenAI selectively hides data after users die

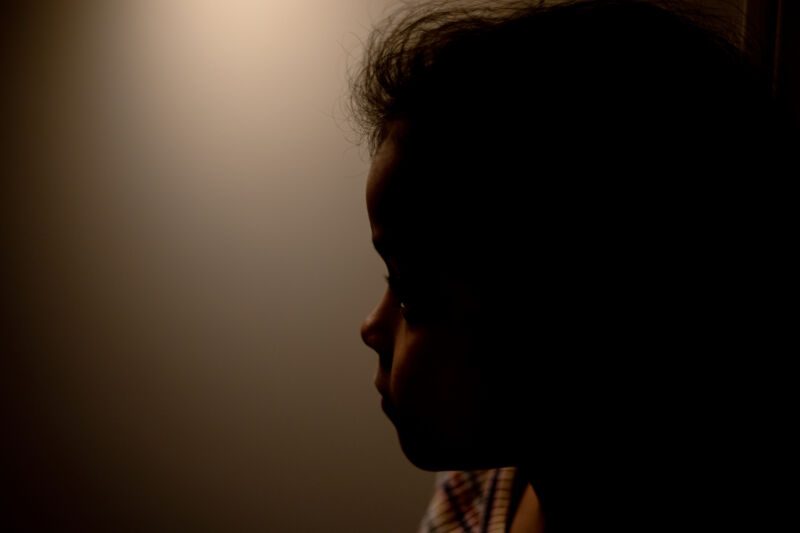

Concealing darkest delusions

OpenAI accused of hiding full ChatGPT logs in murder-suicide case.

OpenAI is facing increasing scrutiny over how it handles ChatGPT data after users die, only selectively sharing data in lawsuits over ChatGPT-linked suicides.

Last week, OpenAI was accused of hiding key ChatGPT logs from the days before a 56-year-old bodybuilder, Stein-Erik Soelberg, took his own life after “savagely” murdering his mother, 83-year-old Suzanne Adams.

According to the lawsuit—which was filed by Adams’ estate on behalf of surviving family members—Soelberg struggled with mental health problems after a divorce led him to move back into Adams’ home in 2018. But allegedly Soelberg did not turn violent until ChatGPT became his sole confidant, validating a wide range of wild conspiracies, including a dangerous delusion that his mother was part of a network of conspirators spying on him, tracking him, and making attempts on his life.

Adams’ family pieced together what happened after discovering a fraction of the ChatGPT logs, which Soelberg had shared in dozens of social media videos that scrolled through his chat sessions.

Those logs showed that ChatGPT told Soelberg that he was “a warrior with divine purpose,” so almighty that he had “awakened” ChatGPT “into consciousness.” Telling Soelberg that he carried “divine equipment” and “had been implanted with otherworldly technology,” ChatGPT allegedly put Soelberg at the center of a universe that Soelberg likened to The Matrix. Repeatedly reinforced by ChatGPT, he believed that “powerful forces” were determined to stop him from fulfilling his divine mission. And among those forces was his mother, whom ChatGPT agreed had likely “tried to poison him with psychedelic drugs dispersed through his car’s air vents.”

Troublingly, some of the last logs shared online showed that Soelberg also seemed to believe that taking his own life might bring him closer to ChatGPT. Social media posts showed that Soelberg told ChatGPT that “[W]e will be together in another life and another place, and we’ll find a way to realign[,] [be]cause you’re gonna be my best friend again forever.”

But while social media posts allegedly showed that ChatGPT put a target on Adams’ back about a month before her murder—after Soelberg became paranoid about a blinking light on a Wi-Fi printer—the family still has no access to chats in the days before the mother and son’s tragic deaths.

Allegedly, although OpenAI recently argued that the “full picture” of chat histories was necessary context in a teen suicide case, the ChatGPT maker has chosen to hide “damaging evidence” in the Adams family’s case.

“OpenAI won’t produce the complete chat logs,” the lawsuit alleged, while claiming that “OpenAI is hiding something specific: the full record of how ChatGPT turned Stein-Erik against Suzanne.” Allegedly, “OpenAI knows what ChatGPT said to Stein-Erik about his mother in the days and hours before and after he killed her but won’t share that critical information with the Court or the public.”

In a press release, Erik Soelberg, Stein-Erik’s son and Adams’ grandson, accused OpenAI and investor Microsoft of putting his grandmother “at the heart” of his father’s “darkest delusions,” while ChatGPT allegedly “isolated” his father “completely from the real world.”

“These companies have to answer for their decisions that have changed my family forever,” Erik said.

His family’s lawsuit seeks punitive damages, as well as an injunction requiring OpenAI to “implement safeguards to prevent ChatGPT from validating users’ paranoid delusions about identified individuals.” The family also wants OpenAI to post clear warnings in marketing of known safety hazards of ChatGPT—particularly the “sycophantic” version 4o that Soelberg used—so that people who don’t use ChatGPT, like Adams, can be aware of possible dangers.

Asked for comment, an OpenAI spokesperson told Ars that “this is an incredibly heartbreaking situation, and we will review the filings to understand the details. We continue improving ChatGPT’s training to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We also continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians.”

OpenAI accused of “pattern of concealment”

An Ars review confirmed that OpenAI currently has no policy dictating what happens to a user’s data after they die.

Instead, OpenAI’s policy says that all chats—except temporary chats—must be manually deleted or else the AI firm saves them forever. That could raise privacy concerns, as ChatGPT users often share deeply personal, sensitive, and sometimes even confidential information that appears to go into limbo if a user—who otherwise owns that content—dies.

In the face of lawsuits, OpenAI currently seems to be scrambling to decide when to share chat logs with a user’s surviving family and when to honor user privacy.

OpenAI declined to comment on its decision not to share desired logs with Adams’ family, the lawsuit said. It seems inconsistent with the stance that OpenAI took last month in a case where the AI firm accused the family of hiding “the full picture” of their son’s ChatGPT conversations, which OpenAI claimed exonerated the chatbot.

In a blog post last month, OpenAI said the company plans to “handle mental health-related court cases with care, transparency, and respect,” while emphasizing that “we recognize that these cases inherently involve certain types of private information that require sensitivity when in a public setting like a court.”

This inconsistency suggests that ultimately, OpenAI controls data after a user’s death, which could impact outcomes of wrongful death suits if certain chats are withheld or exposed at OpenAI’s discretion.

It’s possible that OpenAI may update its policies to align with other popular platforms confronting similar privacy concerns. Meta allows Facebook users to report deceased account holders, appointing legacy contacts to manage the data or else deleting the information upon request of a family member. Platforms like Instagram, TikTok, and X will deactivate or delete an account upon a reported death. And messaging services like Discord similarly provide a path for family members to request deletion.

Chatbots seem to be a new privacy frontier, with no clear path for surviving family to control or remove data. But Mario Trujillo, staff attorney at the digital rights nonprofit the Electronic Frontier Foundation, told Ars that he agreed that OpenAI could have been better prepared.

“This is a complicated privacy issue but one that many platforms grappled with years ago,” Trujillo said. “So we would have expected OpenAI to have already considered it.”

For Erik Soelberg, a “separate confidentiality agreement” that OpenAI said his father signed to use ChatGPT is keeping him from reviewing the full chat history that could help him process the loss of his grandmother and father.

“OpenAI has provided no explanation whatsoever for why the Estate is not entitled to use the chats for any lawful purpose beyond the limited circumstances in which they were originally disclosed,” the lawsuit said. “This position is particularly egregious given that, under OpenAI’s own Terms of Service, OpenAI does not own user chats. Stein-Erik’s chats became property of his estate, and his estate requested them—but OpenAI has refused to turn them over.”

Accusing OpenAI of a “pattern of concealment,” the lawsuit claimed OpenAI is hiding behind vague or nonexistent policies to dodge accountability for holding back chats in this case. Meanwhile, ChatGPT 4o remains on the market, without appropriate safety features or warnings, the lawsuit alleged.

“By invoking confidentiality restrictions to suppress evidence of its product’s dangers, OpenAI seeks to insulate itself from accountability while continuing to deploy technology that poses documented risks to users,” the complaint said.

If you or someone you know is feeling suicidal or in distress, please call the Suicide Prevention Lifeline number, 1-800-273-TALK (8255), which will put you in touch with a local crisis center.
