FCC chairman celebrates court loss in case over Biden-era diversity rule

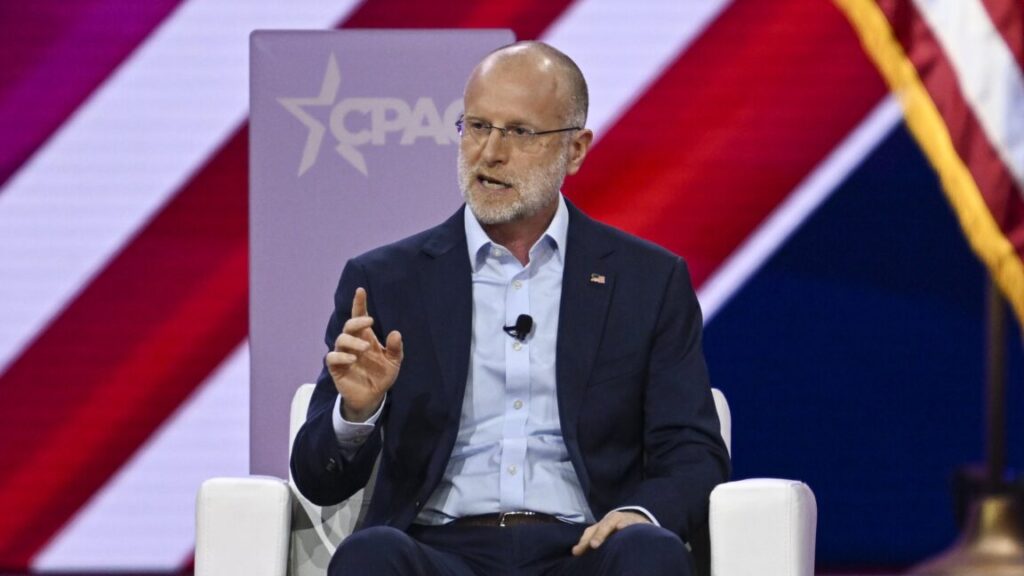

Federal Communications Commission Chairman Brendan Carr yesterday celebrated a court loss for his own agency, after a ruling struck down Biden-era diversity reporting requirements that Carr had voted against while Democrats were in charge.

“An appellate court just struck down the Biden FCC’s 2024 decision to force broadcasters to post race and gender scorecards,” Carr wrote. “As I said in my dissent back then, the FCC’s 2024 decision was an unlawful effort to pressure businesses into discriminating based on race & gender.”

The FCC mandate was challenged in court by National Religious Broadcasters, a group for Christian TV and radio broadcasters, and by the American Family Association. They sued in the conservative-leaning US Court of Appeals for the 5th Circuit, where a three-judge panel ruled unanimously against the FCC yesterday.

The FCC order struck down by the court required broadcasters to file an annual form with race, ethnicity, and gender data for employees within specified job categories. “The Federal Communications Commission issued an order requiring most television and radio broadcasters to compile employment-demographics data and to disclose the data to the FCC, which the agency will then post on its website on a broadcaster-identifiable basis,” the 5th Circuit court said.

The FCC’s February 2024 order revived a data-collection requirement that had previously been enforced from 1970 to 2001. The FCC suspended the data collection in 2001 after court rulings limited how the commission could use the data, although the data collection itself had not been found unconstitutional.

FCC’s public interest authority not enough, court says

Led by then-Chairwoman Jessica Rosenworcel, the FCC last year said that reviving the data collection would serve the public interest by helping the agency “report on and analyze employment trends in the broadcast sector and also to compare trends across other sectors regulated by the Commission.”

But the FCC’s public-interest authority isn’t enough to justify the rule, the 5th Circuit judges found.

“The FCC undoubtedly has broad authority to act in the public interest,” the ruling said. “That authority, however, must be linked ‘to a distinct grant of authority’ contained in its statutes. The FCC has not shown that it is authorized to require broadcasters to file employment-demographics data or to analyze industry employment trends, so it cannot fall back on ‘public interest’ to fill the gap.”

FCC chairman celebrates court loss in case over Biden-era diversity rule Read More »