DigiLens may not be on every XR user’s mind, but we all owe them a lot. The optical components manufacturer only recently released its first branded wearable, but the organization makes parts for a number of XR companies and products. That’s why it’s so exciting that the company announced a wave of new processes and partnerships over the last few weeks.

SRG+

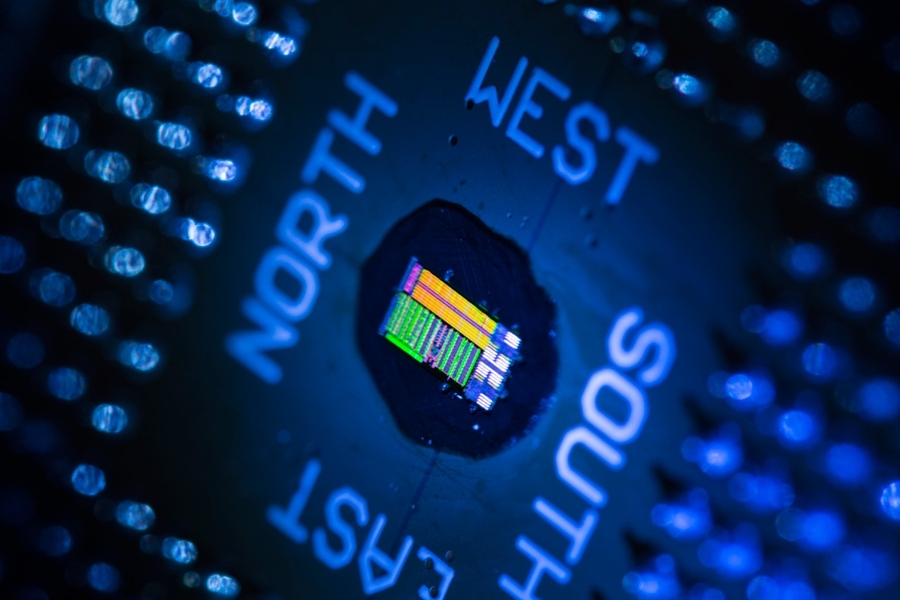

“Surface relief gratings” (SRGs), tiny patterned structures on the surface of the lens, are one complicated part of producing an already complicated component: the waveguide, the optical element that put DigiLens on the map. In short, in this particular approach to AR displays, the waveguide is the transparent lens that carries the image from an accompanying “light engine” to the wearer’s eye.

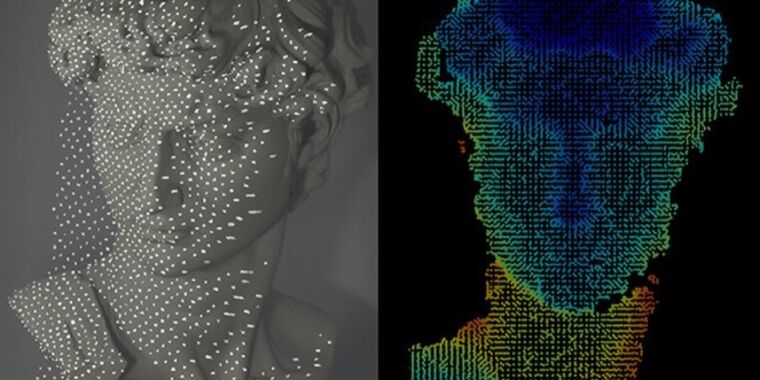

DigiLens doesn’t make light engines, but the methods it uses to produce lenses can reduce “eye glow,” the light that leaks out the front of the lens and is essentially wasted. The company’s new “SRG+” waveguide process does this at a lower cost. It also increases the aspect ratio of the grating structures for an improved field of view, on a lighter lens that can be produced more efficiently at a larger scale.

Lens benefits aside, this process improvement also allows for a more efficient light engine: because less light is wasted, the engine can do the same job with less output. A more efficient light engine means lower energy consumption and a smaller form factor for the complete device, both good selling points for a head-worn display. Many of those benefits also apply to Micro OLED displays, a different approach to AR hardware.

“I am excited about DigiLens’ recent SRG+ developments, which provide a new, low-cost replication technology satisfying such drastic nanostructure requirements,” Dr. Bernard Kress, President of SPIE, the international society for optics and photonics, said in a release. “The AR waveguides field is the tip of the iceberg.”

A New Partner in Mojo Vision

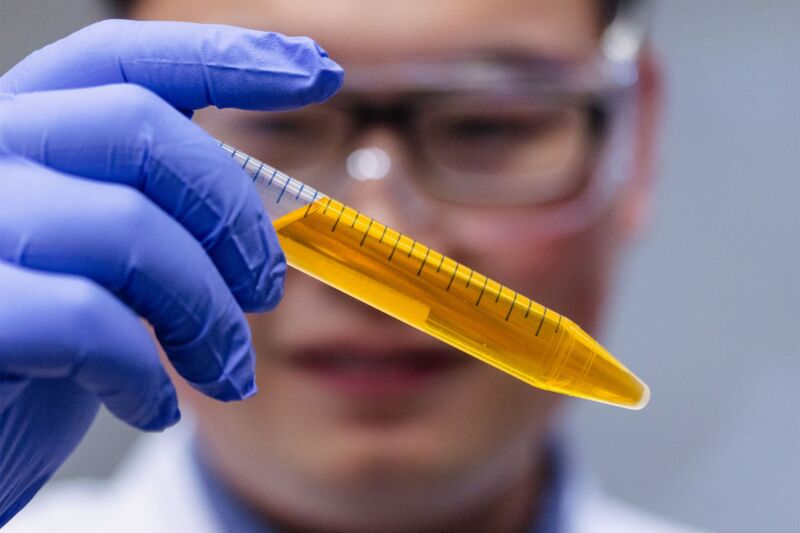

The first major partner to take advantage of this new process is Mojo Vision, a Micro-LED manufacturer that became famous in the industry for pursuing AR contact lenses. While that product has yet to materialize, the effort has resulted in Mojo Vision holding records for fitting large displays onto very small hardware. Thanks to SRG+, those displays could get even larger and lighter.

“Bringing our technologies together will raise the bar on display performance, and efficiency in the AR/XR industry,” Mojo Vision CEO Nikhil Balram said in a release shared with ARPost. “Partnering with DigiLens brings AR glasses closer to mass-scale consumer electronics.”

This partnership may also help solve another of AR’s persistent challenges: the sunny-day problem. AR glasses to date are almost always tinted. That’s because, to see AR elements in bright ambient light, either the display needs to be exceptionally bright or the lenses need to be artificially darkened. Rather than crank up the brightness, manufacturers opt for tinted lenses.

“The total form factor of the AR glasses can finally be small and light enough for consumers to wear for long periods of time and bright enough to allow them to see the superimposed digital information — even on a sunny day — without needing to darken the lenses,” DigiLens CEO Chris Pickett said in the release.

ARGO Is DigiLens’ Golden Fleece

After years of working backstage for device manufacturers, DigiLens announced ARGO at the beginning of this year, calling it “the first purpose-built stand-alone AR/XR device designed for enterprise and industrial-lite workers.” The glasses use the company’s in-house waveguides and a custom-built Android-based operating system running on Qualcomm’s Snapdragon XR2 chip.

“This is a big milestone for DigiLens at a very high level. We have always been a component manufacturer,” DigiLens VP and GM of Product, Nima Shams, told ARPost at the time. “At the same time, we want to push the market and meet the market and it seems like the market is kind of open and waiting.”

More Opportunities With Qualcomm

Close followers of Qualcomm’s XR operations may recall that the company often saves major news about its XR developer platform, Snapdragon Spaces, for AWE. The platform launched at AWE in 2021 and became available to the public at AWE last year. This year, among other announcements, Qualcomm announced Spaces compatibility with ARGO.

“We are excited to support the democratization of the XR industry by offering Snapdragon Spaces through DigiLens’ leading all-in-one AR headset,” Qualcomm Senior Director of Product Management XR, Said Bakadir, said in a release shared with ARPost.

“DigiLens’ high-transparency and sunlight-readable optics combined with the universe of leading XR application developers from Snapdragon Spaces are critical in supporting the needs of the expanding enterprise and industrial markets,” said Bakadir.

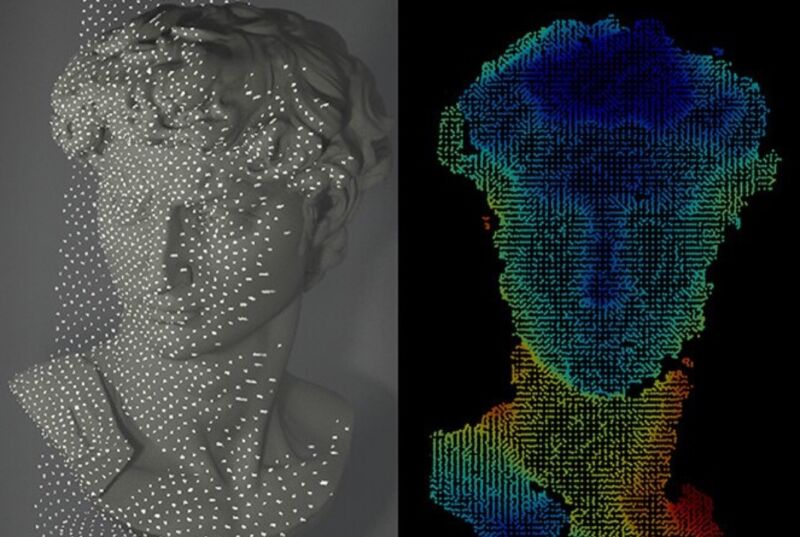

Snapdragon Spaces bundles developer tools including hand and position tracking, scene understanding and persistent anchors, spatial mapping, and plane detection. So, while we’re likely to see more partnerships with more existing applications, this strengthened relationship with Qualcomm could mean more native apps on ARGO.

Getting Rugged With Taqtile

“Industrial-lite” might be getting a bit heavier as DigiLens partners with Taqtile on a “rugged AR-enabled solution for industrial and defense customers” – presumably a more durable version of the original ARGO running Manifest, Taqtile’s flagship enterprise AR solution. Taqtile recently released a free version of Manifest to make its capabilities more available to potential clients.

“ARGO represents just the type of head-mounted, hands-free device that Manifest customers have been looking for,” Taqtile CTO John Tomizuka said in a release. “We continue to evaluate hardware solutions that will meet the unique needs of our deskless workers, and the combination of Manifest and ARGO has the ability to deliver performance and functionality.”

Getting Smart With Wisear

Wisear is a neural interface company that uses “smart earphones” to allow users to control connected devices with their thoughts rather than with touch, gesture, or even voice controls.

For the average consumer, that might just be really cool. For consumers with neurological disorders, that might be a new way to connect to the world. For enterprise, it solves another problem.

Head-worn devices mean frontline workers aren’t holding the device, but if they need their hands to interact with it, they still have to take their hands off the job. Voice controls get around this, but some environments and circumstances make voice controls inconvenient or difficult to use. Neural inputs sidestep both problems, and Wisear is bringing them to ARGO.

“DigiLens and Wisear share a common vision of using cutting-edge technology to revolutionize the way frontline workers work,” Pickett said in a release shared with ARPost. “Our ARGO smart glasses, coupled with Wisear’s neural interface-powered earphones, will provide frontline workers with the tools they need to work seamlessly and safely.”

More Tracking Options With Ultraleap

Ultraleap is another component manufacturer. It makes input accessories like tracking cameras, controllers, and haptics. A brief shared with ARPost only mentions “a groundbreaking partnership” between the companies “offering a truly immersive and user-friendly experience across diverse applications, from gaming and education to industrial training and healthcare.”

That sounds like a hint at wider availability for ARGO, but don’t get your hopes up yet. This is the announcement about which we know the least. Most of this article has come together from releases shared with ARPost in advance of AWE, which is happening now. So, watch our AWE coverage as it comes out for more concrete information.

So Much More to Come

Announcements from component manufacturers can be tantalizing. We know that they have huge ramifications for the whole industry, but we also know that those ramifications aren’t immediate. We’re closely watching DigiLens and its partners to see when some of these announcements might bear tangible fruit, but keep in mind that the company also has its own device out now.