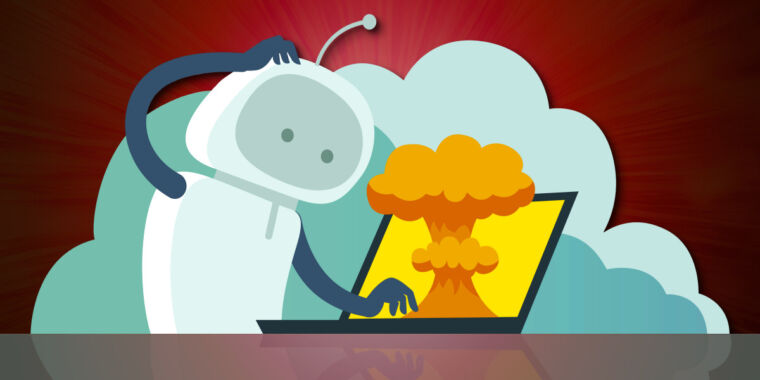

Warner Bros. sues Midjourney to stop AI knockoffs of Batman, Scooby-Doo

AI would’ve gotten away with it too…

Warner Bros. case builds on arguments raised in a Disney/Universal lawsuit.

DVD art for the animated movie Scooby-Doo & Batman: The Brave and the Bold. Credit: Warner Bros. Discovery

Warner Bros. hit Midjourney with a lawsuit Thursday, crafting a complaint that strives to shoot down defenses that the AI company has already raised in a similar lawsuit filed by Disney and Universal Studios earlier this year.

The big film studios have alleged that Midjourney profits off image generation models trained to produce outputs of popular characters. For Disney and Universal, intellectual property rights to pop icons like Darth Vader and the Simpsons were allegedly infringed. And now, the WB complaint defends rights over comic characters like Superman, Wonder Woman, and Batman, as well as characters considered “pillars of pop culture with a lasting impact on generations,” like Scooby-Doo and Bugs Bunny, and modern cartoon characters like Rick and Morty.

“Midjourney brazenly dispenses Warner Bros. Discovery’s intellectual property as if it were its own,” the WB complaint said, accusing Midjourney of allowing subscribers to “pick iconic” copyrighted characters and generate them in “every imaginable scene.”

Planning to seize Midjourney’s profits from allegedly using beloved characters to promote its service, Warner Bros. described Midjourney as “defiant and undeterred” by the Disney/Universal lawsuit. Despite that litigation, WB claimed that Midjourney has recently removed copyright protections in its supposedly shameful ongoing bid for profits. Nothing but a permanent injunction will end Midjourney’s outputs of allegedly “countless infringing images,” WB argued, branding Midjourney’s alleged infringements as “vast, intentional, and unrelenting.”

Examples of closely matching outputs include images generated from prompts for “screencaps” of specific movie frames, a search term that at least one artist, Reid Southen, had optimistically predicted Midjourney would block last year. Apparently, it did not.

Here are some examples included in WB’s complaint:

Midjourney’s output for the prompt, “Superman, classic cartoon character, DC comics.”

Midjourney could face devastating financial consequences in a loss. WB is hoping discovery will show the true extent of Midjourney’s alleged infringement, asking the court for maximum statutory damages, at $150,000 per infringing output. Just 2,000 infringing outputs unearthed could cost Midjourney about as much as its total revenue for 2024, which was approximately $300 million, the WB complaint said.
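To put those figures side by side, here is a minimal sketch of the arithmetic. It assumes the maximum of $150,000 is awarded for every infringing output, which is the most WB could get under its request and not a predicted outcome. The constant and function names are illustrative and do not come from the complaint.

```python
# Figures as reported in the article; names are illustrative only.
MAX_DAMAGES_PER_OUTPUT = 150_000      # USD, maximum statutory damages WB is seeking
REPORTED_2024_REVENUE = 300_000_000   # USD, approximate, per the WB complaint


def potential_damages(infringing_outputs: int) -> int:
    """Total exposure if every output drew the maximum award (an assumption)."""
    return infringing_outputs * MAX_DAMAGES_PER_OUTPUT


def outputs_to_reach(amount: int) -> int:
    """Smallest number of outputs whose maximum damages meet or exceed `amount`."""
    # Ceiling division using integers only.
    return -(-amount // MAX_DAMAGES_PER_OUTPUT)


if __name__ == "__main__":
    print(potential_damages(2_000))                 # 300000000
    print(outputs_to_reach(REPORTED_2024_REVENUE))  # 2000
```

At exactly 2,000 outputs, the total lands at about $300 million, on par with the reported revenue; every additional output found in discovery would push the exposure past it.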

Warner Bros. hopes to hobble Midjourney’s best defense

For Midjourney, the WB complaint could potentially hit harder than the Disney/Universal lawsuit. WB’s complaint shows how closely studios are monitoring AI copyright litigation, likely choosing ideal moments to strike when studios feel they can better defend their property. So, while much of WB’s complaint echoes Disney and Universal’s arguments—which Midjourney has already begun defending against—IP attorney Randy McCarthy suggested in statements provided to Ars that WB also looked for seemingly smart ways to potentially overcome some of Midjourney’s best defenses when filing its complaint.

WB likely took note when Midjourney filed its response to the Disney/Universal lawsuit last month, arguing that its system is “trained on billions of publicly available images” and generates images not by retrieving a copy of an image in its database but based on “complex statistical relationships between visual features and words in the text-image pairs are encoded within the model.”

This defense could allow Midjourney to avoid claims that it copied WB images and distributes copies through its models. But hoping to dodge this defense, WB didn’t argue that Midjourney retains copies of its images. Rather, the entertainment giant raised a more nuanced argument that:

“Midjourney used software, servers, and other technology to store and fix data associated with Warner Bros. Discovery’s Copyrighted Works in such a manner that those works are thereby embodied in the model, from which Midjourney is then able to generate, reproduce, publicly display, and distribute unlimited ‘copies’ and ‘derivative works’ of Warner Bros. Discovery’s works as defined by the Copyright Act.”

McCarthy noted that WB’s argument pushes the court to at least consider that even though “Midjourney does not store copies of the works in its model,” its system “nonetheless accesses the data relating to the works that are stored by Midjourney’s system.”

“This seems to be a very clever way to counter MJ’s ‘statistical pattern analysis’ arguments,” McCarthy said.

If it’s a winning argument, that could give WB a path to wipe Midjourney’s models. WB argued that each time Midjourney provides a “substantially new” version of its image generator, it “repeats this process.” And that ongoing activity—due to Midjourney’s initial allegedly “massive copying” of WB works—allows Midjourney to “further reproduce, publicly display, publicly perform, and distribute image and video outputs that are identical or virtually identical to Warner Bros. Discovery’s Copyrighted Works in response to simple prompts from subscribers.”

Perhaps further strengthening WB’s argument, the lawsuit noted that Midjourney promotes allegedly infringing outputs on its 24/7 YouTube channel and appears to have plans to compete with traditional TV and streaming services. Asking the court to block Midjourney’s outputs, WB claims it has already been “substantially and irreparably harmed” and risks further harm if the AI image generator is left unchecked.

As alleged proof that the AI company knows its tool is being used to infringe WB property, WB pointed to Midjourney’s own Discord server and subreddit, where users post outputs depicting WB characters and share tips to help others do the same. WB also called out Midjourney’s “Explore” page, which allows users to drop a WB-referencing output into the prompt field to generate similar images.

“It is hard to imagine copyright infringement that is any more willful than what Midjourney is doing here,” the WB complaint said.

WB and Midjourney did not immediately respond to Ars’ request to comment.

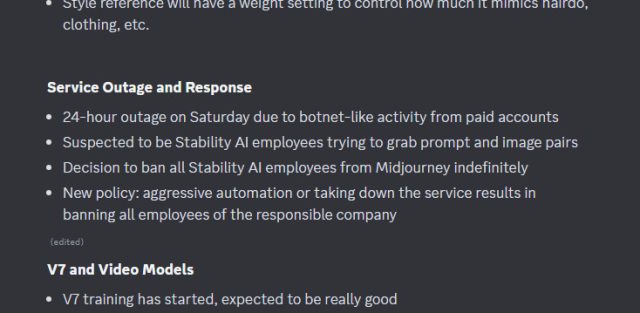

Midjourney slammed for promising “fewer blocked jobs”

McCarthy noted that WB’s legal strategy differs in other ways from the arguments Midjourney has already weighed in the Disney/Universal lawsuit.

The WB complaint also anticipates Midjourney’s likely defense that users are generating infringing outputs, not Midjourney, which could invalidate any charges of direct copyright infringement.

In the Disney/Universal lawsuit, Midjourney argued that courts have recently found that AI tools referencing copyrighted works is “a quintessentially transformative fair use,” accusing studios of trying to censor “an instrument for user expression.” Midjourney claimed that it cannot know about infringing outputs unless studios use the company’s DMCA process, while noting that subscribers have “any number of legitimate, noninfringing grounds to create images incorporating characters from popular culture,” including “non-commercial fan art, experimentation and ideation, and social commentary and criticism.”

To avoid losing on that front, the WB complaint doesn’t depend on a ruling that Midjourney directly infringed copyrights. Instead, the complaint “more fully” emphasizes how Midjourney may be “secondarily liable for infringement via contributory, inducement and/or vicarious liability by inducing its users to directly infringe,” McCarthy suggested.

Additionally, WB’s complaint “seems to be emphasizing” that Midjourney “allegedly has the technical means to prevent its system from accepting prompts that directly reference copyrighted characters,” and “that would prevent infringing outputs from being displayed,” McCarthy said.

The complaint noted that Midjourney is in full control of what outputs can be generated. Midjourney “temporarily refused to ‘animate’” outputs of WB characters after launching video generations, the complaint said, and the lawsuit appears to have been filed in response to Midjourney “deliberately” removing those protections and then announcing that subscribers would experience “fewer blocked jobs.”

Together, these arguments “appear to be intended to lead to the inference that Midjourney is willfully enticing its users to infringe,” McCarthy said.

WB’s complaint details simple user prompts that generate allegedly infringing outputs without any need to manipulate the system. The ease of generating popular characters seems to make Midjourney a destination for users frustrated by other AI image generators that make it harder to generate infringing outputs, WB alleged.

On top of that, Midjourney also infringes copyrights by generating WB characters, “even in response to generic prompts like ‘classic comic book superhero battle.’” And while Midjourney has seemingly taken steps to block WB characters from appearing on its “Explore” page, where users can find inspiration for prompts, these guardrails aren’t perfect, but rather “spotty and suspicious,” WB alleged. Supposedly, searches for correctly spelled character names like “Batman” are blocked, but any user who accidentally or intentionally misspells a character’s name, like “Batma,” can learn an easy way to work around that block.

Additionally, WB alleged, “the outputs often contain extensive nuance and detail, background elements, costumes, and accessories beyond what was specified in the prompt.” And every time that Midjourney outputs an allegedly infringing image, it “also trains on the outputs it has generated,” the lawsuit noted, creating a never-ending cycle of continually enhanced AI fakes of pop icons.

Midjourney could slow down the cycle and “minimize” these allegedly infringing outputs, if it cannot automatically block them all, WB suggested. But instead, “Midjourney has made a calculated and profit-driven decision to offer zero protection for copyright owners even though Midjourney knows about the breathtaking scope of its piracy and copyright infringement,” WB alleged.

Fearing a supposed scheme to replace WB in the market by stealing its best-known characters, WB accused Midjourney of willfully allowing WB characters to be generated in order to “generate more money for Midjourney” to potentially compete in streaming markets.

Midjourney will remove protections “on a whim”

As Midjourney’s efforts to expand its features escalate, WB claimed that trust is lost. Even if Midjourney takes steps to address rightsholders’ concerns, WB argued, studios must remain watchful of every upgrade, since apparently, “Midjourney can and will remove copyright protection measures on a whim.”

The complaint noted that Midjourney just this week announced “plans to continue deploying new versions” of its image generator, promising to make it easier to search for and save popular artists’ styles—updating a feature that many artists loathe.

Without an injunction, Midjourney’s alleged infringement could interfere with WB’s licensing opportunities for its content, while “illegally and unfairly” diverting customers who buy WB products like posters, wall art, prints, and coloring books, the complaint said.

Perhaps Midjourney’s strongest defense could be efforts to prove that WB benefits from its image generator. In the Disney/Universal lawsuit, Midjourney pointed out that studios “benefit from generative AI models,” claiming that “many dozens of Midjourney subscribers are associated with” Disney and Universal corporate email addresses. If WB corporate email addresses are found among subscribers, Midjourney could claim that WB is trying to “have it both ways” by “seeking to profit” from AI tools while preventing Midjourney and its subscribers from doing the same.

McCarthy suggested it’s too soon to say how the WB battle will play out, but Midjourney’s response will reveal how it intends to shift tactics to avoid courts potentially picking apart its defense of its training data, while keeping any blame for copyright-infringing outputs squarely on users.

“As with the Disney/Universal lawsuit, we need to wait to see how Midjourney answers these latest allegations,” McCarthy said. “It is definitely an interesting development that will have widespread implications for many sectors of our society.”
