Google CEO: If an AI bubble pops, no one is getting out clean

Market concerns and Google’s position

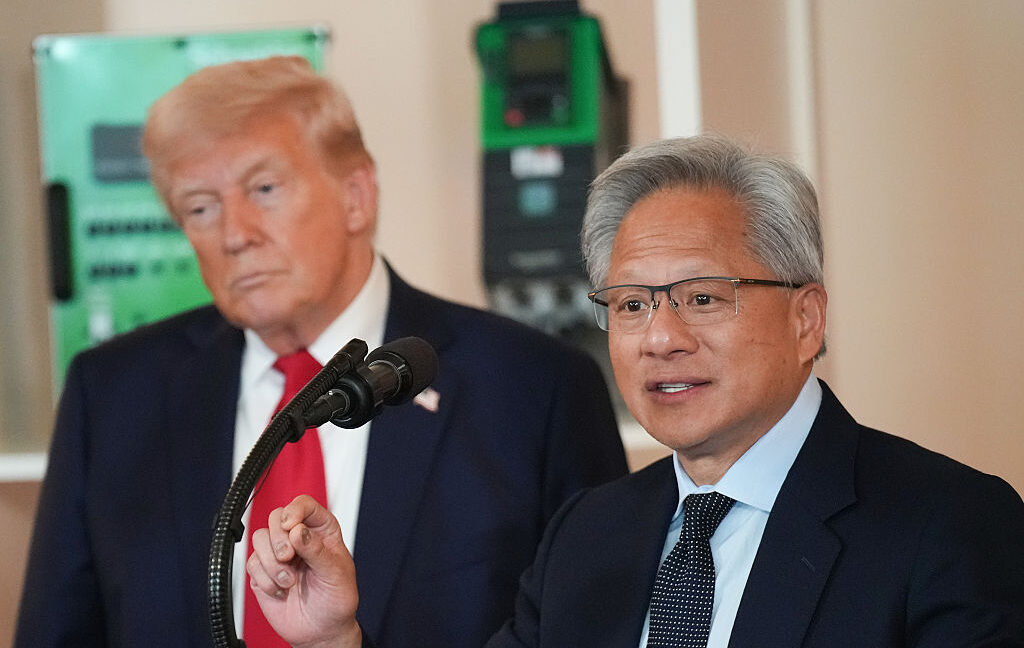

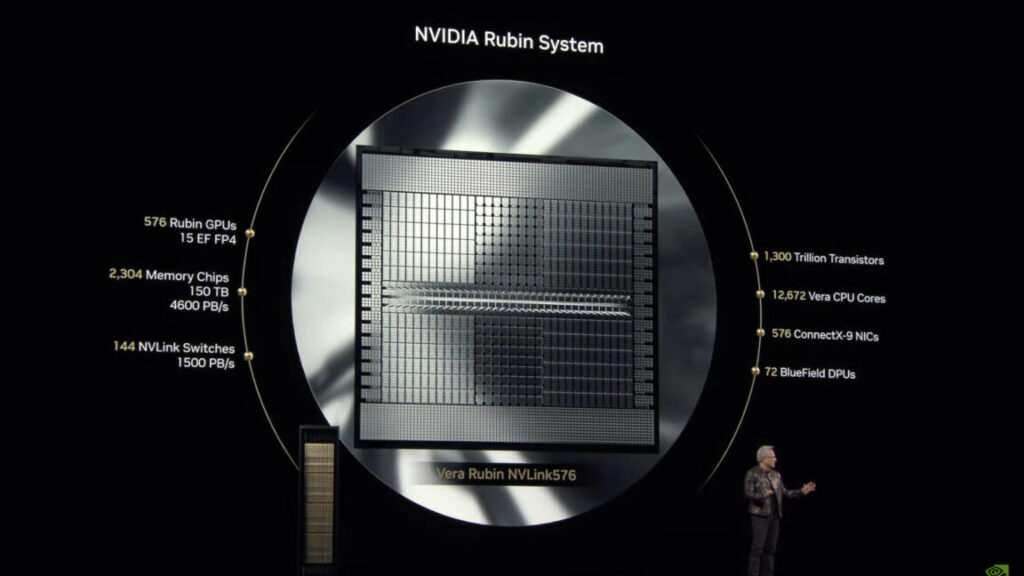

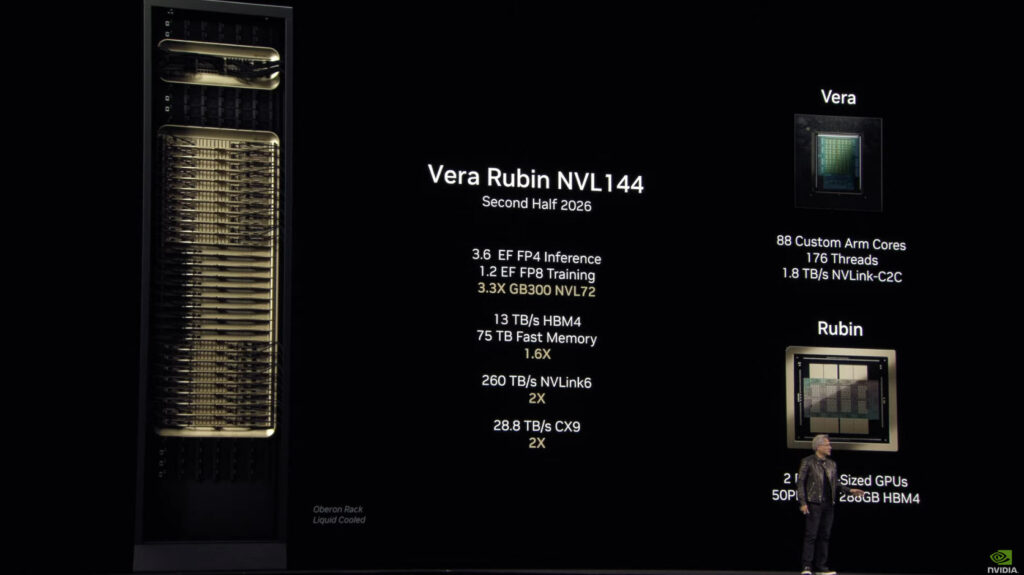

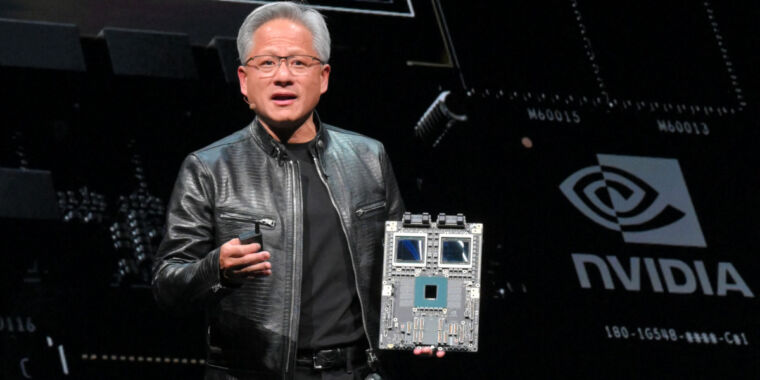

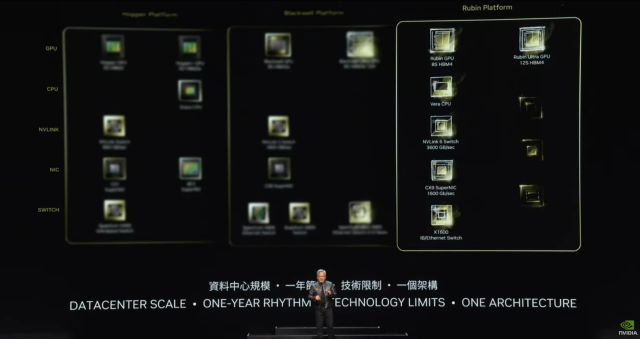

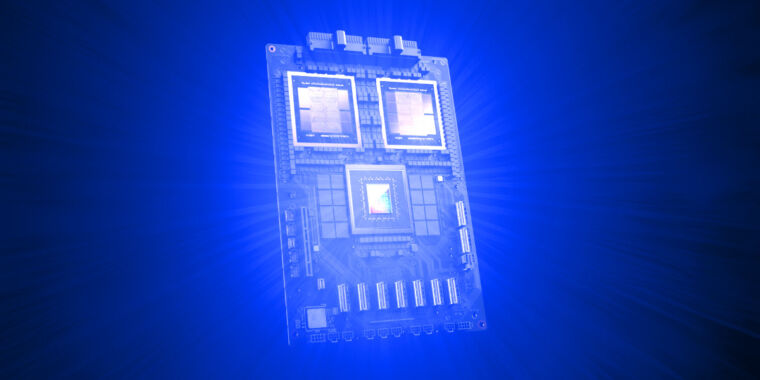

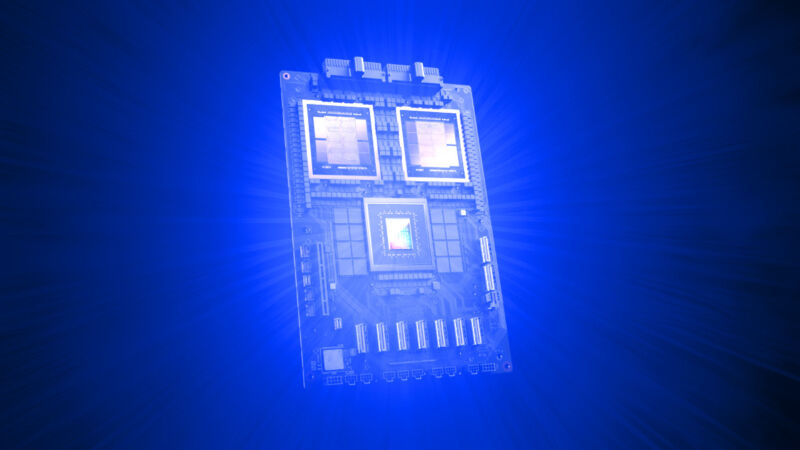

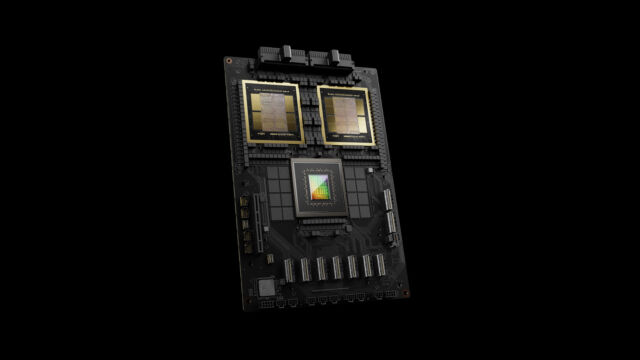

Alphabet’s recent market performance has been driven by investor confidence in the company’s ability to compete with OpenAI’s ChatGPT, as well as its development of specialized AI chips that can compete with Nvidia’s. Nvidia recently became the first company in the world to reach a $5 trillion valuation on the strength of its GPUs, which accelerate the matrix math at the heart of AI computations.

Despite acknowledging that no company would be immune to a potential AI bubble burst, Pichai argued that Google’s unique position gives it an advantage. He told the BBC that the company owns what he called a “full stack” of technologies, from chips to YouTube data to models and frontier science research. This integrated approach, he suggested, would help the company weather any market turbulence better than competitors.

Pichai also told the BBC that people should not “blindly trust” everything AI tools output. The company has faced repeated concerns about the accuracy of some of its AI models. Pichai said that while AI tools are helpful “if you want to creatively write something,” people “have to learn to use these tools for what they’re good at and not blindly trust everything they say.”

In the BBC interview, the Google boss also addressed the “immense” energy needs of AI, acknowledging that the intensive energy requirements of expanding AI ventures have caused slippage on Alphabet’s climate targets. However, Pichai insisted that the company still wants to achieve net zero by 2030 through investments in new energy technologies. “The rate at which we were hoping to make progress will be impacted,” Pichai said, warning that constraining an economy based on energy “will have consequences.”

Even with the warnings about a potential AI bubble, Pichai did not miss his chance to promote the technology, albeit with a hint of danger regarding its widespread impact. Pichai described AI as “the most profound technology” humankind has worked on.

“We will have to work through societal disruptions,” he said, adding that the technology would “create new opportunities” and “evolve and transition certain jobs.” He said people who adapt to AI tools “will do better” in their professions, whatever field they work in.
