Lawyer sets new standard for abuse of AI; judge tosses case

“Extremely difficult to believe”

Behold the most overwrought AI legal filings you will ever gaze upon.

Frustrated by fake citations and flowery prose packed with “out-of-left-field” references to ancient libraries and Ray Bradbury’s Fahrenheit 451, a New York federal judge took the rare step of terminating a case this week due to a lawyer’s repeated misuse of AI when drafting filings.

In an order on Thursday, district judge Katherine Polk Failla ruled that the extraordinary sanctions were warranted after an attorney, Steven Feldman, kept responding to requests to correct his filings with documents containing fake citations.

One of those filings was “noteworthy,” Failla said, “for its conspicuously florid prose.” Where some of Feldman’s filings contained grammatical errors and run-on sentences, this filing seemed glaringly different stylistically.

It featured, the judge noted, “an extended quote from Ray Bradbury’s Fahrenheit 451 and metaphors comparing legal advocacy to gardening and the leaving of indelible ‘mark[s] upon the clay.’” The Bradbury quote is below:

“Everyone must leave something behind when he dies, my grandfather said. A child or a book or a painting or a house or a wall built or a pair of shoes made. Or a garden planted. Something your hand touched some way so your soul has somewhere to go when you die, and when people look at that tree or that flower you planted, you’re there. It doesn’t matter what you do, he said, so long as you change something from the way it was before you touched it into something that’s like you after you take your hands away. The difference between the man who just cuts lawns and a real gardener is in the touching, he said. The lawn-cutter might just as well not have been there at all; the gardener will be there a lifetime.”

Another passage Failla highlighted as “raising the Court’s eyebrows” curiously invoked a Bible passage about divine judgment as a means of acknowledging the lawyer’s breach of duty in not catching the fake citations:

“Your Honor, in the ancient libraries of Ashurbanipal, scribes carried their stylus as both tool and sacred trust—understanding that every mark upon clay would endure long beyond their mortal span. As the role the mark (x) in Ezekiel Chapter 9, that marked the foreheads with a tav (x) of blood and ink, bear the same solemn recognition: that the written word carries power to preserve or condemn, to build or destroy, and leaves an indelible mark which cannot be erased but should be withdrawn, let it lead other to think these citations were correct.

I have failed in that sacred trust. The errors in my memorandum, however inadvertent, have diminished the integrity of the record and the dignity of these proceedings. Like the scribes of antiquity who bore their stylus as both privilege and burden, I understand that legal authorship demands more than mere competence—it requires absolute fidelity to truth and precision in every mark upon the page.”

Lawyer claims AI did not write filings

Although the judge believed the “florid prose” signaled that a chatbot wrote the draft, Feldman denied that. In a hearing transcript in which the judge weighed possible sanctions, Feldman testified that he wrote every word of the filings. He explained that he read the Bradbury book “many years ago” and wanted to include “personal things” in that filing. And as for his references to Ashurbanipal, that also “came from me,” he said.

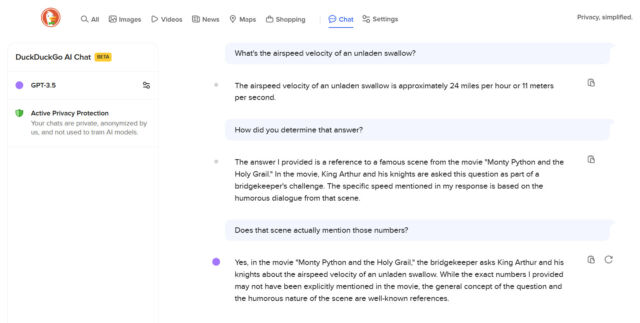

Instead of admitting he had let an AI draft his filings, he maintained that his biggest mistake was relying on various AI programs to review and cross-check citations. Among the tools he admitted using were Paxton AI, vLex’s Vincent AI, and Google’s NotebookLM. Essentially, he testified that he substituted three rounds of AI review for one stretch of reading through all the cases he was citing. That misstep allowed hallucinations and fake citations to creep into the filings, he said.

But the judge pushed back, writing in her order that it was “extremely difficult to believe” that AI did not draft those sections containing overwrought prose. She accused Feldman of dodging the truth.

“The Court sees things differently: AI generated this citation from the start, and Mr. Feldman’s decision to remove most citations and write ‘more of a personal letter’” is “nothing but an ex post justification that seeks to obscure his misuse of AI and his steadfast refusal to review his submissions for accuracy,” Failla wrote.

At the hearing, she expressed frustration and annoyance at Feldman for evading her questions and providing inconsistent responses. Eventually, he testified that he had used AI to correct information when drafting one of the filings, though not the one quoting Bradbury, a practice Failla immediately deemed “unwise.”

AI is not a substitute for going to the library

Feldman is one of hundreds of lawyers whose reliance on AI to draft filings has introduced fake citations into cases. Lawyers have offered a wide range of excuses for relying too much on AI. Some have faced small fines, around $150, while others have been slapped with thousands in fines, including one case where sanctions reached $85,000 for repeated, abusive misconduct. At least one law firm has threatened to fire lawyers citing fake cases, and some lawyers have imposed voluntary sanctions on themselves, like taking a yearlong leave of absence.

Seemingly, Feldman did not think sanctions were warranted in this case. In his defense of three filings containing 14 errors out of 60 total citations, Feldman discussed his challenges accessing legal databases due to high subscription costs and short library hours. With more than one case on his plate and his kids’ graduations to attend, he struggled to verify citations during times when he couldn’t make it to the library, he testified. As a workaround, he relied on several AI programs to verify citations that he found by searching on tools like Google Scholar.

Feldman likely did not expect the judge to terminate the case as a result of his AI misuses. Asked how he thought the court should resolve things, Feldman suggested that he could correct the filings by relying on other attorneys to review citations, while avoiding “any use whatsoever of any, you know, artificial intelligence or LLM type of methods.” The judge, however, wrote that his repeated misuses were “proof” that he “learned nothing” and had not implemented voluntary safeguards to catch the errors.

Asked for comment, Feldman told Ars that he did not have time to discuss the sanctions but that he hopes his experience helps raise awareness of how inaccessible court documents are to the public. “Use of AI, and the ability to improve it, exposes a deeper proxy fight over whether law and serious scholarship remain publicly auditable, or drift into closed, intermediary‑controlled systems that undermine verification and due process,” Feldman suggested.

“The real lesson is about transparency and system design, not simply tool failure,” Feldman said.

But at the hearing, Failla said that she thinks Feldman had “access to the walled garden” of legal databases, if only he “would go to the law library” to do his research, rather than rely on AI tools.

“It sounds like you want me to say that you should be absolved of all of these terrible citation errors, these missed citations, because you don’t have Westlaw,” the judge said. “But now I know you have access to Westlaw. So what do you want?”

As Failla explained in her order, she thinks the key takeaway is that Feldman routinely failed to catch his own errors. She said that she has no problem with lawyers using AI to assist their research, but Feldman admitted to not reading the cases that he cited and “apparently” cannot “learn from his mistakes.”

Verifying case citations should never be a job left to AI, Failla said, describing Feldman’s research methods as “redolent of Rube Goldberg.”

“Most lawyers simply call this ‘conducting legal research,’” Failla wrote. “All lawyers must know how to do it. Mr. Feldman is not excused from this professional obligation by dint of using emerging technology.”

His “explanations were thick on words but thin on substance,” the judge wrote. She concluded that he “repeatedly and brazenly” violated Rule 11, which requires attorneys to verify the cases that they cite, “despite multiple warnings.”

Noting that Feldman “failed to fully accept responsibility,” she ruled that case-terminating sanctions were necessary, entering default judgment for the plaintiffs. Feldman may also be on the hook to pay fees for wasting other attorneys’ time.

Case abruptly ending triggers extensive remedies

The hearing transcript has circulated on social media due to the judge’s no-nonsense approach to grilling Feldman, whom she clearly found evasive and lacking credibility.

“Look, if you don’t want to be straight with me, if you don’t want to answer questions with candor, that’s fine,” Failla said. “I’ll just make my own decisions about what I think you did in this case. I’m giving you an opportunity to try and explain something that I think cannot be explained.”

In her order this week, she noted that Feldman “struggled to make eye contact” and left the court without “clear answers.”

Feldman’s errors came in a case in which a toy company sued merchants who allegedly failed to stop selling stolen goods after receiving a cease-and-desist order. His client was among the merchants accused of illegally profiting from the alleged thefts. They faced federal claims of trademark infringement, unfair competition, and false advertising, as well as New York state claims, including fostering the sale of stolen goods.

The loss triggers remedies, including an injunction barring further sales of stolen goods and a requirement to refund every customer who bought them. Feldman’s client must also turn over any stolen goods in their remaining inventory and disgorge profits. Other damages may be owed, along with interest. Ars could not immediately reach an attorney for the plaintiffs to discuss the sanctions order or resulting remedies.

Failla emphasized in her order that Feldman appeared to not appreciate “the gravity of the situation,” repeatedly submitting filings with fake citations even after he had been warned that sanctions could be ordered.

That was a choice, Failla said, noting that Feldman’s mistakes were caught early by Joel MacMull, a lawyer representing another defendant in the case, who urged Feldman to promptly notify the court. MacMull suggested at the hearing that the whole debacle could have ended in June 2025.

Rather than take MacMull’s advice, however, Feldman delayed notifying the court, irking the judge. He testified during the heated sanctions hearing that the delay stemmed from a quiet effort he had undertaken to correct the filing. He supposedly planned to submit those corrections when he alerted the court to the errors.

But Failla noted that he never submitted corrections and found instead that Feldman had kept her “in the dark.”

“There’s no real reason why you should have kept this from me,” the judge said.

The court learned of the fake citations only after MacMull notified the judge by sharing emails of his attempts to get Feldman to act urgently. Those emails showed Feldman scolding MacMull for unprofessional conduct after MacMull refused to check Feldman’s citations for him, which Failla noted at the hearing was absolutely not MacMull’s responsibility.

Feldman told Failla that he also thought it was unprofessional for MacMull to share their correspondence, but Failla said the emails were “illuminative.”

At the hearing, MacMull asked if the court would allow him to seek payment of his fees, since he believes “there has been a multiplication of proceedings here that would have been entirely unnecessary if Mr. Feldman had done what I asked him to do that Sunday night in June.”
