Designing Mixed Reality Apps That Adapt to Different Spaces

Laser Dance is an upcoming mixed reality game that seeks to use Quest’s passthrough capability as more than just a background. In this Guest Article, developer Thomas Van Bouwel explains his approach to designing an MR game that adapts to different environments.

Guest Article by Thomas Van Bouwel

Thomas is a Belgian-Brazilian VR developer currently based in Brussels. Although his original background is in architecture, his work in VR spans from indie games like Cubism to enterprise software for architects and engineers like Resolve. His latest project, Laser Dance, is coming to Quest 3 late next year.

For the past year I’ve been working on a new game called Laser Dance. It is built from the ground up for Mixed Reality (MR), and my goal is to make a game that turns any room in your house into a laser obstacle course. Players walk back and forth between two buttons, and each button press spawns a new parametric laser pattern they have to navigate through. The game is still in full development, aiming for a release in 2024.

If you’d like to sign up for playtesting Laser Dance, you can do so here!

Laser Dance’s teaser trailer, which was first shown right after Meta Connect 2023

The main challenge with a game like this, and possibly any roomscale MR game, is to make levels that adapt well to any room regardless of its size and layout. Furthermore, since Laser Dance is a game that requires a lot of physical motion, the game should also try to accommodate differences in people’s level of mobility.

Overcoming these challenges requires good room-emulation tools that enable quick level design iteration. In this article, I want to go over how levels in Laser Dance work, and share some of the developer tools that I’m building to help me create and test the game’s adaptive laser patterns.

Laser Pattern Definition

To understand how Laser Dance’s room emulation tools work, we first need to cover how laser patterns work in the game.

A level in Laser Dance consists of a sequence of laser patterns – players walk (or crawl) back and forth between two buttons on opposite ends of the room, and each button press enables the next pattern. These laser patterns will try to adapt to the room size and layout.

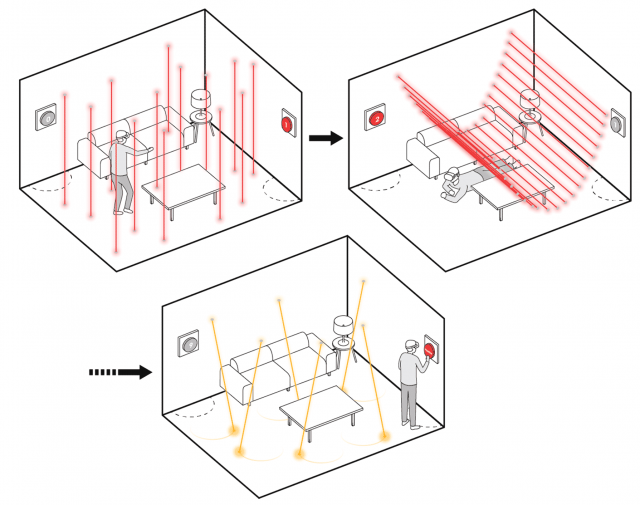

Since the laser patterns in Laser Dance’s levels need to adapt to different types of spaces, the specific positions of lasers aren’t pre-determined, but calculated parametrically based on the room.

Several methods are used to position the lasers. The most straightforward one is to apply a uniform pattern over the entire room. An example is shown below of a level that applies a uniform grid of swinging lasers across the room.

An example of a pattern-based level: a uniform pattern of movement is applied to a grid of lasers, covering the entire room.
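
To make the idea of a room-driven grid concrete, here is a minimal sketch of how grid anchor points could be derived from room dimensions. It is not Laser Dance's actual code (the game is built in Unity; Python is used here only for brevity), and it assumes a simple rectangular room. The names `Room`, `grid_positions`, `target_spacing`, and `margin` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Room:
    """Hypothetical rectangular room; real rooms may have any outline."""
    width: float  # metres along x
    depth: float  # metres along z


def grid_positions(room, target_spacing, margin=0.3):
    """Return (x, z) anchor points for a uniform grid that fills the room.

    The number of points per axis is derived from the room size, so a
    larger room gets more lasers rather than a stretched pattern.
    """
    def axis(length):
        usable = length - 2 * margin
        if usable <= 0:
            # Room too small for the margin: fall back to a single centre line.
            return [length / 2]
        count = max(1, round(usable / target_spacing)) + 1
        step = usable / (count - 1)
        return [margin + i * step for i in range(count)]

    return [(x, z) for x in axis(room.width) for z in axis(room.depth)]
```

Because the point count is computed from the room rather than hard-coded, the same level definition would produce a denser or sparser grid depending on where it is played.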

Other levels may use the orientation of the buttons relative to each other to determine the laser pattern. The example below shows a pattern that creates a sequence of blinking laser walls between the buttons.

Blinking walls of lasers are oriented perpendicular to the imaginary line between the two buttons.
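
The geometry behind this kind of pattern can be sketched in a few lines. The following is an illustrative example only, assuming the buttons are given as 2D points on the floor plane; the function name `laser_walls` and its parameters are hypothetical and not taken from the game.

```python
import math


def laser_walls(button_a, button_b, wall_count):
    """Place wall_count walls evenly between two buttons on the floor plane.

    Returns a list of (centre, direction) pairs. `direction` is a unit
    vector lying along the wall, perpendicular to the button-to-button line.
    """
    ax, az = button_a
    bx, bz = button_b
    dx, dz = bx - ax, bz - az
    length = math.hypot(dx, dz)
    if length == 0:
        raise ValueError("buttons must be at different positions")

    # Rotating the button-to-button direction by 90 degrees gives the wall direction.
    direction = (-dz / length, dx / length)

    walls = []
    for i in range(1, wall_count + 1):
        t = i / (wall_count + 1)  # evenly spaced, excluding the buttons themselves
        walls.append(((ax + t * dx, az + t * dz), direction))
    return walls
```

Since everything is expressed relative to the two button positions, the walls follow the buttons wherever a player happens to place them.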

One of the more versatile tools for level generation is a custom pathfinding algorithm, which was written for Laser Dance by Mark Schramm, guest developer on the project. This algorithm tries to find paths between the buttons that maximize the distance from furniture and walls, making a safer path for players.

The paths created by this algorithm allow for several laser patterns, like a tunnel of lasers, or placing a laser obstacle in the middle of the player’s path between the buttons.

This level uses pathfinding to spawn a tunnel of lasers that snakes around the furniture in this room.
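
The custom algorithm used in Laser Dance is not described in detail here, so the sketch below swaps in a well-known stand-in: Dijkstra's algorithm on a grid, where each step costs more the closer it is to an obstacle or to the edge of the room. It only illustrates the general idea of preferring paths with more clearance. The grid encoding, the function name `clearance_path`, and the `weight` parameter are all assumptions.

```python
import heapq
import math


def clearance_path(grid, start, goal, weight=4.0):
    """Find a path that prefers cells far from obstacles and room edges.

    grid: list of equal-length strings, '#' = wall or furniture, '.' = free.
    start, goal: (row, col) of free cells. Returns a list of cells, or None.
    """
    rows, cols = len(grid), len(grid[0])
    blocked = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "#"]

    def clearance(cell):
        # Distance to the nearest obstacle or room edge (brute force, fine for small grids).
        r, c = cell
        edge = min(r + 1, c + 1, rows - r, cols - c)
        nearest = min((math.hypot(r - br, c - bc) for br, bc in blocked), default=edge)
        return min(edge, nearest)

    def step_cost(cell):
        # Base cost of 1 per step, plus a penalty that grows near obstacles.
        return 1.0 + weight / clearance(cell)

    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in previous:
                cell = previous[cell]
                path.append(cell)
            return path[::-1]
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            new_cost = cost + step_cost((nr, nc))
            if new_cost < best.get((nr, nc), math.inf):
                best[(nr, nc)] = new_cost
                previous[(nr, nc)] = (r, c)
                heapq.heappush(queue, (new_cost, (nr, nc)))
    return None
```

Raising `weight` makes the path swing wider around furniture at the cost of a longer walk; a weight of zero reduces this to a plain shortest path.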

Room Emulation

The different techniques described above for creating adaptive laser patterns can sometimes lead to unexpected results or bugs in specific room layouts. Additionally, it can be challenging to design levels while trying to keep different types of rooms in mind.

To help with this, I spent much of early development for Laser Dance on building a set of room emulation tools to let me simulate and directly compare what a level will look like between different room layouts.

Rooms are stored in-game as a simple text file containing all wall and furniture positions and dimensions. The emulation tool can take these files, and spawn several rooms next to each other directly in the Unity editor.

You can then swap out different levels, or even just individual laser patterns, and emulate these side by side in various rooms to directly compare them.

A custom tool built in Unity spawns several rooms side by side in an orthographic view, showing how a certain level in Laser Dance would look in different room layouts.
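
The actual room file format is not specified in this article, so the following sketch invents one purely for illustration: one box per line, written as `kind x z width depth`. It shows how such files could be parsed and how rooms could be offset so they sit next to each other without overlapping. All names (`Box`, `RoomLayout`, `parse_room`, `side_by_side_offsets`) are hypothetical, and Python stands in for the Unity tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Box:
    kind: str     # "wall" or "furniture" (assumed labels)
    x: float      # centre position on the floor plane, metres
    z: float
    width: float  # footprint along x, metres
    depth: float  # footprint along z, metres


@dataclass
class RoomLayout:
    boxes: list = field(default_factory=list)

    def x_bounds(self):
        """Smallest and largest x covered by any box in the room."""
        lows = [b.x - b.width / 2 for b in self.boxes]
        highs = [b.x + b.width / 2 for b in self.boxes]
        return min(lows), max(highs)


def parse_room(text):
    """Parse the hypothetical format `kind x z width depth`, one box per line."""
    room = RoomLayout()
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        kind, *numbers = line.split()
        x, z, width, depth = map(float, numbers)
        room.boxes.append(Box(kind, x, z, width, depth))
    return room


def side_by_side_offsets(rooms, gap=1.0):
    """Return the x offset for each room so they form a row with `gap` between them."""
    offsets, cursor = [], 0.0
    for room in rooms:
        low, high = room.x_bounds()
        offsets.append(cursor - low)
        cursor += (high - low) + gap
    return offsets
```

With the offsets in hand, the same level definition can be spawned once per room, which is what makes a direct visual comparison possible.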

Accessibility and Player Emulation

Just as the rooms that people play in may differ, the players themselves will differ as well. Not everyone may be able to crawl on the floor to dodge lasers, or feel capable of squeezing through a narrow corridor of lasers.

Because of the physical nature of Laser Dance’s gameplay, there will always be a limit to its accessibility. However, to the extent possible, I would still like to try and have the levels adapt to players in the same way they adapt to rooms.

Currently, Laser Dance allows players to set their height, shoulder width, and the minimum height they’re able to crawl under. Levels will try to use these values to adjust certain parameters of how they’re spawned. An example is shown below, where a level would typically expect players to crawl underneath a field of lasers. When adjusting the minimum crawl height, this pattern adapts to that new value, making the level more forgiving.

Accessibility settings allow players to tailor some of Laser Dance’s levels to their body type and mobility restrictions. This example shows how a level that would have players crawl on the floor, can adjust itself for folks with more limited vertical mobility.
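
One way to picture this adjustment is a level parameter that is clamped by the player's settings. The sketch below is an assumption about how that could work, not the game's implementation. `PlayerProfile` mirrors the three settings mentioned above, while `laser_field_height`, `design_height`, and `clearance` are hypothetical names and values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlayerProfile:
    height: float            # metres
    shoulder_width: float    # metres
    min_crawl_height: float  # lowest gap the player can pass under, metres


def laser_field_height(profile, design_height=0.5, clearance=0.1):
    """Height at which to spawn a field of lasers the player passes under.

    The level's designed height is kept when the player can manage it;
    otherwise the field is raised to the player's minimum plus some clearance.
    """
    return max(design_height, profile.min_crawl_height + clearance)
```

A player who can crawl under 0.3 m would get the level as designed, while a player who sets a minimum of 1.0 m would see the field raised to roughly 1.1 m.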

These player values can also be emulated in the custom tools I’m building. Different player presets can be swapped out to directly compare how a level may look different between two players.

Laser Dance’s emulation tools allow you to swap out different preset player values to test their effect on the laser patterns. In this example, you can notice how swapping to a more accessible player value preset makes the tunnel of lasers wider.
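
A preset comparison like this can be reduced to evaluating the same level parameter once per preset. The following is a small illustrative sketch. The preset names and values, `tunnel_width`, `side_margin`, and `compare_presets` are made up for the example and do not come from the game.

```python
# Hypothetical presets; the values are illustrative only.
PRESETS = {
    "default": {"shoulder_width": 0.45, "min_crawl_height": 0.4},
    "accessible": {"shoulder_width": 0.70, "min_crawl_height": 1.2},
}


def tunnel_width(preset, side_margin=0.15):
    """Width of a laser tunnel: the player's shoulder width plus a margin on each side."""
    return preset["shoulder_width"] + 2 * side_margin


def compare_presets(parameter_fn, presets=PRESETS):
    """Evaluate one level parameter for every preset, keyed by preset name."""
    return {name: parameter_fn(values) for name, values in presets.items()}
```

Calling `compare_presets(tunnel_width)` returns one width per preset, which an editor tool could then render side by side.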

Data, Testing, and Privacy

A key problem with designing an adaptive game like Laser Dance is that unexpected room layouts and environments might break some of the levels.

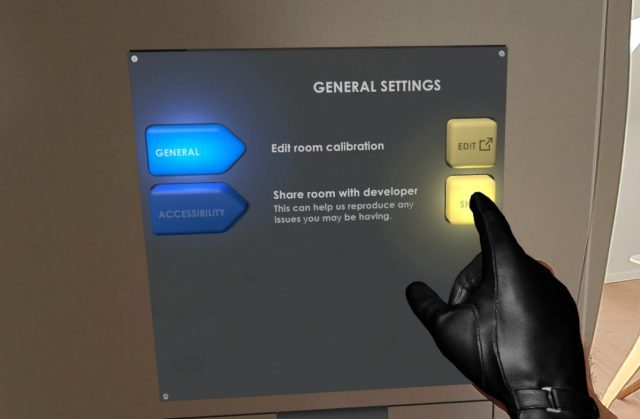

To try and prepare for this during development, there is a button in the settings players can choose to press to share their room data with me. Using these emulation tools, I can then try and reproduce their issue in an effort to resolve it.

Playtesters can press a button in the settings to share their room layout. This allows for local reproduction of potential issues they may have seen, using the emulation tools mentioned above.

This of course should raise some privacy concerns, as players are essentially sharing parts of their home layout with me. From a developer’s standpoint, it has a clear benefit to the design and quality control process, but as consumers of MR we should also be actively concerned about what personal data developers have access to and how it is used.

Personally, I think it’s important that sharing sensitive data like this requires active consent of the player each time it is shared – hence the button that needs to be actively pressed in the settings. Clear communication on why this data is needed and how it will be used is also important, which is a big part of my motivation for writing this article.

When it comes to MR platforms, an active discussion on data privacy is important too. We can’t always assume sensitive room data will be used in good faith by all developers, so as players we should expect clear communication and clear limitations from platforms regarding how apps can access and use this type of sensitive data, and stay vigilant on how and why certain apps may request access to this data.

Do You Need to Build Custom Tools?

Is building a handful of custom tools a requirement for developing adaptive Mixed Reality apps? Luckily, the answer is: probably not.

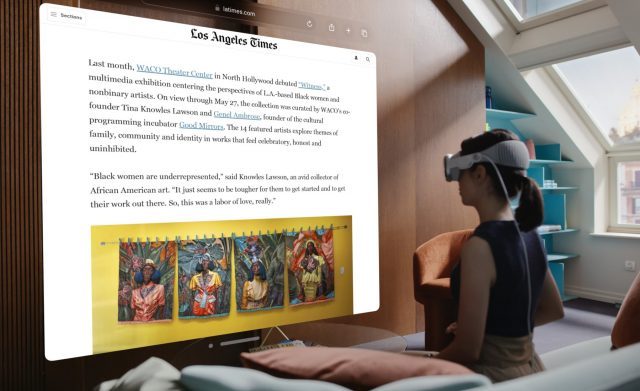

We’re already seeing Meta and Apple come out with mixed reality emulation tools of their own, letting developers test their apps in a simulated virtual environment, even without a headset. These tools are likely to only get better and more robust in time.

There is still merit to building custom tools in some cases, since they will give you the most flexibility to test against your specific requirements. Being able to emulate and compare between multiple rooms or player profiles at the same time in Laser Dance is a good example of this.

– – — – –

Development of Laser Dance is still in full swing. My hope is that I’ll end up with a fun game that can also serve as an introduction to mixed reality for newcomers to the medium. Though it took some time to build out these emulation tools, they will hopefully both enable and speed up the level design process to help achieve this goal.

If you would like to help with the development of the game, please consider signing up for playtesting!
