Court: Section 230 doesn’t shield TikTok from Blackout Challenge death suit

A dent in the Section 230 shield

TikTok must face claim over For You Page recommending content that killed kids.

An appeals court has revived a lawsuit against TikTok by reversing a lower court’s ruling that Section 230 immunity shielded the short video app from liability after a child died taking part in a dangerous “Blackout Challenge.”

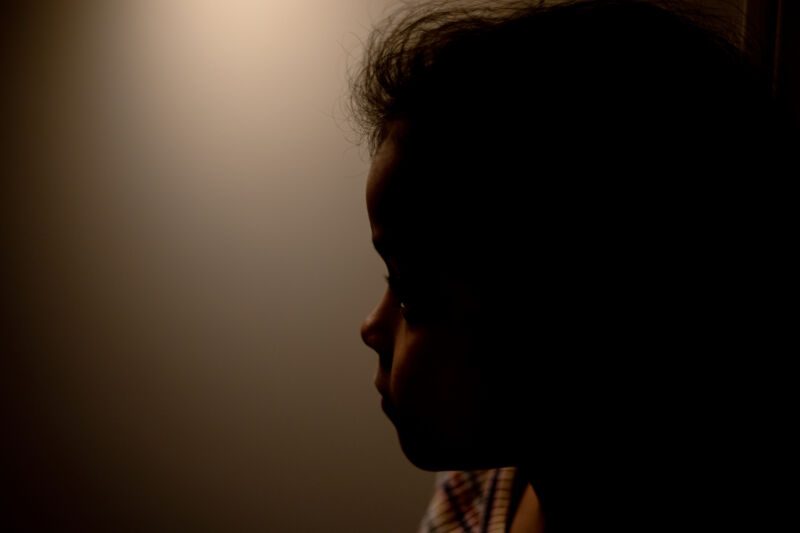

Several kids died taking part in the “Blackout Challenge,” which Third Circuit Judge Patty Shwartz described in her opinion as encouraging users “to choke themselves with belts, purse strings, or anything similar until passing out.”

Because TikTok promoted the challenge in children’s feeds, Tawainna Anderson was among the mourning parents who attempted to sue TikTok in 2022. Ultimately, a lower court told her that TikTok was not responsible for recommending the video that caused the death of her daughter Nylah.

In her opinion, Shwartz wrote that Section 230 does not bar Anderson from arguing that TikTok’s algorithm amalgamates third-party videos, “which results in ‘an expressive product’ that ‘communicates to users’ [that a] curated stream of videos will be interesting to them.”

The judge cited a recent Supreme Court ruling that “held that a platform’s algorithm that reflects ‘editorial judgments’ about ‘compiling the third-party speech it wants in the way it wants’ is the platform’s own ‘expressive product’ and is therefore protected by the First Amendment.”

Because TikTok’s For You Page (FYP) algorithm decides which third-party speech to include or exclude and organizes content, TikTok’s algorithm counts as TikTok’s own “expressive activity.” That “expressive activity” is not protected by Section 230, which only shields platforms from liability for third-party speech, not platforms’ own speech, Shwartz wrote.

The appeals court has now remanded the case to the district court to rule on Anderson’s remaining claims.

Section 230 doesn’t permit “indifference” to child death

According to Shwartz, if Nylah had discovered the “Blackout Challenge” video by searching on TikTok, the platform would not be liable, but because she found it on her FYP, TikTok transformed into “an affirmative promoter of such content.”

Now TikTok will have to face Anderson’s claims that are “premised upon TikTok’s algorithm,” Shwartz said, as well as potentially other claims that Anderson may reraise, some of which may still be barred by Section 230. The district court will have to determine which claims are barred “consistent” with the Third Circuit’s ruling.

Concurring in part, Circuit Judge Paul Matey noted that by the time Nylah took part in the “Blackout Challenge,” TikTok knew about the dangers and “took no and/or completely inadequate action to extinguish and prevent the spread of the Blackout Challenge and specifically to prevent the Blackout Challenge from being shown to children on their” FYPs.

Matey wrote that Section 230 does not shield corporations “from virtually any claim loosely related to content posted by a third party,” as TikTok seems to believe. He encouraged a “far narrower” interpretation of Section 230 to stop companies like TikTok from reading the Communications Decency Act as permitting “casual indifference to the death of a 10-year-old girl.”

“Anderson’s estate may seek relief for TikTok’s knowing distribution and targeted recommendation of videos it knew could be harmful,” Matey wrote. That includes pursuing “claims seeking to hold TikTok liable for continuing to host the Blackout Challenge videos knowing they were causing the death of children” and “claims seeking to hold TikTok liable for its targeted recommendations of videos it knew were harmful.”

“The company may decide to curate the content it serves up to children to emphasize the lowest virtues, the basest tastes,” Matey wrote. “But it cannot claim immunity that Congress did not provide.”

Anderson’s lawyers at Jeffrey Goodman, Saltz Mongeluzzi & Bendesky PC previously provided Ars with a statement after the lower court’s 2022 ruling, indicating that the parents weren’t prepared to stop fighting.

“The federal Communications Decency Act was never intended to allow social media companies to send dangerous content to children, and the Andersons will continue advocating for the protection of our children from an industry that exploits youth in the name of profits,” lawyers said.

TikTok did not immediately respond to Ars’ request for comment but previously vowed to “remain vigilant in our commitment to user safety” and “immediately remove” Blackout Challenge content “if found.”
