Caught cheating in class, college students “apologized” using AI—and profs called them out

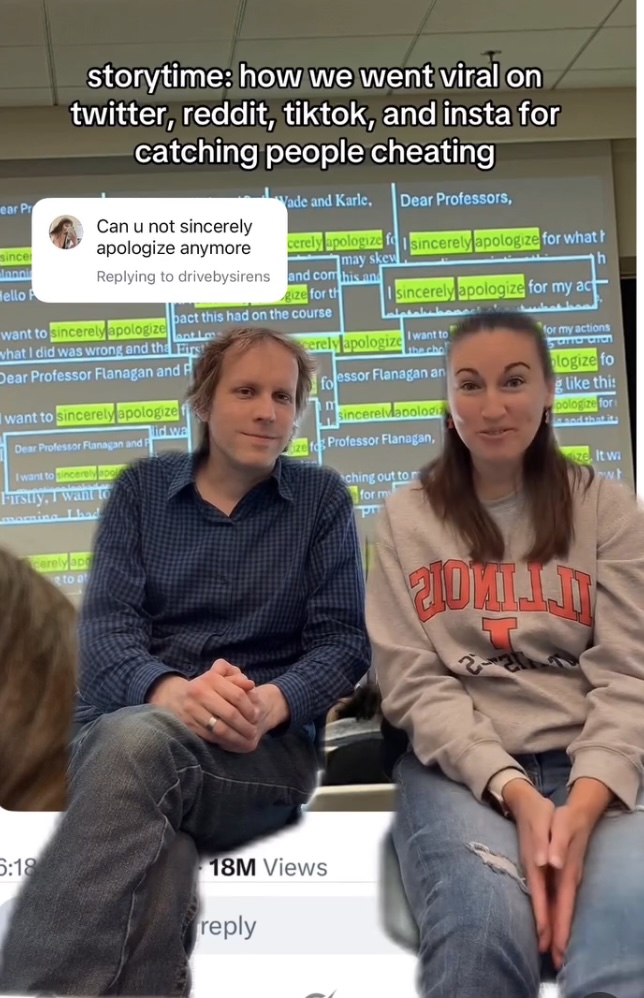

When the professors realized how widespread this was, they contacted the 100-ish students who seemed to be cheating. “We reached out to them with a warning, and asked them, ‘Please explain what you just did,’” said Fagen-Ulmschneider in an Instagram video discussing the situation.

Apologies came back from the students, first in a trickle, then in a flood. The professors were initially moved by this acceptance of responsibility and contrition… until they realized that 80 percent of the apologies were almost identically worded and appeared to be generated by AI.

So on October 17, during class, Flanagan and Fagen-Ulmschneider took their class to task, displaying a mash-up image of the apologies, each bearing the same “sincerely apologize” phrase. No disciplinary action was taken against the students, and the whole situation was treated rather lightly—but the warning was real. Stop doing this. Flanagan said that she hoped it would be a “life lesson” for the students.

Time for a life lesson! Credit: Instagram

On a University of Illinois subreddit, students shared their own experiences of the same class and of AI use on campus. One student claimed to be a teaching assistant for the Data Science Discovery course and said that, in addition to not attending class, many students used AI to solve the (relatively easy) problems. The AI tools would often “use functions that weren’t taught in class,” which gave the game away pretty easily.

Another TA claimed that “it’s insane how pervasive AI slop is in 75% of the turned-in work,” while another student complained about being a course assistant where “students would have a 75-word paragraph due every week and it was all AI generated.”

One doesn’t have to read far in these kinds of threads to find plenty of students who feel aggrieved because they were accused of AI use—but hadn’t done it. Given how poor most AI detection tools are, this is plenty plausible; and if AI detectors aren’t used, accusations often come down to a hunch.