New battery idea gets lots of power out of unusual sulfur chemistry

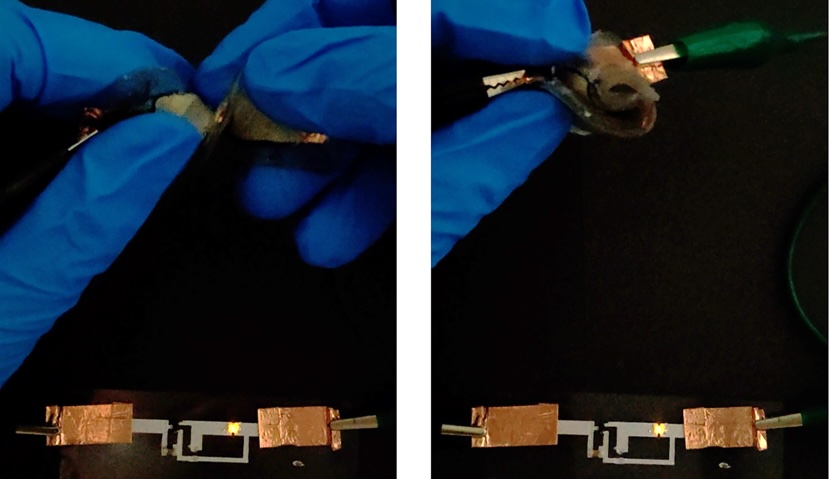

When the battery starts charging, the sulfur at the cathode starts losing electrons and forming sulfur tetrachloride (SCl4), using chloride it stole from the electrolyte. As the electrons flow into the anode, they combine with sodium ions, which plate onto the aluminum, forming a layer of sodium metal. Obviously, this wouldn't work with an aqueous electrolyte, given how powerfully sodium reacts with water.
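Sulfur being oxidized at the cathode while sodium plates onto the anode are the charging reactions; discharge runs them in reverse. Written as simplified half-reactions, they look roughly like the sketch below. This balances only the species named here (generic Na⁺ and Cl⁻ from the electrolyte) and ignores the actual electrolyte salt and any intermediates. Sulfur goes from an oxidation state of 0 in elemental sulfur to +4 in SCl4, so each sulfur atom gives up four electrons.

$$
\begin{aligned}
\text{cathode: } & \mathrm{S} + 4\,\mathrm{Cl}^{-} \rightarrow \mathrm{SCl_4} + 4\,e^{-} \\
\text{anode: } & 4\,\mathrm{Na}^{+} + 4\,e^{-} \rightarrow 4\,\mathrm{Na} \\
\text{overall: } & \mathrm{S} + 4\,\mathrm{Na}^{+} + 4\,\mathrm{Cl}^{-} \rightarrow \mathrm{SCl_4} + 4\,\mathrm{Na}
\end{aligned}
$$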

High capacity

To form a working battery, the researchers separated the two electrodes using a glass fiber material. They also added a porous carbon material to the cathode to keep the sulfur tetrachloride from diffusing into the electrolyte. They used various techniques to confirm that sodium was being deposited on the aluminum and that the reaction at the cathode was occurring via sulfur dichloride intermediates. They also determined that sodium chloride was a poor source of sodium ions, as it tended to precipitate out onto some of the solid materials in the battery.
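If the cathode reaction passes through sulfur dichloride, one plausible way to write it is as two two-electron steps. This is an assumption based on oxidation states (sulfur is +2 in SCl2 and +4 in SCl4), not a mechanism taken from the paper. Adding the two steps recovers the four-electron conversion of sulfur to SCl4.

$$
\begin{aligned}
\mathrm{S} + 2\,\mathrm{Cl}^{-} &\rightarrow \mathrm{SCl_2} + 2\,e^{-} \\
\mathrm{SCl_2} + 2\,\mathrm{Cl}^{-} &\rightarrow \mathrm{SCl_4} + 2\,e^{-} \\
\hline
\mathrm{S} + 4\,\mathrm{Cl}^{-} &\rightarrow \mathrm{SCl_4} + 4\,e^{-}
\end{aligned}
$$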

The battery was also fairly stable, surviving 1,400 cycles before its capacity decayed significantly. Higher charging rates caused capacity to decay more quickly, but the battery held its charge well, retaining over 95 percent of it even after sitting idle for 400 days.
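To put the idle figure in perspective, here is a rough sketch of the average daily loss it implies. It assumes the battery loses the same fraction of its remaining charge every day (an exponential model the article does not state); `daily_retention` and `retention_after` are hypothetical helper names used only for illustration.

```python
# Hedged sketch: assumes a constant fractional loss per idle day
# (exponential self-discharge), which is an assumption, not a reported model.

def daily_retention(final_fraction: float, days: float) -> float:
    """Per-day retention that compounds to `final_fraction` after `days`."""
    return final_fraction ** (1.0 / days)


def retention_after(per_day: float, days: float) -> float:
    """Fraction of the starting charge left after `days` at `per_day` retention."""
    return per_day ** days


per_day = daily_retention(0.95, 400)  # 95 percent after 400 days, from the article
loss_pct = (1.0 - per_day) * 100.0    # percent of remaining charge lost per day
print(f"retained per day: {per_day:.6f}")
print(f"lost per day: {loss_pct:.4f}%")
print(f"check after 400 days: {retention_after(per_day, 400):.3f}")
```

Under this model the loss works out to roughly 0.013 percent per day. Since the reported retention is "over" 95 percent, that is an upper bound on the average daily loss rather than a measured rate.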

While the researchers provide some capacity-per-weight measurements, they don’t do so for a complete battery, focusing instead on portions of the battery, such as the sulfur or the total electrode mass.
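For a sense of why per-sulfur capacity figures can look so large, the theoretical ceiling follows from Faraday's law: capacity per gram is the number of electrons transferred times the Faraday constant, divided by the molar mass, converted to milliamp-hours (1 mAh = 3.6 coulombs). The sketch below assumes the four-electron sulfur-to-SCl4 conversion described above and compares it with a full two-electron reduction of sulfur to sulfide (S to Na2S). These are idealized limits, not the paper's measured values, and the function name is hypothetical.

```python
FARADAY_C_PER_MOL = 96485.33212  # Faraday constant, coulombs per mole of electrons
COULOMBS_PER_MAH = 3.6           # 1 mAh = 0.001 A * 3600 s
SULFUR_G_PER_MOL = 32.06         # molar mass of sulfur


def theoretical_capacity_mah_per_g(electrons: int, molar_mass_g_per_mol: float) -> float:
    """Idealized charge per gram of active material if every atom transfers `electrons`."""
    coulombs_per_gram = electrons * FARADAY_C_PER_MOL / molar_mass_g_per_mol
    return coulombs_per_gram / COULOMBS_PER_MAH


four_electron = theoretical_capacity_mah_per_g(4, SULFUR_G_PER_MOL)  # S -> SCl4 (0 to +4)
two_electron = theoretical_capacity_mah_per_g(2, SULFUR_G_PER_MOL)   # S -> Na2S (0 to -2)
print(f"4-electron: {four_electron:.0f} mAh/g, 2-electron: {two_electron:.0f} mAh/g")
```

The four-electron route gives roughly 3,340 mAh per gram of sulfur, about double the roughly 1,670 mAh per gram of a two-electron reduction, simply because twice as many electrons move per sulfur atom.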

But with both electrodes considered, the energy density can reach over 2,000 watt-hours per kilogram. That figure will undoubtedly drop once the mass of the entire battery is included, but it's difficult to imagine that it wouldn't outperform existing sodium-sulfur or sodium-ion batteries.
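The drop from an electrode-only figure to a whole-battery figure is simple dilution: the stored energy stays the same while the mass in the denominator grows. A minimal sketch, assuming only the electrodes store energy; the electrode mass fractions below are hypothetical, since the article gives no whole-battery mass breakdown.

```python
def whole_battery_energy_density(electrode_wh_per_kg: float, electrode_mass_fraction: float) -> float:
    """Energy per kg of the whole battery, assuming only the electrodes store energy."""
    if not 0.0 < electrode_mass_fraction <= 1.0:
        raise ValueError("electrode_mass_fraction must be in (0, 1]")
    # Energy = electrode_wh_per_kg * electrode_mass; dividing by total mass
    # leaves electrode_wh_per_kg * (electrode_mass / total_mass).
    return electrode_wh_per_kg * electrode_mass_fraction


for fraction in (0.6, 0.4, 0.25):  # hypothetical electrode shares of total battery mass
    print(f"{fraction:.2f} -> {whole_battery_energy_density(2000.0, fraction):.0f} Wh/kg")
```

Even if the electrodes made up only a quarter of the battery's mass, the 2,000 Wh/kg electrode figure would still correspond to about 500 Wh/kg overall.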

Beyond the capacity, the big benefit of the proposed system appears to be its price. Based on the raw materials, the researchers estimate a cost of roughly $5 per kilowatt-hour of capacity, which is less than a tenth of the cost of current sodium batteries.
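The cost comparison is straightforward arithmetic. The sketch below scales the researchers' $5-per-kilowatt-hour raw-material estimate to a hypothetical 60 kWh pack and spells out what "less than a tenth" implies about the comparison figure. It covers materials only (no manufacturing, packaging, or electronics), and the function name and pack size are illustrative assumptions.

```python
RAW_MATERIAL_USD_PER_KWH = 5.0  # researchers' estimate, as quoted in the article


def raw_material_cost(capacity_kwh: float, usd_per_kwh: float = RAW_MATERIAL_USD_PER_KWH) -> float:
    """Raw-material cost only; excludes manufacturing, packaging, and electronics."""
    return capacity_kwh * usd_per_kwh


# "Less than a tenth" of the cost of current sodium batteries means the
# comparison figure must exceed ten times the estimate.
implied_floor_usd_per_kwh = 10 * RAW_MATERIAL_USD_PER_KWH

print(raw_material_cost(60.0))   # hypothetical 60 kWh pack
print(implied_floor_usd_per_kwh)
```

So a 60 kWh pack would need about $300 in raw materials under this estimate, and the article's comparison implies current sodium batteries cost more than $50 per kilowatt-hour on whatever basis the researchers used.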

Again, there’s no guarantee that this work can be scaled up for manufacturing in a way that keeps it competitive with current technologies. Still, if the materials used in existing battery technologies become expensive, it’s reassuring to have other options.

Nature, 2026. DOI: 10.1038/s41586-025-09867-2
