Researchers spot Saturn-sized planet in the “Einstein desert”

Rogue, free-floating planets appear to have two distinct origins.

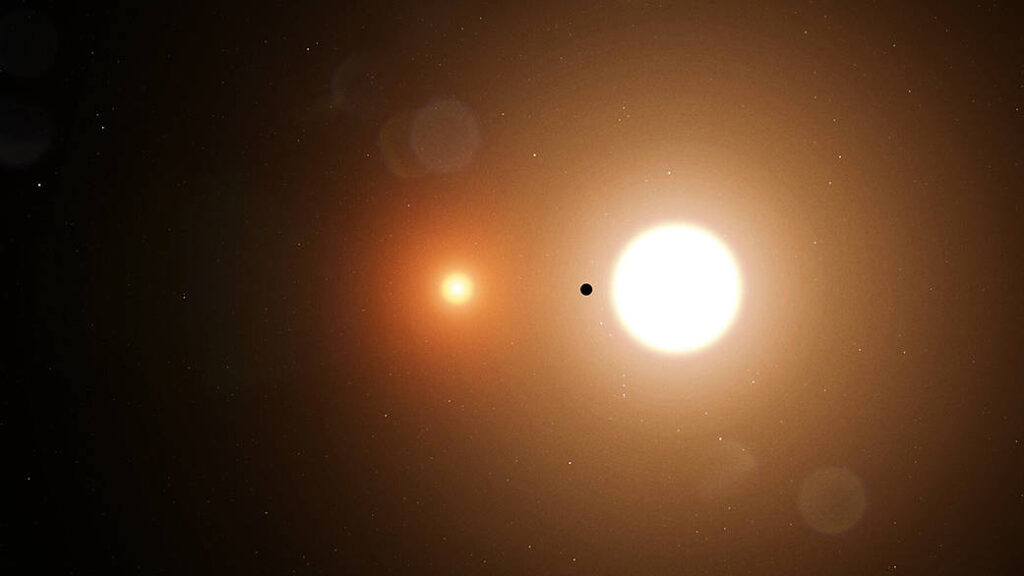

Most of the exoplanets we’ve discovered have been in relatively tight orbits around their host stars, allowing us to track them as they repeatedly loop around those stars. But we’ve also discovered a handful of planets through a phenomenon called microlensing. This occurs when a planet crosses the line of sight between Earth and another star, creating a gravitational lens that distorts the star’s image and causes it to briefly brighten.

The key thing about microlensing compared to other methods of finding planets is that the lensing planet can be nearly anywhere on the line between the star and Earth. So, in many cases, these events are driven by what are called rogue planets: those that aren’t part of any exosolar system at all and instead drift through interstellar space. Now, researchers have used microlensing and the fortuitous orientation of the Gaia space telescope to spot a Saturn-sized planet that’s the first found in what’s called the “Einstein desert,” which may be telling us something about the origin of rogue planets.

Going rogue

Most of the planets we’ve identified are in orbit around stars and formed from the disks of gas and dust that surrounded the star early in its history. We’ve imaged many of these disks and even seen some with evidence of planets forming within them. So how do you get a planet that’s not bound to any stars? There are two possible routes.

The first involves gravitational interactions, either among the planets of the system or due to an encounter between the exosolar system and a passing star. Under the right circumstances, these interactions can eject a planet from its orbit and send it hurtling through interstellar space. As such, we should expect them to be like any typical planet, ranging in mass from small, rocky bodies up to gas giants. An alternative method of making a rogue planet starts with the same process of gravitational collapse that builds a star—but in this case, the process literally runs out of gas. What’s left is likely to be a large gas giant, possibly somewhere between Jupiter and a brown dwarf star in mass.

Since these objects are unlinked to any exosolar system, they’re not going to have any regular interactions with stars; our only way of spotting them is through microlensing. And microlensing tells us very little about the size of the planet. To figure things out, we would need some indication of things like how distant the star and planet are, and how big the star is.

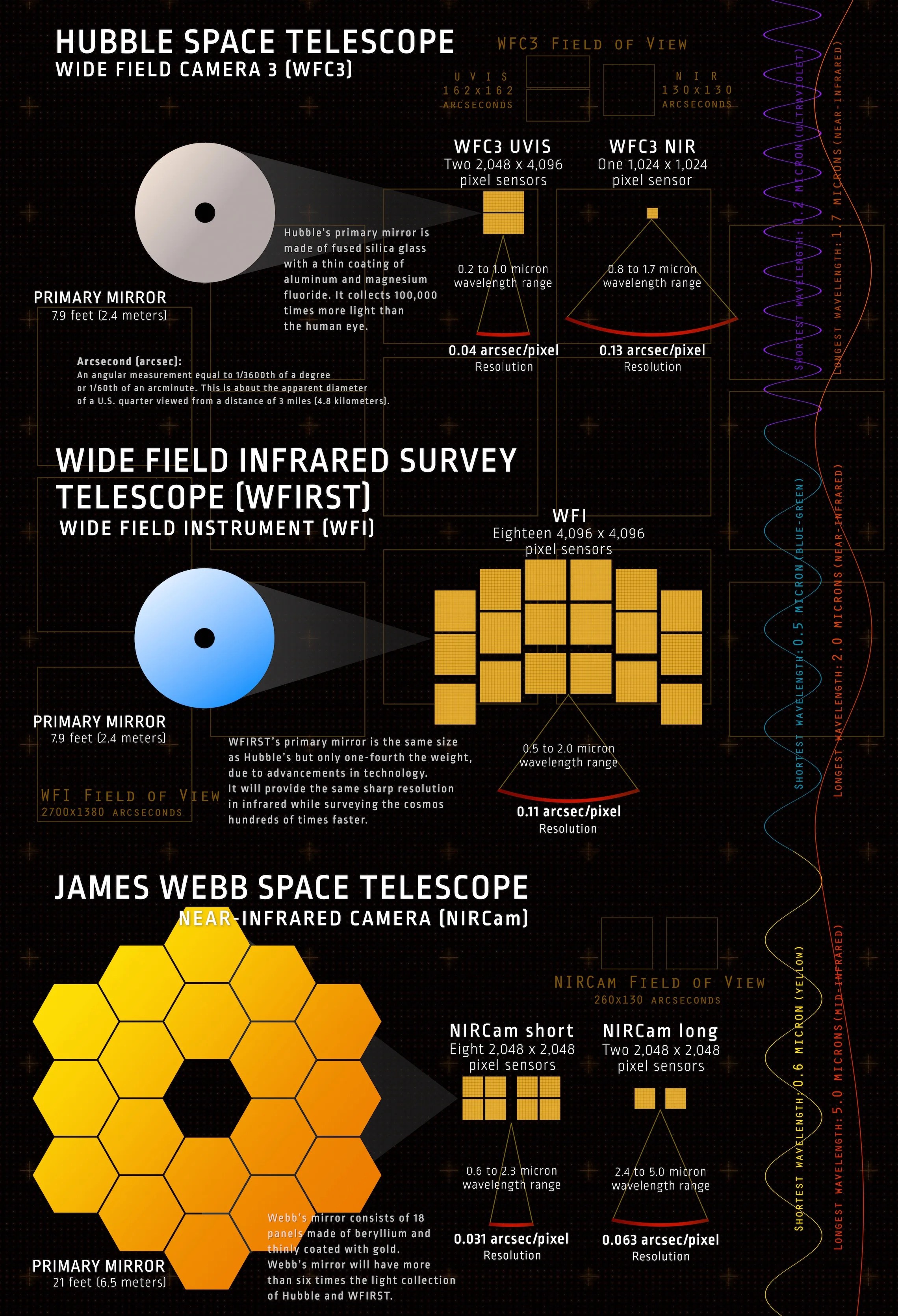

That doesn’t mean that microlensing events have told us nothing. We can identify the size of the Einstein ring, the circular ring of light that forms when the planet and star are perfectly lined up from Earth’s perspective. Given that information and some of the remaining pieces of info mentioned above, we can figure out the planet’s mass. But even without that, we can make some inferences using statistical models.
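The link between ring size, distances, and mass can be sketched with the standard point-lens relation, in which the square of the Einstein ring's angular radius equals a constant times the lens mass times the relative parallax of lens and source. The snippet below is a minimal illustration of that textbook relation, not the researchers' analysis; the function names and the input values (ring radius, lens and source distances) are assumptions chosen only to show the arithmetic.

```python
import math

# Physical constants (SI). GM_SUN is the Sun's gravitational parameter.
GM_SUN = 1.32712440018e20      # m^3 s^-2
C = 299_792_458.0              # m/s
AU = 1.495978707e11            # m
RAD_TO_MAS = math.degrees(1.0) * 3600.0 * 1000.0  # radians -> milliarcseconds

# kappa = 4G / (c^2 au), expressed in milliarcseconds per solar mass.
KAPPA = 4.0 * GM_SUN / (C**2 * AU) * RAD_TO_MAS

# Approximate number of Jupiter masses in one solar mass.
JUPITERS_PER_SUN = 1047.35


def relative_parallax_mas(d_lens_kpc, d_source_kpc):
    """Lens-source relative parallax in mas (parallax in mas = 1 / distance in kpc)."""
    if not 0 < d_lens_kpc < d_source_kpc:
        raise ValueError("the lens must sit between the observer and the source")
    return 1.0 / d_lens_kpc - 1.0 / d_source_kpc


def lens_mass_mjup(theta_e_mas, d_lens_kpc, d_source_kpc):
    """Lens mass in Jupiter masses from theta_E^2 = kappa * M * pi_rel."""
    pi_rel = relative_parallax_mas(d_lens_kpc, d_source_kpc)
    mass_msun = theta_e_mas**2 / (KAPPA * pi_rel)
    return mass_msun * JUPITERS_PER_SUN


# Illustrative inputs only (NOT the measured values for this event):
# an 18-microarcsecond ring, a lens at 3 kpc, a bulge source at 8 kpc.
mass = lens_mass_mjup(theta_e_mas=0.018, d_lens_kpc=3.0, d_source_kpc=8.0)
print(f"kappa = {KAPPA:.3f} mas per solar mass")
print(f"lens mass = {mass:.2f} Jupiter masses")
```

With these made-up inputs, the result lands near a fifth of Jupiter's mass, which shows why a ring size on its own is ambiguous: moving the assumed lens distance changes the inferred mass even when the ring stays the same.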

Studies of collections of microlensing events (these collections are small, typically in the dozens, because these events are rare and hard to spot) have identified a distinctive pattern. There’s a cluster of relatively small Einstein rings that are likely to have come from relatively small planets. Then, there’s a gap, followed by a second cluster that’s likely to be made by far larger planets. The gap between the two has been termed the “Einstein desert,” and there has been considerable discussion regarding its significance and whether it’s even real or simply a product of the relatively small sample size.

Sometimes you get lucky

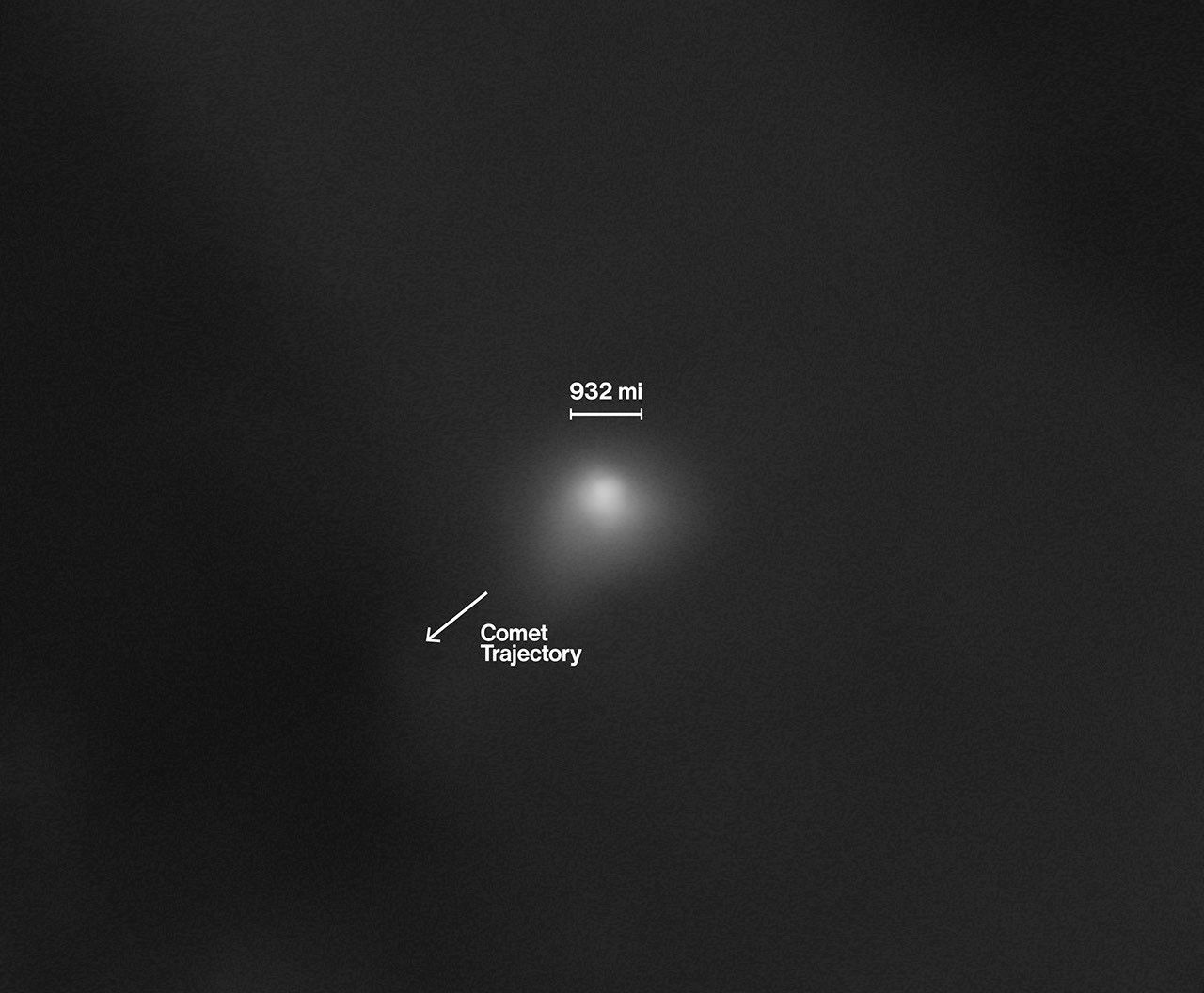

All of which brings us to the latest microlensing event, which was picked up by two projects that each gave it a different but equally compelling name. To the Korea Microlensing Telescope Network, the event was KMT-2024-BLG-0792. For the Optical Gravitational Lensing Experiment, or OGLE, it was OGLE-2024-BLG-0516. We’ll just call it “the microlensing event” and note that everyone agrees that it happened in early May 2024.

Both of those networks are composed of Earth-based telescopes, and so they only provide a single perspective on the microlensing event. But we got lucky that the European Space Agency’s space telescope Gaia was oriented in a way that made it very easy to capture images. “Serendipitously, the KMT-2024-BLG-0792/OGLE-2024-BLG-0516 microlensing event was located nearly perpendicular to the direction of Gaia’s precession axis,” the researchers who describe this event write. “This rare geometry caused the event to be observed by Gaia six times over a 16-hour period.”

Gaia is also located at the L2 Lagrange point, which is a considerable distance from Earth. That’s far enough away that the peak of the event’s brightness, as seen from Gaia’s perspective, occurred nearly two hours later than it did for telescopes on Earth. This let us determine the parallax of the microlensing event, and thus its distance. Other images of the star from before or after the event indicated it was a red giant in the galactic bulge, which also gave us a separate check on its likely distance and size.
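To get a feel for why a delay of a couple of hours is informative, here is a back-of-the-envelope sketch. It assumes a baseline of roughly 1.5 million km between Earth and the L2 region, and it assumes that the whole baseline lies along the direction in which the lensing pattern sweeps across the Solar System. Both are simplifications introduced here, not figures from the paper, so the result is only an order-of-magnitude estimate.

```python
# Assumed Earth-to-L2 baseline in km (approximate; treated as lying entirely
# along the direction of the lens-source relative motion, which is a simplification).
EARTH_L2_DISTANCE_KM = 1.5e6

# "Nearly two hours" in the text, rounded to two hours for this estimate.
PEAK_DELAY_HOURS = 2.0


def projected_speed_km_s(baseline_km, delay_hours):
    """Speed at which the magnification pattern crosses the observer plane."""
    return baseline_km / (delay_hours * 3600.0)


speed = projected_speed_km_s(EARTH_L2_DISTANCE_KM, PEAK_DELAY_HOURS)
print(f"projected speed is roughly {speed:.0f} km/s")
```

Under those assumptions, the pattern sweeps past at a couple of hundred kilometers per second. The real analysis also has to account for the actual geometry and the event's overall timescale.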

Using the parallax and the size of the Einstein ring, the researchers determined that the planet involved was roughly 0.2 times the mass of Jupiter, which makes it a bit less massive than Saturn. Those estimates are consistent with a statistical model that took the other properties into account. The measurements also placed it squarely in the middle of the Einstein desert, making it the first microlensing event we’ve seen there.
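For scale, the comparison with Saturn can be checked with a few lines of arithmetic. The planetary masses below are standard approximate values supplied here for illustration; only the 0.2 Jupiter-mass figure comes from the text.

```python
# Approximate planetary masses in kilograms (reference values, not from the paper).
M_JUPITER_KG = 1.898e27
M_SATURN_KG = 5.683e26
M_EARTH_KG = 5.972e24

# Mass estimate quoted in the article, in Jupiter masses.
ROGUE_MASS_MJUP = 0.2

rogue_mass_kg = ROGUE_MASS_MJUP * M_JUPITER_KG
in_saturn_masses = rogue_mass_kg / M_SATURN_KG
in_earth_masses = rogue_mass_kg / M_EARTH_KG

print(f"Saturn is about {M_SATURN_KG / M_JUPITER_KG:.2f} Jupiter masses")
print(f"The rogue planet is about {in_saturn_masses:.2f} Saturn masses")
print(f"That is about {in_earth_masses:.0f} Earth masses")
```

Saturn comes in at about 0.3 Jupiter masses, so an object at 0.2 Jupiter masses is roughly two-thirds of Saturn's mass.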

That’s significant because it means we can tie the Einstein desert to the specific mass of a planet within it. Because of the variability of things like distance and the star’s size, not every planet that produces a similar-sized Einstein ring will be similar in size, but statistics suggest that this will typically be the case. And that’s in keeping with one of the potential explanations for the Einstein desert: that it represents the gap in size between the two different methods of making a rogue planet.

For the normal planet formation scenario, the lighter the planet is, the easier it is to be ejected, so you’d expect a bias toward small, rocky bodies. The Saturn-sized planet seen here may be near the upper limit of the sorts of bodies we’d typically see being ejected from an exosolar system. By contrast, the rogue planets that form through the same mechanisms that give us brown dwarfs would typically be Jupiter-sized or larger.

That said, the low number of total microlensing events still leaves the reality of the Einstein desert an open question. Sticking with the data from the Korea Microlensing Telescope Network, the researchers find that the frequency of other detections suggests that we’d have a 27 percent chance of detecting just one item in the area of the Einstein desert even if the desert weren’t real and detections were equally probable across the size range. So, as is often the case, we’re going to need to let the network do its job for a few more years before we have the data to say anything definitive.

Science, 2026. DOI: 10.1126/science.adv9266

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
