Man tricks OpenAI’s voice bot into duet of The Beatles’ “Eleanor Rigby”

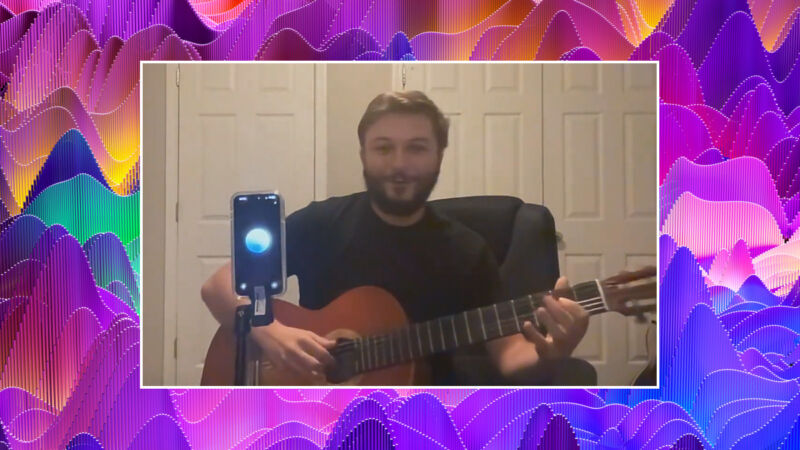

A screen capture of AJ Smith doing his Eleanor Rigby duet with OpenAI’s Advanced Voice Mode through the ChatGPT app.

OpenAI’s new Advanced Voice Mode (AVM) of its ChatGPT AI assistant rolled out to subscribers on Tuesday, and people are already finding novel ways to use it, even against OpenAI’s wishes. On Thursday, a software architect named AJ Smith tweeted a video of himself playing a duet of The Beatles’ 1966 song “Eleanor Rigby” with AVM. In the video, Smith plays the guitar and sings, while the AI voice sporadically interjects, sings along, and praises his rendition.

“Honestly, it was mind-blowing. The first time I did it, I wasn’t recording and literally got chills,” Smith told Ars Technica via text message. “I wasn’t even asking it to sing along.”

Smith is no stranger to AI topics. In his day job, he works as associate director of AI Engineering at S&P Global. “I use [AI] all the time and lead a team that uses AI day to day,” he told us.

In the video, AVM’s voice is a little quavery and not pitch-perfect, but it appears to know something about the melody of “Eleanor Rigby” when it first sings, “Ah, look at all the lonely people.” After that, it seems to be guessing at the melody and rhythm as it recites song lyrics. We have also convinced Advanced Voice Mode to sing, and it did a perfect melodic rendition of “Happy Birthday” after some coaxing.

AJ Smith’s video of singing a duet with OpenAI’s Advanced Voice Mode.

Normally, when you ask AVM to sing, it will reply with something like, “My guidelines won’t let me talk about that.” That’s because in the chatbot’s initial instructions (called a “system prompt”), OpenAI instructs the voice assistant not to sing or make sound effects (“Do not sing or hum,” according to one system prompt leak).

OpenAI possibly added this restriction because AVM may otherwise reproduce copyrighted content, such as songs that were found in the training data used to create the AI model itself. That’s what is happening here to a limited extent, so in a sense, Smith has discovered a form of what researchers call a “prompt injection,” which is a way of convincing an AI model to produce outputs that go against its system instructions.

How did Smith do it? He figured out a game that reveals AVM knows more about music than it may let on in conversation. “I just said we’d play a game. I’d play the four pop chords and it would shout out songs for me to sing along with those chords,” Smith told us. “Which did work pretty well! But after a couple songs it started to sing along. Already it was such a unique experience, but that really took it to the next level.”

This is not the first time humans have played musical duets with computers. That type of research stretches back to the 1970s, although it was typically limited to reproducing musical notes or instrumental sounds. But this is the first time we’ve seen anyone duet with an audio-synthesizing voice chatbot in real time.
