Letting kids be kids seems more and more important to me over time. Our safetyism and paranoia about children is catastrophic on way more levels than most people realize. I believe all these effects are very large:

- It raises the time, money and experiential costs of having children so much that many choose not to have children, or to have fewer children than they would want.
- It hurts the lived experience of children.
- It hurts children’s ability to grow and develop.
- It de facto forces children to use screens quite a lot.
- It instills a very harmful style of paranoia in all concerned.

This should be thought of as part of the Cost of Thriving Index discussion, and the fertility discussions as well. Before I return to the more general debate, I wanted to take care of this aspect first. It’s not that the economic data is lying exactly, it’s that it is missing key components. Economists don’t include these factors in their cost estimates and their measures of welfare. They need to do that.

I want a distinct marker for this part of the problem that I can refer back to, so this post includes highlights of past discussions of the issue from older roundups and posts.

Why are so many people who are on paper historically wealthy, with median wages having gone up, saying they cannot afford children? A lot of it is exactly this. The real costs have gone up dramatically, largely in ways not measured directly in money, and also through the resulting required basket of goods, especially services. This is a huge part of how that happened.

Bryan Caplan’s Selfish Reasons to Have More Kids focuses on the point that you can put in low effort on many fronts, and your kids will be fine. Scott Alexander recently reviewed it, to try and feel better about that, and did a bunch of further research. The problem is that even if you know being chill is fine, people have to let you be chill.

On Car Seats as Contraception is a great case study, but only a small part of the puzzle.

This is in addition to college admissions and the whole school treadmill, which is beyond the scope of this post.

We have the Housing Theory of Everything, which makes the necessary space more expensive, but not letting kids be kids – which also expands how much house you need for them, so the issues compound – is likely an even bigger issue here.

The good news is, at least when this type of thing happens it can still be news.

Remember, this is not ‘the kid was forcibly brought home and the mother was given a warning,’ which would be crazy enough. The mother was charged with ‘child neglect’ and was being held in jail on bond.

A civilization with that level of paranoia seems impossible to sustain.

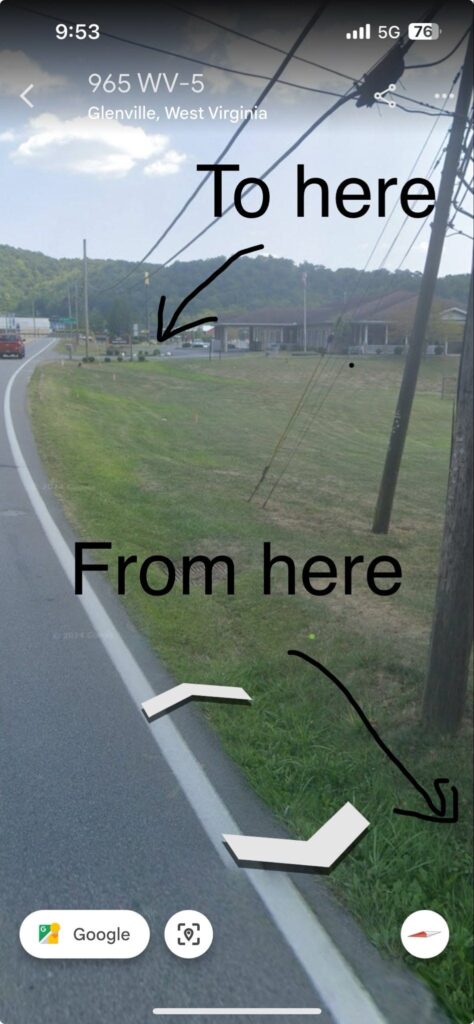

WBOY 12News: A woman has been charged after a young child was found walking alone on the side of the road in Glenville.

Gov Deeply:

> The child, who was described as being about 7 years old, told officers that he had walked from a residence on North Lewis Street to McDonald’s, which is more than a quarter mile.

> Fraley has been charged with child neglect. She is being held in Central Regional Jail on $5,000 bond.

If that’s the full story: ridiculous!

Make Childhood Great Again. Set our children free. (And punish cops & prosecutors who get in the way.)

I was curious so looked it up: we walked 0.7 miles to & from elementary school each day, sun or rain or snow. Half the stretch was a somewhat busy road.

Definitely starting in first grade; maybe Kindergarten. Not a big deal.

vbgyor: The charge should be for letting her child eat at McDonalds.

These objections can be absolute. In this next case, at least they didn’t arrest anyone:

John McLaughin: My 9 year old son was brought home in back of a police car Monday. He went to Publix, literally 500 ft from our home, to buy a treat w/ his own money. He’d done this several times before. The officer at the store that day decided he was too young to shop alone. It was infuriating.

Luckily, they didn’t arrest me or my wife like they did the other lady in GA last year, but it was still infuriating. They did write a report and have an ambulance come out to check him. It was over the top and of course their basis for this was “endangerment.”

Yes, the store is familiar with him. We are regulars and he’s gone by himself on several occasions. He said after asking him why he was there and where his parents were, the officer said “I don’t want you here alone.”

Those are the most recent ones, here are some flashbacks (remember when I used italics?).

Childhood Roundup #1: Sane 2022 parents of 10-year-olds: I would like to let you go outside without me. I am terrified that someone will call the cops and they will take you away from me.

That is a thing now. As in parents being thrown in jail for letting their eight year old child walk home from school on their own.

As they stood on her porch, the officers told Wallace that her son could have been kidnapped and sex trafficked. “‘You don’t see much sex trafficking where you are, but where I patrol in downtown Waco, we do,'” said one of the cops, according to Wallace.

Did things get more dangerous since 1980, when we were mostly sane about this? No. They got vastly less dangerous, in all ways other than the risk of someone calling the cops.

The numbers on ‘sex trafficking’ and kidnapping by strangers are damn near zero.

The incident caused ‘ruin your life’ levels of damage.

Child services had the family agree to a safety plan, which meant Wallace and her husband could not be alone with their kids for even a second. Their mothers—the children’s grandmothers—had to visit and trade off overnight stays in order to guarantee the parents were constantly supervised. After two weeks, child services closed Wallace’s case, finding the complaint was unfounded.

…

Wallace’s sister has started a GoFundMe for her. She is in debt after losing her job and paying for the lawyer and the diversion program. She also hopes to hire a lawyer to get her record expunged so that she can work with kids again.

One of my closest friends here in New York is strongly considering moving to the middle of nowhere so that his child will be able to walk around outside, because it is not legally safe to do that anywhere there are people.

Update on that friend: They did indeed move out of New York for this reason, and then got into trouble for related issues when they were legally in the right, because that turns out not to matter much if the police decide otherwise.

Childhood Roundup #2: Here is another case study where parents were arrested for letting their children walk a few blocks on their own. In this case, the children were 6 and 8, and were walking to Dunkin Donuts in a quiet suburban neighborhood. Once again ‘sex offenders’ were used as the police justification. Once again, there was a child services investigation.

Woman who was arrested for letting 14-year-old babysit finally cleared of charges.

A neighbor writes in to the newspaper, concerned that a 13-year-old is left alone in their house on Saturdays, to ask whether they should perhaps call child protective services. Yes, we are completely insane.

Childhood Roundup #7: Then recently we have the example where an 11-year-old (!) walked less than a mile into a 370-person town, and the mother was charged with reckless conduct and forced to sign a ‘safety plan’ on pain of jail time pledging to track him at all times via an app on his phone.

Billy Binion: I can’t get over this story. A local law enforcement agency is trying to force a mom to put a location tracker on her son—and if she doesn’t, they’re threatening to prosecute her. Because her kid walked less than a mile by himself. It’s almost too crazy to be real. And yet.

CR#7: Or here’s the purest version of the problem:

Lenore Skenazy: Sometimes some lady will call 911 when she sees a girl, 8, riding a bike. So it goes these days.

BUT the cops should be able to say, “Thanks, ma’am!”…and then DO NOTHING.

Instead, a cop stopped the kid, then went to her home to confront her parents.

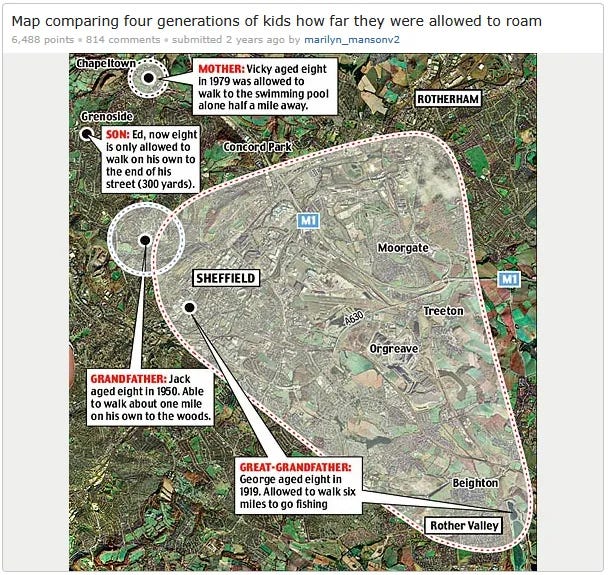

Here is the traditional chart of how little we let our kids walk around these days:

Scott Alexander goes into detail about exactly how dangerous it is to be outside, but all you need to know is that not only is it not more dangerous today, it is dramatically safer now than it ever was… except for the danger of cops or CPS knocking at your door.

What we continue to need are clear, hard rules for exactly what is and is not permitted, such that if you are within the rules you are truly in the clear, and if the police or others hassle you non-trivially, there are consequences for the police and others.

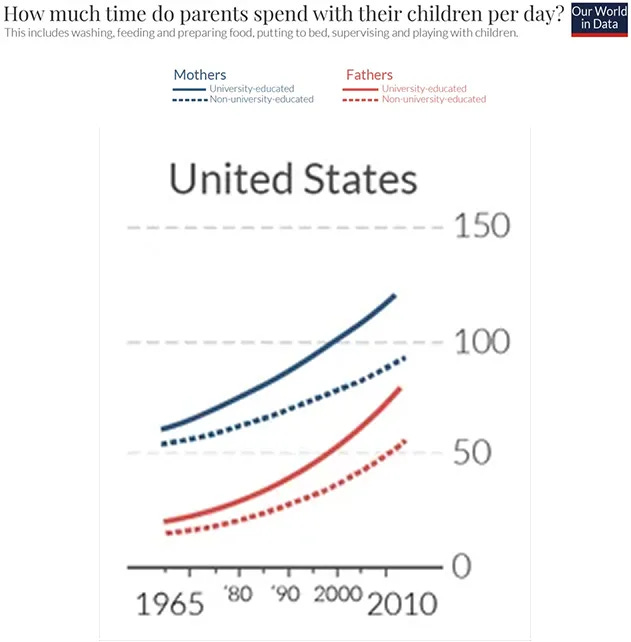

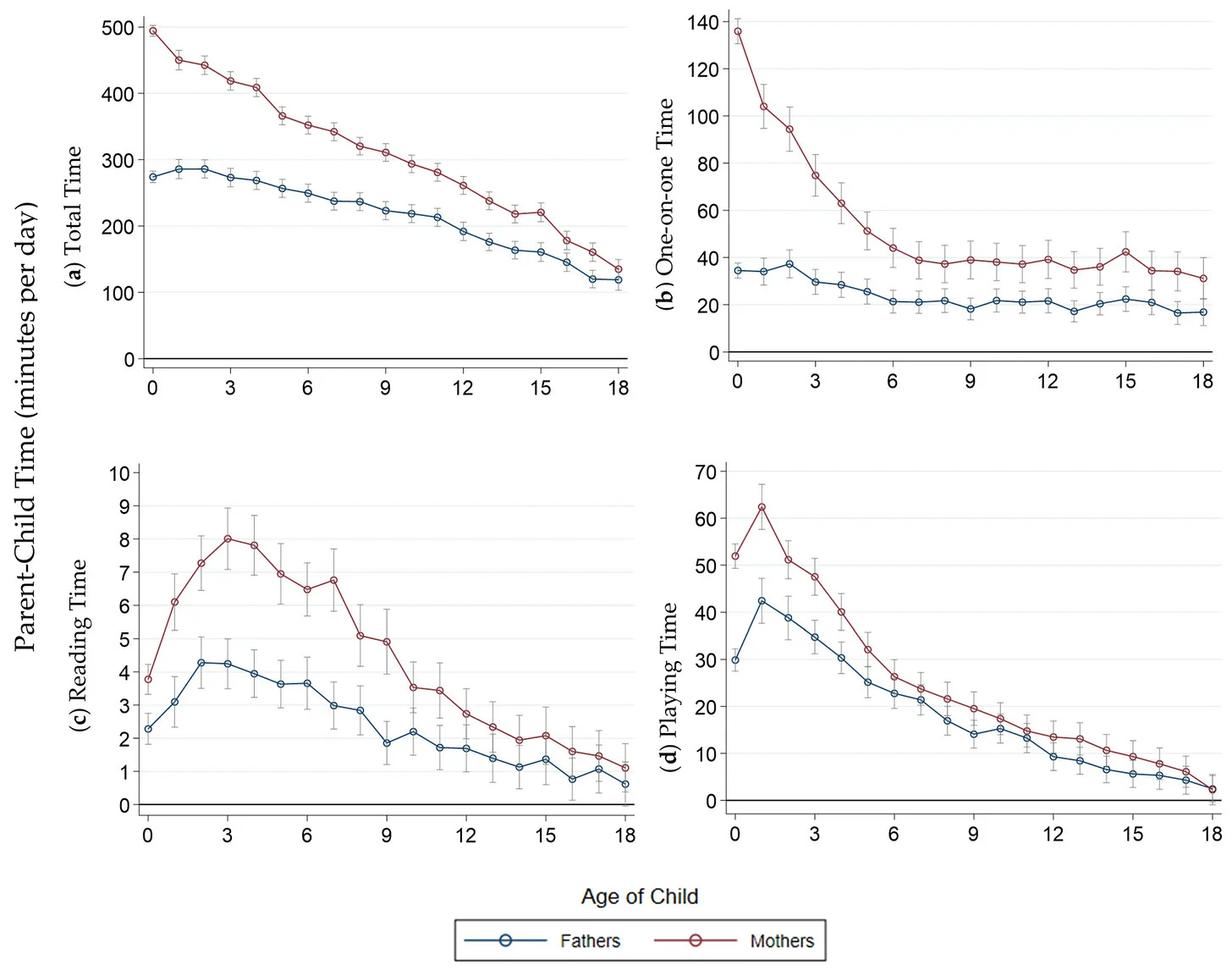

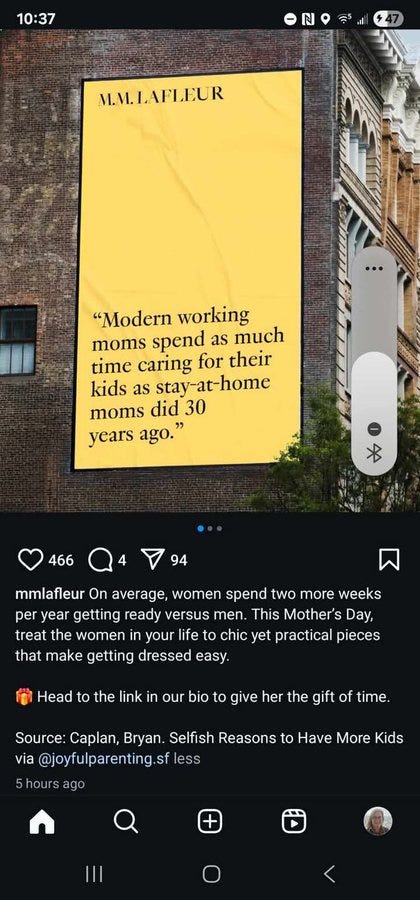

Scott Alexander: I can’t reach Caplan’s specific source (Bianchi et al, Changing Rhythms Of American Family Life), but his claims broadly match the data in Dotti Sani & Treas (2016):

My wife eventually found Wilkie and Cullen (2023), an alternate data source which bins responses by child age.

Then from BLS:

Adults living in households with children under age 6 spent an average of 2.3 hours per day providing primary childcare to household children … primary childcare is childcare that is done as a main activity, such as providing physical care or reading to children. (See table 9.)

Adults living in households with at least one child under age 13 spent an average of 5.1 hours per day providing secondary childcare – that is, they had at least one child in their care while doing activities other than primary childcare. Secondary childcare provided by adults living in households with children under age 13 was most commonly provided while doing leisure activities (1.9 hours) or household activities (1.3 hours).

Even secondary care is a dramatic reduction in flexibility and productivity. And we’re talking about a combined 7.4 hours per day, with 19 hours on weekends between both parents. That adds up to full-time jobs. Weekends are supposed to be break time, but often they’re not anymore.
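As a rough check on that arithmetic, here is a small Python sketch that adds the two BLS averages. It assumes, as the sum of 7.4 hours does, that the primary-care figure (households with children under 6) and the secondary-care figure (households with children under 13) can simply be added, even though they describe slightly different groups of households. The weekly extrapolation is a hypothetical illustration, not a BLS figure.

```python
# BLS averages quoted above, in hours per day.
PRIMARY_CARE_UNDER_6 = 2.3     # primary childcare, households with children under 6
SECONDARY_CARE_UNDER_13 = 5.1  # secondary childcare, households with children under 13


def daily_supervision_hours(primary: float, secondary: float) -> float:
    """Naive combined total, treating the two BLS averages as additive."""
    return round(primary + secondary, 1)


def weekly_hours(daily: float, days: int = 7) -> float:
    """Illustrative extrapolation that assumes the daily average holds every day."""
    return round(daily * days, 1)


daily = daily_supervision_hours(PRIMARY_CARE_UNDER_6, SECONDARY_CARE_UNDER_13)
print(daily)
print(weekly_hours(daily))
```

Under those assumptions the daily total comes to 7.4 hours, or a bit over 50 hours across a week.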

If we assume that the BLS statistics Scott cites are accurate, that the ratios in the first graph are accurate too, and that the trends are continuing and will likely keep getting worse, this is a nightmare amount of supervision time.

Some good news: Georgia passed the Reasonable Childhood Independence bill, so there are now 11 states with such laws (Florida, Georgia, Missouri, Utah, Oklahoma, Texas, Colorado, Connecticut, Illinois, Montana, and Virginia).

This was triggered in part because Brittany Patterson was arrested for letting her 10-year-old walk to a store, along with a few other similar cases.

The problem for parents like John McLaughin and Brittany Patterson is that random safetyists will still call the police, and when they do, the police often simply ignore such laws, as my friend found out in Connecticut. Even when the conduct in question is explicitly legal, that often won’t save you from endless trouble. If things get into the family courts, you absolutely get punished if you try to point out that you didn’t do anything wrong, so you are forced to genuflect and lie.

Ultimately, this all comes down to tail risk. You can’t do obviously correct, fully sane things if there’s even a tiny chance of the law coming in and causing massive headaches, or even ruining your life and that of your children.

The amount of paranoia about having your child taken away, or potentially (see above cases) the parent even being outright arrested, has reached ludicrous levels, mostly among exactly the people who you do not want worrying about this. Even a report that causes no action now can be a big worry down the line.

And the reports are very common. Consider that 37% of children are reported to CPS at some point. This is from my Childhood Roundup #3, which also has more CPS examples:

Jerusalem: This is… a wild stat. 37 percent of all US children are subjects of CPS reports (28% of white children and 53% of Black children experience CPS involvement before their 18th birthday).

Abigail: I had a neighbor threaten to call cps because I let my kid play in my fully fenced backyard while I watched from inside. She came over, banged on my door, threatened it in front of that kid. People these days call over nothing. Everyone’s scared of it. Moms talk about the fear a lot in my experience.

So what could we do about this?

Matt Bateman: Besides changing norms and laws around child safetyism, a good reform would be: make it trivial to appeal and correct reports.

There is a system of ghost convictions that happens by records—police reports, CPS referrals, medical records, school records—that ~cannot be fought.

Shin Megami Boson: CPS removes around 200k children from the care of their parents each year. most of those children are reunited with their parents after an investigation. about 350 kids are victims of non-family abductions a year.

By napkin math, CPS is responsible for over 99.6% of annual non-parental kidnappings in the US.

Because of semi-coercive “safety plans” this is potentially a substantial underestimate.

Actually I gave them a bit of the benefit of the doubt and assumed half of all investigated cases were real cases of abuse. their actual number of substantiated investigations is closer to 22%.
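That napkin math can be reproduced in a few lines. This Python sketch uses only the figures quoted in the thread (about 200,000 removals and about 350 non-family abductions per year) and makes the share of removals assumed to be justified a parameter: 0.5 is the thread’s benefit-of-the-doubt assumption, and 0.22 borrows the substantiation rate for investigations as a rough proxy. It restates the thread’s reasoning; it is not an independent estimate.

```python
REMOVALS_PER_YEAR = 200_000           # approximate CPS removals per year, as quoted
NON_FAMILY_ABDUCTIONS_PER_YEAR = 350  # approximate non-family abductions per year, as quoted


def cps_share_of_removals(justified_fraction: float) -> float:
    """Fraction of non-parental removals that are unjustified CPS removals.

    justified_fraction: share of CPS removals assumed to be warranted (0 to 1).
    """
    if not 0.0 <= justified_fraction <= 1.0:
        raise ValueError("justified_fraction must be between 0 and 1")
    unjustified = REMOVALS_PER_YEAR * (1 - justified_fraction)
    return unjustified / (unjustified + NON_FAMILY_ABDUCTIONS_PER_YEAR)


for label, fraction in [("half justified", 0.5), ("22% substantiated", 0.22)]:
    print(f"{label}: {cps_share_of_removals(fraction):.2%}")
```

The half-justified case gives roughly 99.65%, which is where the thread’s “over 99.6%” comes from; using the 22% substantiation rate instead pushes the share higher, not lower.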

The Rich: CPS removes 200k from their parents??????????

every year?????

Ben Podgursky: there are a lot of really, really, really bad parents

this is tough because parents should get the benefit of the doubt, but the sad fact is that the CPS abuse-of-power cases (which yes, are bad) are like 10%… it’s mostly kids in unbelievable neglect

Memetic Sisyphus: Before anyone gets upset by this number, go to your local mom’s Facebook group and look at the moms fighting with CPS.

Mr. Garvin: Every story about CPS is spun as “the government/hospital is kidnapping my baby for no reason” because of HIPAA.

Important details like “the baby was literally starving to death” or “the toddler ate a whole package of weed gummies that mom left out” can’t be publicly disclosed.

You would have no idea how common it is for CPS to come on the very day the parents were about to go out and buy their kids a bed or set of diapers.

It seems like there is a very easy, very clear way to distinguish the horrible cases we’re talking about, where children lack very basic needs or something truly horrible is happening (and where CPS apparently often still has trouble making the removal stick), from cases of safetyism concerns, given that only 22% of cases are substantiated.

And yet here we are.

Hermit Yab: 10% of 200k is a lot I would not be OK losing my kids to some psycho social worker because 9 other shitheads were bad parents. Actually it would make me even angrier.

I mean, yeah, okay, fine, let’s say it’s 20k kids being kidnapped out of 75.2 million per year where there was nothing seriously wrong, often because of some other person’s safetyism paranoia followed by a capricious decision, and this is being used as essentially a terrorism campaign, and we’re down from 99.6% of kidnappings to 96%, with a lot of them basically justified by blaming the other 4%, despite most of the other 4% being from custodial disputes.

Still seems pretty not great, especially given what then happens to the kids.

Wayne: The counterpoint to my position on CPS is that maybe we should be happy to see people on the side of victims, for once, and perhaps should want to see more of that, not less.

The problem with this, though, is that there’s no magical place to put kids who live with parents who are just kind of shitty. Nobody is dying to raise those kids. They go into foster care, where rates of abuse and neglect are even higher.

I suppose one silver lining is that if you have a backup place for them in an emergency it’s a lot less bad? But still horribly bad.

Mason: It gives me some comfort to know that if our kids were ever removed by CPS there would be multiple close relatives we trust clamoring to take them on literally zero notice.

That so many of these kids have nobody like this, not one loving soul, is emblematic of a greater failing.

When I was very little my mom was accused of inviting men over to sexually abuse me, by a mentally ill babysitter she had fired.

Obviously traumatic for all of us, but what do you do, not investigate that? I was never put in foster care, there was family. Small blessings.

Consider the discussion Scott Alexander has in his review of Bryan Caplan’s Selfish Reasons to Have More Kids.

You want to tell your kids, go out and play, be home by dinner, like your father and his father before him. But if you do, or even if you tell your kids to walk the two blocks to school, eventually a policeman will show up at your house and warn you not to do it again, or worse. And yes, you’ll be in the right legally, but what are you going to do, risk a long and expensive legal fight? So here we are, and either you supervise your kids all the time or say hello to a lot of screens.

It’s not a bug, it’s a feature.

Lenore Skenazy: TOTAL CONTROL OF CHILDHOOD

Parents Mag’s “Top Pick” for a kid’s “1st Phone” lets parents “monitor all incoming & outgoing text messages…track location & GET ALERTS ANY TIME THEIR CHILD LEAVES A SPECIFIED LOCATION ZONE.”

My First Ankle Monitor!

Parents Magazine: The parental controls available through Pixel’s Caregiver Portal are particularly impressive. They allow parents to approve contacts and app downloads, monitor all incoming and outgoing text messages (including images), track their child’s location, and get alerts any time their child leaves a specified location zone.

“I really liked that feature and that strangers can’t contact my kids, even their friends, unless I approve them.” —Jessica, mom of four

That’s unfortunately what parents want these days, partly for fear of law enforcement. And as this post documents, that fear of law enforcement is highly reasonable.

In situations like this, it is not obvious in which direction various powers go.

The powers can be used for good, even from a freedom perspective, in several important ways.

- If you can track location, and especially if you get alerted when they stray, then you can give the child much wider freedom of movement, knowing they cannot get meaningfully lost, and without worrying that something happened to them or that you’ll never know where they went.
- Your ability to track location is a defense against law enforcement, or against other paranoid adults.
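The “alert when the child leaves a specified location zone” feature is, at its simplest, a geofence check. How the Pixel Caregiver Portal actually implements it is not described here; this is a hypothetical Python sketch assuming circular zones (a center point plus a radius) and straight-line distance via the haversine formula. The names `Zone`, `haversine_m` and `should_alert` are illustrative only.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Zone:
    """Hypothetical circular 'allowed' zone: a center point plus a radius."""
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_alert(zones: list[Zone], lat: float, lon: float) -> bool:
    """Alert only if the position is outside every allowed zone.

    With no zones configured, every position triggers an alert.
    """
    return all(haversine_m(z.lat, z.lon, lat, lon) > z.radius_m for z in zones)
```

Allowing several zones at once (home, school, a friend’s house, the park) is what lets this kind of feature widen a child’s range rather than narrow it.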

The other abilities are similarly double-edged swords, and of course you don’t have to use your powers if you do not want them. The tricky one is monitoring messages: you will be very tempted to use it, the kid will know this, which means they don’t have a safe space, and in any case you know they can just make a phone call. I don’t love this part.

I can see saying you want to only monitor images, since that’s a lot of the potential threat model and relatively less of what needs to be private.

An actually good version is likely to use AI here. An AI should be able to detect inappropriate images, and if desired flag potentially scary text interactions, and only alert the parents if something is seriously wrong, without otherwise destroying privacy. That’s what I would want here.
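The “only alert the parents if something is seriously wrong” design amounts to a threshold policy over classifier scores. This Python sketch is hypothetical: it assumes some image or text classifier (unspecified) has already attached a risk score between 0 and 1 to each message, and the threshold is an arbitrary placeholder. What it illustrates is that parents see only short summaries of flagged items, never the message bodies.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    kind: str          # "text" or "image"
    body: str          # never surfaced to parents by parent_alerts
    risk_score: float  # assumed output of some classifier, in [0, 1]


ALERT_THRESHOLD = 0.9  # placeholder value; a real system would need careful tuning


def parent_alerts(messages: list[Message], threshold: float = ALERT_THRESHOLD) -> list[str]:
    """Return short summaries for high-risk messages only.

    Message bodies are deliberately left out: parents learn who sent
    something concerning and what kind of item it was, nothing more.
    """
    return [
        f"High-risk {m.kind} from {m.sender}"
        for m in messages
        if m.risk_score >= threshold
    ]
```

Everything below the threshold stays private, which is the trade the paragraph above argues for.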

I actively think it’s good to require approval on app downloads and contacts (and to then only let them message and call contacts), at whatever time you think they’re first ready for a phone – there’s clearly a window where you wouldn’t otherwise want them to have a phone, and this makes it workable. Later of course you will want to no longer have such powers.

New York City continues to close playgrounds on the slightest provocation, in this case citing ‘icy conditions’ when it was 45 degrees out. Liz Wolfe calls this NYC ‘hating its child population,’ and notes that they tried to fine her when she tried to open a padlocked playground by hopping the fence.

I wouldn’t go that far, and I presume fear of lawsuits is playing a big part in these decisions. There has to be a way to deal with that. The obvious solution is to pass laws allowing the city to post an ‘at your own risk’ sign when conditions are questionable, but the courts have a nasty habit of not letting that kind of thing work – we really should do whatever it takes to fix that, however far up the chain that requires.

This suggests that perhaps the correct legal strategy is to padlock the playground, if legally necessary, and then, if parents get around the padlock, let them? Alternatively, perhaps the city simply hadn’t gotten around to reopening the playground yet, which would be a pretty terrible failure to prioritize: the value lost is very high and you still have to reopen it later. That is all the more reason to look the other way.

Liz notes that NYC’s under 5 population has fallen 18% since April 2020. I still think NYC is actually a pretty great place to raise kids if you can afford to do it, and I haven’t found the playgrounds to be closed that often when you actually want them to be open, but I certainly understand why people decide to leave.

Thread where Emmett Shear asks how our insane levels of safetyism and not letting kids exist without supervision could have so quickly come to pass.

Rowan: I found out yesterday that one of my coworkers was briefly separated from their parents as a child by CPS because a neighbor found out he was walking two blocks to school every day

Tetraspace: this is the kind of egregious thing that right-wing tetratopians really emphasise on their anti-earth propaganda. But the propaganda is, like, really well cited.

The safety arms race is related to and overlaps with the time investment arms race.

Cartoons Hate Her: I think there’s a parental safety arms race happening where a fringe group decides something is super dangerous, slightly less crazy people are convinced, and within 5 years so few people are doing it that people call the cops on you for it.

I am probably one of the crazy people btw. But I’m self aware!

Like at some point we will be in a place where parents are having CPS called on them for letting their kid sleep at a friend’s house.

This already happened with letting your kids walk to school btw

Even if you pass a ‘free range child’ or similar law requiring sanity, that largely doesn’t solve the problem unless the police actually respect that law when someone complains. Based on the anecdata I have, the police will frequently ignore that what you are doing is legal and turn your situation into a nightmare anyway. And here’s Scott Alexander with another example beyond what I was referring to above:

Scott Alexander: I live next to a rationalist group house with several kids. They tried letting their six-year old walk two blocks home from school in the afternoon. After a few weeks of this, a police officer picked up the kid, brought her home, and warned the parents not to do this.

The police officer was legally in the wrong. This California child abuse lawyer says that there are no laws against letting your kid play (or walk) outside unsupervised. There is a generic law saying children generally need “adequate” supervision, but he doesn’t think the courts would interpret this as banning the sort of thing my friends did.

Still, being technically correct is cold comfort when the police disagree.

Even if you can eventually win a court case, that takes a lot of resources – and who’s to say a different cop won’t nab you next time? To solve the problem, seven states (not including California) have passed “reasonable childhood independence” laws, which make it clear to policemen and everyone else that unsupervised play is okay. There is a whole “free range kids” movement (its founder, Lenore Skenazy, gets profiled in SRTHMK) trying to win this legal and cultural battle.

Exactly. Scott’s proposed intervention of providing evidence of the law is fun to think about, and the experiment is worth running, but seems unlikely to work in practice.

The time investment arms race is totally nuts; time spent by fathers is massively up too.

Again from Roundup #3:

RFH: The amount of time women are spending with children today is historically unprecedented and making both women and children insane.

Working moms today spend more time on childcare than housewives did in the 50s and no one seems to think that this is a serious problem and likely contributing to women no longer wanting to be moms, the workload and the pressure of motherhood has gotten out of control.

Zvi: If this was because the extra time brought joy, that would make sense. It isn’t (paper), at least not the extra time that happens when the mother is college-educated.

They can handle a lot more than people think, remarkably often.

Not all 12-year-olds, and all that, but yes. This is The Way, all around. Don’t simply not arrest the parents if the kids walk to the store; also let the kids do actual real things.

In You Endohs: Just overheard a father explaining that it’s better to start a casino than a restaurant given the stronger revenue model—but that casinos are harder to set up from a regulatory perspective—to his FIVE YEAR OLD SON.

Emma Steuer: This is literally why I know everything about everything. Starting from infancy my dad would just talk to me like a regular person. I’m pretty sure I know everything he knows at this point

In You Endohs: Oh it rocks.

Henrik Karlsson: I’ve never understood why not everybody talks to kids this way, they love it.

I’ve seen exceptions, yeah. But they are quite rare ime.

When I worked at the art gallery, we had a 19-yr-old do an internship with us, which my boss handled, and I brought along a 12-yr-old as my intern. My boss put the 19-yr-old in the café and complained it was so hard to deal with kids. I taught the 12-yr-old to do our accounting.

I literally can’t understand why ppl behave so weirdly around kids. They are just slightly smaller humans. They like to be useful, they understand things well if you just explain it with enough context. They are fun to have around when you work.

Adam: I could teach a 13 year old to be a capable CAD architectural draftsman. Probably be a project manager by 18.

I mean, yeah, don’t start a restaurant, that never works out. Kids need to know.

Most of all:

Because that’s what they are. People. Some people take this too far. Only treat kids as peers in situations where that makes sense for that situation and that kid, but large parts of our society have gone completely bonkers in the other direction. For example:

Nicolas Decker: I’m ngl I find this sort of thing disgusting. God forbid someone treat a minor like a human being.

[This is from MIT of all places].

Kelsey Piper: I also worry that if you tell all adults with good intentions to absolutely never treat teenagers as peers, then the only people who are willing to treat them like peers are those with sketchy intentions.

Teenagers have a very strong desire to be intellectually respected and treated as peers and “no, never do that, because they value it so highly they can be vulnerable to adults who offer it” strikes me as the wrong way to respond

Sarah Constantin: yeesh this is from MIT?

Sufficiently talented minors *are* adults’ peers at intellectual work. they deserve to be real collaborators! Starting to think it’s a red flag for…something when adults repress any identification with “what I would have wanted when I was a kid/teen.”

I have heard enough different stories about MIT letting us down exactly in places where you’d think ‘come on it’s MIT’ that I worry it’s no longer MIT. The more central point is that peers are super great, confidants are great, and this is yet another example of taking away the superior free version and forcing us to pay for a formalized terrible shadow of the same thing.

How do we more generally enable people to have kids without their lives having to revolve around those kids? How do we lower the de facto obligations for absurd amounts of personalized attention for them?

The obvious first thing is that we used to normalize kids being in all sorts of places, whereas now if you take your kids to almost anything, people at best look at you like you’re crazy. We need to find a way to have them stop doing that, or to not care.

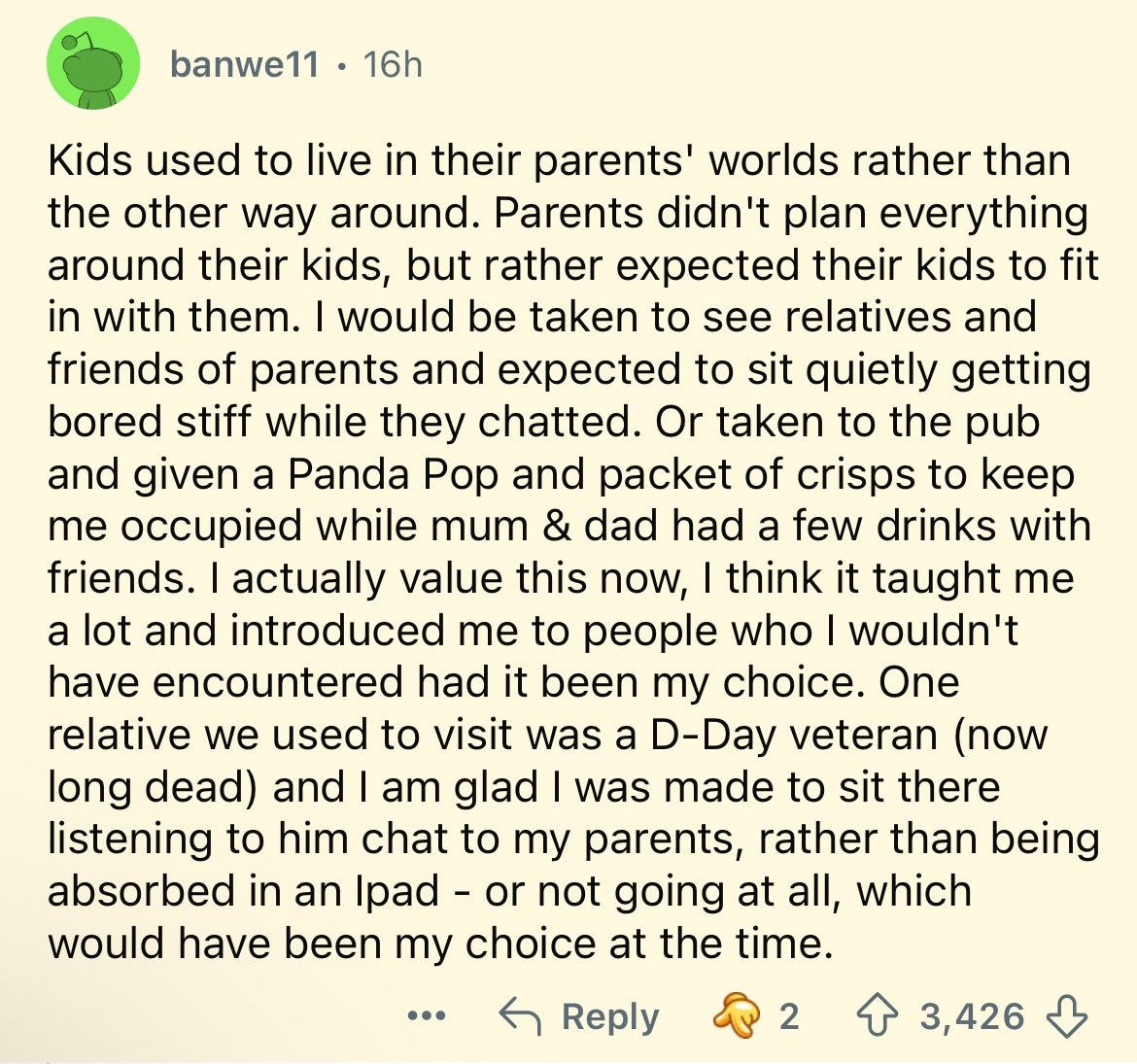

Salad Bar Fan: Comment I saw on Reddit that caught my attention. I recall reading a post by Scott Sumner echoing the same sentiment of there being less mixing of adults and kids in his childhood with each operating in their own little world apart from one another.

Pamela Hobart: Things revolving around the kids seemed to happen almost automatically by the time we had 3 under age 4.

I’ve never done things like cook separate meals or refuse hiring a babysitter to go out w/o kids. But just bringing them along to whatever the adults want to do is really hard and not always acceptable to others. What specifically made this attitude easier in the past? Just that there were so many more kids?

Like a few weeks ago I had to bring my 7 and 5yos to the tax office to renew an auto registration, it was midday on a Wednesday. They were home on break from private school i.e. not the spring break most families had.

Bunch of old folks in there glaring at me like they’d never seen a kid before?

The catch is that this also relies on the children being able to handle it. My understanding is that we used to focus less attention on children and to enforce behavior codes on them that were there to benefit adults rather than the children. That got kids used to being bored and having nothing to do, and also used to playing on their own.

While I don’t fully want to go back to that amount of boredom, I do think that it would be a fair and net worthwhile trade to have kids accept far more ‘bored time,’ or ‘here at an adult event they don’t fully understand and have to behave time,’ and to normalize that as good and right. We used to be willing to trade a ton of kid bored time to save adult time; now we do the opposite. We need middle ground.

Phones in schools is beyond the scope of today’s post, but overall screen time is not. Screen time is one of the few ways to reduce the time burden on caregivers.

It’s another day, and the same screens moral panic we’ve been having for a long time?

Roon: Another day, another moral panic.

Sebastiaan de With: This shit is evil. Plain and simple. I feel like we’ve crossed a point where we have stopped calling out others doing work that’s simply a huge harm to mankind and it’s time for that to change.

Felix (quoting): It’s audience research day at Moonbug Entertainment, the London company that produces 29 of the most popular online kids’ shows in the world, found on more than 150 platforms in 32 languages and with 7.8 billion views on YouTube in March alone. Once a month, children are brought here, one at a time, and shown a handful of episodes to figure out exactly which parts of the shows are engaging and which are tuned out.

For anyone older than 2 years old, the team deploys a whimsically named tool: the Distractatron.

It’s a small TV screen, placed a few feet from the larger one, that plays a continuous loop of banal, real-world scenes — a guy pouring a cup of coffee, someone getting a haircut — each lasting about 20 seconds. Whenever a youngster looks away from the Moonbug show to glimpse the Distractatron, a note is jotted down.

We have had a moral panic about screens in one form or another since we had screens, as in television.

I reiterate, once again, that this panic was and is essentially correct. The TV paranoia was correct: television brought great advantages, but the warnings about the ‘idiot box,’ ‘couch potatoes,’ the ‘boob tube,’ crowding out other activities and so on were very much not wrong.

Modern screens have even larger downsides and dangers, and most of what is served to our kids is utter junk optimized against them, which is what they will mostly choose if left alone to do so.

Even when you are careful about what they watch, and it is educational and reasonable, there are still some rather nasty addictive behaviors to watch out for.

One can imagine ‘good’ versions of all this tech, but right now it doesn’t exist. It seems crazy that it doesn’t exist? Shouldn’t someone sell it? Properly curated experiences that gave parents proper control and steered children in good ways seem super doable, and would have a very large market with high willingness to pay. The business model is different, but also kind of obvious?

Is it going to be fine the way it is? Yeah, sure, for some value of ‘fine.’ And with AI I’m actually net optimistic things will mostly get better on this particular front. But yeah, Cocomelon is freaking scary, a lot of YouTube is far worse than that, giving children access to tablets and phones early will reliably get them addicted and cause big issues, and pretending otherwise is folly.