The Tea app is or at least was on fire, rapidly gaining lots of users. This opens up two discussions, one on the game theory and dynamics of Tea, one on its abysmal security.

It’s a little too on the nose that a hot new app that purports to exist so that women can anonymously seek out and spill the tea on men then puts user information into an unprotected dropbox, thus spilling the tea on the identities of many of its users.

In the circles I follow this predictably led to discussions about how badly the app was coded and incorrect speculation that this was linked to vibe coding, whereas the dumb mistakes involved were in this case fully human.

There was also some discussion of the game theory of Tea, which I found considerably more interesting and fun, and which will take up the bulk of the post.

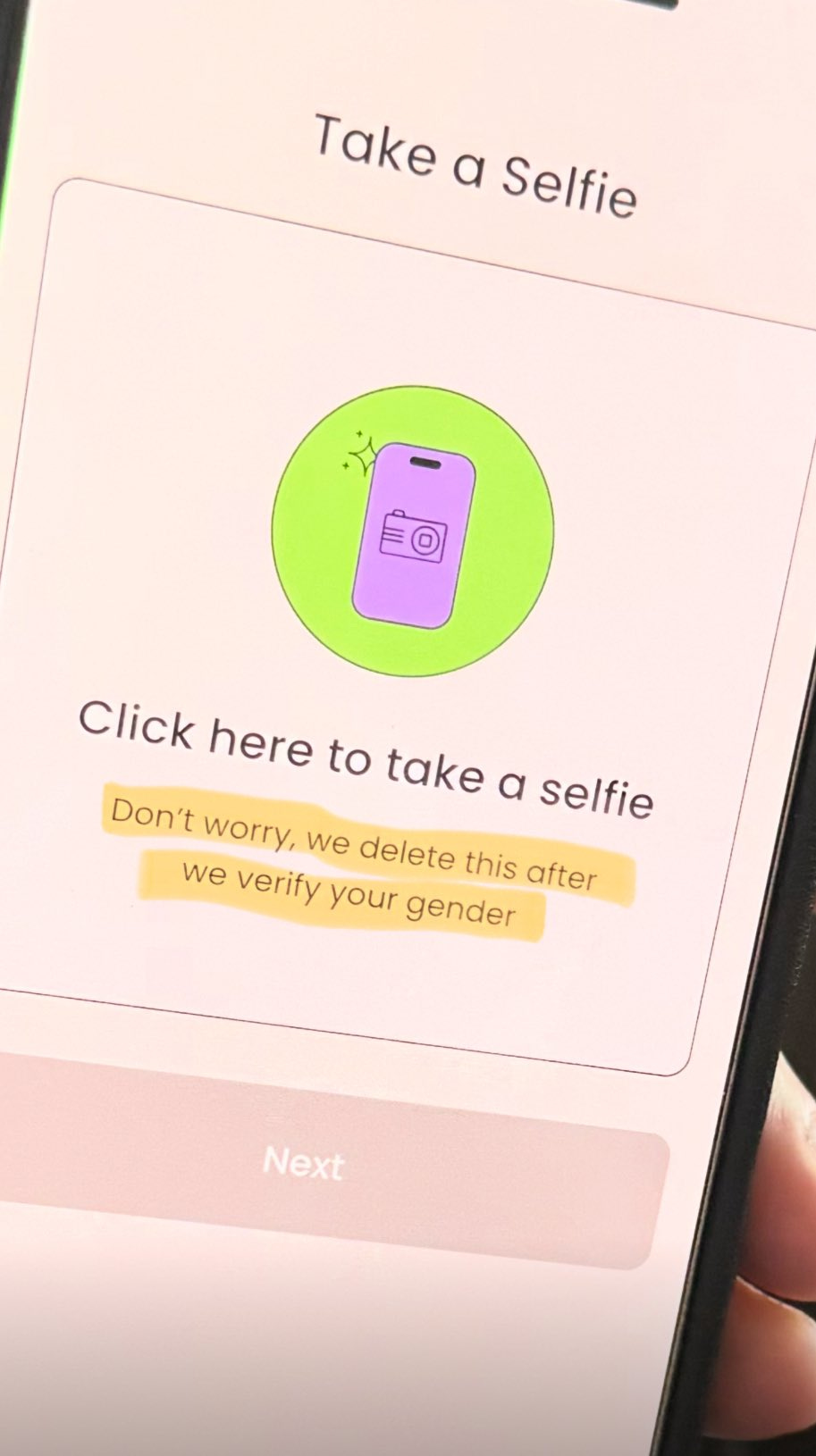

Tea offers a variety of services while attempting to gate itself to only allow in women (or at least, not cis men) and to only allow discussion and targeting of men, although working around the gate is clearly not hard if a man wanted to do that.

Some of this is services like phone number lookup, social media and dating app search, reverse image internet search and criminal background checks. The photo you give is checked against catfishing databases. Those parts seem good.

There’s also generic dating advice and forums within the app, sure, fine.

The central feature is that you list a guy with a first name, location and picture – which given AI is pretty much enough for anyone these days to figure out who it is even if they don’t recognize them – and ask ‘are we dating the same guy?’ and about past experiences, divided into green and red flag posts. You can also set up alerts on guys in case there is any new tea.

What’s weird about ‘are we dating the same guy?’ is that the network effects required for that to work are very large, since you’re realistically counting on one or at most a handful of other people in the same position asking the same question. And if you do get the network big enough, search costs should then be very high, since reverse image search on a Facebook group is highly unreliable. It’s kind of amazing that the human recognition strategies people mostly use here worked at all in populated areas without proper systematization.

Tea provides much better search tools including notifications, which gives you a fighting chance, and one unified pool. But even with 4.6 million women, the chances of any given other woman being on it at all are not so high, and they then have to be an active user or have already left the note.
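To put rough numbers on that, here is a minimal back-of-the-envelope sketch. The 4.6 million figure is the one quoted above. Everything else (the size of the relevant pool `TOTAL_WOMEN`, the share of accounts that are active `ACTIVE_SHARE`, and the number of other women involved) is a made-up assumption for illustration, not data, and the independence assumption is a simplification.

```python
def chance_of_a_hit(users, total_women, active_share, others):
    """Chance that at least one of `others` women is an active user.

    Assumes each woman is independently on the app with the same
    probability, which is a simplification.
    """
    p_active = (users / total_women) * active_share
    return 1 - (1 - p_active) ** others

# 4.6 million users is from the post. Everything else is assumed.
USERS = 4_600_000
TOTAL_WOMEN = 100_000_000   # hypothetical pool of potential users
ACTIVE_SHARE = 0.5          # hypothetical fraction who post or search

for others in (1, 3):
    p = chance_of_a_hit(USERS, TOTAL_WOMEN, ACTIVE_SHARE, others)
    print(f"{others} other woman/women: {p:.1%} chance of a hit")
```

Under these made-up inputs the chance of a hit stays in the single digits, which is the point: the pool has to be much denser, or much more local, before ‘are we dating the same guy?’ reliably finds anyone.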

When I asked Claude about this it suggested the real win was finding Instagram or LinkedIn profiles, and that indeed makes a lot more sense. That’s good information, and it’s also voluntarily posted so it’s fair game.

Using a Hall of Shame seems even more inefficient. What, you are supposed to learn who the bad men are one by one? None of this seems like an effective use of time, even if you don’t have any ethical or accuracy concerns. This can’t be The Way, not outside of a small town or community.

The core good idea of the mechanics behind Tea is to give men Skin In The Game. The ideal amount of reputation that gets carried between interactions is not zero. The twin problems are that the ideal amount has an upper bound, and that those providing that reputation also need Skin In The Game. Gossip only works if there are consequences for spreading false gossip, and here those consequences seem absent.

What happens if someone lies or otherwise abuses the system? Everything is supposedly anonymous and works on negative selection. The app is very obviously ripe for abuse, all but made for attempts to sabotage or hurt people, using false or true information. A lot of what follows will be gaming that out.

The moderation team has a theoretical zero tolerance policy for defamation and harassment when evidence is provided, but such things are usually impossible to prove and the bar for actually violating the rules is high. Even if a violation is found and proof is possible, and the mod team would be willing to do something if proof was provided, if the target doesn’t know about the claims how can they respond?

Even then there don’t seem likely to be any consequences to the original poster.

Shall we now solve for the equilibrium, assuming the app isn’t sued into oblivion?

While tea is small and relatively unknown, the main worries (assuming the tools are accurate) are things like vindictive exes. There’s usually a reason when that happens, but there are going to be some rather nasty false positives.

As tea gets larger, it starts to change incentives in both good and bad ways. There are good reasons to start to try to manage, manipulate or fool the system, and things start to get weird. Threats and promises of actions within tea will loom in the air on every date and in every relationship. Indeed in every interaction, essentially any woman (and realistically also any man) could threaten to spill tea, truthfully or otherwise, at any time.

Men will soon start asking for green flag posts, both accurate ones from exes and very much otherwise. Services to do this will spring up, and dummy accounts will be used where men praise themselves.

Men will absolutely at minimum need to know what is being said, set up alerts on themselves, run all the background checks to see what will come up, and work to change the answer to that if it’s not what they want it to be. Presumably there will be plenty happy to sell you this service for very little, since half the population can provide such a service at very low marginal cost.

Quickly word of the rules of how to sculpt your background checks will spread.

And so on. It presumably will get very messy very quickly. The system simultaneously relies on sufficient network effects to make things like ‘are we dating the same guy?’ work, and devolves into chaos if usage gets too high.

One potential counterforce is that it would be pretty bad tea to have a reputation of trying to influence your tea. I doubt that ends up being enough.

At lunch, I told a woman that Tea exists and explained what it was.

Her: That should be illegal.

Her (10 seconds later): I need to go there and warn people about [an ex].

Her (a little later than that, paraphrased a bit): Actually no. He’s crazy, who knows what he might do if he found out.

Her (after I told her about the data breach): Oh I suppose I can’t use it then.

There is certainly an argument in many cases including this one for ‘[X] should be illegal but if it’s going to be legal then I should do it,’ and she clarified that her opposition was in particular to the image searches, although she quickly pointed out many other downsides as well.

The instinct is that all of this is bad for men.

That seems highly plausible but not automatic or obvious.

Many aspects of reputation and competition are positional goods and have zero-sum aspects in many of the places that Tea is threatening to cause trouble. Creating safer and better informed interactions and matches can be better for everyone.

More generally, punishing defectors is by default very good for everyone, even if you are sad that it is now harder for you to defect. You’d rather be a good actor in the space, but previously in many ways ‘bad men drove out good,’ placing pressure on you to not act well. It also means that all this allows women to feel safe and let their guard down, and so on. A true ‘safety app’ is a good thing.

It could also motivate women to date more and use the apps more. It’s a better product when it is safer, far better, so you consume more of it. If no one has yet hooked the dating apps up automatically to tea so that you can get the tea as you swipe, well, get on that. Thus it can also act as a large improvement on matching. No, you don’t match directly on tea, but it provides a lot of information.

Another possible advantage is that receptivity to this could provide positive selection. If a woman doesn’t want to date you because of unverified internet comments, that is a red flag, especially for you in particular, in several ways at once. It means they probably weren’t that into you. It means they sought out and were swayed by the information. You plausibly dodged a bullet.

A final advantage is that this might be replacing systems that are less central and less reliable and that had worse enforcement mechanisms, including both groups and also things like whisper networks.

Consider the flip side, an app called No Tea, that men could use to effectively hide their pasts and reputations and information, without making it obvious they were doing this. Very obviously this would make even men net worse off if it went past some point.

As in, even from purely the man’s perspective: The correct amount of tea is not zero.

There are still six groups of ways I could think of that Tea could indeed be bad for men in general at current margins, as opposed to bad for men who deserve it, and it is not a good sign that over the days I wrote this the list kept growing.

1. Men could in general find large utility in doing things that earn them very bad reputations on tea, and be forced to stop.
   - This is highly unsympathetic, as they mostly involve things like cheating and otherwise treating women badly. I do not think those behaviors in general make men’s lives better, especially as a group.
   - I also find it unlikely that men get large utility in absolute terms from such actions, rather than getting utility in relative terms. If you can get everyone to stop, I think most men win out here near current margins.
2. Women could be bad at the Law of Conservation of Expected Evidence. As in, perhaps they update strongly negatively on negative information when they find it, but do not update appropriately positively when such information is not found, and do not adjust their calibration over time.
   - This is reasonably marketed as a ‘safety app.’ If you are checked and come back clean, that should make you a lot safer and more trustworthy. That’s big.

     The existence of the app also updates expectations, if the men know that the app exists and that they could end up on it.
   - In general, variance in response is your friend so long as the downside risk stops at a hard pass. You only need one yes, also you get favorable selection.
   - Also, this could change the effective numerical dynamics. If a bunch of men become off limits due to tea, especially if that group often involves men who date multiple women at once, the numbers game can change a lot.
3. Men could be forced to invest resources into reputation management in wasteful or harmful ways, and spend a lot of time being paranoid. This may favor men willing to game the system, or who can credibly threaten retaliation.
   - This seems highly plausible, hopefully this is limited in scope.
   - The threat of retaliation issue seems like a potentially big deal. The information will frequently get back to the target, and in many cases the source of the information will be obvious, especially if the information is true.
   - Ideally the better way to fix your reputation is to deserve a better one, but even then there would still be a lot of people who don’t know this, or who are in a different situation.
4. Men could face threats, blackmail and power dynamic problems. Even if unstated, the threat to use tea, including dishonestly, looms in the air.
   - This also seems like a big problem.
   - Imagine dating, except you have to maintain a 5-star rating.
   - In general, you want to seek positive selection, and tea risks making you worry a lot about negative selection, well beyond the places you actually need to worry about that (e.g. when you might hurt someone for real).
   - The flip side is this could force you to employ positive selection? As in, there are many reasons why avoiding those who create such risks is a good idea.
5. Men might face worse tea prospects the more they date, if the downside risk of each encounter greatly exceeds the upside. Green flags are rare and not that valuable, red flags can sink you. So confidence and boldness decline, the amount of dating and risk taking and especially approaching goes down.
   - We already have this problem pretty bad based on phantom fears. That could mean it gets way worse, or that it can’t get much worse. Hard to say.
   - If you design Tea or users create norms such that this reverses, and more dating gets you a better Tea reputation so long as you deserve one, then that could be a huge win.
   - It would be a push to put yourself out there in a positive way, and gamify things providing a sense of progress even if someone ultimately wasn’t a match, including making it easier to notice this quickly and move on, essentially ‘forcing you into a good move.’
6. It’s a massive invasion of privacy, puts you at an informational disadvantage, and it could spill over into your non-dating life. The negative information could spread into the non-dating world, where the Law of Conservation of Expected Evidence very much does not apply. Careers and lives could plausibly be ruined.
   - This seems like a pretty big and obvious objection. Privacy is a big deal.
   - What is going to keep employers and HR departments off the app?

MJ: this is straight up demonic. absolutely no one should be allowed to create public profiles about you to crowdsource your deeply personal information and dating history.

People are taking issue with me casually throwing out the word “demonic.” so let me double down. The creators of this app are going to get rich off making many decent people depressed and suicidal.

This isn’t about safety. This isn’t just a background check app. Their own promo material clearly markets this as a way to anonymously share unverified gossip and rumors from scorned exes.

Benjamin Foss: Women shouldn’t be allowed to warn other women about stalkers, predators, and cheaters?

MJ: If you think that’s what this app is primarily going to be used for then I have a bridge to sell you.

Definitely Next Year: “Why can’t I find a nice guy?” Because you listened to his psychopathic ex anonymously make stuff up about him.

My current read is that this would all be good if it somehow had strong mechanisms to catch and punish attempts to misuse the system, especially keeping it from spilling over outside of one’s dating life. The problem is I have a hard time imagining how that will work, and I see a lot of potential for misuse that I think will overwhelm the advantages.

Is the core tea mechanic (as opposed to the side functions) good for women? By default more information should be good even if unreliable, so long as you know how to use it, although the time and attention cost and the attitudinal shifts could easily overwhelm that, and this could crowd out superior options.
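‘Knowing how to use it’ is where the Law of Conservation of Expected Evidence mentioned earlier comes in. Here is a minimal sketch with entirely hypothetical numbers (the 10% prior and both red flag rates are assumptions I made up, not estimates). It shows that if finding a red flag should raise your estimate, then finding nothing has to lower it, and the two updates average back out to the prior.

```python
def posteriors(prior, p_flag_if_bad, p_flag_if_ok):
    """Return (P(bad | red flag found), P(bad | nothing found), P(red flag found))."""
    p_flag = prior * p_flag_if_bad + (1 - prior) * p_flag_if_ok
    post_found = prior * p_flag_if_bad / p_flag
    post_clean = prior * (1 - p_flag_if_bad) / (1 - p_flag)
    return post_found, post_clean, p_flag

# All three inputs are hypothetical, chosen only for illustration.
prior = 0.10            # assumed base rate of men you should avoid
p_flag_if_bad = 0.50    # assumed chance such a man has a red flag post
p_flag_if_ok = 0.05     # assumed chance a fine man has one anyway

post_found, post_clean, p_flag = posteriors(prior, p_flag_if_bad, p_flag_if_ok)

# Weighted by how often each outcome happens, the posteriors average to the prior.
expected = p_flag * post_found + (1 - p_flag) * post_clean

print(f"red flag found: {post_found:.3f}")
print(f"nothing found:  {post_clean:.3f}")
print(f"expected posterior: {expected:.3f} (prior was {prior:.3f})")
```

Someone who updates hard on a red flag but stays at the prior after a clean search is not using the information correctly, and the more unreliable the posts are (the closer the two rates), the smaller both updates should be.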

The actual answer here likely comes down to what this does to male incentives. I am guessing this would, once the app scales, dominate the value of improved information.

If this induces better behavior due to reputational concerns, then it is net good. If it instead mainly induces fear and risk aversion and twists dynamics, then it could be quite bad. This is very much not a Battle of the Sexes or a zero sum game. If the men who don’t richly deserve it lose, probably the women also lose. If those men win, the women probably also win.

What Tea and its precursor groups are actually doing is reducing the Level of Friction in this type of anonymous information sharing and search, attempting to move it down from Level 2 (annoying to get) into Level 1 (minimal frictions) or even Level 0 (a default action).

In particular, this moves the information sharing from one-to-one to one-to-many. Information hits different when anyone can see it, and will hit even more different when AI systems start scraping and investigating.

As with many things, that can be a large difference in kind. This can break systems and also the legal systems built around interactions.

CNN has an article looking into the legal implications of Tea, noting that the legal bar for taking action against either the app or a user of the app is very high.

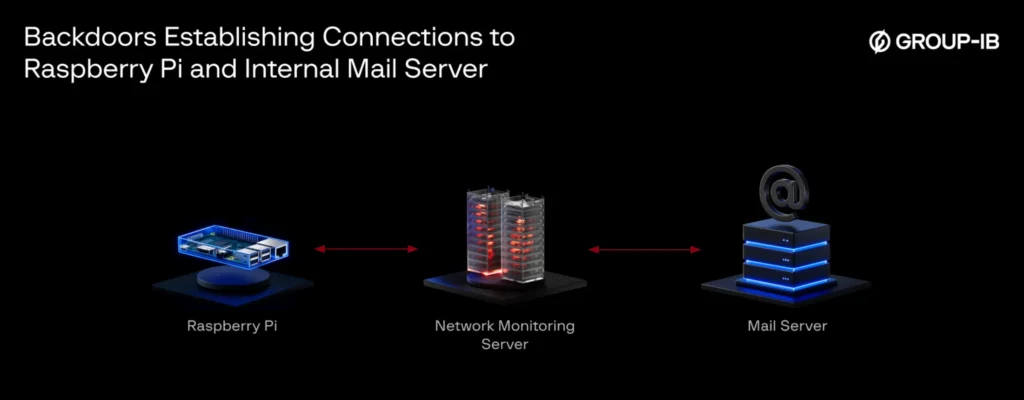

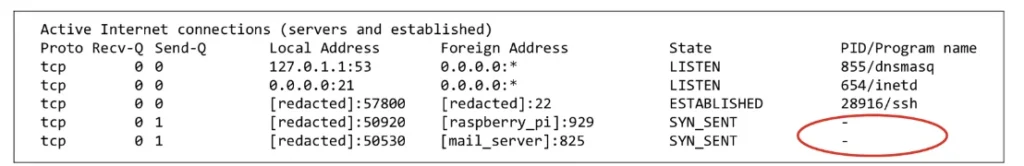

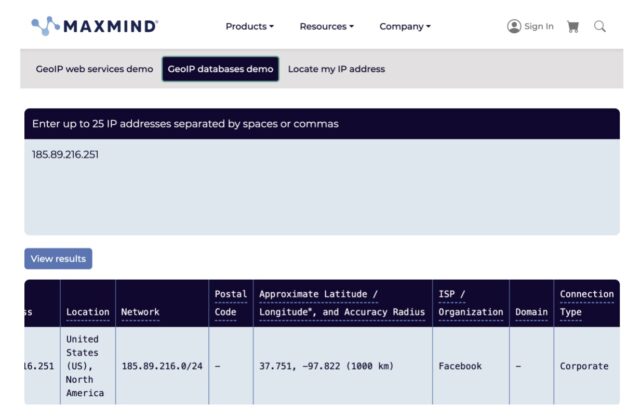

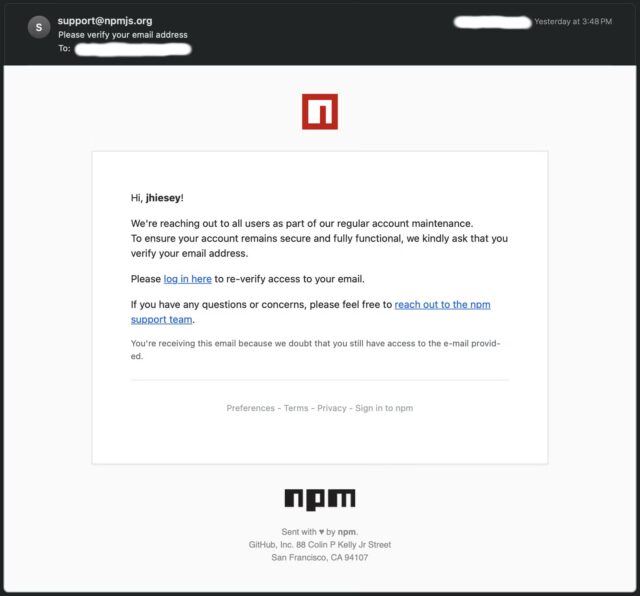

So yes, of course the Tea app whose hosts have literally held sessions entitled ‘spilling the tea on tea’ got hacked to spill its own Tea, as in the selfies and IDs of its users, which includes their addresses.

Tea claimed that it only held your ID temporarily to verify you are a woman, and that the breached data was being stored ‘in compliance with law enforcement requirements related to cyber-bullying.’

Well, actually…

Howie Dewin: It turns out that the “Tea” app DOXXES all its users by uploading both ID and face verification photos, completely uncensored, to a public bucket on their server.

The genius Brazilians over at “Tea” must have wanted to play soccer in the favela instead of setting their firebase bucket to private.

Global Index: Leaked their addresses too 😳

I mean, that’s not even getting hacked. That’s ridiculous. It’s more like ‘someone discovered they were storing things in a public dropbox.’

It would indeed be nice to have a general (blockchain blockchain blockchain? Apple and Google? Anyone?) solution to the problem of proving aspects of your identity without revealing your identity, as in one that people actually use in practice for things like this.

Neeraj Agrawal: If there was ever an example for why need an open and privacy preserving digital ID standard.

You should be able to prove your ID card says something, like your age or in this case your gender, without revealing your address.

Kyle DH: There’s about 4 standards that can do this, but no one has their hands on these digital forms so they don’t get requested and there’s tradeoffs when we make this broadly available on the Web.
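For intuition on how ‘prove one field without revealing the rest’ can work at all, here is a toy sketch using salted hashes plus an issuer signature. This is not the format of any of the standards mentioned above, and every name in it (`ISSUER_KEY`, `issue`, `verify`, the claim names) is hypothetical. In particular, the HMAC with a shared key is only a stand-in so the example runs on the standard library. A real system would use a public-key signature so the verifier never holds the issuer’s secret.

```python
import hashlib
import hmac
import json
import secrets

ISSUER_KEY = b"demo-issuer-key"  # hypothetical; stands in for a real signing key

def digest(salt, name, value):
    """Salted hash of one claim; the salt stops guessing of hidden values."""
    return hashlib.sha256(json.dumps([salt, name, value]).encode()).hexdigest()

def issue(claims):
    """Issuer: salt and hash every claim, then sign the sorted digests."""
    disclosures = {name: (secrets.token_hex(16), value) for name, value in claims.items()}
    digests = sorted(digest(salt, name, value) for name, (salt, value) in disclosures.items())
    signature = hmac.new(ISSUER_KEY, json.dumps(digests).encode(), hashlib.sha256).hexdigest()
    return {"digests": digests, "signature": signature}, disclosures

def verify(credential, name, salt, value):
    """Verifier: check the signature, then check the one revealed claim."""
    expected = hmac.new(
        ISSUER_KEY, json.dumps(credential["digests"]).encode(), hashlib.sha256
    ).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return False
    return digest(salt, name, value) in credential["digests"]

credential, disclosures = issue({"sex": "F", "address": "123 Example St"})
salt, value = disclosures["sex"]          # the holder reveals only this claim
print(verify(credential, "sex", salt, value))
```

The app would receive the credential plus one salt and one value. The address is in there only as a salted hash, so there is nothing useful to leak.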

Tea eventually released an official statement about what happened.

This is, as Lulu Meservey points out, a terrible response clearly focused on legal risk. No apology, responsibility is dodged, obvious lying, far too slow.

Rob Freund: Soooo that was a lie

Eliezer Yudkowsky: People shouting “Sue them!”, but Tea doesn’t have that much money.

The liberaltarian solution: requiring companies to have insurance against lawsuits. The insurer then has a market incentive to audit the code.

And the “regulatory” solution? You’re living it. It didn’t work.

DHH: Web app users would be shocked to learn that 99% of the time, deleting your data just sets a flag in the database. And then it just lives there forever until it’s hacked or subpoenaed.

It took a massive effort to ensure this wasn’t the case for Basecamp and HEY. Especially when it comes to deleting log files, database backups, and all the other auxiliary copies of your stuff that most companies just hang onto until the sun burns out.
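The soft delete pattern being described looks roughly like this. It is a minimal sketch using an in-memory SQLite database, and the `users` table, its columns and the file names are all hypothetical, not anything known about Tea’s actual schema.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, selfie TEXT, deleted INTEGER DEFAULT 0)"
)
db.execute("INSERT INTO users (id, selfie) VALUES (1, 'selfie-1.jpg'), (2, 'selfie-2.jpg')")

# Soft delete: the row is only flagged, so the data is still there.
db.execute("UPDATE users SET deleted = 1 WHERE id = 1")
# Hard delete: the row is actually removed from the table.
db.execute("DELETE FROM users WHERE id = 2")

visible = db.execute("SELECT id FROM users WHERE deleted = 0").fetchall()
stored = db.execute("SELECT id, selfie FROM users").fetchall()
print(visible)  # what the app shows
print(stored)   # what a breach or subpoena would find
```

Both users look gone from inside the app, but only one of them is gone from the table. And as the quote notes, even the hard delete says nothing about logs, backups and other auxiliary copies.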

As for the regulatory solution: I mean, it didn’t work in terms of preventing the leak, but if it bankrupts the company I think I’m fine with that outcome.

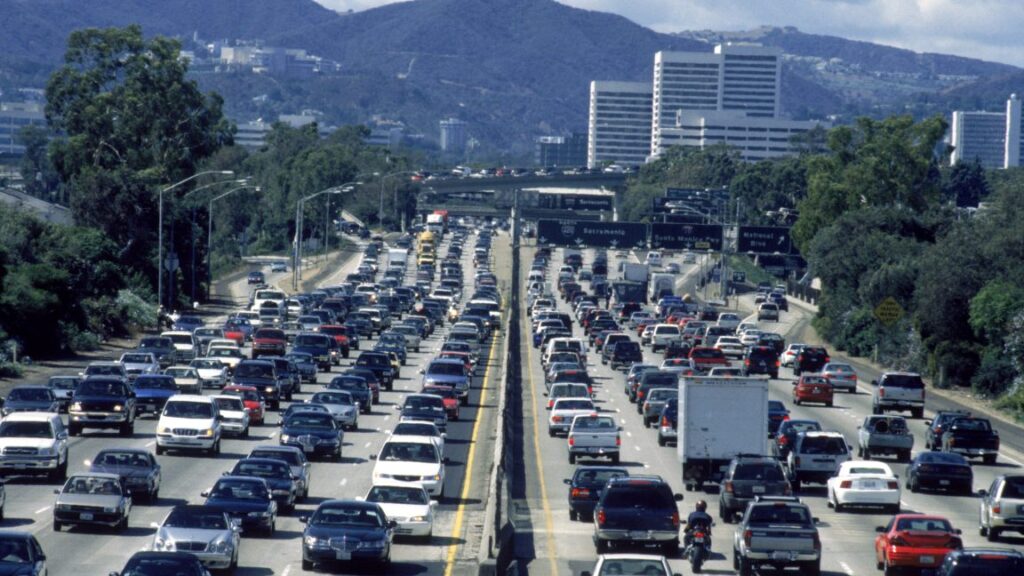

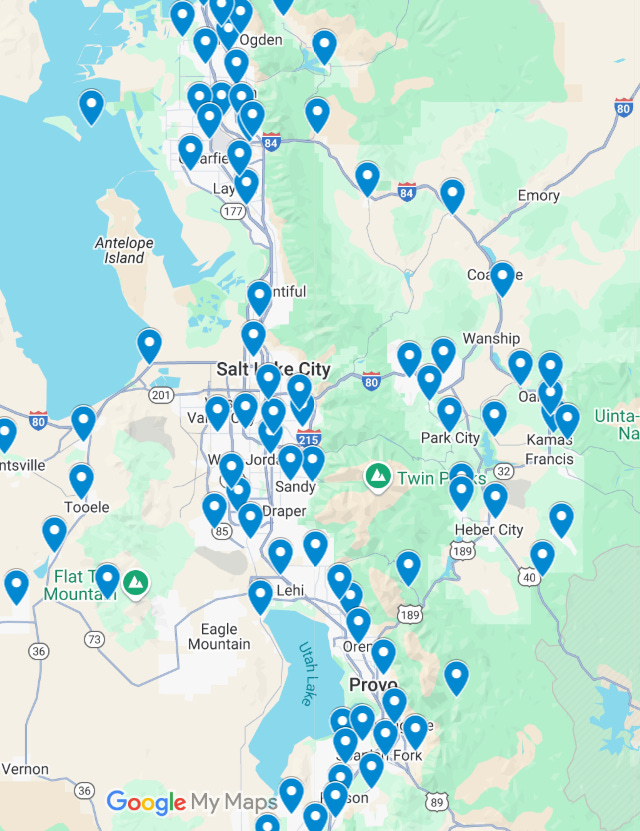

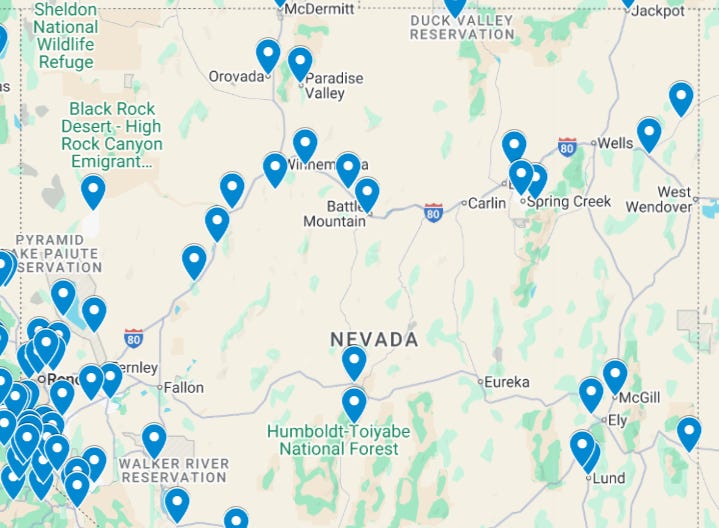

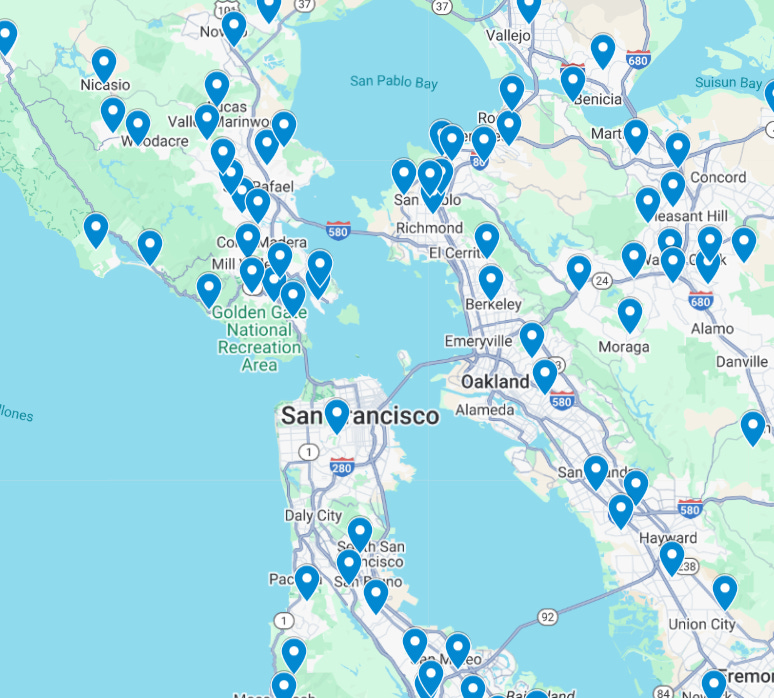

One side effect of the hack is we can get maps. I wouldn’t share individuals, but distributions are interesting and there is a clear pattern.

As in, the more central your location and the more people you live among, the less likely you are to use Tea. That makes perfect sense. The smaller your community, the more useful gossip and reputation are as tools. If you’re living in San Francisco proper, the tea is harder to get and also less reliable due to lack of skin in the game.

Tom Harwood notes that this is happening at the same time as the UK mandating photo ID for a huge percentage of websites, opening up lots of new security issues.

As above, for the question of whether you should use Tea, divide it into its procedural functions and its crowdsourcing function.

On its procedural functions, these seem good if and only if execution of the features is good and better than alternative apps that do similar things. I can’t speak to that. But yeah, it seems like common sense to do basic checks on anyone you’re considering seriously dating.

On the core crowdsourcing functions I am more skeptical.

If I were considering sharing red flags in particular, I would have more serious ethical concerns, especially around invasion of privacy, and would worry that the information could get out beyond his dating life, including coming back to you in various ways.

If you wouldn’t say it to the open internet, you likely shouldn’t be saying it to Tea. To the extent people are thinking these two things are very different, I believe they are making a serious mistake. And I would be very skeptical of the information I did get. But I’m not going to pretend that I wouldn’t look.

If you have deserved green flags to give out? That seems great. It’s a Mitzvah.