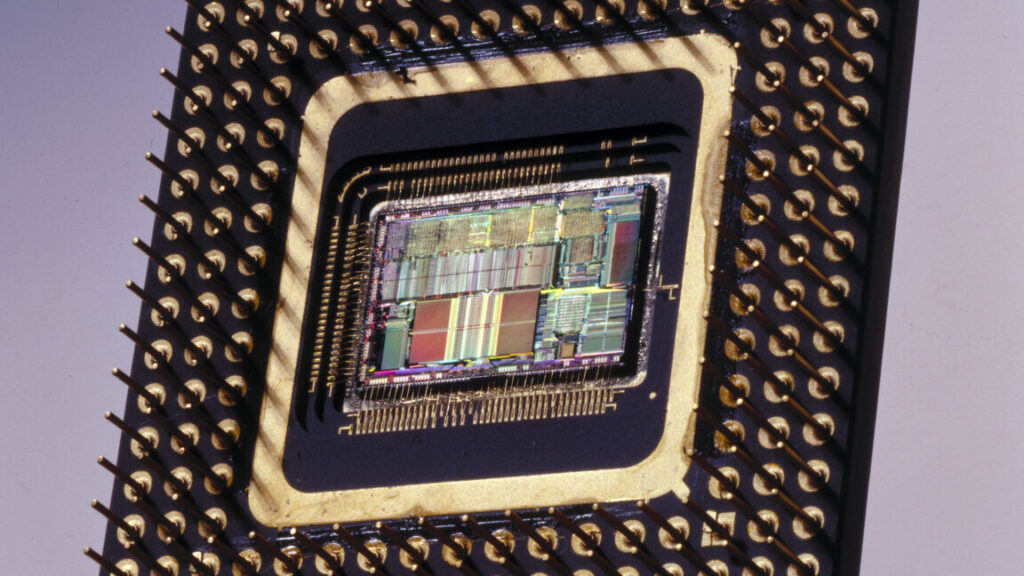

Linux kernel is leaving 486 CPUs behind, only 18 years after the last one made

It’s not the first time Torvalds has suggested dropping support for 32-bit processors and relieving kernel developers from implementing archaic emulation and work-around solutions. “We got rid of i386 support back in 2012. Maybe it’s time to get rid of i486 support in 2022,” Torvalds wrote in October 2022. Failing major changes to the 6.15 kernel, which will likely arrive late this month, i486 support will be dropped.

Where does that leave people running a 486 system for whatever reason? They can run older versions of the Linux kernel and Linux distributions. They might find recommendations for teensy distros like MenuetOS, KolibriOS, and Visopsys, but all three of those require at least a Pentium. They can run FreeDOS. They might get away with the OS/2 descendant ArcaOS. There are some who have modified Windows XP to run on 486 processors, and hopefully, they will not connect those devices to the Internet.

Really, though, if you’re dedicated enough to running a 486 system in 2025, you’re probably resourceful enough to find copies of the software meant for that system. One thing about computers—you never stop learning.

This post was updated at 3:30 p.m. to fix a date error.
