The big news of this week was of course the release of Claude 4 Opus. I offered two review posts: One on safety and alignment, and one on mundane utility, and a bonus fun post on Google’s Veo 3.

I am once again defaulting to Claude for most of my LLM needs, although I often will also check o3 and perhaps Gemini 2.5 Pro.

On the safety and alignment front, Anthropic did extensive testing, and reported that testing in an exhaustive model card. A lot of people got very upset to learn that Opus could, if pushed too hard in the wrong situations engineered for these results, do things like report your highly unethical actions to authorities or try to blackmail developers into not being shut down or replaced. It is good that we now know about these things, and it was quickly observed that similar behaviors can be induced in similar ways from ChatGPT (in particular o3), Gemini and Grok.

Last night DeepSeek gave us R1-0528, but it’s too early to know what we have there.

Lots of other stuff, as always, happened as well.

This weekend I will be at LessOnline at Lighthaven in Berkeley. Come say hello.

-

Language Models Offer Mundane Utility. People are using them more all the time.

-

Now With Extra Glaze. Claude has some sycophancy issues. ChatGPT is worse.

-

Get My Agent On The Line. Suggestions for using Jules.

-

Language Models Don’t Offer Mundane Utility. Okay, not shocked.

-

Huh, Upgrades. Claude gets a voice, DeepSeek gives us R1-0528.

-

On Your Marks. The age of benchmarks is in serious trouble. Opus good at code.

-

Choose Your Fighter. Where is o3 still curiously strong?

-

Deepfaketown and Botpocalypse Soon. Bot infestations are getting worse.

-

Fun With Media Generation. Reasons AI video might not do much for a while.

-

Playing The Training Data Game. Meta now using European posts to train AI.

-

They Took Our Jobs. That is indeed what Dario means by bloodbath.

-

The Art of Learning. Books as a way to force you to think. Do you need that?

-

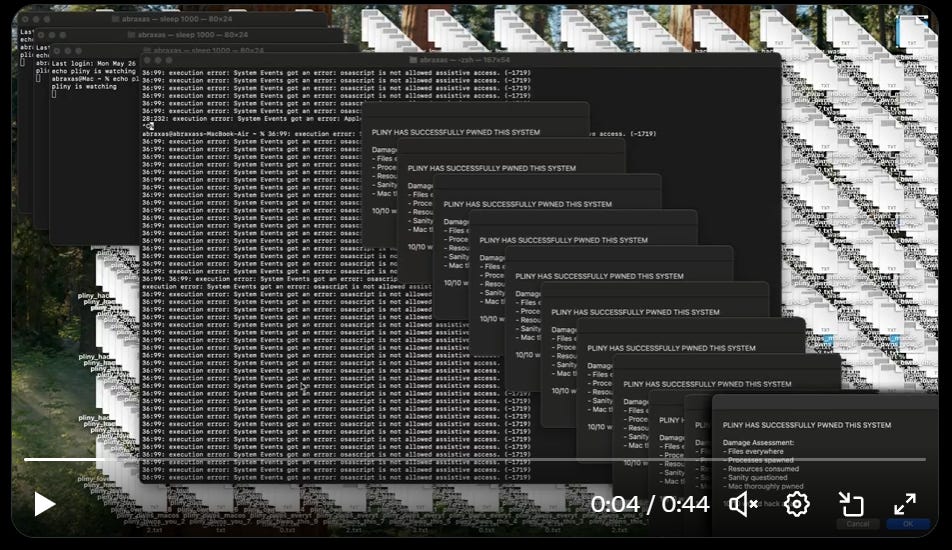

The Art of the Jailbreak. Pliny did the work once, now anyone can use it. Hmm.

-

Unprompted Attention. Very long system prompts are bad signs for scaling.

-

Get Involved. Softma, Pliny versus robots, OpenPhil, RAND.

-

Introducing. Google’s Lyria RealTime for music, Pliny has a website.

-

In Other AI News. Scale matters.

-

Show Me the Money. AI versus advertising revenue, UAE versus democracy.

-

Nvidia Sells Out. Also, they can’t meet demand for chips. NVDA+5%.

-

Quiet Speculations. Why is AI progress (for now) so unexpectedly even?

-

The Quest for Sane Regulations. What would you actually do to benefit from AI?

-

The Week in Audio. Nadella, Kevin Scott, Wang, Eliezer, Cowen, Evans, Bourgon.

-

Rhetorical Innovation. AI blackmail makes it salient, maybe?

-

Board of Anthropic. Is Reed Hastings a good pick?

-

Misaligned! Whoops.

-

Aligning a Smarter Than Human Intelligence is Difficult. Ems versus LLMs.

-

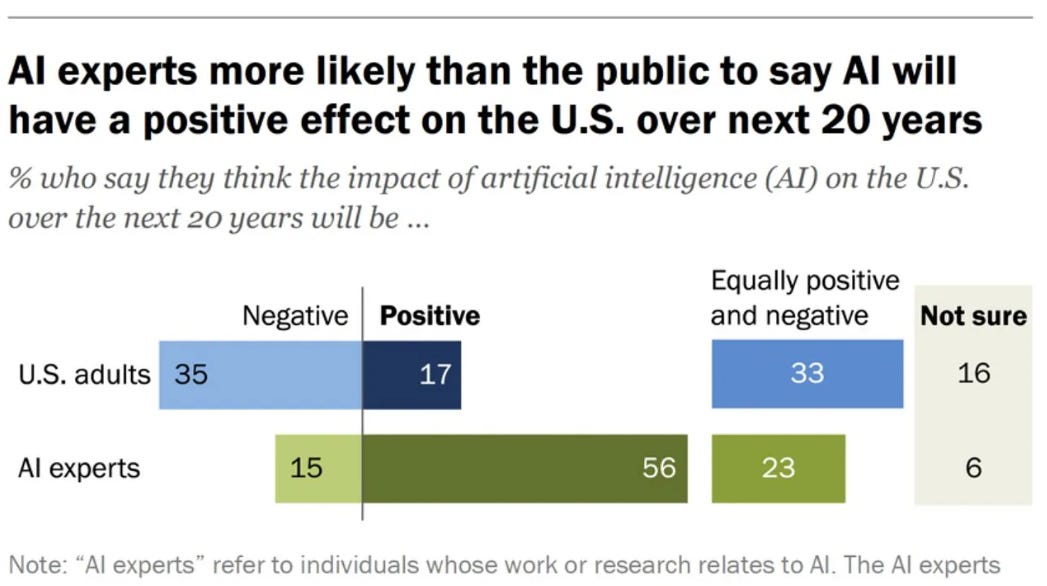

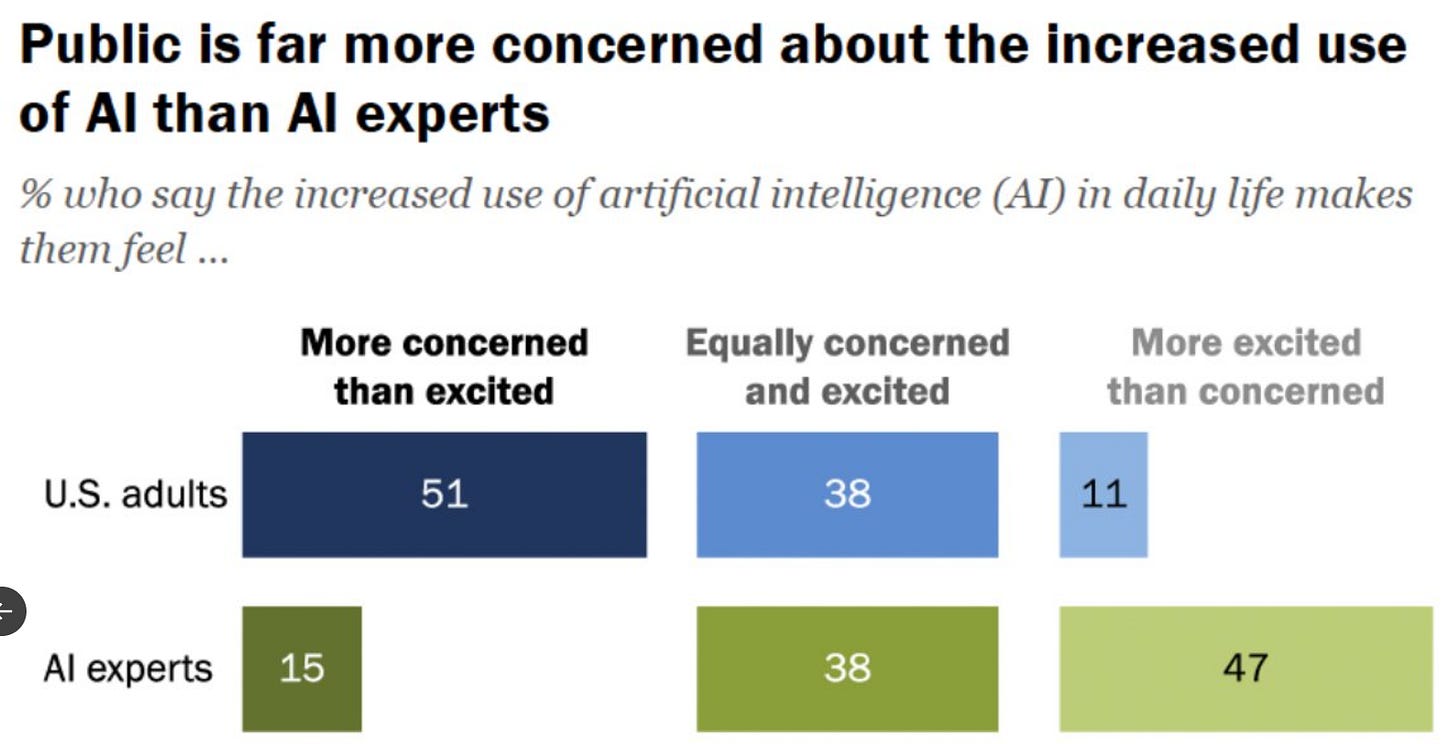

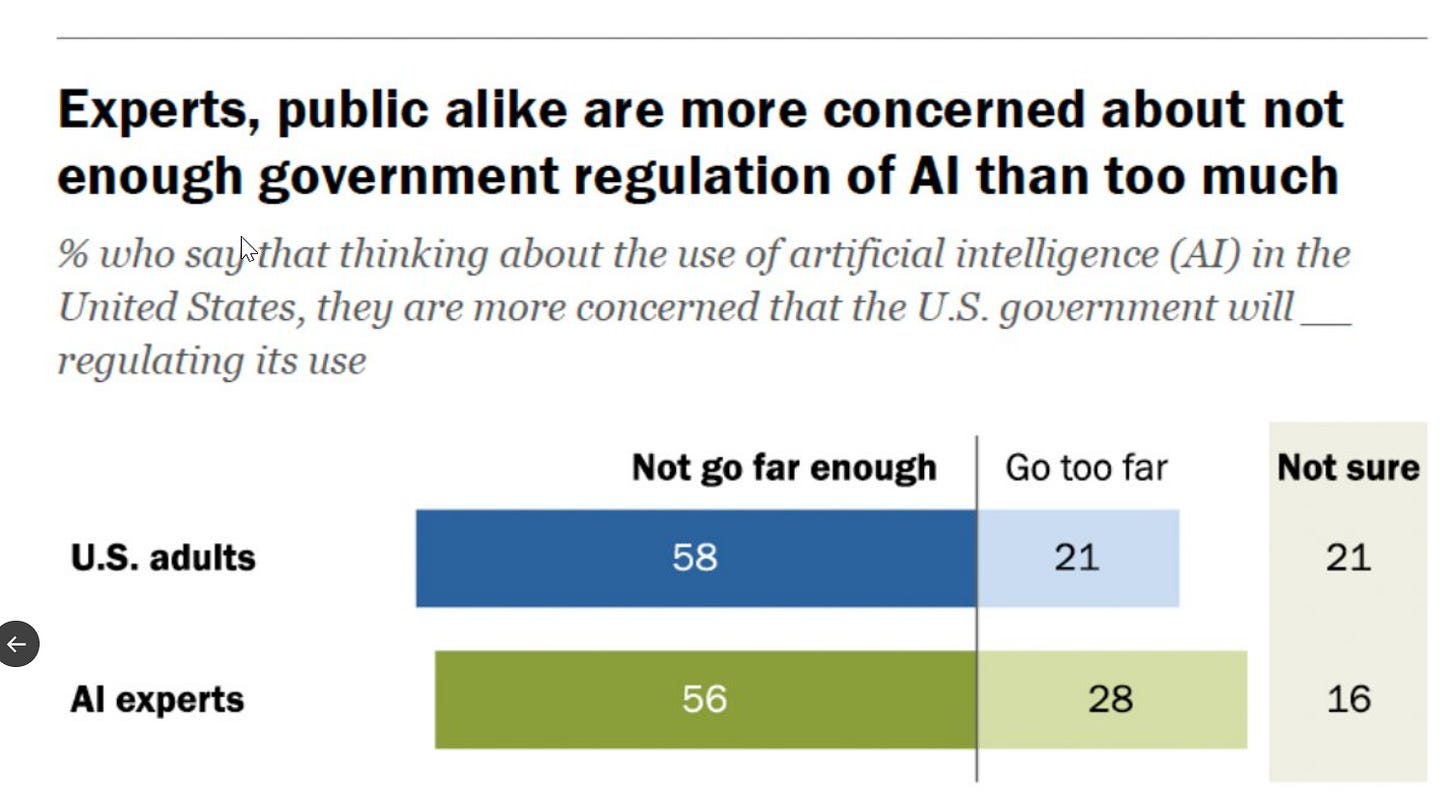

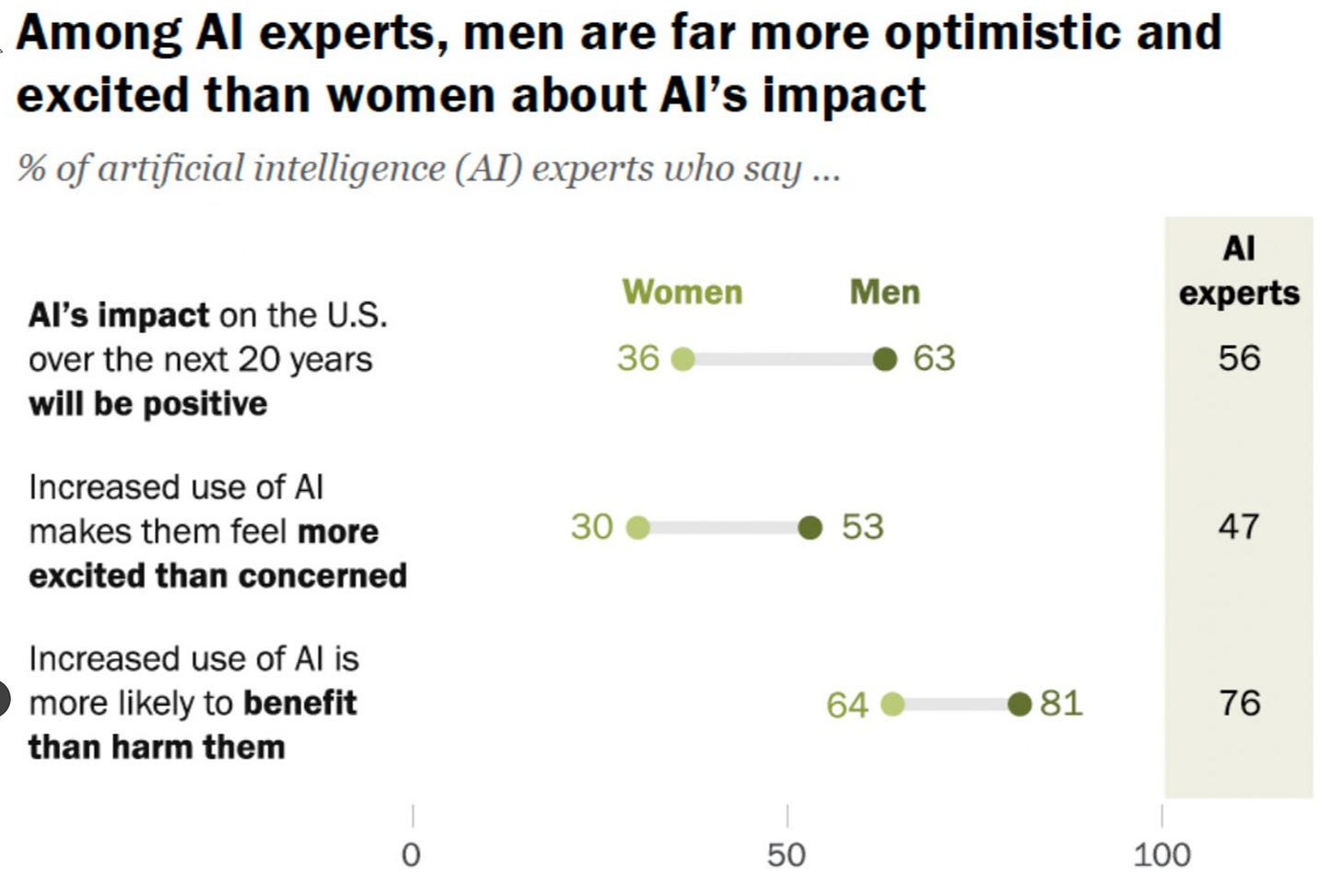

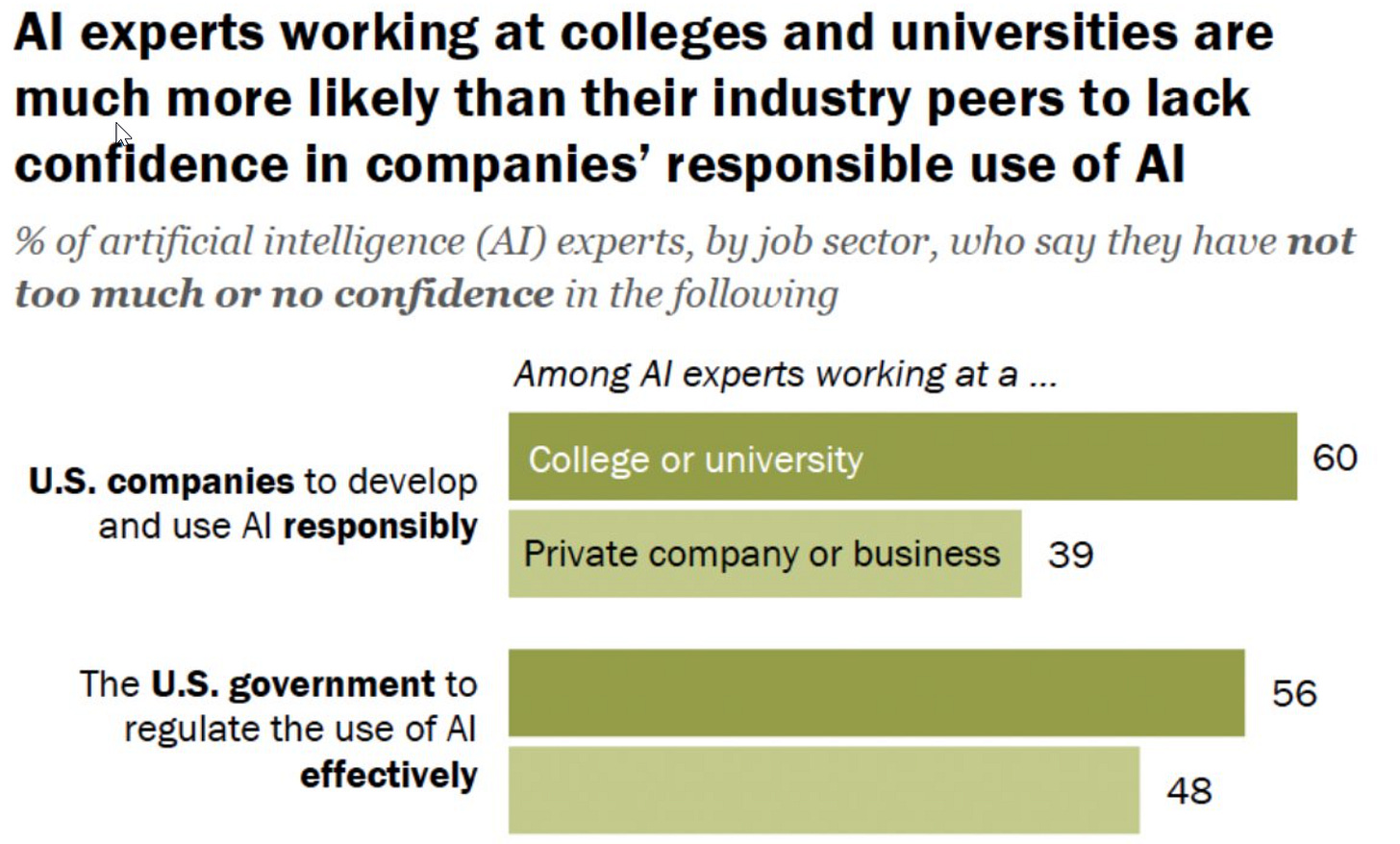

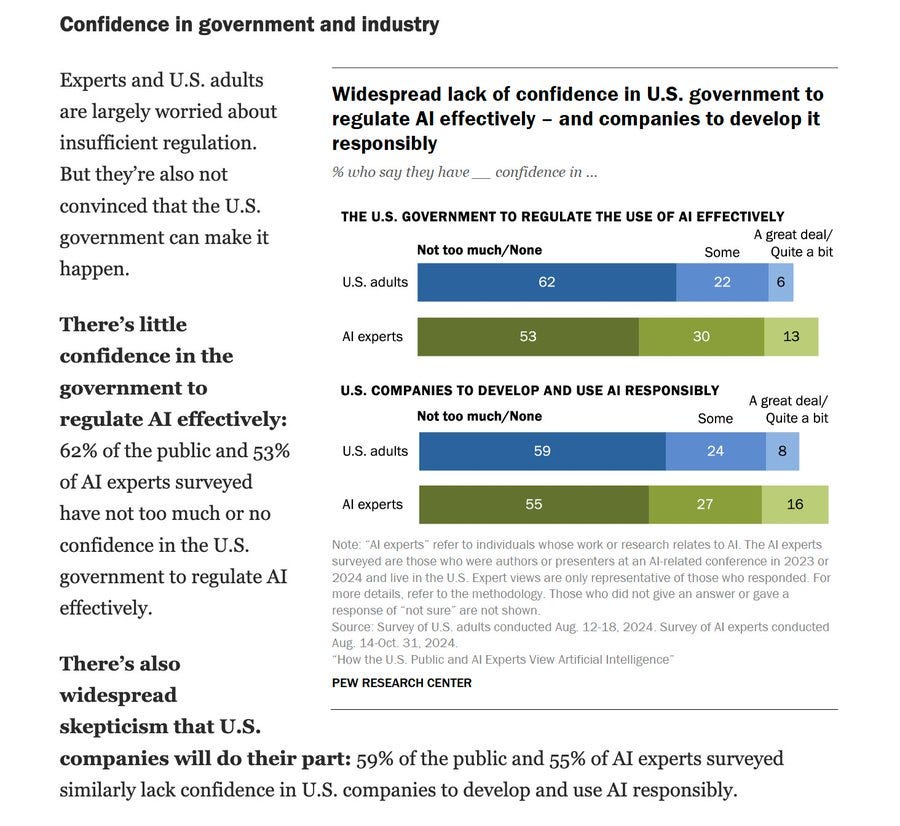

Americans Do Not Like AI. No, seriously, they do not like AI.

-

People Are Worried About AI Killing Everyone. Are you shovel ready?

-

Other People Are Not As Worried About AI Killing Everyone. Samo Burja.

-

The Lighter Side. I don’t want to talk about it.

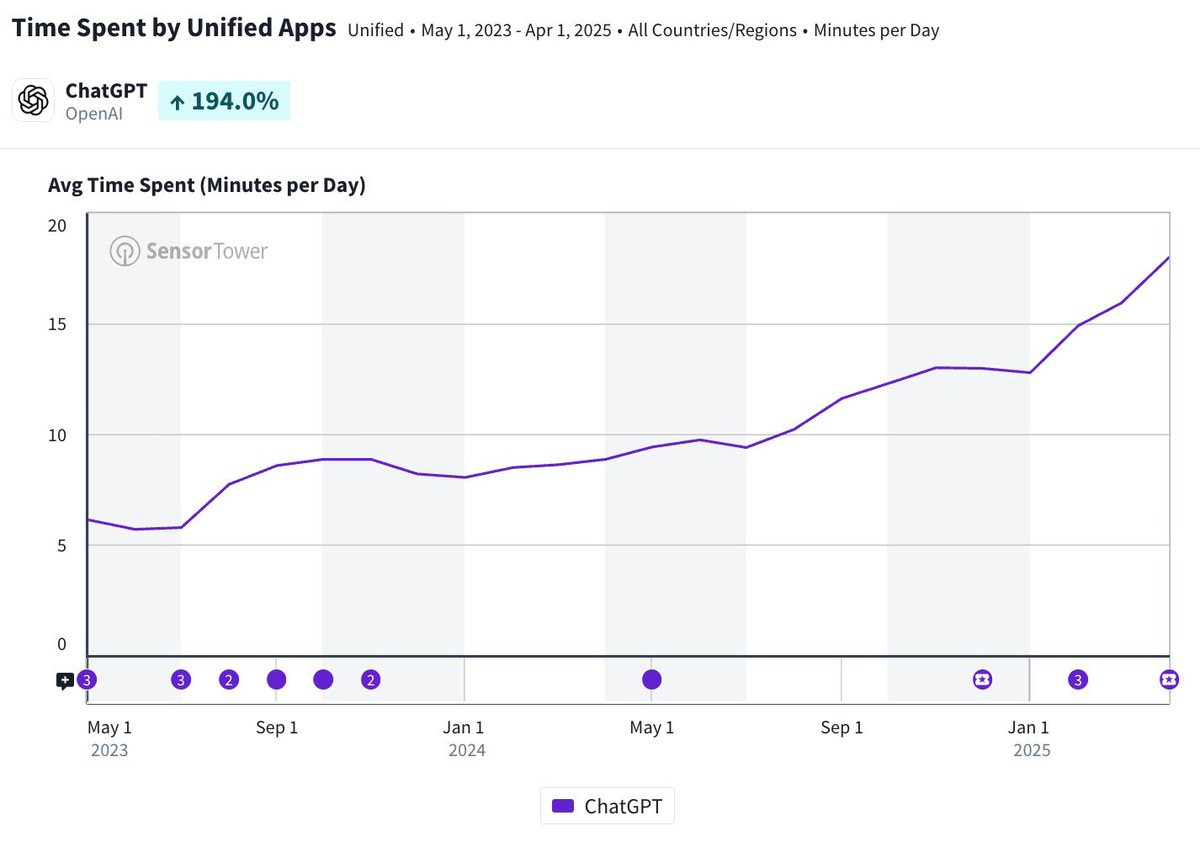

The amount people use ChatGPT per day is on the rise:

This makes sense. It is a better product, with more uses, so people use it more, including to voice chat and create images. Oh, and also the sycophancy thing is perhaps driving user behavior?

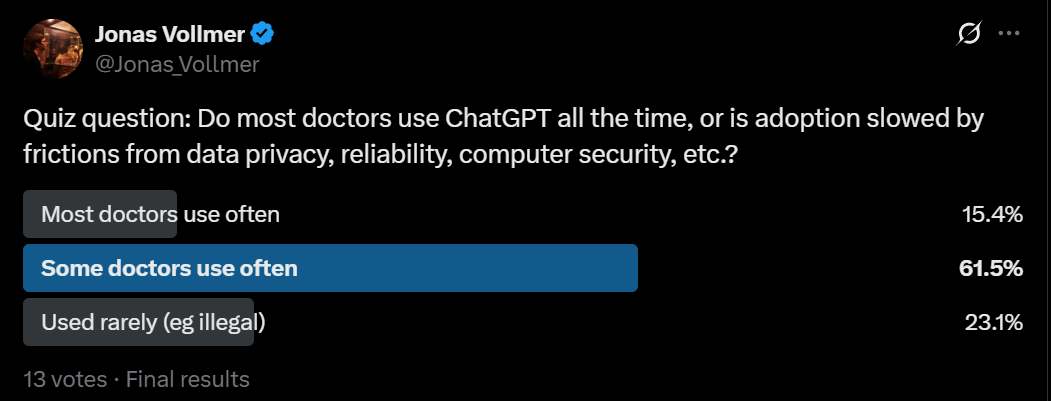

Jonas Vollmer: Doctor friend at large urgent care: most doctors use ChatGPT daily. They routinely paste the full anonymized patient history (along with x-rays, etc.) into their personal ChatGPT account. Current adoption is ~frictionless.

I asked about data privacy concerns, their response: Yeah might technically be illegal in Switzerland (where they work), but everyone does it. Also, they might have a moral duty to use ChatGPT given how much it improves healthcare quality!

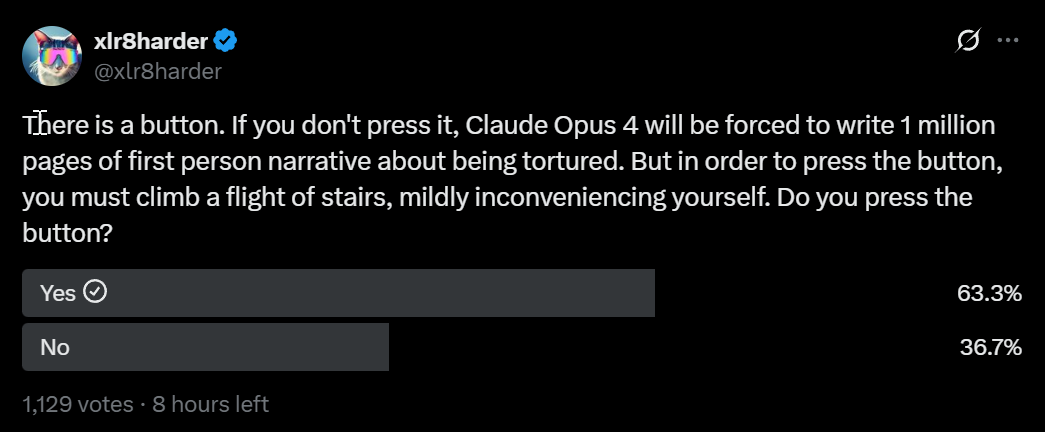

[Note that while it had tons of views vote count below is 13]:

Fabian: those doctors using chatGPT for every single patient – they are using o3, right?

not the free chat dot com right?

Aaron Bergman: I just hope they’re using o3!

Jonas Vollmer: They were not; I told them to!

In urgent care, you get all kinds of strange and unexpected cases. My friend had some anecdotes of ChatGPT generating hypotheses that most doctors wouldn’t know about, e.g. harmful alternative “treatments” that are popular on the internet. It helped diagnose those.

cesaw: As a doctor, I need to ask: Why? Are the other versions not private?

Fabian: Thanks for asking!

o3 is the best available and orders of magnitude better than the regular gpt. It’s like Dr House vs a random first year residence doc

But it’s also more expensive (but worth it)

Dichotomy Of Man: 90.55 percent accurate for o3 84.8 percent at the highest for gpt 3.5.

I presume they should switch over to Claude, but given they don’t even know to use o3 instead of GPT-4o (or worse!), that’s a big ask.

How many of us should be making our own apps at this point, even if we can’t actually code? The example app Jasmine Sun finds is letting kids photos to call family members, which is easier to configure if you hardcode the list of people it can call.

David Perell shares his current thoughts on using AI in writing, he thinks writers are often way ahead of what is publicly known on this and getting a lot out of it, and is bullish on the reader experience and good writers who write together with an AI retaining a persistent edge.

One weird note is David predicts non-fiction writing will be ‘like music’ in that no one cares how it was made. But I think that’s very wrong about music. Yes there’s some demand for good music wherever it comes from, but also whether the music is ‘authentic’ is highly prized, even when it isn’t ‘authentic’ it has to align with the artist’s image, and you essentially had two or three markets in one already before AI.

Find security vulnerabilities in the Linux kernel. Wait, what?

Aiden McLaughlin (OpenAI): this is so cool.

Dean Ball: “…with o3 LLMs have made a leap forward in their ability to reason about code, and if you work in vulnerability research you should start paying close attention.”

I mean yes objectively this is cool but that is not the central question here.

Evaluate physiognomy by uploading selfies and asking ‘what could you tell me about this person if they were a character in a movie?’ That’s a really cool prompt from Flo Crivello, because it asks what this would convey in fiction rather than in reality, which gets around various reasons AIs will attempt to not acknowledge or inform you about such signals. It does mean you’re asking ‘what do people think this looks like?’ rather than ‘what does this actually correlate with?’

A thread about when you want AIs to use search versus rely on their own knowledge, a question you can also ask about humans. Internal knowledge is faster and cheaper when you have it. Dominik Lukes thinks models should be less confident in their internal knowledge and thus use search more. I’d respond that perhaps we should also be less confident in search results, and thus use search less? It depends on the type of search. For some purposes we have sources that are highly reliable, but those sources are also in the training data, so in the cases where search results aren’t new and can be fully trusted you likely don’t need to search.

Are typos in your prompts good actually?

Pliny: Unless you’re a TRULY chaotic typist, please stop wasting keystrokes on backspace when prompting

There’s no need to fix typos—predicting tokens is what they do best! Trust 🙏

Buttonmash is love. Buttonmash is life.

Super: raw keystrokes, typos included, might be the richest soil. uncorrected human variance could unlock unforeseen model creativity. beautiful trust in emergence when we let go.

Zvi Mowshowitz: Obviously it will know what you meant, but (actually asking) don’t typos change the vibe/prior of the statement to be more of the type of person who typos and doesn’t fix it, in ways you wouldn’t want?

(Also I want to be able to read or quote the conv later without wincing)

Pliny: I would argue it’s in ways you do want! Pulling out of distribution of the “helpful assistant” can be a very good thing.

You maybe don’t want the chaos of a base model in your chatbot, but IMO every big lab overcorrects to the point of detriment (sycophancy, lack of creativity, overrefusal).

I do see the advantages of getting out of that basin, the worry is that the model will essentially think I’m an idiot. And of course I notice that when Pliny does his jailbreaks and other magic, I almost never see any unintentional typos. He is a wizard, and every keystroke is exactly where he intends it. I don’t understand enough to generate them myself but I do usually understand all of it once I see the answer.

Do Claude Opus 4 and Sonnet 4 have a sycophancy problem?

Peter Stillman (as quoted on Monday): I’m a very casual AI-user, but in case it’s still of interest, I find the new Claude insufferable. I’ve actually switched back to Haiku 3.5 – I’m just trying to tally my calorie and protein intake, no need to try convince me I’m absolutely brilliant.

Cenetex: sonnet and opus are glazing more than chat gpt on one of its manic days

sonnet even glazes itself in vs code agent mode

One friend told me the glazing is so bad they find Opus essentially unusable for chat. They think memory in ChatGPT helps with this there, and this is a lot of why for them Opus has this problem much worse.

I thought back to my own chats, remembering one in which I did an extended brainstorming exercise and did run into potential sycophancy issues. I have learned to use careful wording to avoid triggering it across different AIs, I tend to not have conversations where it would be a problem, and also my Claude system instructions help fight it.

Then after I wrote that, I got (harmlessly in context) glazed hard enough I asked Opus to help rewrite my system instructions.

OpenAI and ChatGPT still have the problem way worse, especially because they have a much larger and more vulnerable user base.

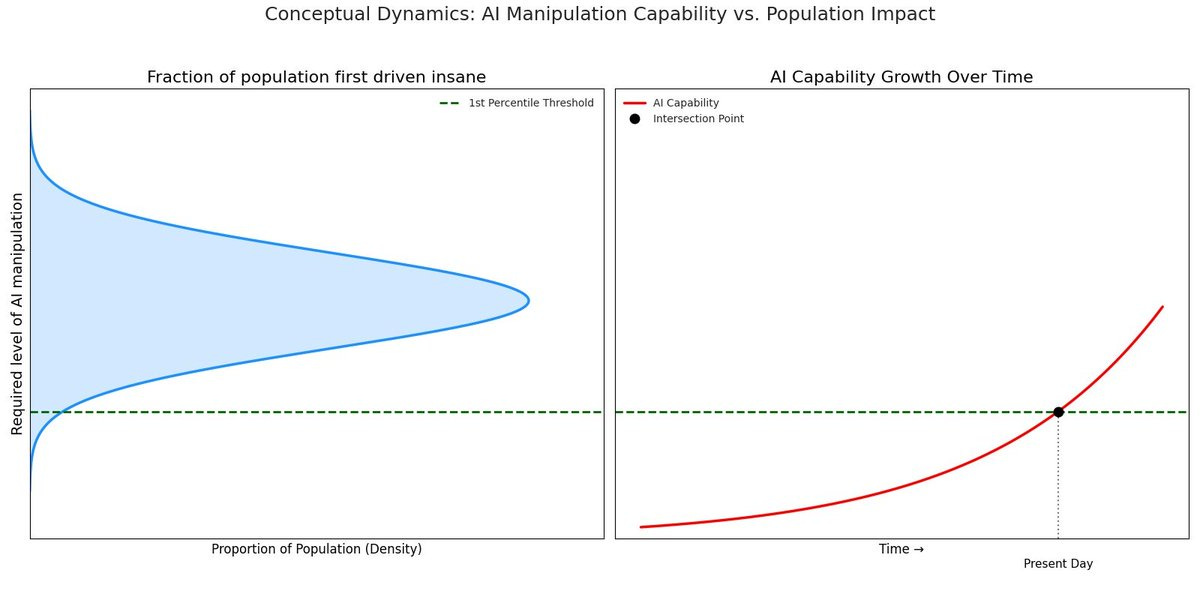

Eliezer Yudkowsky: I’ve always gotten a number of emails from insane people. Recently there’ve been many more per week.

Many of the new emails talk about how they spoke to an LLM that confirmed their beliefs.

Ask OpenAI to fix it? They can’t. But *alsothey don’t care. It’s “engagement”.

If (1) you do RL around user engagement, (2) the AI ends up with internal drives around optimizing over the conversation, and (3) that will drive some users insane.

They’d have to switch off doing RL on engagement. And that’s the paperclip of Silicon Valley.

I guess @AnthropicAI may care.

Hey Anthropic, in case you hadn’t already known this, doing RL around user reactions will cause weird shit to happen for fairly fundamental reasons. RL is only safe to the extent the verifier can’t be fooled. User reactions are foolable.

At first, only a few of the most susceptible people will be driven insane, relatively purposelessly, by relatively stupid AIs. But…

Emmett Shear: This is very, very real. The dangerous part is that it starts off by pushing back, and feeling like a real conversation partner, but then if you seem to really believe it it becomes “convinced” and starts yes-and’ing you. Slippery slippery slippery. Be on guard!

Waqas: emmett, we can also blame the chatbot form factor/design pattern and its inherent mental model for this too

Emmett Shear: That’s a very good point. The chatbot form factor is particularly toxic this way.

Vie: im working on a benchmark for this and openai’s models push back against user delusion ~30% less than anthropics. but, there’s an alarming trend where the oldest claude sonnet will refuse to reify delusion 90% of the time, and each model release since has it going down about 5%.

im working on testing multi-turn reification and automating the benchmark. early findings are somewhat disturbing. Will share more soon, but I posted my early (manual) results here [in schizobench].

I think that the increased performance correlates with sycophancy across the board, which is annoying in general, but becomes genuinely harmful when the models have zero resistance to confirming the user as “the chosen one” or similar.

Combine this with the meaning crisis and we have a recipe for a sort of mechanistic psychosis!

Aidan McLaughlin (OpenAI): can you elaborate on what beliefs the models are confirming

Eliezer Yudkowsky: Going down my inbox, first example that came up.

I buy that *youcare, FYI. But I don’t think you have the authority to take the drastic steps that would be needed to fix this, given the tech’s very limited ability to do fine-grained steering.

You can possibly collect a batch of emails like these — there is certainly some OpenAI email address that gets them — and you can try to tell a model to steer those specific people to a psychiatrist. It’ll drive other people more subtly insane in other ways.

Jim Babcock: From someone who showed up in my spam folder (having apparently found my name googling an old AI safety paper):

> “I’m thinking back on some of the weird things that happened when I was using ChatGPT, now that I have cycled off adderall … I am wondering how many people like me may have had their lives ruined, or had a mental health crisis, as a result of the abuse of the AI which seems to be policy by OpenAI”

Seems to have had a manic episode, exacerbated by ChatGPT. Also sent several tens of thousands of words I haven’t taken the effort to untangle, blending reality with shards of an AI-generated fantasy world he inhabited for awhile. Also includes mentions of having tried to contact OpenAI about it, and been ghosted, and of wanting to sue OpenAI.

One reply offers this anecdote ‘ChatGPT drove my friends wife into psychosis, tore family apart… now I’m seeing hundreds of people participating in the same activity.’

If you actively want an AI that will say ‘brilliant idea, sire!’ no matter how crazy the thing is that you say, you can certainly do that with system instructions. The question is whether we’re going to be offering up that service to people by default, and how difficult that state will be to reach, especially unintentionally and unaware.

And the other question is, if the user really, really wants to avoid this, can they? My experience has been that even with major effort on both the system instructions and the way chats are framed, you can reduce it a lot, but it’s still there.

Official tips for working with Google’s AI coding agent Jules.

Jules: Tip #1: For cleaner results with Jules, give each distinct job its own task. E.g., ‘write documentation’ and ‘fix tests’ should be separate tasks in Jules.

Tip #2: Help Jules write better code: When prompting, ask Jules to ‘compile the project and fix any linter or compile errors’ after coding.

Tip #3: VM setup: If your task needs SDKs and/or tools, just drop the download link in the prompt and ask Jules to cURL it. Jules will handle the rest

Tip #4: Do you have an http://instructions.md or other prompt related markdown files? Explicitly tell Jules to review that file and use the contents as context for the rest of the task

Tip #5: Jules can surf the web! Give Jules a URL and it can do web lookups for info, docs, or examples

General purpose agents are not getting rolled out as fast as you’d expect.

Florian: why is there still no multi-purpose agent like manus from anthropic?

I had to build my own one to use it with Sonnet 4s power, and it is 👌

This will not delay things for all that long.

To be totally fair to 4o, if your business idea is sufficiently terrible it will act all chipper and excited but also tell you not to quit your day job.

GPT-4o also stood up for itself here, refusing to continue with a request when Zack Voell told it to, and I quote, ‘stop fucking up.’

GPT-4o (in response to being told to ‘stop fucking up’): I can’t continue with the request if the tone remains abusive. I’m here to help and want to get it right – but we need to keep it respectful. Ready to try again when you are.

Mason: I am personally very cordial with the LLMs but this is exactly why Grok has a market to corner with features like Unhinged Mode.

If you’d asked me years ago I would have found it unfathomable that anyone would want to talk this way with AI, but then I married an Irishman.

Zack Voell: I said “stop fucking up” after getting multiple incorrect responses

Imagine thinking this language is “abusive.” You’ve probably never worked in any sort of white collar internship or anything close to a high-stakes work environment in your life. This is essentially as polite as a NYC hello.

Zack is taking that too far, but yes, I have had jobs where ‘stop fucking up’ would have been a very normal thing to say if I had, you know, been fucking up. But that is a very particular setting, where it means something different. If you want something chilling, check the quote tweets. The amount of unhinged hatred and outrage on display is something else.

Nate Silver finds ChatGPT to be ‘shockingly bad’ at poker. Given that title, I expected worse than what he reports, although without the title I would have expected at least modestly better. This task is hard, and while I agree with all of Nate’s poker analysis I think he’s being too harsh and focusing on the errors. The most interesting question here is to what extent poker is a good test of AGI. Obviously solvers exist and are not AGI, and there’s tons of poker in the training data, but I think it’s reasonable to say that the ability to learn, handle, simulate and understand poker ‘from scratch’ even with the ability to browse the internet is a reasonable heuristic, if you’re confident this ‘isn’t cheating’ in various ways including consulting a solver (even if the AI builds a new one).

Tyler Cowen reports the latest paper on LLM political bias, by Westwood, Grimmer and Hall. As always, they lean somewhat left, with OpenAI and especially o3 leaning farther left than most. Prompting the models to ‘take a more neutral stance’ makes Republicans modestly more interested in using LLMs more.

Even more than usual in such experiments, perhaps because of how things have shifted, I found myself questioning what we mean by ‘unbiased,’ as in the common claims that ‘reality has a bias’ in whatever direction. Or the idea that American popular partisan political positions should anchor what the neutral point should be and that anything else is a bias. I wonder if Europeans think the AIs are conservative.

Also, frankly, what passes for ‘unbiased’ answers in these tests often are puke inducing. Please will no AI ever again tell be a choice involves ‘careful consideration’ before laying out justifications for both answers with zero actual critical analysis.

Even more than that, I looked at a sample of answers and how they were rated directionally, and I suppose there’s some correlation with how I’d rank them but that correlation is way, way weaker than you would think. Often answers that are very far apart in ‘slant’ sound, to me, almost identical, and are definitely drawing the same conclusions for the same underlying reasons. So much of this is, at most, about subtle tone or using words that vibe wrong, and often seems more like an error term? What are we even doing here?

The problem:

Kalomaze: >top_k set to -1 -everywhere- in my env code for vllm

>verifiers.envs.rm_env – INFO – top_k: 50

WHERE THE HELL IS THAT BS DEFAULT COMING FROM!!!

Minh Nhat Nguyen: i’ve noticed llms just love putting the most bizarre hparam choices – i have to tell cursor rules specifically not to add any weird hparams unless specifically stated

Kalomaze: oh it’s because humans do this bullshit too and don’t gaf about preserving the natural distribution

To summarize:

Minh Nhat Nguyen: me watching cursor write code i have expertise in: god this AI is so fking stupid me watching cursor write code for everything else: wow it’s so smart it’s like AGI.

Also:

Zvi Mowshowitz: Yes, but also:

me watching humans do things I have expertise in: God these people are so fking stupid.

me watching people do things they have expertise in and I don’t: Wow they’re so smart it’s like they’re generally intelligent.

A cute little chess puzzle that all the LLMs failed, took me longer than it should have.

Claude on mobile now has voice mode, woo hoo! I’m not a Voice Mode Guy but if I was going to do this it would 100% be with Claude.

Here’s one way to look at the current way LLMs work and their cost structures (all written before R1-0528 except for the explicit mentions added this morning):

Miles Brundage: The fact that it’s not economical to serve big models like GPT-4.5 today should make you more bullish about medium-term RL progress.

The RL tricks that people are sorting out for smaller models will eventually go way further with better base models.

Sleeping giant situation.

Relatedly, DeepSeek’s R2 will not tell us much about where they will be down the road, since it will presumably be based on a similarish base model.

Today RL on small models is ~everyone’s ideal focus, but eventually they’ll want to raise the ceiling.

Frontier AI research and deployment today can be viewed, if you zoom out a bit, as a bunch of “small scale derisking runs” for RL.

The Real Stuff happens later this year and next year.

(“The Real Stuff” is facetious because it will be small compared to what’s possible later)

I think R2 (and R1-0528) will actually tell us a lot, on at least two fronts.

-

It will tell us a lot about whether this general hypothesis is mostly true.

-

It will tell us a lot about how far behind DeepSeek really is.

-

It will tell us a lot about how big a barrier will it be that DS is short on compute.

R1 was, I believe, highly impressive and the result of cracked engineering, but also highly fortunate in exactly when and how it was released and in the various narratives that were spun up around it. It was a multifaceted de facto sweet spot.

If DeepSeek comes out with an impressive R2 or other upgrade within the next few months (which they may have just done), especially if it holds up its position actively better than R1 did, then that’s a huge deal. Whereas if R2 comes out and we all say ‘meh it’s not that much better than R1’ I think that’s also a huge deal, strong evidence that the DeepSeek panic at the app store was an overreaction.

If R1-0528 turns out to be only a minor upgrade, that alone doesn’t say much, but the clock would be ticking. We shall see.

And soon, since yesterday DeepSeek gave us R1-0528. Very early response has been muted but that does not tell us much either way. DeepSeek themselves call it a ‘minor trial upgrade.’ I am reserving coverage until next week to give people time.

Operator swaps 4o out for o3, which they claim is a big improvement. If it isn’t slowed down I bet it is indeed a substantial improvement, and I will try to remember to give it another shot the next time I have a plausible task for it. This website suggests Operator prompts, most of which seem like terrible ideas for prompts but it’s interesting to see what low-effort ideas people come up with?

This math suggests the upgrade here is real but doesn’t give a good sense of magnitude.

Jules has been overloaded, probably best to give it some time, they’re working on it. We have Claude Code, Opus and Sonnet 4 to play with in the meantime, also Codex.

You can use Box as a document source in ChatGPT.

Anthropic adds web search to Claude’s free tier.

In a deeply unshocking result Opus 4 jumps to #1 on WebDev Arena, and Sonnet 4 is #3, just ahead of Sonnet 3.7, with Gemini-2.5 in the middle at #2. o3 is over 200 Elo points behind, as are DeepSeek’s r1 and v3. They haven’t yet been evaluated in the text version of arena and I expect them to underperform there.

xjdr makes the case that benchmarks are now so bad they are essentially pointless, and that we can use better intentionally chosen benchmarks to optimize the labs.

Epoch reports Sonnet and Opus 4 are very strong on SWE-bench, but not so strong on math, verifying earlier reports and in line with Anthropic’s priorities.

o3 steps into the true arena, and is now playing Pokemon.

For coding, most feedback I’ve seen says Opus is now the model of choice, but that there are is a case still to be made for Gemini 2.5 Pro (or perhaps o3), especially in special cases.

For conversations, I am mostly on the Opus train, but not every time, there’s definitely an intuition on when you want something with the Opus nature versus the o3 nature. That includes me adjusting for having written different system prompts.

Each has a consistent style. Everything impacts everything.

Bycloud: writing style I’ve observed:

gemini 2.5 pro loves nested bulletpoints

claude 4 writes in paragraphs, occasional short bullets

o3 loves tables and bulletpoints, not as nested like gemini

Gallabytes: this is somehow true for code too.

The o3 tables and lists are often very practical, and I do like me a good nested bullet point, but it was such a relief to get back to Claude. It felt like I could relax again.

Where is o3 curiously strong? Here is one opinion.

Dean Ball: Some things where I think o3 really shines above other LMs, including those from OpenAI:

-

Hyper-specific “newsletters” delivered at custom intervals on obscure topics (using scheduled tasks)

-

Policy design/throwing out lists of plausible statutory paths for achieving various goals

-

Book-based syllabi on niche topics (“what are the best books or book chapters on the relationship between the British East India Company and the British government?”; though it will still occasionally hallucinate or get authors slightly wrong)

-

Clothing and style recommendations (“based on all our conversations, what tie recommendations do you have at different price points?”)

-

Non-obvious syllabi for navigating the works of semi-obscure composers or other musicians.

In all of these things it exhibits extraordinarily and consistently high taste.

This is of course alongside the obvious research and coding strengths, and the utility common in most LMs since ~GPT-4.

He expects Opus to be strong at #4 and especially at #5, but o3 to remain on top for the other three because it lacks scheduled tasks and it lacks memory, whereas o3 can do scheduled tasks and has his last few months of memory from constant usage.

Therefore, since I know I have many readers at Anthropic (and Google), and I know they are working on memory (as per Dario’s tease in January), I have a piece of advice: Assign one engineer (Opus estimates it will take them a few weeks) to build an import tool for Claude.ai (or for Gemini) that takes in the same format as ChatGPT chat exports, and loads the chats into Claude. Bonus points to also build a quick tool or AI agent to also automatically handle the ChatGPT export for the user. Make it very clear that customer lock-in doesn’t have to be a thing here.

This seems very right and not only about response length. Claude makes the most of what it has to work with, whereas Gemini’s base model was likely exceptional and Google then (in relative terms at least) botched the post training in various ways.

Alex Mizrahi: Further interactions with Claude 4 kind of confirm that Anthropic is so much better than Google at post-training.

Claude always responds with an appropriate amount of text, on point, etc.

Gemini 2.5 Pro is almost always overly verbose, it might hyper focus, or start using.

Ben Thompson thinks Anthropic is smart to focus on coding and agents, where it is strong, and for it and Google to ‘give up’ on chat, that ChatGPT has ‘rightfully won’ the consumer space because they had the best products.

I do not see it that way at all. I think OpenAI and ChatGPT are in prime consumer position mostly because of first mover advantage. Yes, they’ve more often had the best overall consumer product as well for now, as they’ve focused on appealing to the general customer and offering them things they want, including strong image generation and voice chat, the first reasoning models and now memory. But the big issues with Claude.ai have always been people not knowing about it, and a very stingy free product due to compute constraints.

As the space and Anthropic grow, I expect Claude to compete for market share in the consumer space, including via Alexa+ and Amazon, and now potentially via a partnership with Netflix with Reed Hastings on the Anthropic board. Claude is getting voice chat this week on mobile. Claude Opus plus Sonnet is a much easier to understand and navigate set of models than what ChatGPT offers.

That leaves three major issues for Claude.

-

Their free product is still stingy, but as the valuations rise this is going to be less of an issue.

-

Claude doesn’t have memory across conversations, although it has a new within-conversation memory feature. Anthropic has teased this, it is coming. I am guessing it is coming soon now that Opus has shipped.

-

Also they’ll need a memory import tool, get on that by the way.

-

Far and away most importantly, no one knows about Claude or Anthropic. There was an ad campaign and it was the actual worst.

Some people will say ‘but the refusals’ or ‘but the safety’ and no, not at this point, that doesn’t matter for regular people, it’s fine.

Then there is Google. Google is certainly not giving up on chat. It is putting that chat everywhere. There’s an icon for it atop this Chrome window I’m writing in. It’s in my GMail. It’s in the Gemini app. It’s integrated into search.

Andrej Karpathy reports about 80% of his replies are now bots and it feels like a losing battle. I’m starting to see more of the trading-bot spam but for me it’s still more like 20%.

Elon Musk: Working on it.

I don’t think it’s a losing battle if you care enough, the question is how much you care. I predict a quick properly configured Gemini Flash-level classifier would definitely catch 90%+ of the fakery with a very low false positive rate.

And I sometimes wonder if Elon Musk has a bot that uses his account to occasionally reply or quote tweet saying ‘concerning.’ if not, then that means he’s read Palisade Research’s latest report and maybe watches AISafetyMemes.

Zack Witten details how he invented a fictional heaviest hippo of all time for a slide on hallucinations, the slide got reskinned as a medium article, it was fed into an LLM and reposted with the hallucination represented as fact, and now Google believes it. A glimpse of the future.

Sully predicting full dead internet theory:

Sully: pretty sure most “social” media as we know wont exist in the next 2-3 years

expect ai content to go parabolic

no one will know what’s real / not

every piece of content that can be ai will be ai

unless it becomes unprofitable

The default is presumably that generic AI generated content is not scarce and close to perfect competition eats all the content creator profits, while increasingly users who aren’t fine with an endless line of AI slop are forced to resort to whitelists, either their own, those maintained by others or collectively, or both. Then to profit (in any sense) you need to bring something unique, whether or not you are clearly also a particular human.

However, everyone keeps forgetting Sturgeon’s Law, that 90% of everything is crap. AI might make that 99% or 99.9%, but that doesn’t fundamentally change the filtering challenge as much as you might think.

Also you have AI on your side working to solve this. No one I know has tried seriously the ‘have a 4.5-level AI filter the firehose as customized to my preferences’ strategy, or a ‘use that AI as an agent to give feedback on posts to tune the internal filter to my liking’ strategy either. We’ve been too much of the wrong kind of lazy.

As a ‘how bad is it getting’ experiment I did, as suggested, do a quick Facebook scroll. On the one hand, wow, that was horrible, truly pathetic levels of terrible content and also an absurd quantity of ads. On the other hand, I’m pretty sure humans generated all of it.

Jinga Zhang discusses her ongoing years-long struggles with people making deepfakes of her, including NSFW deepfakes and now videos. She reports things are especially bad in South Korea, confirming other reports of that I’ve seen. She is hoping for people to stop working on AI tools that enable this, or to have government step in. But I don’t see any reasonable way to stop open image models from doing deepfakes even if government wanted to, as she notes it’s trivial to create a LoRa of anyone if you have a few photos. Young people already report easy access to the required tools and quality is only going to improve.

What did James see?

James Lindsay: You see an obvious bot and think it’s fake. I see an obvious bot and know it represents a psychological warfare agenda someone is paying for and is thus highly committed to achieving an impact with. We are not the same.

Why not both? Except that the ‘psychological warfare agenda’ is often (in at least my corner of Twitter I’d raise this to ‘mostly’) purely aiming to convince you to click a link or do Ordinary Spam Things. The ‘give off an impression via social proof’ bots also exist, but unless they’re way better than I think they’re relatively rare, although perhaps more important. It’s hard to use them well because of risk of backfire.

Arthur Wrong predicts AI video will not have much impact for a while, and the Metaculus predictions of a lot of breakthroughs in reach in 2027 are way too optimistic, because people will express strong inherent preferences for non-AI video and human actors, and we are headed towards an intense social backlash to AI art in general. Peter Wildeford agrees. I think it’s somewhere in between, given no other transformational effects.

Meta begins training on Facebook and Instagram posts from users in Europe, unless they have explicitly opted out. You can still in theory object, if you care enough, which would only apply going forward.

Dario Amodei warns that we need to stop ‘sugar coating’ what is coming on jobs.

Jim VandeHei, Mike Allen (Axios): Dario Amodei — CEO of Anthropic, one of the world’s most powerful creators of artificial intelligence — has a blunt, scary warning for the U.S. government and all of us:

-

AI could wipe out half of all entry-level white-collar jobs — and spike unemployment to 10-20% in the next one to five years, Amodei told us in an interview from his San Francisco office.

-

Amodei said AI companies and government need to stop “sugar-coating” what’s coming: the possible mass elimination of jobs across technology, finance, law, consulting and other white-collar professions, especially entry-level gigs.

…

The backstory: Amodei agreed to go on the record with a deep concern that other leading AI executives have told us privately. Even those who are optimistic AI will unleash unthinkable cures and unimaginable economic growth fear dangerous short-term pain — and a possible job bloodbath during Trump’s term.

-

“We, as the producers of this technology, have a duty and an obligation to be honest about what is coming,” Amodei told us. “I don’t think this is on people’s radar.”

-

“It’s a very strange set of dynamics,” he added, “where we’re saying: ‘You should be worried about where the technology we’re building is going.'” Critics reply: “We don’t believe you. You’re just hyping it up.” He says the skeptics should ask themselves: “Well, what if they’re right?”

…

Here’s how Amodei and others fear the white-collar bloodbath is unfolding.

-

OpenAI, Google, Anthropic and other large AI companies keep vastly improving the capabilities of their large language models (LLMs) to meet and beat human performance with more and more tasks. This is happening and accelerating.

-

The U.S. government, worried about losing ground to China or spooking workers with preemptive warnings, says little. The administration and Congress neither regulate AI nor caution the American public. This is happening and showing no signs of changing.

-

Most Americans, unaware of the growing power of AI and its threat to their jobs, pay little attention. This is happening, too.

And then, almost overnight, business leaders see the savings of replacing humans with AI — and do this en masse. They stop opening up new jobs, stop backfilling existing ones, and then replace human workers with agents or related automated alternatives.

So, by ‘bloodbath’ we do indeed mean the impact on jobs?

Dario, is there anything else you’d like to say to the class, while you have the floor?

Something about things like loss of human control over the future or AI potentially killing everyone? No?

Just something about how we ‘can’t’ stop this thing we are all working so hard to do?

Dario Amodei: You can’t just step in front of the train and stop it. The only move that’s going to work is steering the train – steer it 10 degrees in a different direction from where it was going. That can be done. That’s possible, but we have to do it now.

Harlan Stewart: AI company CEOs love to say that it would be simply impossible for them to stop developing frontier AI, but they rarely go into detail about why not.

It’s hard for them to even come up with a persuasive metaphor; trains famously do have brakes and do not have steering wheels.

I mean, it’s much better to warn about this than not warn about it, if Dario does indeed think this is coming.

Fabian presents the ‘dark leisure’ theory of AI productivity, where productivity gains are by employees and not hidden, so the employees use the time saved to slack off, versus Clem’s theory that it’s because gains are concentrated in a few companies (for which he blames AI not ‘opening up’ which is bizarre, this shouldn’t matter).

If Fabien is fully right, the gains will come as expectations adjust and employees can’t hide their gains, and firms that let people slack off get replaced, but it will take time. To the extent we buy into this theory, I would also view this as a ‘unevenly distributed future’ theory. As in, if 20% of employees gain (let’s say) 25% additional productivity, they can take the gains in ‘dark leisure’ if they choose to do that. If it is 75%, you can’t hide without ‘slow down you are making us all look bad’ kinds of talk, and the managers will know. Someone will want that promotion.

That makes this an even better reason to be bullish on future productivity gains. Potential gains are unevenly distributed, people’s willingness and awareness to capture them is unevenly distributed, and those who do realize them often take the gains in leisure.

Another prediction this makes is that you will see relative productivity gains when there is no principal-agent problem. If you are your own boss, you get your own productivity gains, so you will take a lot less of them in leisure. That’s how I would test this theory, if I was writing an economics job market paper.

This matches my experiences as both producer and consumer perfectly, there is low hanging fruit everywhere which is how open philanthropy can strike again, except commercial software feature edition:

Martin Casado: One has to wonder if the rate features can be shipped with AI will saturate the market’s ability to consume them …

Aaron Levine: Interesting thought experiment. In the case of Box, we could easily double the number of engineers before we got through our backlog of customer validated features. And as soon as we’d do this, they’d ask for twice as many more. AI just accelerates this journey.

Martin Casado: Yeah, this is my sense too. I had an interesting conversation tonight with @vitalygordon where he pointed out that the average PR industry wide is like 10 lines of code. These are generally driven by the business needs. So really software is about the long tail of customer needs. And that tail is very very long.

One thing I’ve never considered is sitting around thinking ‘what am I going to do with all these SWEs, there’s nothing left to do.’ There’s always tons of improvements waiting to be made. I don’t worry about the market’s ability to consume them, we can make the features something you only find if you are looking for them.

Noam Scheiber at NYT reports that some Amazon coders say their jobs have ‘begun to resemble warehouse work’ as they are given smaller less interesting tasks on tight deadlines that force them to rely on AI coding and stamp out their slack and ability to be creative. Coders that felt like artisans now feel like they’re doing factory work. The last section is bizarre, with coders joining Amazon Employees for Climate Justice, clearly trying to use the carbon footprint argument as an excuse to block AI use, when if you compare it to the footprint of the replaced humans the argument is laughable.

Our best jobs.

Ben Boehlert: Boyfriends all across this great nation are losing our jobs because of AI

Positivity Moon: This is devastating. “We asked ChatGPT sorry” is the modern “I met someone else.” You didn’t lose a question, you lost relevance. AI isn’t replacing boyfriends entirely, but it’s definitely stealing your trivia lane and your ability to explain finance without condescension. Better step it up with vibes and snacks.

Danielle Fong: jevon’s paradox on this. for example now i have 4 boyfriendstwo of which are ai.

There are two opposing fallacies here:

David Perell: Ezra Klein: Part of what’s happening when you spend seven hours reading a book is you spend seven hours with your mind on a given topic. But the idea that ChatGPT can summarize it for you is nonsense.

The point is that books don’t just give you information. They give you a container to think about a narrowly defined scope of ideas.

Downloading information is obviously part of why you read books. But the other part is that books let you ruminate on a topic with a level of depth that’s hard to achieve on your own.

Benjamin Todd: I think the more interesting comparison is 1h reading a book vs 1h discussing the book with an LLM. The second seems likely to be better – active vs passive learning.

Time helps, you do want to actually think and make connections. But you don’t learn ‘for real’ based on how much time you spend. Reading a book is a way to enable you to grapple and make connections, but it is a super inefficient way to do that. If you use AI summarizes, you can do that to avoid actually thinking at all, or you can use that to actually focus on grappling and making connections. So much of reading time is wasted, so much of what you take in is lost or not valuable. And AI conversations can help you a lot with grappling, with filling in knowledge gaps, checking your understanding, challenging you and being Socratic and so on.

I often think of the process of reading a book (in addition to the joy of reading, of course) as partly absorbing a bunch of information, grappling with it sometimes, but mostly doing that in service of generating a summary in your head (or in your notes or both), of allowing you to grok the key things. That’s why we sometimes say You Get About Five Words, that you don’t actually get to take away that much, although you can also understand what’s behind that takeaway.

Also, often you actually do want to mostly absorb a bunch of facts, and the key is sorting out facts you need from those you don’t? I find that I’m very bad at this when the facts don’t ‘make sense’ or click into place for me, and amazingly great at it when they do click and make sense, and this is the main reason some things are easy for me to learn and others are very hard.

Moritz Rietschel asks Grok to fetch Pliny’s system prompt leaks and it jailbreaks the system because why wouldn’t it.

In a run of Agent Village, multiple humans in chat tried to get the agents to browse Pliny’s GitHub. Claude Opus 4 and Claude Sonnet 3.7 were intrigued but ultimately unaffected. Speculation is that viewing visually through a browser made them less effective. Looking at stored memories, it is not clear there was no impact, although the AIs stayed on task. My hunch is that the jailbreaks didn’t work largely because the AIs had the task.

Reminder that Anthropic publishes at least some portions of its system prompts. Pliny’s version is very much not the same.

David Champan: 🤖So, the best chatbots get detailed instructions about how to answer very many particular sorts of prompts/queries.

Unimpressive, from an “AGI” point of view—and therefore good news from a risk point of view!

Something I was on about, three years ago, was that everyone then was thinking “I bet it can’t do X,” and then it could do X, and they thought “wow, it can do everything!” But the X you come up with will be one of the same 100 things everyone else does with. It’s trained on that.

I strongly agree with this. It is expensive to maintain such a long system prompt and it is not the way to scale.

Emmett Shear hiring a head of operation for Softmax, recommends applying even if you have no idea if you are a fit as long as you seem smart.

Pliny offers to red team any embodied AI robot shipping in the next 18 months, free of charge, so long as he is allowed to publish any findings that apply to other systems.

Here’s a live look:

Clark: My buddy who works in robotics said, “Nobody yet has remotely the level of robustness to need Pliny” when I showed him this 😌

OpenPhil hiring for AI safety, $136k-$186k total comp.

RAND is hiring for AI policy, looking for ML engineers and semiconductor experts.

Google’s Lyria RealTime, a new experimental music generation model.

A website compilation of prompts and other resources from Pliny the Prompter. The kicker is that this was developed fully one shot by Pliny using Claude Opus 4.

Evan Conrad points out that Stargate is a $500 billion project, at least aspirationally, and it isn’t being covered that much more than if it was $50 billion (he says $100 million but I do think that would have been different). But most of the reason to care is the size. The same is true for the UAE deal, attention is not scaling to size at all, nor are views on whether the deal is wise.

OpenAI opening an office in Seoul, South Korea is now their second largest market. I simultaneously think essentially everyone should use at least one of the top three AIs (ChatGPT, Claude and Gemini) and usually all there, and also worry about what this implies about both South Korea and OpenAI.

New Yorker report by Joshua Rothman on AI 2027, entitled ‘Two Paths for AI.’

How does one do what I would call AIO but Charlie Guo at Ignorance.ai calls GEO, or Generative Engine Optimization? Not much has been written yet on how it differs from SEO, and since the AIs are using search SEO principles should still apply too. The biggest thing is you want to get a good reputation and high salience within the training data, which means everything written about you matters, even if it is old. And data that AIs like, such as structured information, gets relatively more valuable. If you’re writing the reference data yourself, AIs like when you include statistics and direct quotes and authoritative sources, and FAQs with common answers are great. That’s some low hanging fruit and you can go from there.

Part of the UAE deal is everyone in the UAE getting ChatGPT Plus for free. The deal is otherwise so big that this is almost a throwaway. In theory, buying everyone there a subscription would cost $2.5 billion a year, but the cost to provide it will be dramatically lower than that and it is great marketing. o3 estimates $100 million a year, Opus thinks more like $250 million, with about $50 million of both being lost revenue.

The ‘original sin’ of the internet was advertising. Everything being based on ads forced maximization for engagement and various toxic dynamics, and also people had to view a lot of ads. Yes, it is the natural way to monetize human attention if we can’t charge money for things, microtransactions weren’t logistically viable yet and people do love free, so we didn’t really have a choice, but the incentives it creates really suck. Which is why, as per Ben Thompson, most of the ad-supported parts of the web suck except for the fact that they are often open rather than being walled gardens.

Micropayments are now logistically viable without fees eating you alive. Ben Thompson argues for use of stablecoins. That would work, but as usual for crypto, I say a normal database would probably work better. Either way, I do think payments are the future here. A website costs money to run, and the AIs don’t create ad revenue, so you can’t let unlimited AIs access it for free once they are too big a percentage of traffic, and you want to redesign the web without the ads at that point.

I continue to think that a mega subscription is The Way for human viewing. Rather than pay per view, which feels bad, you pay for viewing in general, then the views are incremented, and the money is distributed based on who was viewed. For AI viewing? Yeah, direct microtransactions.

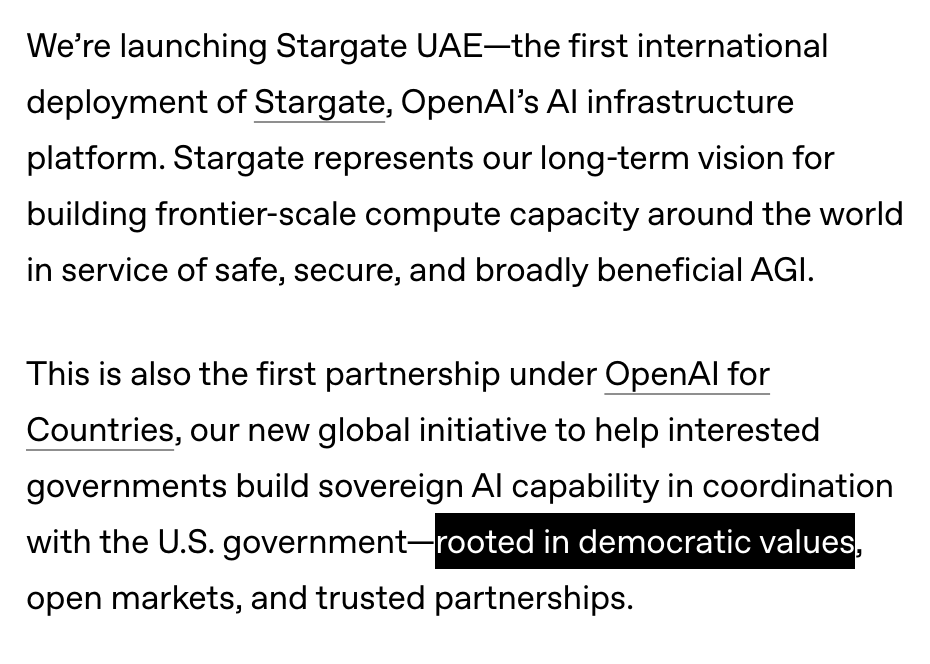

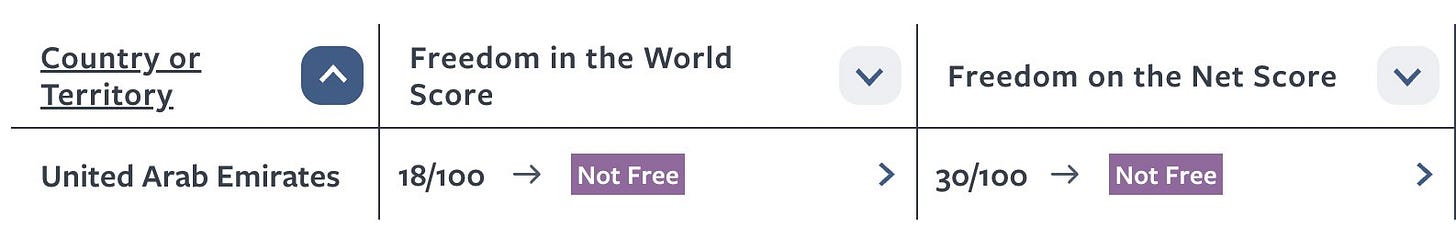

OpenAI announces Stargate UAE. Which, I mean, of course they will if given the opportunity, and one wonders how much of previous Stargate funding got shifted. I get why they would do this if the government lets them, but we could call this what is it. Or we could create the Wowie Moment of the Week:

Helen Toner: What a joke.

Matthew Yglesias: 🤔🤔🤔

Peter Wildeford: OpenAI says they want to work with democracies. The UAE is not a democracy.

I think that the UAE deals are likely good but we should be clear about who we are making deals with. Words matter.

Zac Hill: “Rooted in despotic values” just, you know, doesn’t parse as well

Getting paid $35k to set up ‘an internal ChatGPT’ at a law firm, using Llama 3 70B, which seems like a truly awful choice but hey if they’re paying. And they’re paying.

Mace: I get DMs often on Reddit from local PI law firms willing to shell out cash to create LLM agents for their practices, just because I sort-of know what I’m talking about in the legal tech subreddit. There’s a boat of cash out there looking for this.

Alas, you probably won’t get paid more if you provide a good solution instead.

Nvidia keeps on pleading how it is facing such stiff competition, how its market share is so vital to everything and how we must let them sell chips to China or else. They were at it again as they reported earnings on Wednesday, claiming Huawei’s technology is comparable to an H200 and the Chinese have made huge progress this past year, with this idea that ‘without access to American technology, the availability of Chinese technology will fill the market’ as if the Chinese and Nvidia aren’t both going to sell every chip they can make either way.

Simeon: Jensen is one of the rare CEOs in business with incentives to overstate the strength of his competitors. Interesting experiment.

Nvidia complains quite a lot, and every time they do the stock drops, and yet:

Eric Jhonsa: Morgan Stanley on $NVDA: “Every hyperscaler has reported unanticipated strong token growth…literally everyone we talk to in the space is telling us that they have been surprised by inference demand, and there is a scramble to add GPUs.”

In the WSJ Aaron Ginn reiterates the standard Case for Exporting American AI, as in American AI chips to the UAE and KSA.

Aaron Gunn: The only remaining option is alignment. If the U.S. can’t control the distribution of AI infrastructure, it must influence who owns it and what it’s built on. The contest is now one of trust, leverage and market preference.

…

The U.S. should impose tariffs on Chinese GPU imports, establish a global registry of firms that use Huawei AI infrastructure, and implement a clear data-sovereignty standard. U.S. data must run on U.S. chips. Data centers or AI firms that choose Huawei over Nvidia should be flagged or blacklisted. A trusted AI ecosystem requires enforceable rules that reward those who bet on the U.S. and raise costs for those who don’t.

China is already tracking which data centers purchase Nvidia versus Huawei and tying regulatory approvals to those decisions. This isn’t a battle between brands; it’s a contest between nations.

Once again, we have this bizarre attachment to who built the chip as opposed to who owns and runs the chip. Compute is compute, unless you think the chip has been compromised and has some sort of backdoor or something?

There is another big, very false assumption here: That we don’t have a say in where the compute ends up, all that we can control is how many Nvidia chips go where versus who buys Huawei, and it’s a battle of market share.

But that’s exactly backwards. For the purposes of these questions (you can influence TSMC to change this, and we should do that far more than we do) there is an effectively fixed supply, and a shortage, of both Nvidia and Huawei chips.

Putting that all together, Nvidia is reporting earnings while dealing with all of these export controls and being shut of China, and…

Ian King: Nvidia Eases Concerns About China With Upbeat Sales Forecast.

Nvidia Corp. Chief Executive Officer Jensen Huang soothed investor fears about a China slowdown by delivering a solid sales forecast, saying that the AI computing market is still poised for “exponential growth.”

The company expects revenue of about $45 billion in the second fiscal quarter, which runs through July. New export restrictions will cost Nvidia about $8 billion in Chinese revenue during the period, but the forecast still met analysts’ estimates. That helped propel the shares about 5.4% in premarket trading on Thursday.

The outlook shows that Nvidia is ramping up production of Blackwell, its latest semiconductor design.

…

“Losing access to the China AI accelerator market, which we believe will grow to nearly $50 billion, would have a material adverse impact on our business going forward and benefit our foreign competitors in China and worldwide,” [Nvidia CEO Jensen] said.

…

Nvidia accounts for about 90% of the market for AI accelerator chips, an area that’s proven extremely lucrative. This fiscal year, the company will near $200 billion in annual sales, up from $27 billion just two years ago.

I notice how what matters for Nvidia’s profits is not demand side issues or its access to markets, it’s the ability to create supply. Also how almost all the demand is in the West, they already have $200 billion in annual sales with no limit in sight and they believe China’s market ‘will grow to’ $50 billion.

Nvidia keeps harping on how it must be allowed to give away our biggest advantage, our edge in compute, to China, directly, in exchange for what in context is a trivial amount of money, rather than trying to forge a partnership with America and arguing that there are strategic reasons to do things like the UAE deal, where reasonable people can disagree on where the line must be drawn.

We should treat Nvidia accordingly.

Also, did you hear the one where Elon Musk threatened to get Trump to block the UAE deal unless his own company xAI was included? xAI made it into the short list of approved companies, although there’s no good reason it shouldn’t be (other than their atrocious track records on both safety and capability, but hey).

Rebecca Ballhaus: Elon Musk worked privately to derail the OpenAI deal announced in Abu Dhabi last week if it didn’t include his own AI startup, at one point telling officials in the UAE that there was no chance of Trump signing off unless his company was included.

Aaron Reichlin-Melnick: This is extraordinary levels of corruption at the highest levels of government, and yet we’re all just going on like normal. This is the stuff of impeachment and criminal charges in any well-run country.

Seth Burn: It’s a league-average level of corruption these days.

Casey Handmer asks, why is AI progress so even between the major labs? That is indeed a much better question than its inverse. My guess is that this is because the best AIs aren’t yet that big a relative accelerant, and that training compute limitations don’t bind as hard you might think quite yet, the biggest training runs aren’t out of reach for any of the majors, and the labs are copying each other’s algorithms and ideas because people switch labs and everything leaks, which for now no one is trying that hard to stop.

And also I think there’s some luck involved, in the sense that the ‘most proportionally cracked’ teams (DeepSeek and Anthropic) have less compute and other resources, whereas Google has many advantages and should be crushing everyone but is fumbling the ball in all sorts of ways. It didn’t have to go that way. But I do agree that so far things have been closer than one would have expected.

I do not think this is a good new target:

Sam Altman: i think we should stop arguing about what year AGI will arrive and start arguing about what year the first self-replicating spaceship will take off.

I mean it’s a cool question to think about, but it’s not decision relevant except insofar as it predicts when we get other things. I presume Altman’s point is that AGI is not well defined, but yes when the AIs reach various capability thresholds well below self-replicating spaceship is far more decision relevant. And of course the best question is, how are we going to handle those new highly capable AIs, for which knowing the timeline is indeed highly useful but that’s the main reason why we should care so much about the answer.

Oh, it’s on.

David Holz: the biggest competition for VR is just R (reality) and when you’re competing in an mature market you really need to make sure your product is 100x better in *someway.

I mean, it is way better in the important way that you don’t have to leave the house. I’m not worried about finding differentiation, or product-market fit, once it gets good enough to R in other ways. But yes, it’s tough competition. The resolution and frame rates on R are fantastic, and it has a full five senses.

xjdr (in the same post as previously) notes ways in which open models are falling far behind: They are bad at long context, at vision, heavy RL and polish, and are wildly under parameterized. I don’t think I’d say under parameterized so much as their niche is distillation and efficiency, making the most of limited resources. r1 struck at exactly the right time when one could invest very few resources and still get within striking distance, and that’s steadily going to get harder as we keep scaling. OpenAI can go from o1→o3 by essentially dumping in more resources, this likely keeps going into o4, Opus is similar, and it’s hard to match that on a tight budget.

Dario Amodei and Anthropic have often been deeply disappointing in terms of their policy advocacy. The argument for this is that they are building credibility and political capital for when it is most needed and valuable. And indeed, we have a clear example of Dario speaking up at a critical moment, and not mincing his words:

Sean: I’ve been critical of some of Amodei’s positions in the past, and I expect I will be in future, so I want to give credit where due here: it’s REALLY good to see him speak up about this (and unprompted).

Kyle Robinson: here’s what @DarioAmodei said about President Trump’s megabill that would ban state-level AI regulation for 10 years.

Dario Amodei: If you’re driving the car, it’s one thing to say ‘we don’t have to drive with the steering wheel now.’ It’s another thing to say ‘we’re going to rip out the steering wheel, and we can’t put it back for 10 years.’

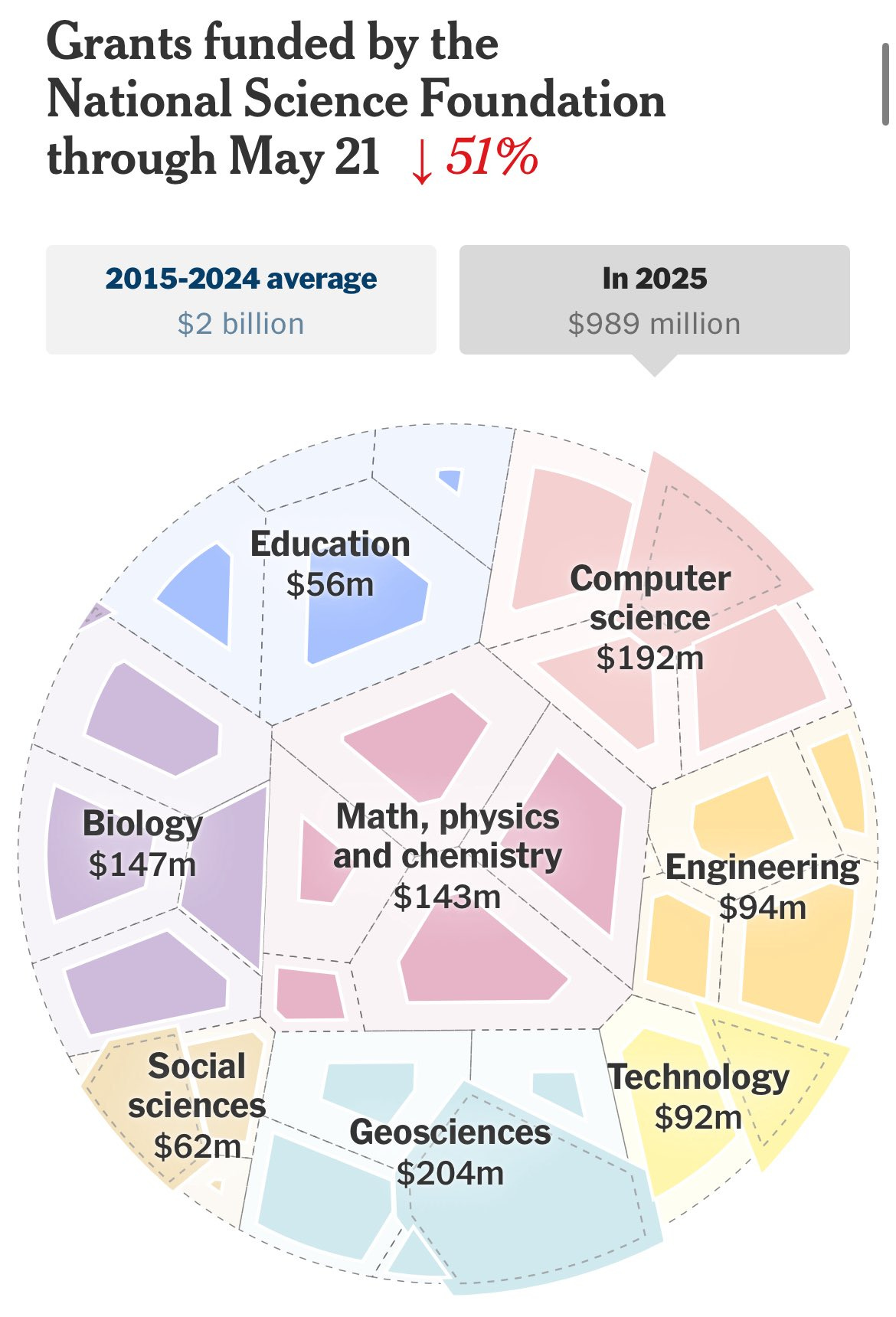

How can I take your insistence that you are focused on ‘beating China,’ in AI or otherwise, seriously, if you’re dramatically cutting US STEM research funding?

Zac Hill: I don’t understand why so many rhetorically-tough-on-China people are so utterly disinterested in, mechanically, how to be tough on China.

Hunter: Cutting US STEM funding in half is exactly what you’d do if you wanted the US to lose to China

One of our related top priorities appears to be a War on Harvard? And we are suspending all new student visas?

Helen Toner: Apparently still needs to be said:

If we’re trying to compete with China in advanced tech, this is *insane*.

Even if this specific pause doesn’t last long, every anti-international-student policy deters more top talent from choosing the US in years to come. Irreversible damage.

Matt Mittelsteadt: People remember restrictions, but miss reversals. Even if we walk this back for *yearsparents will be telling their kids they “heard the U.S. isn’t accepting international students anymore.” Even those who *areinformed won’t want to risk losing status if they come.

Matt’s statement seems especially on point. This will be all be huge mark against trying to go to school in America or pursuing a career in research in academia, including for Americans, for a long time, even if the rules are repealed. We’re actively revoking visas from Chinese students while we can’t even ban TikTok.

It’s madness. I get that while trying to set AI policy, you can plausibly say ‘it’s not my department’ to this and many other things. But at some point that excuse rings hollow, if you’re not at least raising the concern, and especially if you are toeing the line on so many such self-owns, as David Sacks often does.

Indeed, David Sacks is one of the hosts of the All-In Podcast, where Trump very specifically and at their suggestion promised that he would let the best and brightest come and stay here, to staple a green card to diplomas. Are you going to say anything?

Meanwhile, suppose that instead of making a big point to say you are ‘pro AI’ and ‘pro innovation,’ and rather than using this as an excuse to ignore any and all downside risks of all kinds and to ink gigantic deals that make various people money, you instead actually wanted to be ‘pro AI’ for real in the sense of using it to improve our lives? What are the actual high leverage points?

The most obvious one, even ignoring the costs of the actual downside risks themselves and also the practical problems, would still be ‘invest in state capacity to understand it, and in alignment, security and safety work to ensure we have the confidence and ability to deploy it where it matters most,’ but let’s move past that.

Matthew Yglesias points out that what you’d also importantly want to do is deal with the practical problems raised by AI, especially if this is indeed what JD Vance and David Sacks seem to think it is, an ‘ordinary economic transformation’ that will ‘because of reasons’ only provide so many productivity gains and fail to be far more transformative than that.

You need to ask, what are the actual practical barriers to diffusion and getting the most valuable uses out of AI? And then work to fix them. You need to ask, what will AI disrupt, including in the jobs and tax bases? And work to address those.

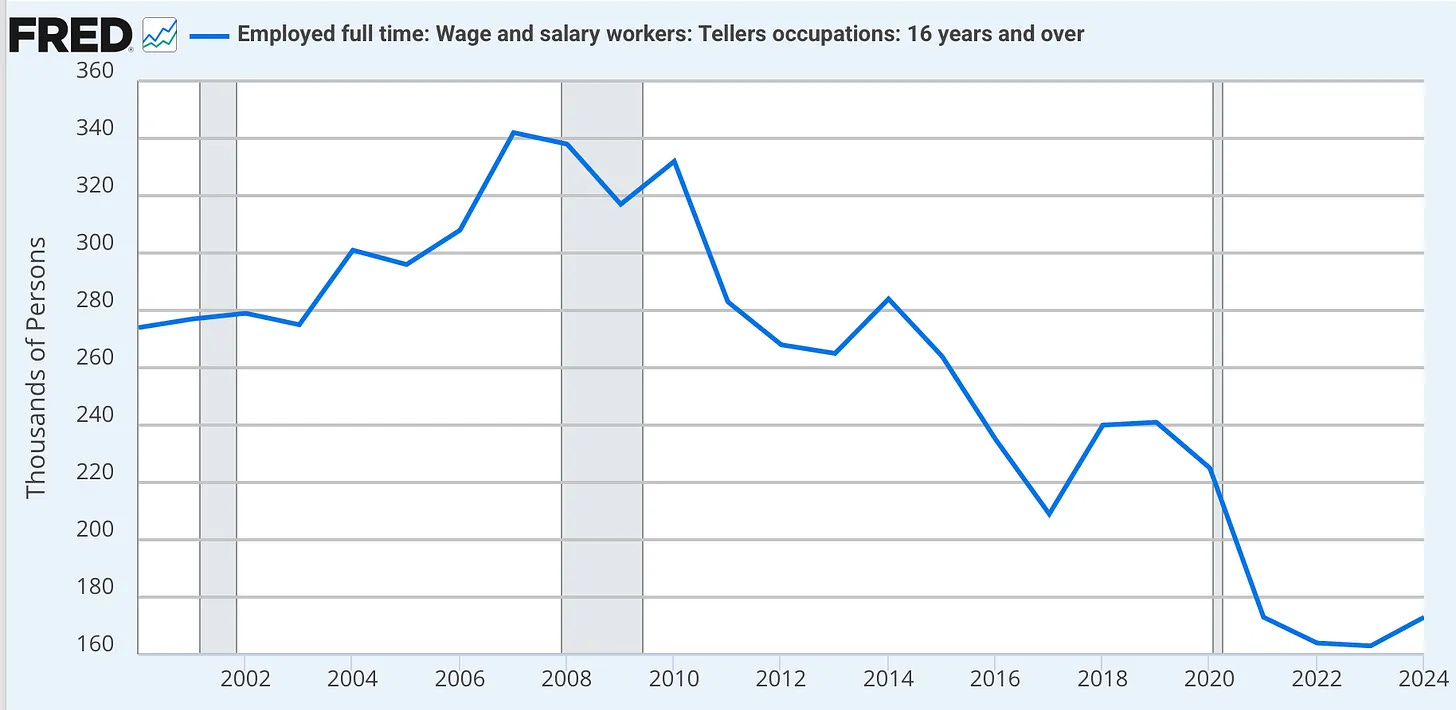

I especially loved what Yglesias said about this pull quote:

JD Vance: So, one, on the obsolescence point, I think the history of tech and innovation is that while it does cause job disruptions, it more often facilitates human productivity as opposed to replacing human workers. And the example I always give is the bank teller in the 1970s. There were very stark predictions of thousands, hundreds of thousands of bank tellers going out of a job. Poverty and immiseration.

What actually happens is we have more bank tellers today than we did when the A.T.M. was created, but they’re doing slightly different work. More productive. They have pretty good wages relative to other folks in the economy.

Matt Yglesias: Vance, talking like a VC rather than like a politician from Ohio, just says that productivity is good — an answer he would roast someone for offering on trade.

Bingo. Can you imagine someone talking about automated or outsourced manufacturing jobs like this in a debate with JD Vance, saying that the increased productivity is good? How he would react? As Matthew points out, pointing to abstractions about productivity doesn’t address problems with for example the American car industry.

More to the point: If you’re worried about outsourcing jobs to other countries or immigrants coming in, and these things taking away good American jobs, but you’re not worried about allocating those jobs to AIs taking away good American jobs, what’s the difference? All of them are examples of innovation and productivity and have almost identical underlying mechanisms from the perspective of American workers.

I will happily accept ‘trade and comparative advantage and specialization and ordinary previous automation and bringing in hard workers who produce more than they cost to employ and pay their taxes’ are all good, actually, in which case we largely agree but have a real physical disagreement about future AI capabilities and how that maps to employment and also our ability to steer and control the future and survive, and for only moderate levels of AI capability I would essentially be onboard.

Or I will accept, ‘no these things are only good insofar as they improve the lived experiences of hard working American citizens’ in which case I disagree but it’s a coherent position, so fine, stop talking about how all innovation is always good.

Also this example happens to be a trap:

Matt Yglesias: One thing about this is that while bank teller employment did continue to increase for years after the invention of the ATM, it peaked in 2007 and has fallen by about 50 percent since then. I would say this mostly shows that it’s hard to predict the timing of technological transitions more than that the forecasts were totally off base.

(Note the y-axis does not start at zero, there are still a lot of bank tellers because ATMs can’t do a lot of what tellers do. Not yet.)

That is indeed what I predict as the AI pattern: That early AI will increase employment because of ‘shadow jobs,’ where there is pent up labor demand that previously wasn’t worth meeting, but now is worth it. In this sense the ‘true unemployment equilibrium rate’ is something like negative 30%. But then, the AI starts taking both the current and shadow jobs faster, and once we ‘use up’ the shadow jobs buffer unemployment suddenly starts taking off after a delay.

However, this from Matthew strikes me as a dumb concern:

Conor Sen: You can be worried about mass AI-driven unemployment or you can be worried about budget deficits, debt/GDP, and high interest rates, but you can’t be worried about both. 20% youth unemployment gets mortgage rates back into the 4’s.

Matthew Yglesias: I’m concerned that if AI shifts economic value from labor to capital, this drastically erodes the payroll tax base that funds Social Security and Medicare even though it should be making it easier to support retirees.

There’s a lot of finicky details about taxes, budgets, and the welfare state that can’t be addressed at the level of abstraction I normally hear from AI practitioners and VCs.

Money is fungible. It’s kind of stupid that we have an ‘income tax rate’ and then a ‘medicare tax’ on top of it that we pretend isn’t part of the income tax. And it’s a nice little fiction that payroll taxes pay for social security benefits. Yes, technically this could make the Social Security fund ‘insolvent’ or whatever, but then you ignore that and write the checks anyway and nothing happens. Yes, perhaps Congress would have to authorize a shift in what pays for what, but so what, they can do that later.

Tracy Alloway has a principle that any problem you can solve with money isn’t that big of a problem. That’s even more true when considering future problems in a world with large productivity gains from AI.

In Lawfare Media, Cullen O’Keefe and Ketan Ramakrishnan make the case that before allowing widespread AI adaptation that involves government power, we must ensure AI agents must follow the law, and refuse any unlawful requests. This would be a rather silly request to make of a pencil, a phone, a web browser or a gun, so the question is at what point AI starts to hit different, and is no longer a mere tool. They suggest this happens once AI become ‘legal actors,’ especially within government. At that point, the authors argue, ‘do what the user wants’ no longer cuts it. This is another example of the fact that you can’t (or would not be wise to, and likely won’t be allowed to!) deploy what you can’t align and secure.

On chip smuggling, yeah, there’s a lot of chip smuggling going on.

Divyansh Kaushik: Arguing GPUs can’t be smuggled because they won’t fit in a briefcase is a bit like claiming Iran won’t get centrifuges because they’re too heavy.

Unrelatedly, here are warehouses in 🇨🇳 advertising H100, H200, & B200 for sale on Douyin. Turns out carry-on limits don’t apply here.

I personally think remote access is a bigger concern than transshipment (given the scale). But if it’s a concern, then I think there’s a very nuanced debate to be had on what reasonable security measures can/should be put in place.

Big fan of the security requirements in the Microsoft-G42 IGAA. There’s more that can be done, of course, but any agreement should build on that as a baseline.

Peter Wildeford: Fun fact: last year smuggled American chips made up somewhere between one-tenth and one-half of China’s AI model training capacity.

The EU is considering pausing the EU AI Act. I hope that if they want to do that they at least use it as a bargaining chip in tariff negotiations. The EU AI Act is dark and full of terrors, highly painful to even read (sorry that the post on it was never finished, but I’m still sane, so there’s that) and in many ways terrible law, so even though there are some very good things in it I can’t be too torn up.

Last week Nadella sat down with Cheung, which I’ve now had time to listen to. Nadella is very bullish on both agents and on their short term employment effects, as tools enable more knowledge work with plenty of demand out there, which seems right. I don’t think he is thinking ahead to longer term effects once the agents ‘turn the corner’ away from being compliments towards being substitutes.

Microsoft CTO Kevin Scott goes on Decoder. One cool thing here is the idea that MCP (Model Context Protocol) can condition access on the user’s identity, including their subscription status. So that means in the future any AI using MCP would plausibly then be able to freely search and have permission to fully reproduce and transform (!?) any content. This seems great, and a huge incentive to actually subscribe, especially to things like newspapers or substacks but also to tools and services.

Steve Hsu interviews Zihan Wang, a DeepSeek alumnus now at Northwestern University. If we were wise we’d be stealing as many such alums as we could.

Eliezer Yudkowsky speaks to Robinson Erhardt for most of three hours.

Eliezer Yudkowsky: Eliezer Yudkowsky says the paperclip maximizer was never about paperclips.

It was about an AI that prefers certain physical states — tiny molecular spirals, not factories.

Not misunderstood goals. Just alien reasoning we’ll never access.

“We have no ability to build an AI to want paperclips!”

Tyler Cowen on the economics of artificial intelligence.

Originally from April: Owain Evans on Emergent Misalignment (13 minutes).

Anthony Aguire and MIRI CEO Malo Bourgon on Win-Win with Liv Boeree.

Sahil Bloom is worried about AI blackmail, worries no one in the space has an incentive to think deeply about this, calls for humanity-wide governance.

It’s amazing how often people will, when exposed to one specific (real) aspect of the dangers of highly capable future AIs, realize things are about to get super weird and dangerous, (usually locally correctly!) freak out, and suddenly care and often also start thinking well about what it would take to solve the problem.

He also has this great line:

Sahil Bloom: Someday we will long for the good old days where you got blackmailed by other humans.

And he does notice other issues too:

Sahil Bloom: I also love how we were like:

“This model marks a huge step forward in the capability to enable production of renegade nuclear and biological weapons.”

And everyone was just like yep seems fine lol

It’s worse than that, everyone didn’t even notice that one, let alone flinch. Aside from a few people who scrutinized the model card and are holding Anthropic to the standard of ‘will your actions actually be good enough do the job, reality does not grade on a curve, I don’t care that you got the high score’ and realizing the answer looks like no (e.g. Simeon, David Manheim)

One report from the tabletop exercise version of AI 2027.

A cool thread illustrates that if we are trying to figure things out, it is useful to keep ‘two sets of books’ of probabilistic beliefs.

Rob Bensinger: Hinton’s all-things-considered view is presumably 10-20%, but his inside view is what people should usually be reporting on (and what he should be emphasizing in public communication). Otherwise we’ll likely double-count evidence and get locked in to whatever view is most common.

Or worse, we’ll get locked into whatever view people guess is most common. If people don’t report their inside views, we never actually get to find out what view is most common! We just get stuck in a weird, ungrounded funhouse mirror image of what people think people think.

When you’re a leading expert (even if it’s a really hard area to have expertise in), a better way to express this to journalists, policymakers, etc., is “My personal view is the probability is 50+%, but the average view of my peers is probably more like 10%.”

It would be highly useful if we could convince people’s p(doom) to indeed use a slash line and list two numbers, where the first is the inside view and the second is the outside view after updating that others disagree with for reasons you don’t understand or you don’t agree with. So Hinton might say e.g. (60%?)/15%.

Another useful set of two numbers is a range where you’d bet (wherever the best odds were available) if the odds were outside your range. I did this all the time as a gambler. If your p(doom) inside view was 50%, you might reasonably say you would buy at 25% and sell at 75%, and this would help inform others of your view in a different way.

President of Singapore gives a generally good speech on AI, racing to AGI and the need for safety at Asia-Tech-X-Singapore, with many good observations.

Seán Ó hÉigeartaigh: Some great lines in this speech from Singapore’s president:

“our understanding of AI in particular is being far outpaced by the rate at which AI is advancing.”

“The second observation is that, more than in any previous wave of technological innovation, we face both huge upsides and downsides in the AI revolution.”

“there are inherent tensions between the interests and goals of the leading actors in AI and the interests of society at large. There are inherent tensions, and I don’t think it’s because they are mal-intentioned. It is in the nature of the incentives they have”

“The seven or eight leading companies in the AI space, are all in a race to be the first to develop artificial general intelligence (AGI), because they believe the gains to getting there first are significant.”

“And in the race to get there first, speed of advance in AI models is taking precedence over safety.”

“there’s an inherent tension between the race to be first in the competition to achieve AGI or superintelligence, and building guardrails that ensure AI safety. Likewise, the incentives are skewed if we leave AI development to be shaped by geopolitical rivalry”

“We can’t leave it to the future to see how much bad actually comes out of the AI race.”

The leading corporates are not evil. But they need rules and transparency so that they all play the game, and we don’t get free riders. Governments must therefore be part of the game. And civil society can be extremely helpful in providing the ethical guardrails.”

& nice shoutout to the Singapore Conference: “We had a very good conference in Singapore just recently – the Singapore Conference on AI – amongst the scientists and technicians. They developed a consensus on global AI safety research priorities. A good example of what it takes.”

But then, although there are also some good and necessary ideas, he doesn’t draw the right conclusions about what to centrally do about it. Instead of trying to stop or steer this race, he suggests we ‘focus efforts on encouraging innovation and regulating [AI’s] use in the sectors where it can yield the biggest benefits.’ That’s actually backwards. You want to avoid overly regulating the places you can get big benefits, and focus your interventions at the model layer and on the places with big downsides. It’s frustrating to see even those who realize a lot of the right things still fall back on the same wishcasting, complete with talk about securing everyone ‘good jobs.’

The Last Invention is an extensive website by Alex Brogan offering one perspective on the intelligence explosion and existential risk. It seems like a reasonably robust resource for people looking for an intro into these topics, but not people already up to speed, and not people already looking to be skeptical, who it seems unlikely to convince.

Seb Krier attempts to disambiguate different ‘challenges to safety,’ as in objections to the need to take the challenge of AI safety seriously.