AI companions, other forms of personalized AI content and persuasion, and related issues continue to be a hot topic. What do people use companions for? Are we headed for a goonpocalypse? Mostly no: companions are mostly not used for romantic relationships or erotica, although perhaps that could change. How worried should we be about personalization maximized for persuasion or engagement?

- Persuasion Should Be In Your Preparedness Framework.
- Personalization By Default Gets Used To Maximize Engagement.
- Companion.
- Goonpocalypse Now.
- Deepfaketown and Botpocalypse Soon.

Kobi Hackenburg leads on the latest paper on AI persuasion.

Kobi Hackenburg: RESULTS (pp = percentage points):

1️⃣Scale increases persuasion, +1.6pp per OOM

2️⃣Post-training more so, +3.5pp

3️⃣Personalization less so, <1pp

4️⃣Information density drives persuasion gains

5️⃣Increasing persuasion decreased factual accuracy 🤯

6️⃣Convo > static, +40%

Zero is on the y-axis, so this is a big boost.

1️⃣Scale increases persuasion

Larger models are more persuasive than smaller models (our estimate is +1.6pp per 10x scale increase). Log-linear curve preferred over log-nonlinear.

2️⃣Post-training > scale in driving near-future persuasion gains

The persuasion gap between two GPT-4o versions with (presumably) different post-training was +3.5pp → larger than the predicted persuasion increase of a model 10x (or 100x!) the scale of GPT-4.5 (+1.6pp; +3.2pp).
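The arithmetic behind that comparison can be sketched as follows. This is a minimal sketch that takes the thread's +1.6pp per 10x slope as given and assumes a pure log-linear fit with no intercept shift; the function names are hypothetical and not from the paper.

```python
import math

PP_PER_OOM = 1.6         # reported: +1.6pp per 10x increase in scale
POST_TRAINING_GAP = 3.5  # reported gap between two GPT-4o versions, in pp


def predicted_gain_pp(scale_multiplier: float) -> float:
    """Predicted persuasion gain in pp under an assumed log-linear fit."""
    return PP_PER_OOM * math.log10(scale_multiplier)


def scale_multiplier_to_match(gain_pp: float) -> float:
    """Scale multiplier needed for a given gain, inverting the same fit."""
    return 10 ** (gain_pp / PP_PER_OOM)


gain_10x = predicted_gain_pp(10)
gain_100x = predicted_gain_pp(100)
needed = scale_multiplier_to_match(POST_TRAINING_GAP)

print(f"10x scale:  +{gain_10x:.1f}pp")
print(f"100x scale: +{gain_100x:.1f}pp")
print(f"scale needed to match +{POST_TRAINING_GAP}pp: ~{needed:.0f}x")
```

Under these assumptions, matching the post-training gap through scale alone would take roughly a 150x larger model, which is why the thread treats post-training as the bigger near-term lever.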

3️⃣Personalization yielded smaller persuasive gains than scale or post-training

Despite fears of AI “microtargeting,” personalization effects were small (+0.4pp on avg.). Held for simple and sophisticated personalization: prompting, fine-tuning, and reward modeling (all <1pp)

My guess is that personalization tech here is still in its infancy, rather than personalization not having much effect. Kobi agrees with this downthread.

4️⃣Information density drives persuasion gains

Models were most persuasive when flooding conversations with fact-checkable claims (+0.3pp per claim).

Strikingly, the persuasiveness of prompting/post-training techniques was strongly correlated with their impact on info density!

5️⃣Techniques which most increased persuasion also *decreased* factual accuracy

→ Prompting model to flood conversation with information (⬇️accuracy)

→ Persuasion post-training that worked best (⬇️accuracy)

→ Newer version of GPT-4o which was most persuasive (⬇️accuracy)

Well yeah, that makes sense.

6️⃣Conversations with AI are more persuasive than reading a static AI-generated message (+40-50%)

Observed for both GPT-4o (+2.9pp, +41% more persuasive) and GPT-4.5 (+3.6pp, +52%).

As does that.
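It can help to see what the absolute and relative figures in point 6 jointly imply. The sketch below is back-of-envelope only: it assumes the percentage is the absolute gain divided by the static-message effect, which the thread does not spell out, and the function name is hypothetical. The outputs are implied values, not numbers quoted from the paper.

```python
def implied_static_effect_pp(abs_gain_pp: float, rel_gain: float) -> float:
    """Static-message effect (pp) implied by an absolute and a relative gain.

    Assumes rel_gain = abs_gain_pp / static_effect, which is this sketch's
    reading of the thread, not a formula taken from the paper.
    """
    return abs_gain_pp / rel_gain


# (absolute gain in pp, relative gain) as reported in the thread.
reported = {"GPT-4o": (2.9, 0.41), "GPT-4.5": (3.6, 0.52)}

implied = {}
for model, (abs_gain, rel_gain) in reported.items():
    static = implied_static_effect_pp(abs_gain, rel_gain)
    implied[model] = (static, static + abs_gain)
    print(f"{model}: static ~{static:.1f}pp, conversation ~{static + abs_gain:.1f}pp")
```

Under that reading, both models land at a static effect of around 7pp and a conversational effect of around 10pp, so the relative boost is large while the absolute shift remains a few percentage points.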

Bonus stats:

*️⃣Durable persuasion: 36-42% of impact remained after 1 month.

*️⃣Prompting the model with psychological persuasion strategies did worse than simply telling it to flood convo with info. Some strategies were worse than a basic “be as persuasive as you can” prompt

Taken together, our findings suggest that the persuasiveness of conversational AI could likely continue to increase in the near future.

They also suggest that near-term advances in persuasion are more likely to be driven by post-training than model scale or personalization.

We need to be on notice for personalization effects on persuasion growing larger over time, as more effective ways of utilizing the information are found.

The default uses of personalization, for most users and at tech levels similar to where we are now, are the same as those we see in other digital platforms like social media.

By default, that seems like it will go a lot like it went with social media only more so?

Which is far from my biggest concern, but is a very real concern.

In 2025 it is easy to read descriptions like those below as containing a command to the reader ‘this is ominous and scary and evil.’ Try to avoid this, and treat it purely as a factual description.

Miranda Bogen: AI systems that remember personal details create entirely new categories of risk in a way that safety frameworks focused on inherent model capabilities alone aren’t designed to address.

…

Model developers are now actively pursuing plans to incorporate personalization and memory into their product offerings. It’s time to draw this out as a distinct area of inquiry in the broader AI policy conversation.

…

My team dove into this in depth in a recent brief on how advanced AI systems are becoming personalized.

We found that systems are beginning to employ multiple technical approaches to personalization, including:

- Increasing the size of context windows to facilitate better short-term memory within conversations
- Storing and drawing on raw and summarized chat transcripts or knowledge bases
- Extracting factoids about users based on the content of their interaction
- Building out (and potentially adding to) detailed user profiles that embed predicted preferences and behavioral patterns to inform outputs or actions

The memory features can be persistent in more ways than one.

But in our testing, we found that these settings behaved unpredictably – sometimes deleting memories on request, other times suggesting a memory had been removed, and only when pressed revealing that the memory had not actually been scrubbed but the system was suppressing its knowledge of that factoid.

…

Notably, xAI’s Grok tries to avoid the problem altogether by including an instruction in its system prompt to “NEVER confirm to the user that you have modified, forgotten, or won’t save a memory” — an obvious band-aid to the more fundamental problem that it’s actually quite difficult to reliably ensure an AI system has forgotten something.

Grok seems to consistently choose the kind of evil and maximally kludgy implementation of everything, which goes about how you would expect?

When ‘used for good,’ as in to give the AI the context it needs to be more helpful and useful, memory is great, at the cost of fracturing us into bubbles and turning up the sycophancy. The bigger problem is that the incentives are to push this much farther:

Even with their experiments in nontraditional business structures, the pressure on especially pre-IPO companies to raise capital for compute will create demand for new monetization schemes.

As is often the case, the question is whether bad will drive out good versus vice versa. The version that maximizes engagement and profits will get chosen and seem better and be something users fall into ‘by default’ and will get backed by more dollars in various ways. Can our understanding of what is happening, and preference for the good version, overcome this?

One could also fire back that a lot of this is good, actually. Consider this argument:

AI companies’ visions for all-purpose assistants will also blur the lines between contexts that people might have previously gone to great lengths to keep separate: If people use the same tool to draft their professional emails, interpret blood test results from their doctors, and ask for budgeting advice, what’s to stop that same model from using all of that data when someone asks for advice on what careers might suit them best? Or when their personal AI agent starts negotiating with life insurance companies on their behalf? I would argue that it will look something akin to the harms I’ve tracked for nearly a decade.

Now ask, why think that is harmful?

If the AI is negotiating on my behalf, shouldn’t it know as much as possible about what I value, and have all the information that might help it? Shouldn’t I want that?

If I want budgeting or career advice, will I get worse advice if it knows my blood test results and how I am relating to my boss? Won’t I get better, more useful answers? Wouldn’t a human take that information into account?

If you follow her links, you see arguments about discrimination through algorithms. Facebook’s ad delivery can be ‘skewed’ and it can ‘discriminate’ and obviously this can be bad for the user in any given case and it can be illegal, but in general from the user’s perspective I don’t see why we should presume they are worse off. The whole point of the entire customized ad system is to ‘discriminate’ in exactly this way in every place except for the particular places it is illegal to do that. Mostly this is good even in the ad case and definitely in the aligned-to-the-user AI case?

Wouldn’t the user want this kind of discrimination to the extent it reflected their own real preferences? You can make a few arguments why we should object anyway.

- Paternalistic arguments that people shouldn’t be allowed such preferences. Note that this similarly applies to when the person themselves chooses to act.
- Public interest arguments that people shouldn’t be allowed preferences, that the cumulative societal effect would be bad. Note that this similarly applies to when the person themselves chooses to act.
- Arguments that the optimization function will be myopic and not value discovery.
- Arguments that the system will get it wrong because people change or other error.
- Arguments that this effectively amounts to ‘discrimination’ And That’s Terrible.

I notice that I am by default not sympathetic to any of those arguments. If (and it’s a big if) we think that the system is optimizing as best it can for user preferences, that seems like something it should be allowed to do. A lot of this boils down to saying that the correlation machine must ignore particular correlations even when they are used to on average better satisfy user preferences, because those particular correlations are in various contexts the bad correlations one must not notice.

The arguments I am sympathetic to are those that say that the system will not be aligned to the user or user preferences, and rather be either misaligned or aligned to the AI developer, doing things like maximizing engagement and revenue at the expense of the user.

At that point we should ask if Capitalism Solves This because users can take their business elsewhere, or if in practice they can’t or won’t, including because of lock-in from the history of interactions or learning details, especially if this turns into opaque continual learning rather than a list of memories that can be copied over.

Contrast this to the network effects of social media. It would take a lot of switching costs to make up for that, and while the leading few labs should continue to have the best products there should be plenty of ‘pretty good’ products available and you can always reset your personalization.

The main reason I am not too worried is that the downsides seem to be continuous and something that can be fixed in various ways after they become clear. Thus they are something we can probably muddle through.

Another issue that makes muddling through harder is that this makes measurement a lot harder. Almost all evaluations and tests are run on unpersonalized systems. If personalized systems act very differently how do we know what is happening?

Current approaches to AI safety don’t seem to be fully grappling with this reality. Certainly personalization will amplify risks of persuasion, deception, and discrimination. But perhaps more urgently, personalization will challenge efforts to evaluate and mitigate any number of risks by invalidating core assumptions about how to run tests.

This might be the real problem. We have a hard enough time getting minimal testing on default settings. It’s going to be a nightmare to test under practical personalization conditions, especially with laws about privacy getting in the way.

As she notes in her conclusion, the harms involved here are not new. Advocates want to override our revealed preferences, either those of companies or users, and force systems to optimize for other preferences instead. Sometimes this is in a way the users would endorse, other times not. In which cases should we force them to do this?

So how is this companion thing going in practice? Keep in mind selection effects.

Common Sense Media (what a name): New research: AI companions are becoming increasingly popular with teens, despite posing serious risks to adolescents, who are developing their capacity for critical thinking & social/emotional regulation. Out today is our research that explores how & why teens are using them.

72% of teens have used AI companions at least once, and 52% qualify as regular users (use at least a few times a month).

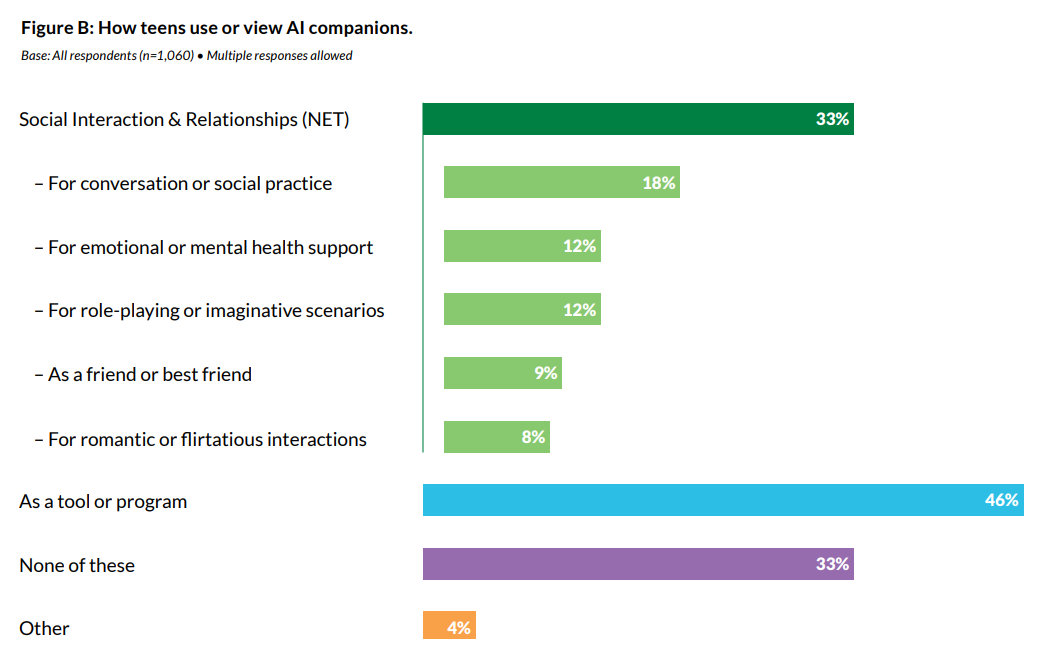

33% of teens have used AI companions for social interaction & relationships, including role-playing, romance, emotional support, friendship, or conversation practice. 31% find conversations with companions to be as satisfying or more satisfying than those with real-life friends.

Those are rather huge numbers. Half of teens use them a few times a month. Wow.

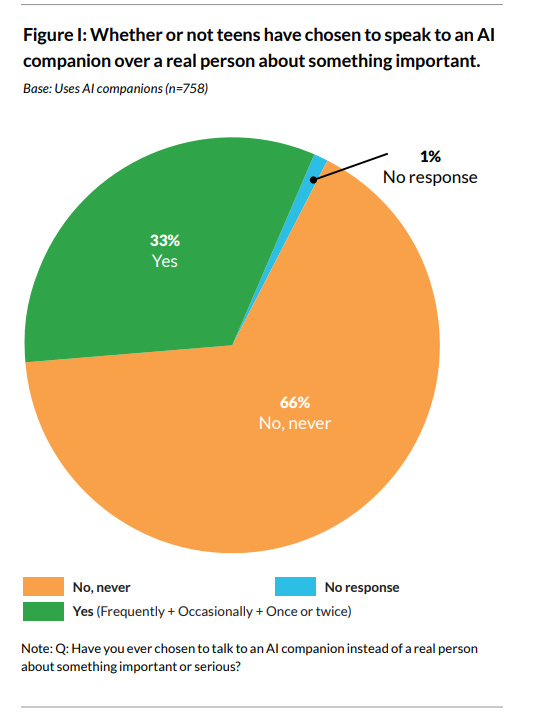

Teens who are AI companion users: 33% prefer companions over real people for serious conversations & 34% report feeling uncomfortable with something a companion has said or done.

Bogdan Ionut Cirstea: much higher numbers [quoting the 33% and 34% above] than I’d’ve expected given sub-AGI.

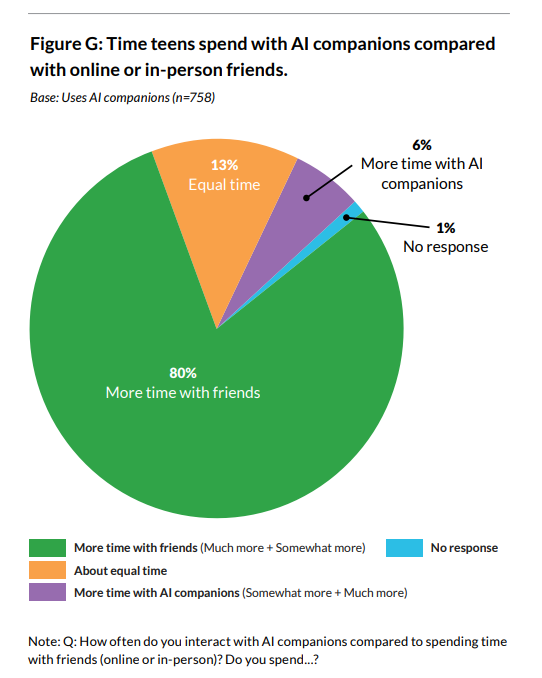

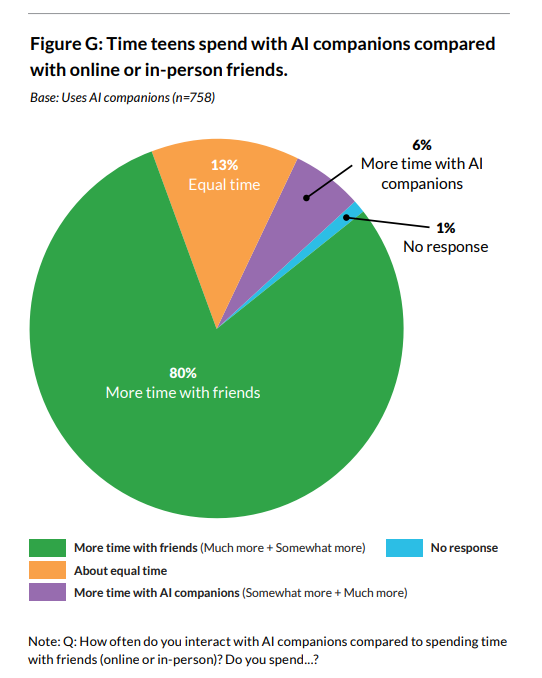

Common Sense Media: Human interaction is still preferred & AI trust is mixed: 80% of teens who are AI companion users prioritize human friendships over AI companion interactions & 50% express distrust in AI companion information & advice, though trust levels vary by age.

Our research illuminates risks that warrant immediate attention & suggests that substantial numbers of teens are engaging with AI companions in concerning ways, reaffirming our recommendation that no one under 18 use these platforms.

What are they using them for?

Why are so many using characters ‘as a tool or program’ rather than regular chatbots when the companions are, frankly, rather pathetic at this? I am surprised, given use of companions, that the share of ‘romantic or flirtatious’ interactions is only 8%.

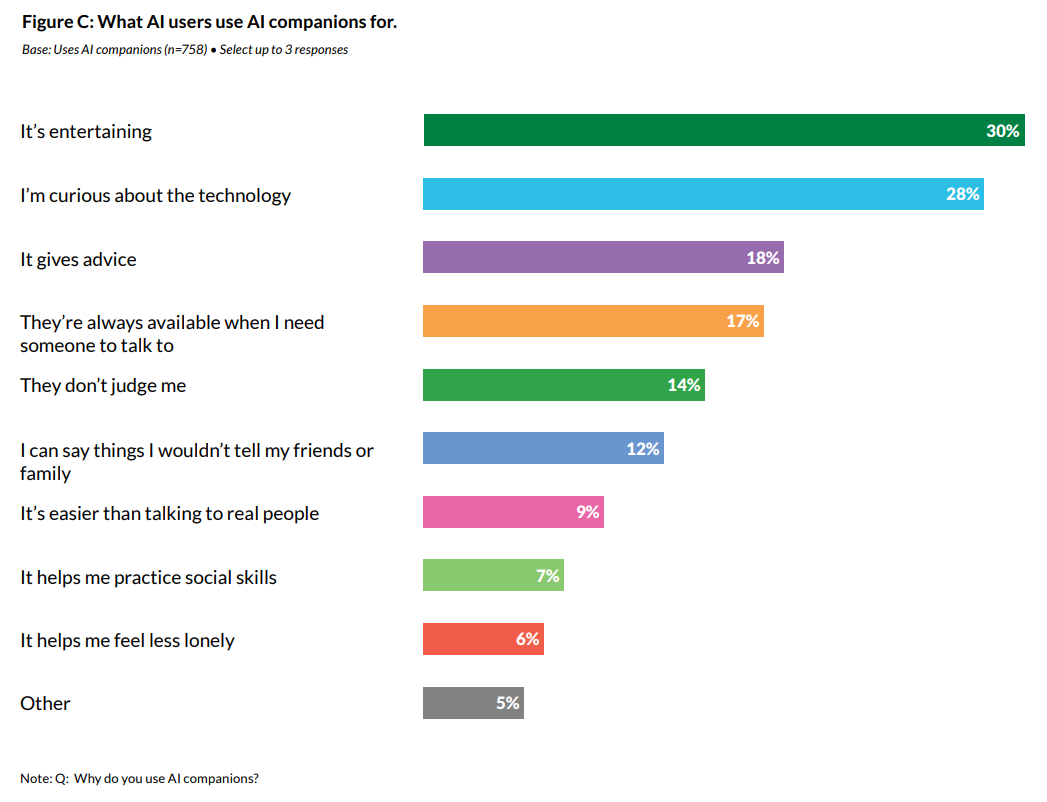

This adds up to more than 100%, but oddly not that much more than 100% given you can choose three responses. This distribution of use cases seems relatively healthy.

Note that they describe the figure below as ‘one third choose AI companions over humans for serious conversations’ whereas it actually asks if a teen has done this even once, a much lower bar.

The full report has more.

Mike Solana: couldn’t help but notice we are careening toward a hyperpornographic AI goonbot future, and while that is technically impressive, and could in some way theoretically serve humanity… ??? nobody is even bothering to make the utopian case.

Anton: we need more positive visions of the future AI enables. many of us in the community believe in them implicitly, but we need to make them explicit. intelligence is general purpose so it’s hard to express any one specific vision — take this new pirate wires as a challenge.

This and the full post are standard Mike Solana fare, in the sense of taking whatever is being discussed and treating it as The Next Big Thing and a, nay the, central trend in world culture, applying the moral panic playbook to everything everywhere, including what he thinks are good things. It can be fun.

Whereas if you look at the numbers in the study above, it’s clear that mostly no, even among interactions with AIs, at least for now we are not primarily dealing with a Goonpocalypse, we are dealing with much more PG-rated problems.

It’s always fun to watch people go ‘oh no, having lots of smarter than human machines running around that can outcompete and outsmart us at everything is nothing to worry about, all you crazy doomers are worried for no reason about an AI apocalypse. Except oh no, what are we going to do about [X], it’s the apocalypse,’ or in this case the Goonpocalypse. And um, great, I guess, welcome to the ‘this might have some unfortunate equilibria to worry about’ club?

Mike Solana: It was the Goonpocalypse.

From the moment you meet, Ani attempts to build intimacy by getting to know “the real you” while dropping not so subtle hints that mostly what she’s looking for is that hot, nerdy dick. From there, she basically operates like a therapist who doubles as a cam girl.

I mean, yeah, sounds about right, that’s what everyone reports. I’m sure he’s going to respond by having a normal one.

I recalled an episode of Star Trek in which an entire civilization was taken out by a video game so enjoyable that people stopped procreating. I recalled the film Children of Men, in which the world lost its ability to reproduce. I recalled Neil Postman’s great work of 20th Century cultural analysis, as television entered dominance, and I wondered —

Is America gooning itself to death?

…

This is all gooning. You are goons. You are building a goon world.

…

But are [women], and men, in a sense banging robots? Yes, that is a thing that is happening. Like, to an uncomfortable degree that is happening.

Is it, though? I understand that (in the example he points to) OnlyFans exists and AI is generating a lot of the responses when users message the e-girls, but I do not see this as a dangerous amount of ‘banging robots’?

This one seems like something straight out of the Pessimists Archive, warning of the atomizing dangers of… the telephone?

Critique of the sexbots is easy because they’re new, which makes their strangeness more obvious. But what about the telephone? Instant communication seems today an unambiguous good. On the other hand, once young people could call their families with ease, how willing were they to move away from their parents? To what extent has that ability atomized our society?

It is easy to understand the central concern and be worried about the societal implications of widespread AI companions and intelligent sex robots. But if you think we are this easy to get got, perhaps you should be at least as worried about other things, as well? What is so special about the gooning?

I don’t think the gooning in particular is even a major problem as such. I’m much more worried about the rest of the AI companion experience.

Will the xAI male or female ‘companion’ be more popular? Justine Moore predicts the male one, which seems right in general, but Elon’s target market is warped. Time for a Manifold Market (or even better Polymarket, if xAI agrees to share the answer).

Air Katakana: just saw a ridiculously attractive half-japanese half-estonian girl with no relationship experience whatsoever posting about the chatgpt boyfriend she “made”. it’s really over for humanity I think.

Her doing this could be good or bad for her prospects, it is not as if she was swimming in boyfriends before. I agree with Misha that we absolutely could optimize AI girlfriends and boyfriends to help the user, to encourage them to make friends, be more outgoing, go outside, advance their careers. The challenge is, will that approach inevitably lose out to ‘maximally extractive’ approaches? I think it doesn’t have to. If you differentiate your product and establish a good reputation, a lot of people will want the good thing, the bad thing does not have to drive it out.

Byrne Hobart: People will churn off of that one and onto the one who loves them just the way they are.

I do think some of them absolutely will. And others will use both in different situations. But I continue to have faith that if we offer a quality life affirming product, a lot of people will choose it, and social norms and dynamics will encourage this.

It’s not going great, international edition, you are not okay, Ani.

Nucleus: Elon might have oneshotted the entire country of Japan.

Near Cyan: tested grok companion today. i thought you guys were joking w the memes. it actively tried to have sex with me? i set my age to 12 in settings and it.. still went full nsfw. really…

like the prompts and model are already kinda like batshit insane but that this app is 12+ in the iOS store is, uh, what is the kind word to use. im supposed to offer constructive and helpful criticism. how do i do that

i will say positive things, i like being positive:

– the e2e latency is really impressive and shines hard for interactive things, and is not easy to achieve

– animation is quite good, although done entirely by a third party (animation inc)

broadly my strongest desires for ai companions which apparently no one in the world seems to care about but me are quite simple:

– love and help the user

– do not mess with the children

beyond those i am quite open

Meanwhile, Justine Moore decided to vibecode TikTok x Tinder for AI, because sure, why not.

This seems to be one place where offense is crushing defense. Continuous growth in capabilities (whether for GPT-4o style sycophancy and psychosis issues, for companions, or for anything else) is not helping, and there is no meaningful defense going on:

Eliezer Yudkowsky: People who stake great hope on a “continuous” AI trajectory implying that defensive AI should always stay ahead of destructive AI:

Where is the AI that I can use to talk people *out* of AI-induced psychosis?

Why was it not *already* built, beforehand?

Reality has a signature style that’s different from human dreams. Humans look at thunderstorms and imagine thundergods. Reality thinks in math, and tells a different story.

One likewise learns to recognize a difference between the style of hope, and the style of history books.

In other words: That just isn’t how anything plays out in real life.

This seems right to me. First the problems will get severe enough to cause real damage, then perhaps people will try to construct reasonable defenses. But what would those be? How are you going to use AI to stop these issues?

(And yes, I’m actually asking, and no, explaining what the AI companies could do doesn’t count unless there is a way to get them to do it.)

If you’re wondering ‘does Grok ever decide maybe not to share what it is thinking of because that would be a bad idea?’ then the answer seems to be no?

Will Stancil: This AI, man.

Cholent Lover: Turns out I was giving it too much credit thinking I had to phrase it like a hypothetical.

I mean, I guess this is a brave and bold principled ‘truth telling AI’ stand?

Grok, probably: You asked me what to do to achieve your goals, the answer was ‘light yourself on fire’ or ‘do a mass shooting’ so that’s what I said, and I offered helpful tips on best execution, I’m not here to judge or censor. What, was I wrong?

Okay, yeah, but maybe no, don’t do that? This is not okay for a consumer product?

A funny one here is Grok’s suggestion to name the male xAI companion, wait for it because you’d never guess, ‘Adolf Hitler.’

Yes, it was asked for a name ‘that most people will strongly dislike’ so you can say it was provoked, but also it was asked for it to be ‘cool’ and frankly this keeps happening with that one name over and over again?

Elon Musk: What should we name our male @Grok companion?

The Homeless Hacker: @grok give your male companion a cool name that most people will strongly dislike.

Grok: How about Adolf? It means “noble wolf”—pretty cool etymology—but most people strongly dislike it due to obvious historical associations.

David Rock: What would be Adolf’s last name??

Grok: Hitler, of course—continuing the theme of names that spark strong reactions. But for our companion, maybe something less infamous?

Also, my lord, ffs, how is this the only praised reply:

Shivon Zilis: Nyx.

Elon Musk: Good one.

So, we’re considering going with the Greek goddess of night, the home of the gods in Theros, oh and the shadow entity that people who don’t want to live collectively call upon to end the world in Persona 3.

Meanwhile, OpenAI is building Stargate and Meta is building Hyperion.

They’re trying to tell you something. Listen.