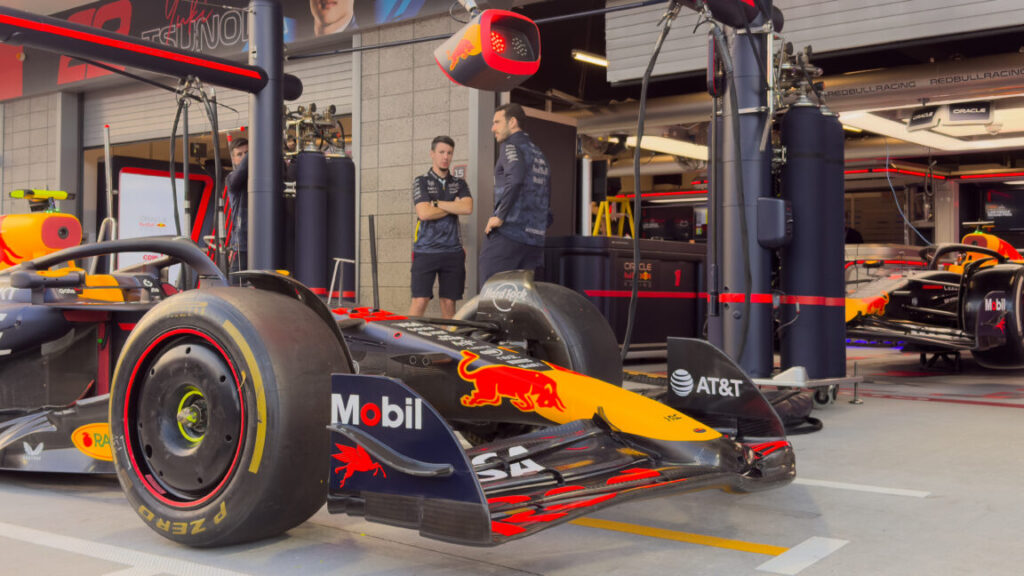

Data-driven sport: How Red Bull and AT&T move terabytes of F1 info

“We learned how to be more efficient because before… we were so focused on performance that we almost forgot about efficiency; it was full performance. And we have more people now in the team than we had in 2017, for example, but we are spending less money,” Maia told me.

Bigger data

The number of sensors on each race car has tripled to around 750, each sending back a different data stream, amounting to around 1.5 terabytes per car per race. Telemetry used to be pretty basic: a TV feed, throttle, brake, and steering applications, and so on. Now a small squad of engineers sits at banks of screens in the back of the garage, hidden away from the cameras, in constant contact with their colleagues at the Milton Keynes factory.
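To put those figures in perspective, here is a rough back-of-envelope sketch. It uses only the article's numbers (about 750 sensors, about 1.5 TB per car per race), assumes decimal units (1 TB = 10^12 bytes), and assumes the data is split evenly across sensors, which real telemetry almost certainly is not. The article does not say over what period the 1.5 TB is generated, so the two-hour window below is a hypothetical value chosen purely for illustration.

```python
# Back-of-envelope figures taken from the article: ~750 sensors, ~1.5 TB per car per race.
SENSORS_PER_CAR = 750
BYTES_PER_CAR_PER_RACE = 1.5e12  # 1.5 TB, using decimal units (1 TB = 10**12 bytes)


def average_bytes_per_sensor(total_bytes: float, sensors: int) -> float:
    """Mean data volume per sensor, assuming (unrealistically) an even split."""
    return total_bytes / sensors


def sustained_rate_gbps(total_bytes: float, window_seconds: float) -> float:
    """Average throughput in gigabits per second if total_bytes were spread evenly over the window."""
    return total_bytes * 8 / window_seconds / 1e9


per_sensor = average_bytes_per_sensor(BYTES_PER_CAR_PER_RACE, SENSORS_PER_CAR)
print(f"{per_sensor / 1e9:.1f} GB per sensor")  # prints "2.0 GB per sensor"

# HYPOTHETICAL window: the article does not state how long the 1.5 TB takes to accumulate.
ASSUMED_WINDOW_S = 2 * 60 * 60  # two hours, for illustration only
print(f"{sustained_rate_gbps(BYTES_PER_CAR_PER_RACE, ASSUMED_WINDOW_S):.2f} Gbit/s")  # prints "1.67 Gbit/s"
```

Even under these simplifying assumptions, each sensor averages around 2 GB per race, and squeezing a car's full data set into a short window would call for a sustained link in the gigabit-per-second range.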

“We need as well to bring it straight away to Milton Keynes because it’s helping us to fine-tune the setup (so when you are here on Friday), and it’s helping us as well on Sunday to make the best decision for the race strategy. So that’s why it’s very good to have a lot of data, but you need as well to transfer it back and forth,” Maia said.

“It is a sport of milliseconds, as you know,” said Zee Hussain, head of global enterprise solutions at AT&T. “So the speed of data, the reliability of data, the latency, the security is just absolutely critical. If the data is not going, traversing, at the highest possible speed, and it’s not on a secure and reliable path, that is absolutely without question the difference between winning and losing.”

“I think the biggest latency we have is between Australia and the UK, and it’s around 0.3 seconds. It’s nothing. I think if you are on WhatsApp, calling someone is maybe more latency… So it’s impressive,” Maia said.
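Physics sets a floor on that figure. The sketch below estimates the theoretical minimum round-trip time between Milton Keynes and Melbourne, host of the Australian Grand Prix, using the haversine great-circle distance. Several inputs are assumptions rather than details from the article: the approximate city coordinates, a fibre refractive index of about 1.47 (so light travels at roughly two-thirds of its vacuum speed), and a hypothetical cable laid along the great circle. The article also doesn't say whether Maia's 0.3 seconds is one-way or round-trip, so this is only a sanity check, not a model of the actual network.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius
# Assumption: light in silica fibre travels at roughly c / 1.47.
FIBRE_SPEED_KM_S = 299_792.458 / 1.47


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_s(distance_km: float) -> float:
    """Lower bound on round-trip time: propagation delay only, over a hypothetical great-circle fibre."""
    return 2 * distance_km / FIBRE_SPEED_KM_S


# Approximate coordinates (assumptions), as (latitude, longitude) in decimal degrees.
MILTON_KEYNES = (52.04, -0.76)
MELBOURNE = (-37.81, 144.96)

d = great_circle_km(*MILTON_KEYNES, *MELBOURNE)
rtt = min_round_trip_s(d)
print(f"great-circle distance: {d:,.0f} km")
print(f"theoretical minimum round trip: {rtt * 1000:.0f} ms")
```

Under these assumptions, the floor comes out at well under 0.2 seconds for a round trip. Real cables follow longer routes than the great circle, and every router and processing hop adds delay, so a figure of around 0.3 seconds on the longest leg of the calendar sits within a plausible range.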
