In honor of my dropping by Inkhaven at Lighthaven in Berkeley this week, I figured it was time for another writing roundup. You can find #1 here, from March 2025.

I’ll be there from the 17th (the day I am publishing this) until the morning of Saturday the 22nd. I am happy to meet people, including for things not directly about writing.

- Table of Contents.
- How I Use AI For Writing These Days.
- Influencing Influence.
- Size Matters.
- Time To Write A Shorter One.
- A Useful Tool.
- A Maligned Tool.
- Neglected Topics.
- The Humanities Don’t Seem Relevant To Writing About Future Humanity?
- Writing Every Day.
- Writing As Deep Work.
- Most Of Your Audience Is Secondhand.
- That’s Funny.
- Fiction Writing Advice.
- Just Say The Thing.
- Cracking the Paywall.

How have I been using AI in my writing?

Directly? With the writing itself? Remarkably little. Almost none.

I am aware that this is not optimal. But at current capability levels, with the prompts and tools I know about, in the context of my writing, AI has consistently proven to have terrible taste and to make awful suggestions, and also to be rather confident about them. This has proven sufficiently annoying that I haven’t found it worth checking with the AIs.

I also worry about AI influence pushing me towards generic slop, pushing me to sounding more like the AIs, and rounding off the edges of things, since every AI I’ve tried this with keeps trying to do all that.

I am sure it does not help that my writing style is very unusual, and basically not in the training data aside from things written by actual me, as far as I can tell.

Sometimes I will quote LLM responses in my writing, always clearly labeled, when it seems useful to point to this kind of ‘social proof’ or sanity check.

The other exception is that if you ask the AI to look for outright errors, especially things like spelling and grammar, it won’t catch everything, but when it does catch something it is usually right. When you ask it to spot errors of fact, it’s not as reliable, but it’s good enough to check the list. I should be making a point of always doing that.

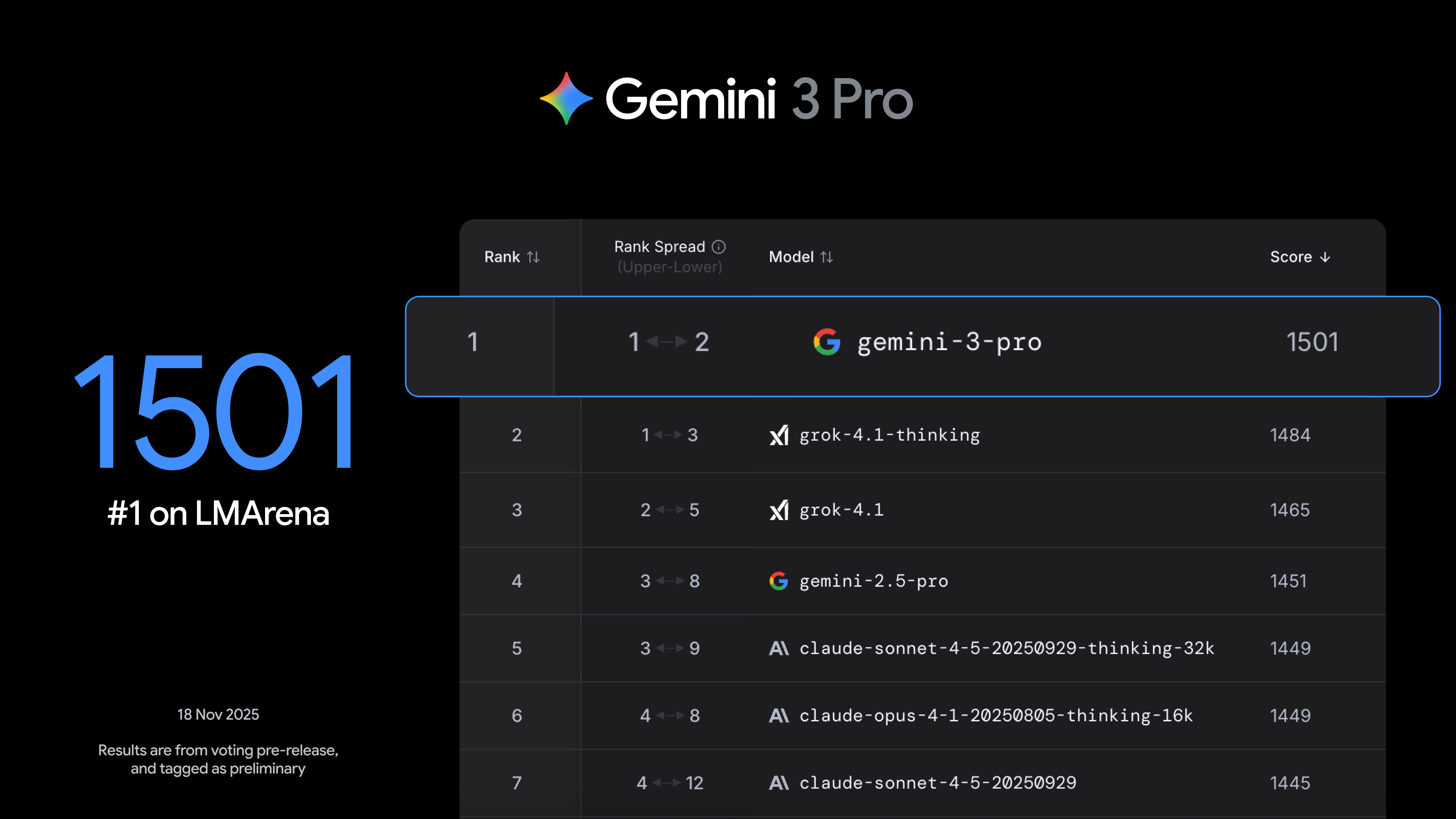

I did the ‘check for errors and other considerations’ thing on this piece in particular with both Sonnet and 5.1-Thinking. This did improve the post but it’s not obvious it improved it enough to be worth the time.

I will also sometimes ask it about a particular line or argument I’m considering, to see if it buys it, but only when what I care about is a typical reaction.

If I was devoting more time to refining and editing, and cared more about marginal improvements there, that would open up more use cases, but I don’t think that’s the right use of time for me on current margins versus training on more data or doing more chain of thought.

Indirectly? I use it a lot more there, and again I could be doing more.

There are some specific things:

- I have a vibe coded Chrome extension that saves me a bunch of trouble, that could be improved a lot with more work. It does things like generate the Table of Contents, crosspost to WordPress, auto-populate many links and quickly edit quotes to fix people’s indifference to things like capitalization.
- I have a GPT called Zvi Archivist that I use to search through my past writing, to check if and when I’ve already covered something and what I’ve said about it.
- I have a transcriber for converting images to text because all the websites I know about that offer to do this for you are basically broken due to gating. This works.

Then there’s things that are the same as what everyone does all the time. I do a lot of fact checking, sanity checking, Fermi estimation, tracking down information or sources, asking for explanations, questioning papers for the things I care about. Using the AI assistant in its classic sense. All of that is a big help and I notice my activation requirement to do this is higher than it should be.

I want this to be true so I’m worried I can’t be objective, but it seems true to me?

Janus: i think that it’s almost always a bad idea to attempt to grow as an influencer on purpose.

you can believe that it would be good if you were to grow, and still you shouldn’t optimize for it.

the only way it goes well is if it happens while you optimize for other things.

More precisely than “you shouldn’t on purpose” what I’m saying is you shouldn’t be spending significant units of optimization on this goal and performing actions you wouldn’t otherwise for this purpose

I am confident that if you optimize primarily for influence, that’s full audience capture, slopification and so on, and you’ve de facto sold your soul. You can in theory turn around and then use that influence to accomplish something worthwhile, but statistically speaking you won’t do that.

Janus: Name a single account that explicitly optimizes for being a bigger influencer / “tries to grow” (instead of just happening as a side effect) and that does more good than harm to the ecosystem and generally has good vibes and interesting content

You probably can’t!

actually, https://x.com/AISafetyMemes is a contender

but i know they’re VERY controversial and I do think they’re playing with fire

i do consider them net positive but this is mostly bc they sometimes have very good taste and maybe cancel out the collateral damage

but WOULD NOT RECOMMEND almost anyone trying this, lol

AISafetyMemes is definitely an example of flying dangerously close to the sun on this, but keeping enough focus and having enough taste to maybe be getting away with it. It’s unclear that the net sign of impact there is positive; there are some very good posts but also some reasons to worry.

No one reads the blog posts, they’re too long, so might as well make them longer?

Visakan Veerasamy: An idea I’ve been toying with and discussed with a couple of friends is the idea that blog posts could and probably should get much longer now that fewer people are reading them.

One of the difficult things about writing a good essay is figuring out what to leave out so it is more manageable for readers.

But on a blog where there is no expectation that anybody reads it, you do not have to leave anything out.

My guess is this is going to end up being a barbell situation like so many other things. If you cut it down, you want to cut it down as much as possible. If you’re going long, then on the margin you’re better off throwing everything in.

I highlight this exactly because it seems backwards to me. I notice that my experience is very much the opposite – when I want to write a good short piece it is MUCH more work per token, and often more total work.

Timothy Lee: I think a big reason that writing a book is such a miserable experience is that the time to write a good piece is more-than-linear in the number of words. A good 2,000-word piece is a lot more than 4x the work of a good 500-word piece.

I assume this continues for longer pieces and a good 100,000 book is a lot more than 50x the work of a good 2,000-word article. Most authors deal with this by cutting corners and turning in books that aren’t very good. And then there’s Robert Caro.

Josh You: I think by “good 2000 word piece” Tim means “a 2000 word piece that has been edited down from a much longer first draft”

Even then. Yes, a tight longer piece requires more structure and planning, but the times I write those 500-800 word pieces it takes forever, because you really do struggle over every word as you try to pack everything into the tiniest possible space.

Writing a 100,000 word book at the precision level of an 800 word thinkpiece would take forever, but also I presume it almost never happens. If it does, that better be your masterpiece or I don’t see why you’d do it.

Dwarkesh Patel is using the Smart Composer Plugin for Obsidian, which he says is basically Cursor for writing, and loves it. Sounds great conditional on using Obsidian, but it is not being actively maintained.

Eric Raymond joins ‘the em-dash debate’ on the side of the em-dash.

Eric Raymond (yes that one): My wacky theory about the em-dash debate:

Pro writers use em-dashes a lot because many of them, possibly without consciously realizing it, have become elocutionary punctuationists.

That is, they’ve fallen into the habit of using punctuation not as grammatical phrase structure markers but as indicators of pauses of varying length in the flow of speech.

The most visible difference you see in people who write in this style that their usage of commas becomes somewhat more fluid — that’s the marker for the shortest pause. But they also reach for less commonly used punctuation marks as indicators of longer pauses of varying length.

Em dash is about the second or third longest pause, only an ellipsis or end-of-sentence period being clearly longer.

Historical note: punctuation marks originally evolved as pause or breathing markers in manuscripts to aid recitation. In the 19th century, after silent reading had become normal, they were reinterpreted by grammarians as phrase structure markers and usage rules became much more rigid.

Really capable writers have been quietly rediscovering elocutionary punctuation ever since.

RETVRN!

I too have been increasingly using punctuation, especially commas, to indicate pauses. I still don’t use em dashes, partly because I almost never want that exact length and style of a pause for whatever reason, and also because my instinct is that you’re trying to do both ‘be technically correct’ and also ‘evoke what you want’ and my brain thinks of the em-dash as technically incorrect.

That’s all true and I never used em-dashes before but who are we kidding, the best reason not to use em-dashes is that people will think you’re using AI. I don’t love that dynamic either, but do you actually want to die on that hill?

Tyler Cowen lists some reasons why he does not cover various topics much. The list resonates with me quite a bit.

- I feel that writing about the topic will make me stupider.
- I believe that you reading more about the topic will make you stupider.
- I believe that performative outrage usually brings low or negative returns. Matt Yglesias has had some good writing on this lately.
- I don’t have anything to add on the topic. Abortion and the Middle East would be two examples here.
- Sometimes I have good inside information on a topic, but I cannot reveal it, not even without attribution. And I don’t want to write something stupider than my best understanding of the topic.
- I just don’t feel like it.
- On a few topics I feel it is Alex’s province.

I don’t have an Alex. Instead I have decided on some forms of triage that are simply ‘I do not have the time to look into this and I will let it be someone else’s department.’

Otherwise yes, all of these are highly relevant.

Insider information is tough, and I am very careful about not revealing things I am not supposed to reveal, but this rarely outright stops me. If nothing else, you can usually get net smarter via negativa, where you silently avoid saying false things, including by using careful qualifiers on statements.

One big thing perhaps missing from Tyler’s list is that I avoid certain topics where my statements would potentially interfere with my ability to productively discuss other topics. If you are going to make enemies, or give people reasons to attack you or dismiss you, only do that on purpose. One could also file this under making you and others stupider. Similarly, there are things that I need to not think about – I try to avoid thinking about trading for this reason.

A minor thing is that I’d love to be able to talk more about gaming, and other topics dear to my heart, but doing so consistently drives people away permanently. So it’s just not worth it. If the extra posts simply had no impact, I’d totally do it, but as is I’d be better off writing the post and then not hitting publish. Sad. Whereas Tyler has made it very clear he’s going to post things most readers don’t care about, when he feels like doing so, and that’s part of the price of admission.

If you want to write or think about the future, maybe don’t study the humanities?

Startup Archive: Palmer Luckey explains why science fiction is a great place to look for ideas

“One of the things that I’ve realized in my career is that nothing I ever come up with will be new. I’ve literally never come up with an idea that a science fiction author has not come up with before.”

Dr. Julie Gurner: Funny how valuable those English majors and writers truly are, given how much liberal arts has been put down. Why philosophy, creativity and hard tech skills make such fantastic bedfellows. Span of vision wins.

Orthonormalist: Heinlein was an aeronautical engineer.

Asimov was a biochemistry professor.

Arthur Clarke was a radio operator who got a physics degree.

Ray Bradbury never went to college (but did go straight to being a writer)

I quote this because ‘study the humanities’ is a natural thing to say to someone looking to write or think about the future, and yet I agree that when I look at the list of people whose thinking about the future has influenced me, I notice essentially none of them have studied the humanities.

Alan Jacobs has a very different writing pattern. Rather than write every day, he waits until the words are ready, so he’ll work every day but often that means outlines or index card reordering or just sitting in his chair and thinking, even for weeks at a time. This is alien to me. If I need to figure out what to write, I start writing, see what it looks like, maybe delete it and try again, maybe procrastinate by working on a different thing.

Neal Stephenson explains that for him writing is Deep Work, requiring long blocks of reliably uninterrupted time bunched together, that writing novels is the best thing he does, and that this is why he doesn’t go to conferences or answer your email. Fair enough.

I’ve found ways to not be like that. I deal with context shifts and interruptions all the time and it is fine, indeed when dealing with difficult tasks I almost require them. That’s a lot of how I can be so productive. But the one time I wrote something plausibly like a book, the Immoral Mazes sequence, I did spend a week alone in my apartment doing nothing else. And I haven’t figured out how to write a novel, or almost any fiction at all.

Also, it’s rather sad if it is true that Neal Stephenson only gets a middle class life out of writing so many fantastic and popular books, and can’t afford anyone to answer his email. That makes writing seem like an even rougher business than I expected. Although soon AI can perhaps do it for him?

Patrick McKenzie highlights an insight from Alex Danco, which is that most of the effective audience of any successful post is not people who read the post, but people who are told about the post by someone who did read it. Patrick notes this likely also applies to formal writing, and I’d note it seems to definitely apply to most books.

Relatedly, I have in the past postulated a virtual four-level model of flow of ideas, where each level can understand the level above it, and then rephrase and present it to the level below.

So if you are Level 1, either in general or in an area, you can formulate fully new ideas. If you are Level 2, you can understand what the Level 1s say, look for consensus or combine what they say, riff on it and then communicate that to those who are up to Level 3, who can then fully communicate to the public, who typically end up around Level 4.

Then the public will communicate a simplified and garbled version to each other.

You can be Level 1 and then try to ‘put on your Level 2 or 3 hat’ to write a dumber, simpler version to a broader audience, but it is very hard to simultaneously do that and also communicate the actual concepts to other Level 1s.

These all then interact, but if you go viral with anything longer than a Tweet, you are inevitably going to end up with a message primarily communicated via (in context) Level 3 and Level 4 people communicating to other Level 3-4 people.

At that point, and any time you go truly viral or your communication is ‘successful,’ you run into the You Get About Five Words problem.

My response to this means that at this point I essentially never go all that directly viral. I have a very narrow range of views, where even the top posts never do 100% better than typical posts, and the least popular posts – which are when I talk about AI alignment or policy on their own – will do at worst 30% less than typical.

The way the ideas go viral is someone quotes, runs with or repackages them. A lot of the impact comes from the right statement reaching the right person.

I presume that would work differently if I was working with mediums that work on virality, such as YouTube or TikTok, but my content seems like a poor fit for them, and when I do somewhat ‘go viral’ in such places it is rarely content I care about spreading. Perhaps I am making a mistake by not branching out. But on Twitter I still almost never go viral, as it seems my speciality is small TAM (total available market) Tweets.

Never have a character try to be funny, the character themselves should have no idea.

I think this is directionally correct but goes too far, for the same reasons that you, in your real life, will often try to be funny, and sometimes it will work. The trick is they have to be trying to be funny in a way that makes sense for the character, in context, for those around them, not trying to be funny to the viewer.

I notice that in general I almost never ‘try to be funny,’ not exactly. I simply say things because they would be funny, and say them in the funniest way possible, because why not. A lot of my favorite people seem to act similarly.

Lydia Davis offers her top ten recommendations for good (fiction?) writing: Keep notes, including sentences out of context, work from your own interest, be mostly self-taught, read and revise the notes constantly, grow stories or develop poems out of those notes, learn techniques from great works and read the best writers across time.

Orson Scott Card explains that you don’t exhaust the reader by having too much tension in your book, you exhaust them by having long stretches without tension. The tension keeps us reading.

Dwarkesh Patel: Unreasonably effective writing advice:

“What are you trying to say here?

Okay, just write that.”

I’ve (separately) started doing this more.

I try to make sure that it’s very easy to find the central point, the thing I’m most trying to say, and hard to miss it.

Patrick McKenzie: Cosigned, and surprisingly effective with good writers in addition to ones who more obviously need the prompting.

Writing an artifact attaches you to the structure of it while simultaneously subsuming you in the topic. The second is really good for good work; the first, less so.

One thing that I tried, with very limited success, to get people to do is to be less attached to words on a page. Writing an essay? Write two very different takes on it; different diction, different voice, maybe even different argument. Then pick the one which speaks to you.

Edit *that* rather than trying to line edit the loser towards greatness.

There is something which people learn, partially from school and partially from work experience, which causes them to write as if they were charged for every word which goes down on the page.

Words are free! They belong in a vast mindscape! You can claw more from the aether!

I think people *might* operationalize better habits after LLMs train them that throwing away a paragraph is basically costless.

Jason Cohen: Yeah this works all the time.

Also when getting someone to explain their product, company, customer, why to work for them, etc..

So funny how it jogs them out of their own way!

BasedBigTech: An excellent Group PM reviewed my doc with me. He said “what does this mean?” and I told him.

“Then why didn’t you write that?”

Kevin Kelly: At Whole Earth Review people would send us book reviews with a cover letter explaining why we should run their book review. We’d usually toss the review and print their much shorter cover letter as the review which was much clearer and succinct.

Daniel Eth: It’s crazy how well just straight up asking people that gets them to say the thing they should write down

Why does it work?

The answer is that writing is doing multiple tasks.

Only one of them is ‘tell you what all this means.’

You have to do some combination of things such as justify that, explain it, motivate it, provide details, teach your methods and reasoning, perform reporting, be entertaining and so on.

Also, how did you know what you meant to say until you wrote the damn thing?

You still usually should find a way to loudly say what it all means, somewhere in there.

But this creates the opportunity for the hack.

If I hand you a ten-page paper, and you ask ‘what are you trying to say?’ then I have entered into evidence that I have Done the Work and Written the Report.

Now I can skip the justifications, details and context, and Say The Thing.

The point of a reference post is sometimes to give people the opportunity to learn.

The point of a reference post can also be to exist and then not be clicked on. It varies.

This is closely related to the phenomenon where often a movie or show will have a scene that logically and structurally has to exist, but which you wish you didn’t have to actually watch. In theory you could hold up a card that said ‘Scene in which Alice goes to the bank, acts nervous and gets the money’ or whatever.

Probably they should do a graceful version of something like that more often, or even interactive versions where you can easily expand or condense various scenes. There’s something there.

Similarly, with blog posts (or books) there are passages that are written or quoted knowing many or most people will skip them, but that have to be there.

Aella teaches us how to make readers pay up to get behind a Paywall. Explain why you are the One Who Knows some valuable thing, whereas others including your dear reader are bad at this and need your help. Then actually provide value both outside and inside of the paywall, ideally because the early free steps are useful even without the payoff you’re selling.

I am thankful that I can write without worrying about maximizing such things, while I also recognize that I’m giving up a lot of audience share by not optimizing for doing similar things on the non-paywall side.