The Last of Us episode 5 recap: There’s something in the air

New episodes of season 2 of The Last of Us are premiering on HBO every Sunday night, and Ars’ Kyle Orland (who’s played the games) and Andrew Cunningham (who hasn’t) will be talking about them here every Monday morning. While these recaps don’t delve into every single plot point of the episode, there are obviously heavy spoilers contained within, so go watch the episode first if you want to go in fresh.

Andrew: We’re five episodes into this season of The Last of Us, and most of the infected we’ve seen have still been of the “mindless, screeching horde” variety. But in the first episode of the season, we saw Ellie encounter a single “smart” infected person, a creature that retained some sense of strategy and a self-preservation instinct. It implied that the show’s monsters were not done evolving and that the seemingly stable fragments of civilization that had managed to take root were founded on a whole bunch of incorrect assumptions about what these monsters were and what they could do.

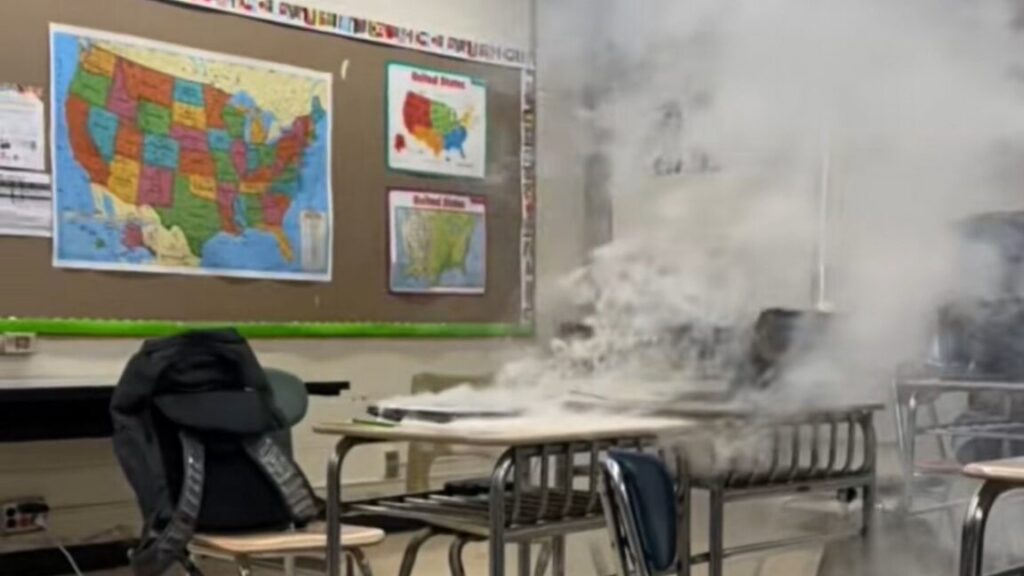

Amidst all the human-created drama, the changing nature of the Mushroom Zombie Apocalypse is the backdrop of this week’s entire episode, starting and ending with the revelation that a 2003-vintage cordyceps nest has become a hotbed of airborne spores, ready to infect humans with no biting required.

This is news to me, as a Non-Game Player! But Kyle, I’m assuming this is another shoe that you knew the series was going to drop.

Kyle: Actually, no. I suppose it’s possible I’m forgetting something, but I think the “some infected are actually pretty smart now” storyline is completely new to the show. It’s just one of myriad ways the show has diverged enough from the games at this point that I legitimately don’t know where it’s going to go or how it’s going to get there at any given moment, which is equal parts fun and frustrating.

I will say that the “smart zombies” made for my first real “How are Ellie and Dina going to get out of this one?” moment, as Dina’s improvised cage was being actively torn apart by a smart and strong infected. But then, lo and behold, here came Deus Ex Jesse to save things with a timely re-entrance into the storyline proper. You had to know we hadn’t seen the last of him, right?

Ellie is good at plenty of things, but not so good at lying low. Credit: HBO

Andrew: As with last week’s subway chase, I’m coming to expect that any time Ellie and Dina seem to be truly cornered, some other entity is going to swoop down and “save” them at the last minute. This week it was an actual ally instead of another enemy that just happened to take out the people chasing Ellie and Dina. But it’s the same basic narrative fake-out.

I assume their luck will run out at some point, but I also suspect that if it comes, that point will be a bit closer to the season finale.

Kyle: Without spoiling anything from the games, I will say you can expect both Ellie and Dina to experience their fair share of lucky and unlucky moments in the episodes to come.

Speaking of unlucky moments, while our favorite duo is hiding in the park we get to see how the local cultists treat captured WLF members, and it is extremely not pretty. I’m repeating myself a bit from last week, but the lingering on these moments of torture feels somehow more gratuitous in an HBO show, even when compared to similarly gory scenes in the games.

Andrew: Well we had just heard these cultists compared to “Amish people” not long before, and we already know they don’t have tanks or machine guns or any of the other Standard Issue The Last of Us Paramilitary Goon gear that most other people have, so I guess you’ve got to do something to make sure the audience can actually take the cultists seriously as a threat. But yeah, if you’re squeamish about blood-and-guts stuff, this one’s hard to watch.

I do find myself becoming more of a fan of Dina and Ellie’s relationship, or at least of Dina as a character. Sure, her tragic backstory’s a bit trite (she defuses this criticism by pointing out in advance that it is trite), but she’s smart, she can handle herself, and she is a good counterweight to Ellie’s rush-in-shooting impulses. They are still, as Dina points out, doing something stupid and reckless. But I am at least rooting for them to make it out alive!

Kyle: Personality-wise, the Dina/Ellie pairing has just as many charms as the Joel/Ellie pairing from last season. But while I always felt like Joel and Ellie had a clear motivation and end goal driving them forward, the thirst for revenge pushing Dina and Ellie deeper into Seattle starts to feel less and less relevant as time goes on.

The show seems to realize this, too, stopping multiple times since Joel’s death to kind of interrogate whether tracking down these killers is worth it when the alternative is just going back to Jackson and prepping for a coming baby. It’s like the writers are trying to convince themselves even as they’re trying (and somewhat failing, in my opinion) to convince the audience of their just and worthy cause.

Andrew: Yeah, I did notice the points where Our Heroes paused to ask “are we sure we want to be doing this?” And obviously, they are going to keep doing this, because we have spent all this time setting up all these different warring factions and we’re going to use them, dang it!! But this has never been a thing that was going to bring Joel back, and it only seems like it can end in misery, especially because I assume Jesse’s plot armor is not as thick as Ellie or Dina’s.

Kyle: Personally, I think an “Ellie and Dina give up on revenge and prepare to start a post-apocalyptic family (while holding off zombies)” storyline would have been a brave and interesting direction for a TV show. It would have been even braver for the game, although very difficult for a franchise where the main verbs are “shoot” and “stab.”

Andrew: Yeah if The Last of Us Part II had been a city-building simulator where you swap back and forth between managing the economy of a large town and building defenses to keep out the hordes, fans of the first game might have been put off. But as an Adventure of Link fan I say: bring on the sequels with few-if-any gameplay similarities to their predecessors!

The cordyceps threat keeps evolving. Credit: HBO

Kyle: “We killed Joel” team member Nora definitely would have preferred if Ellie and Dina were playing that more domestic kind of game. As it stands, Ellie ends up pursuing her toward a miserable-looking death in a cordyceps-infested basement.

The chase scene leading up to this mirrors a very similar one in the game in a lot of ways. But while I found it easy to suspend my disbelief for the (very scripted) chase on the PlayStation, watching it in a TV show made me throw up my hands and say “come on, these heavily armed soldiers can’t stop a little girl that’s making this much ruckus?”

Andrew: Yeah, Jesse can pop half a dozen “smart” zombies in half a dozen shots, but when it’s a girl with a giant backpack running down an open hallway, everyone suddenly has Star Wars Stormtrooper aim. The visuals of the cordyceps den, with the fungified guys breathing out giant clouds of toxic spores, are effective in their unsettling-ness, at least!

This episode’s other revelation is that what Joel did to the Fireflies in the hospital at the end of last season is apparently not news to Ellie, when she hears it from Nora in the episode’s final moments. It could be that Ellie, Noted Liar, is lying about knowing this. But Ellie is also totally incapable of controlling her emotions, and I’ve got to think that if she had been surprised by this, we would have been able to tell.

Kyle: Yeah, saying too much about what Ellie knows and when would be risking some major spoilers. For now I’ll just say the way the show decided to mix things up by putting this detailed information in Nora’s desperate, spore-infested mouth kind of landed with a wet thud for me.

I was equally perplexed by the sudden jump cut from “Ellie torturing a prisoner” to “peaceful young Ellie flashback” at the end of the episode. Is the audience supposed to assume that this is what is going on inside Ellie’s head or something? Or is the narrative just shifting without a clutch?

Andrew: I took it to mean that we were about to get a timeline-breaking departure episode next week, one where we spend some time in flashback mode filling in what Ellie knows and why before we continue on with Abby Quest. But I guess we’ll see, won’t we!

Kyle: Oh, I’ve been waiting with bated breath for a bevy of flashbacks I knew were coming in some form or another. But the particular way they shifted to the flashback here, with mere seconds left in this particularly brutal episode, was baffling to me.

Andrew: I think you do it that way to get people hyped about the possibility of seeing Joel again next week. Unless it’s just a cruel tease! But it’s probably not, right? Unless it is!

Kyle: Now I kind of hope the next episode just goes back to Ellie and Dina and doesn’t address the five seconds of flashback at all. Screw you, audience!
